Latest news about Bitcoin and all cryptocurrencies. Your daily crypto news habit.

NLP Core TutorialWelcome to my NLP core Tutorial

NLP Core TutorialWelcome to my NLP core Tutorial

Today, we’re going to be looking at NLTK.

Before moving forward I’ll highly recommend to go and take some familiarity with

- Regular Expression

- Python Complete Tutorial

- Numpy Tutorial

- Pandas Beginner Tutorial

- Python Data Science Handbook

What you’ll learn

Kaggle Script:

- Installing NLTK in Python

- Tokenizing Words and Sentences

- How tokenization works? — Text

- Introduction to Stemming and Lemmatization

- Stemming using NLTK

- Lemmatization using NLTK

- Stop word removal using NLTK

- Parts Of Speech Tagging

- POS Tag Meanings

- Named Entity Recognition

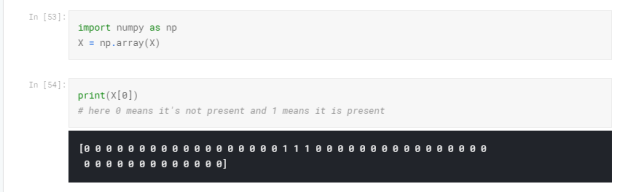

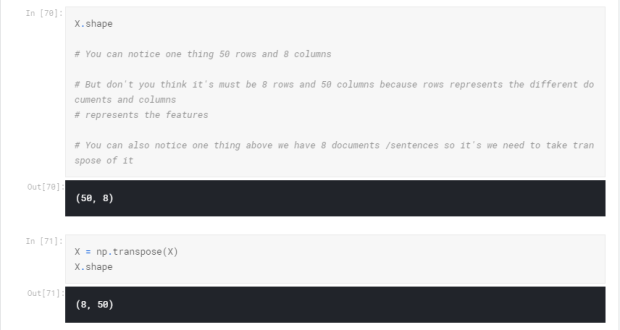

- Text Modelling using Bag of Words Model

- Text Modelling using TF-IDF Model

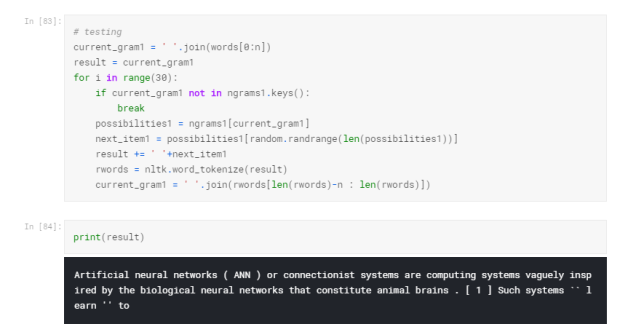

- Understanding the N-Gram Model

- Understanding Latent Semantic Analysis

What is NLP?

Natural language processing (NLP) is an area of computer science and artificial intelligence concerned with the interactions between computers and human (natural) languages, in particular how to program computers to process and analyze large amounts of natural language data. Refer Wikipedia

Installing NLTK in PythonNatural Langauge ToolKit

NLTK is a leading platform for building Python programs to work with human language data. It provides easy-to-use interfaces to over 50 corpora and lexical resources such as WordNet, along with a suite of text processing libraries for classification, tokenization, stemming, tagging, parsing, and semantic reasoning, wrappers for industrial-strength NLP libraries.

Installing NLTK

NLTK requires Python versions 2.7, 3.4, 3.5, or 3.6

Mac/Unix

Install NLTK: run sudo pip install -U nltk

Install Numpy (optional): run sudo pip install -U numpy

Test installation: run python then type import nltk

For older versions of Python, it might be necessary to install setuptools

(see http://pypi.python.org/pypi/setuptools) and to install pip (sudo easy_install pip).

Windows

These instructions assume that you do not already have Python installed on your machine.

32-bit binary installation

- Install Python 3.6: http://www.python.org/downloads/ (avoid the 64-bit versions)

- Install Numpy (optional): http://sourceforge.net/projects/numpy/files/NumPy/ (the version that specifies python3.5)

- Install NLTK: http://pypi.python.org/pypi/nltk

- Test installation: Start>Python35, then type import nltk

Installing Third-Party Software

Please see: https://github.com/nltk/nltk/wiki/Installing-Third-Party-Software

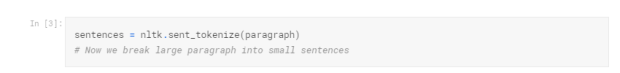

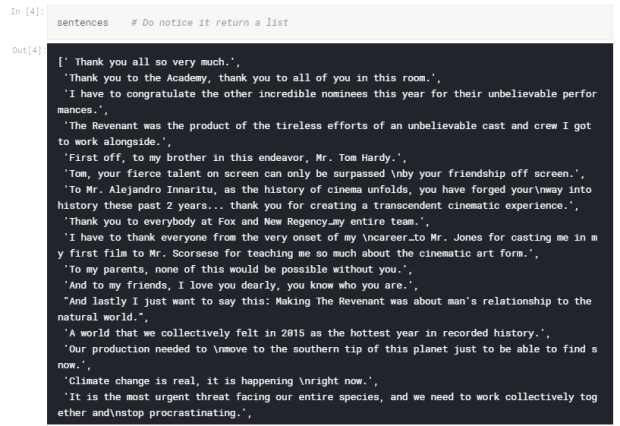

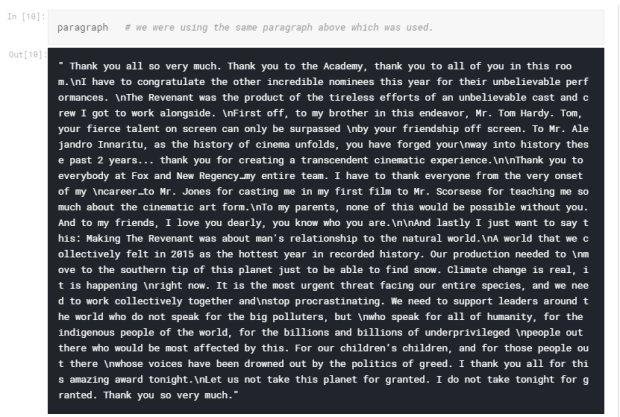

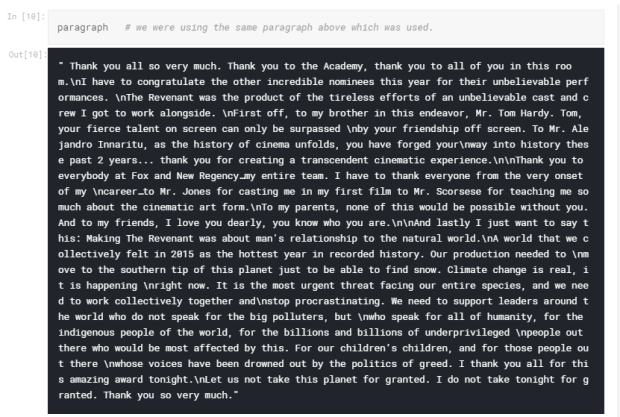

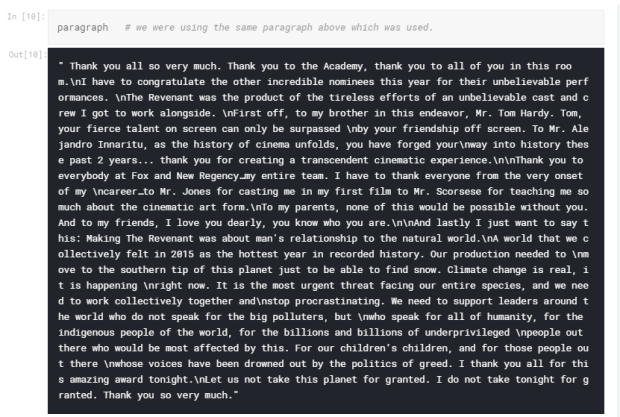

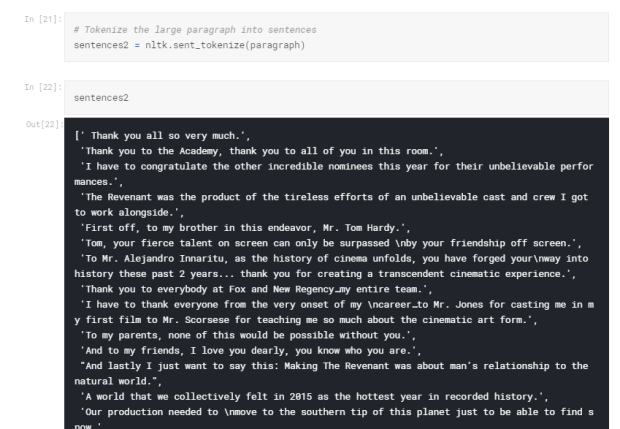

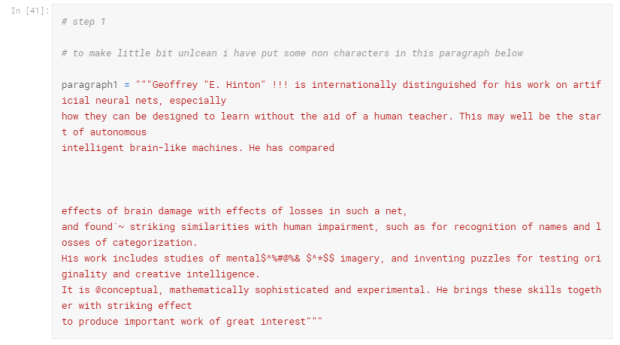

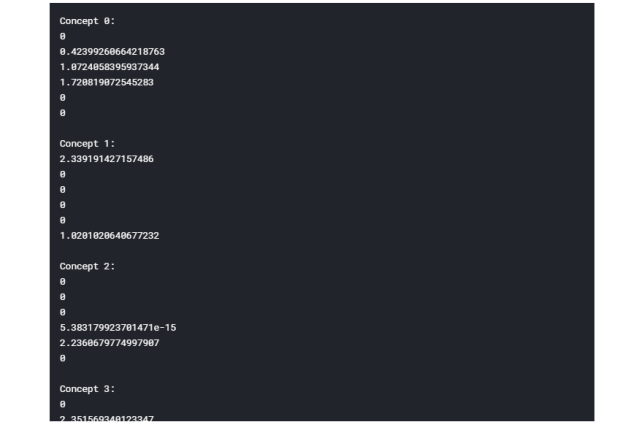

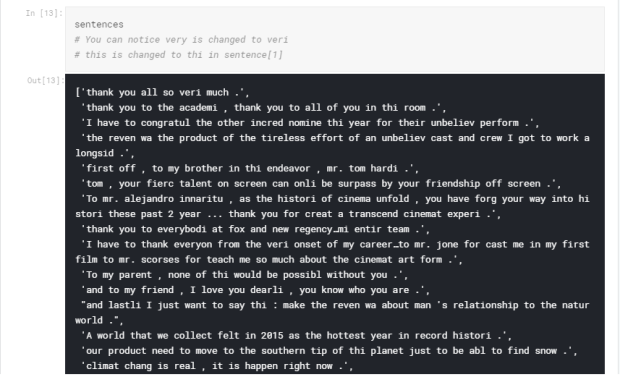

This is a Leonardo DiCaprio Speech taken from here

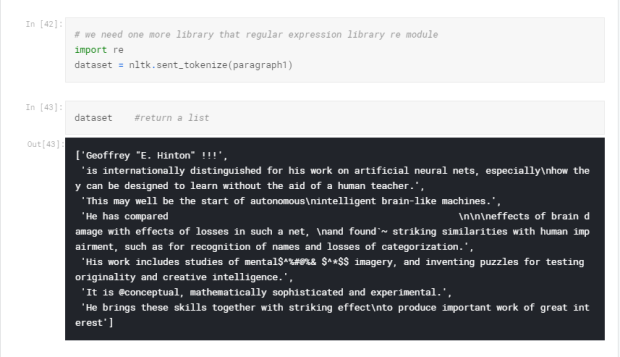

Now, we are going to use to NLTK function which is used to break down this large paragraph into a small sentence and another function is for calculating different words in the sentence.

- sent_tokenize()

- word_tokenize()

Tokenization is a process in which a sequence is broken down into pieces such as words, sentences, phrases etc. Just above, you have learned how to tokenize a paragraph into sentences or words. The way these tokenizations work are explained as follows.

- Word Tokenization:

In this process, a sequence like a sentence or a paragraph is broken down into words. These tokenizations are carried out based on the delimiter “space” (” “). Say, we have a sentence, “the sky is blue”. Then here the sentence consists of 4 words with spaces between them. Word tokenization tracks these spaces and returns the list of words in the sentence. [“the”,”sky”,”is”,”blue”]

- Sentence Tokenization:

In this process instead of tokenizing a paragraph based on “space”, we tokenize it based on “.” and “,”. Therefore, we get all the different sentences consisting of the paragraph.

We can perform these tokenizations even without the use of nltk library. We can use the split() function for this as follows,

str = “I love NLP” words = str.split(” “) print(words)

Out: [“I”,”love”,”NLP”] For sentence tokenization just replace str.split(” “) with str.split(“.”) .

Introduction to Stemming and Lemmatization

So what are Stemming and Lemmatization?

So Let’s get started !!

Corpus ( means a collection of written texts, especially the entire works of a particular author or a body of writing on a particular subject )

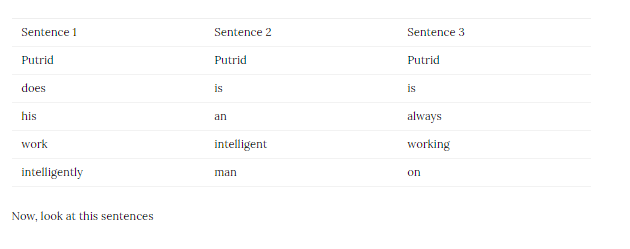

Sentences –

” Putrid does his work intelligently ”

” Putrid is an intelligent man ”

” Putrid is always working on “

What we have to do is to generate words as a feature from sentences and for that, we need stem the different words

I will first tokenize each of these sentences

You can notice sentence 1 and sentence 2 have one word common that is ” intelligently ” and “intelligent”, but they have a different form.

Similarly, the case happened in sentence1 and sentence3 have one word common that is “work”.

So,

Stemming is the process of reducing inflected (or sometimes derived) words to their word stem, base or root form — generally a written word form.

intelligence — — — -|

intelligent — — — — -| — — — — intelligen

intelligently — — — -|

All these words are reduced to intermediate root form which is called ” intelligen “

So we are gonna convert these words with intelligen for example ” Putrid does his work intelligen “

Problem with Stemming

Produced intermediate representation of the word may not have any meaning. For

Example — intelligen

Solution: Lemmatization

Lemmatization is same as stemming but a representation/root form has a meaning

intelligence — — — -|

intelligent — — — — -| — — — — intelligent

intelligently — — — -|

All these words are reduced to intermediate root form which is called ” intelligent “

Difference between Stemming and Lemmatization

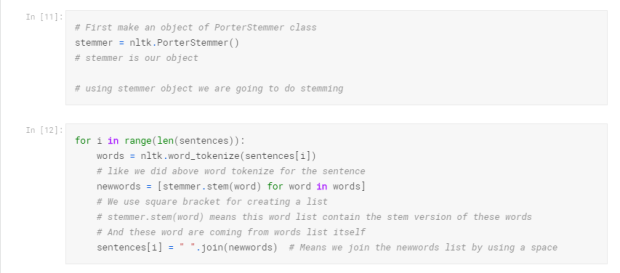

Stemming using NLTK

Stemming using NLTK

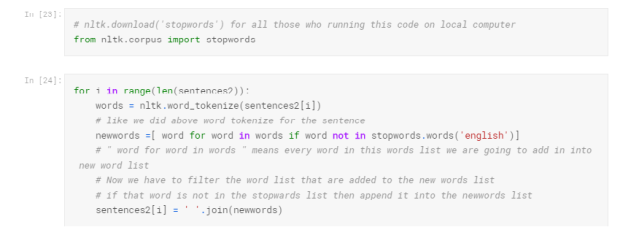

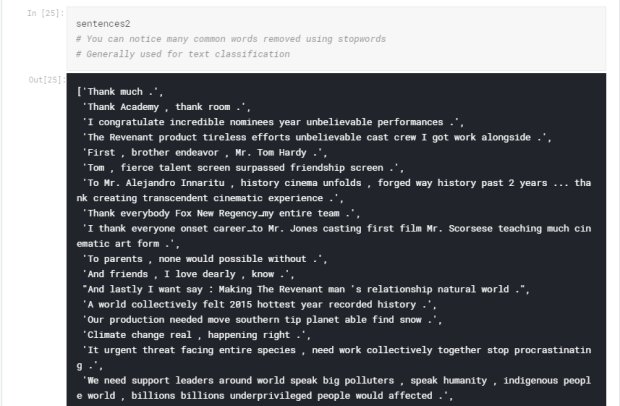

Now, look at the paragraph you can see there are some words that are so common like — to, of, in etc.

In computer search engines, a stop word is a commonly used word (such as “the”) that a search engine has been programmed to ignore, both when indexing entries for searching and when retrieving them as the result of a search query.

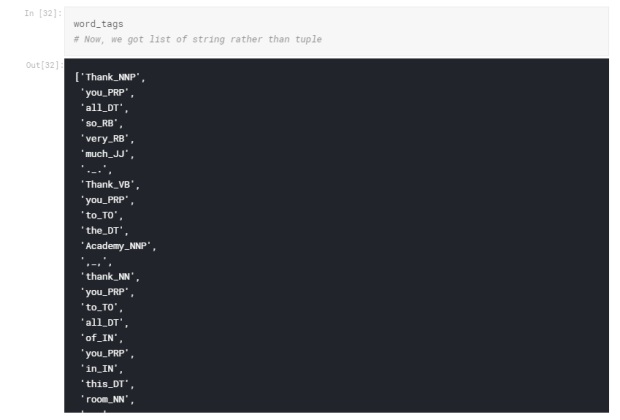

We will be going to learn how you can find out the different parts of speech associated with the word table or word.

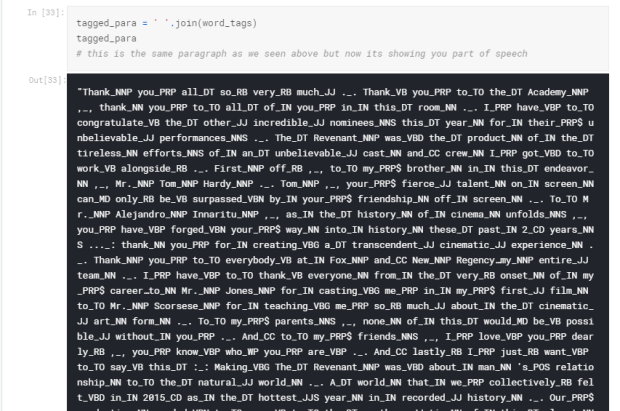

You can see the above paragraph it contains a lot of words. And each of the different words has a specific part of speech because some words are nouns, verbs, adjectives etc.

You can see the output somewhere thank is a proper noun and somewhere it is acting as the verb.

So we can easily get part of speech, but there is one problem that is output we get is a tuple

And we really can’t use them for formulating models so what we are going to do now is we will generate a whole new paragraph and there each of the words will be appended with their corresponding parts of speech.

Here are the meanings of the Parts-Of-Speech tags used in NLTK

CC — Coordinating conjunction

CD — Cardinal number

DT — Determiner

EX — Existential there

FW — Foreign word

IN — Preposition or subordinating conjunction

JJ — Adjective

JJR — Adjective, comparative

JJS — Adjective, superlative

LS — List item marker

MD — Modal

NN — Noun, singular or mass

NNS — Noun, plural

NNP — Proper noun, singular

NNPS — Proper noun, plural

PDT — Predeterminer

POS — Possessive ending

PRP — Personal pronoun

PRP$ — Possessive pronoun

RB — Adverb

RBR — Adverb, comparative

RBS — Adverb, superlative

RP — Particle

SYM — Symbol

TO — to

UH — Interjection

VB — Verb, base form

VBD — Verb, past tense

VBG — Verb, gerund or present participle

VBN — Verb, past participle

VBP — Verb, non-3rd person singular present

VBZ — Verb, 3rd person singular present

WDT — Wh-determiner

WP — Wh-pronoun

WP$ — Possessive wh-pronoun

WRB — Wh-adverb

Named Entity Recognition

Now, we are going to extract the entities out of the sentences

sentence3 = "The Taj Mahal was built by Emperor Shah Jahan"

This is our sentence and out of this sentence we are going to find out different entities

Step 1: Break the sentence using word_tokenize() into words

Step 2: We need to do POS tagging because to do Named Entity Recognition you need POS tagging

Step 3: Use ne_chunk() function and pass the argument

# step 1words2 = nltk.word_tokenize(sentence3)

words2

['The', 'Taj', 'Mahal', 'was', 'built', 'by', 'Emperor', 'Shah', 'Jahan']

# step 2tagged_words2 = nltk.pos_tag(words2)

tagged_words2

[('The', 'DT'), ('Taj', 'NNP'), ('Mahal', 'NNP'), ('was', 'VBD'), ('built', 'VBN'), ('by', 'IN'), ('Emperor', 'NNP'), ('Shah', 'NNP'), ('Jahan', 'NNP')]# step 3named_entity = nltk.ne_chunk(tagged_words2)

named_entity.draw#to see the graph you can use named_entity.draw() in your local Machine and the below output occur

Output :

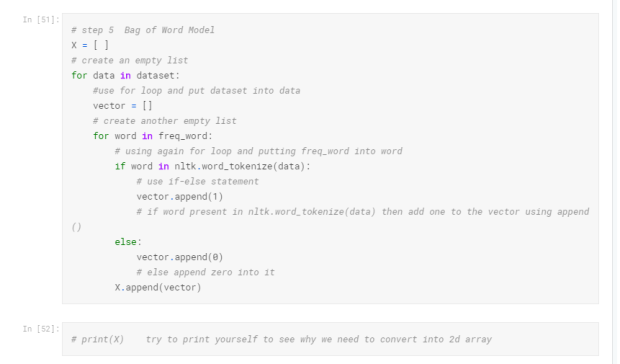

<bound method Tree.draw of Tree('S', [('The', 'DT'), Tree('ORGANIZATION', [('Taj', 'NNP'), ('Mahal', 'NNP')]), ('was', 'VBD'), ('built', 'VBN'), ('by', 'IN'), Tree('PERSON', [('Emperor', 'NNP'), ('Shah', 'NNP'), ('Jahan', 'NNP')])])>Text Modelling using Bag of Words Model

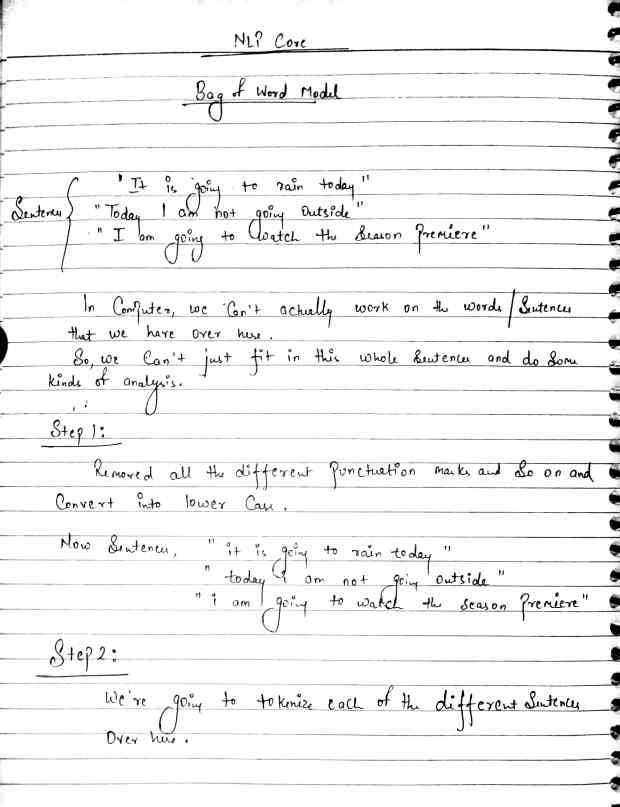

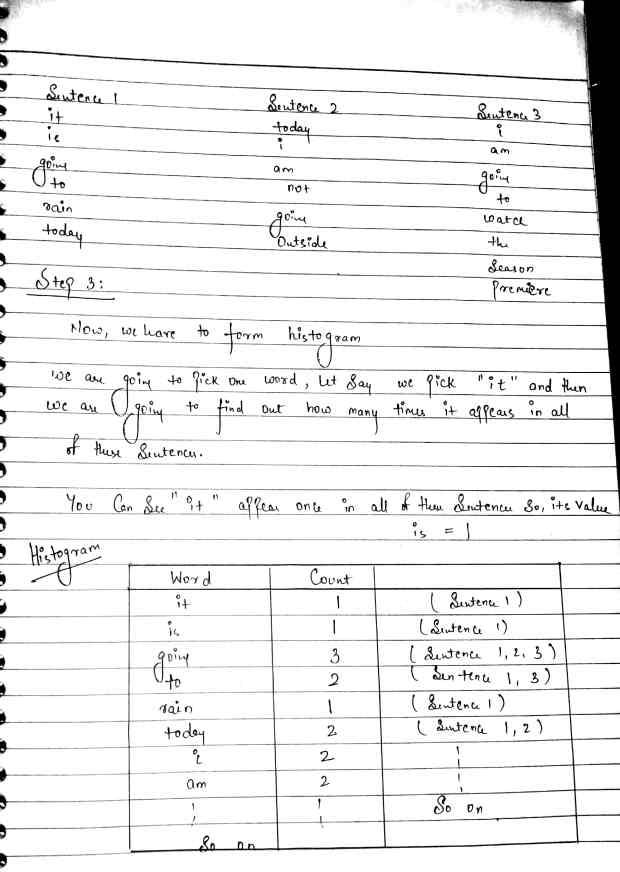

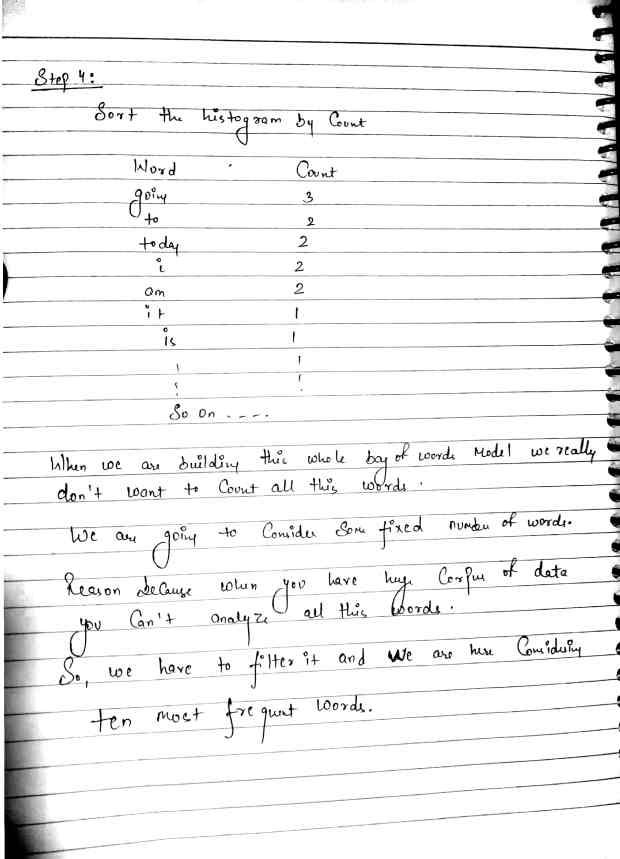

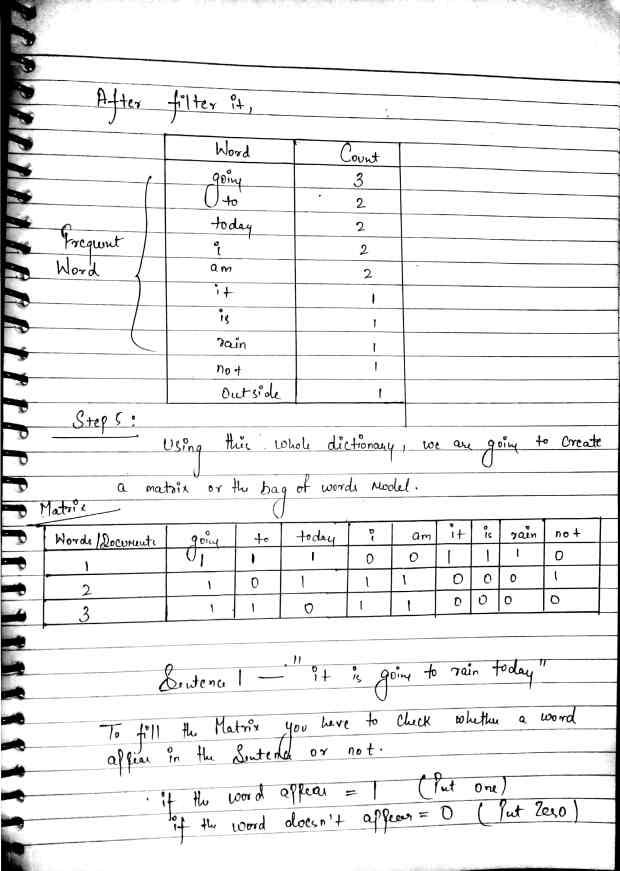

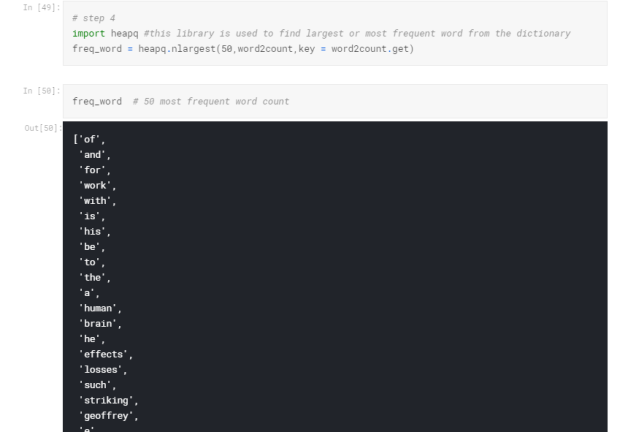

What is Bag of Words Model?

The bag-of-words model is a simplifying representation used in natural language processing and information retrieval (IR). Also known as the vector space model. In this model, a text (such as a sentence or a document) is represented as the bag (multiset) of its words, disregarding grammar and even word order but keeping multiplicity. The bag-of-words model has also been used for computer vision. Refer to Wikipedia

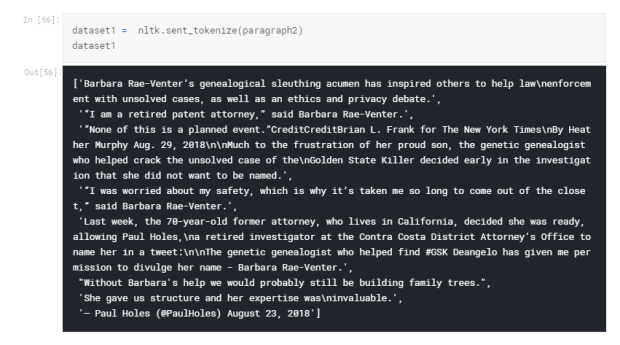

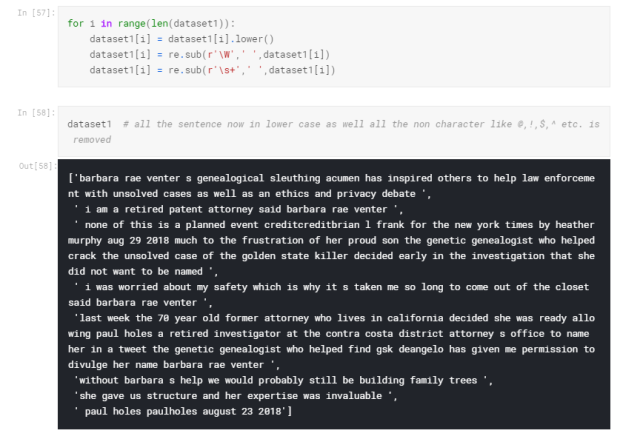

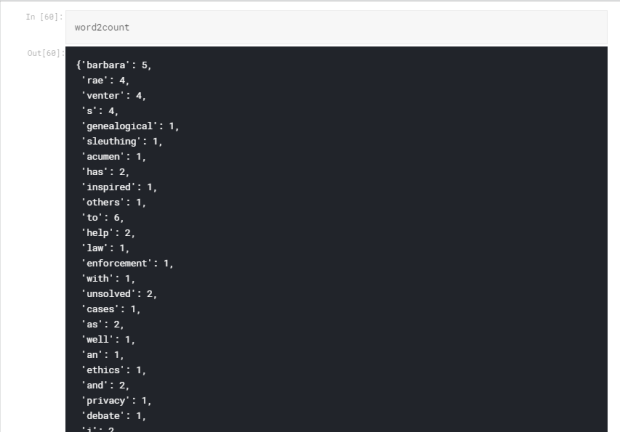

We are going to remove all the punctuation, errors as well as break down into sentences

Bag of Words Problem

- All the words have the same importance

- No semantic information preserved

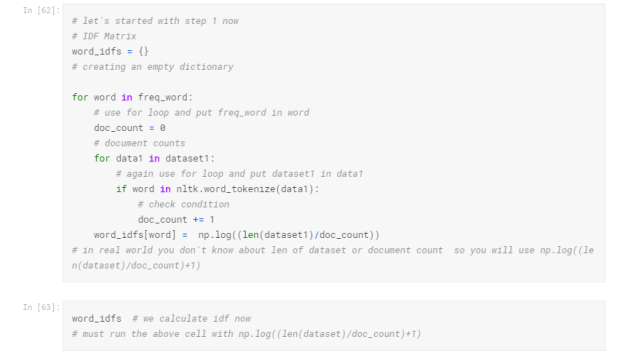

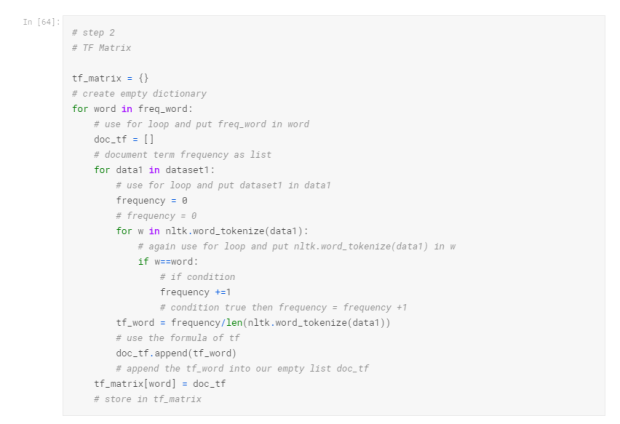

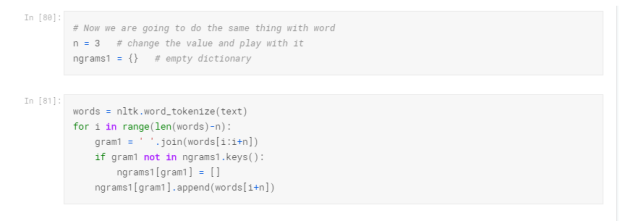

Solution : TF-IDF Model

TF = Term Frequency

IDF = Inverse Document Frequency

TF-IDF = TF*IDF

The formula of TF = Number of occurrences of a Word in a sentence / Number of the word in that document

Example: ” I know okay I don’t know “

TF for I = 2/6 TF for know = 2/6 etc.

The formula of IDF = log ( Number of documents / Number of documents containing word )

Make sure you take log base e

Example :

sentence 1 = ” to be or not to be”sentence 2 = ” I have to be “sentence 3 = ” you got to be “

IDF for to = log(3/3) log with base eIDF for be = log (3/3)IDF for or = log(3/1) etc.

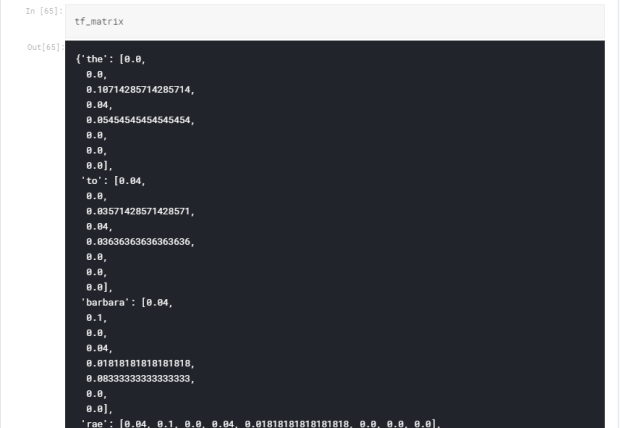

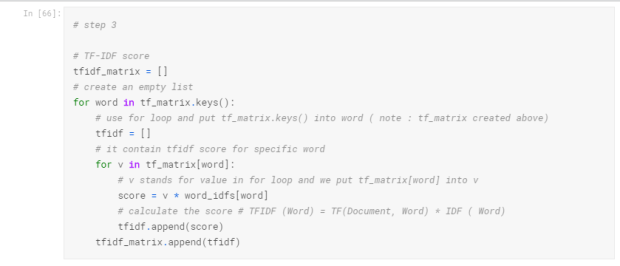

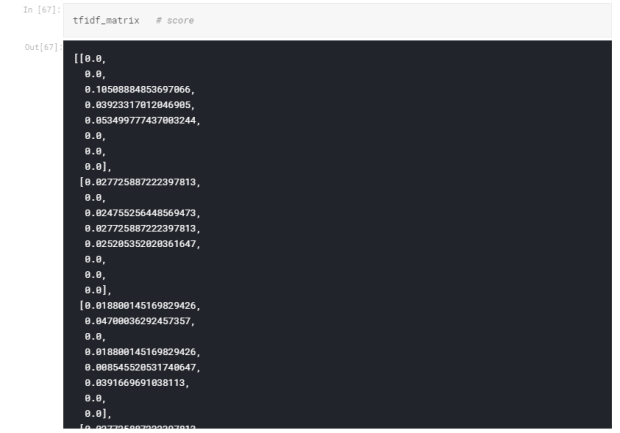

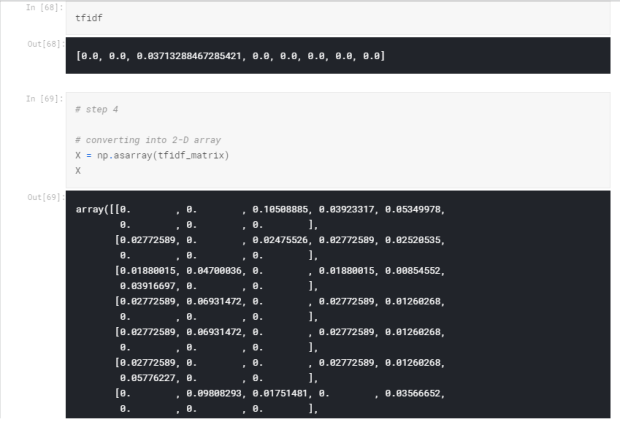

TFIDF (Word) = TF(Document, Word) * IDF ( Word)

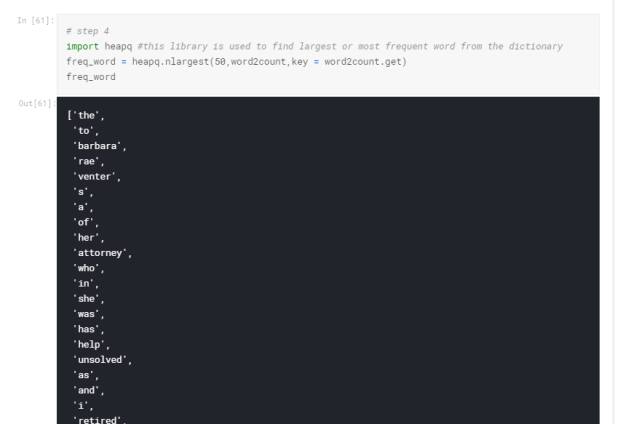

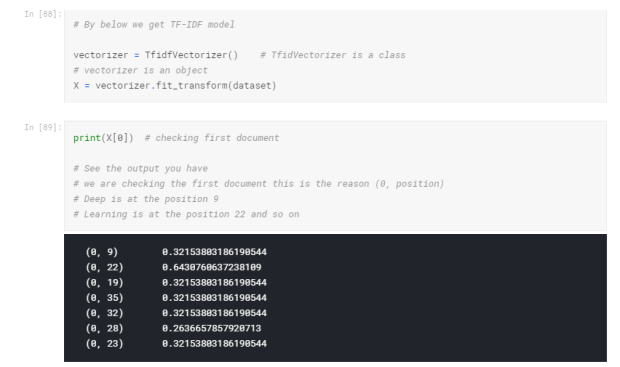

Just for you, I’ll be going to showing you manually how this output comes

I already provide you the formula above

The formula of TF = Number of occurrences of a Word in a sentence / Number of the word in that document

Example: ” I know okay I don’t know “

TF for I = 2/6 TF for know = 2/6 etc.

Let’s take the first sentence (taken from dataset1)

sentence = barbara rae venter s genealogical sleuthing acumen has inspired others to help law enforcement with unsolved cases as well as an ethics and privacy debate

Tf for ‘the’ = 0/8 = 0

now you wonder how 0.10714285714285714 value comes from

Actually, Tf value for ‘the’ is checked in all the 8 sentences which we got above

take a look at sentence 3

sentence 3 = none of this is a planned event creditcreditbrian l frank for the new york times by heather murphy aug 29 2018 much to the frustration of her proud son the genetic genealogist who helped crack the unsolved case of the golden state killer decided early in the investigation that she did not want to be named

Calculating Tf value for ‘the’ in sentence 3 which is = 6/56 = 0.1071428571

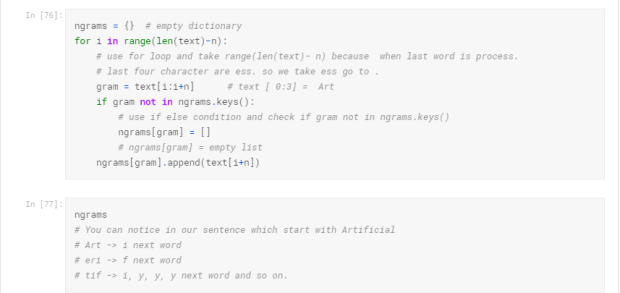

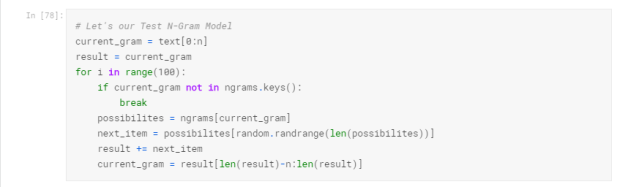

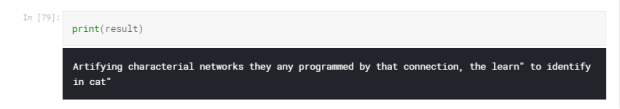

What is the N-Gram Model?

N-gram is a contiguous sequence of n items from a given sample of text or speech. The items can be phonemes, syllables, letters, words or base pairs according to the application. The n-grams typically are collected from a text or speech corpus. When the items are words, n-grams may also be called shingles.

Used in your phone for example — when you type any keyword in your phone and your cell phone automatically tells you did you mean this …

Example- When you type intell its show you Intelligence, Intelligent, Intell etc.

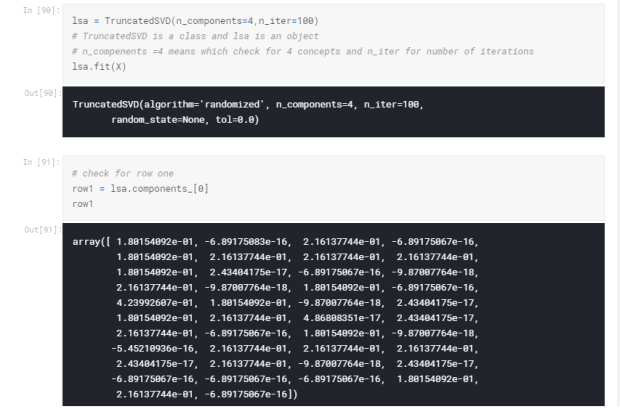

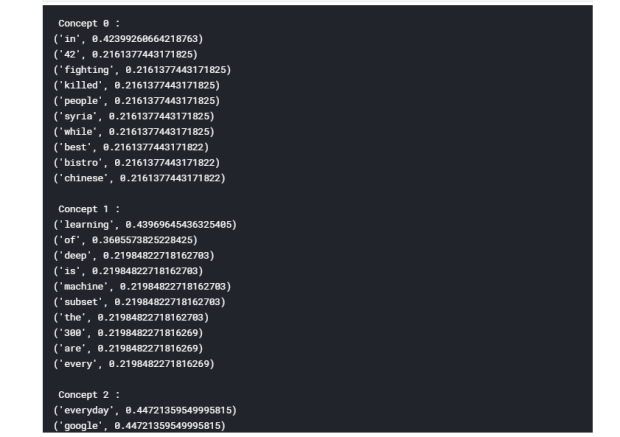

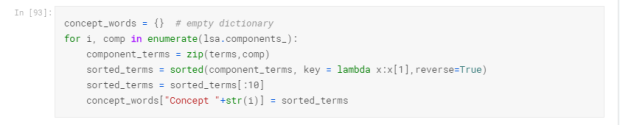

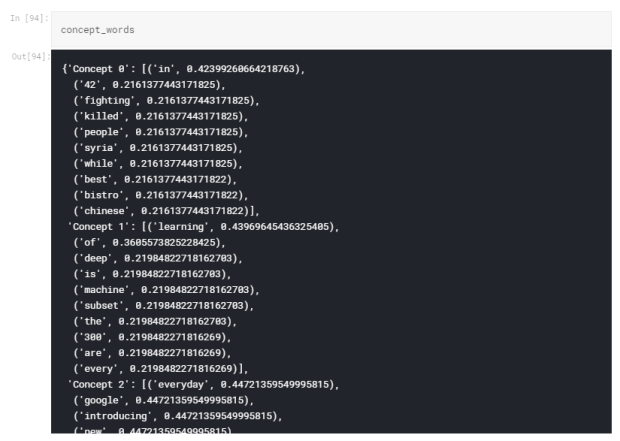

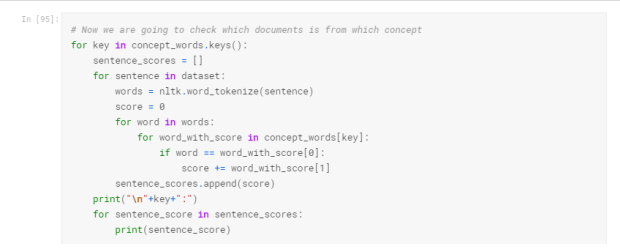

Latent Semantic Analysis is a technique of analyzing relationships between a set of documents and the term they contain by producing a set of concepts related to the documents and terms.

So that was all about the NLP core tutorial. IF you have any feedback on the article or questions on any of the above concepts, connect with me in the comment section below.

Please share it, like it, subscribe to it my themenyouwanttobe!Share Some Love too ❤

NLP CORE was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.

Disclaimer

The views and opinions expressed in this article are solely those of the authors and do not reflect the views of Bitcoin Insider. Every investment and trading move involves risk - this is especially true for cryptocurrencies given their volatility. We strongly advise our readers to conduct their own research when making a decision.