Latest news about Bitcoin and all cryptocurrencies. Your daily crypto news habit.

Did you know that Data Scientists spend 80% of their time cleaning data and the other 20% complaining about it?

Not to be confused with Sanitization (classified information) or Data scrubbing

This blog will present simplified explanations to some of today’s hottest topics in data science, including:

- What is Data Cleaning

- Why Data Cleaning is Required

- How to clean the data?

- Handle Missing Values

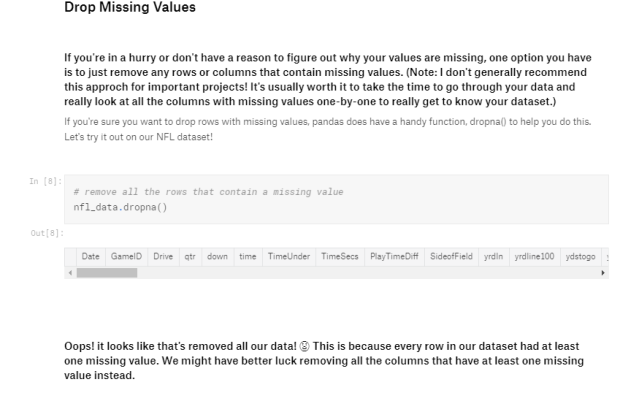

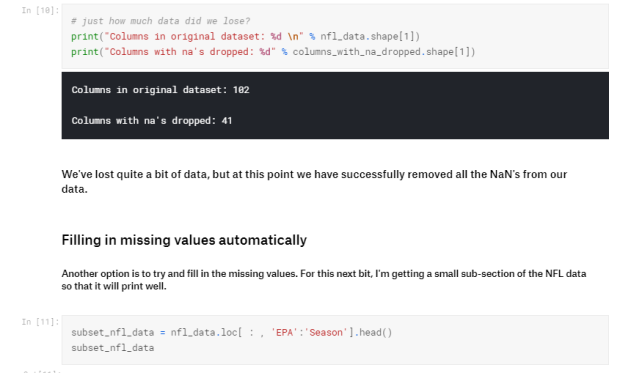

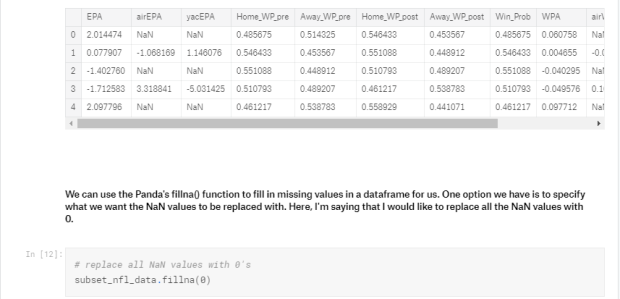

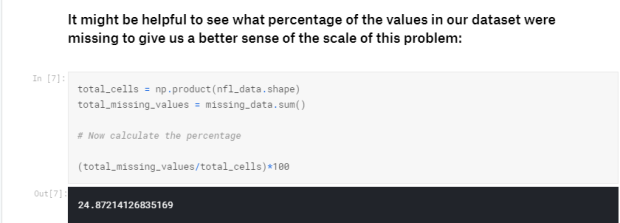

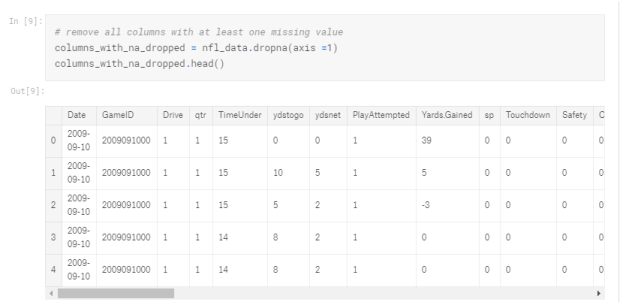

Take a first look at the dataSee how many missing data points we haveFigure out why the data is missingDrop missing valuesFilling in missing values

- Scaling and normalization

Get our environment set upScaling vs. Normalization: What’s the difference?Practice scalingPractice normalization

- Parsing dates

Get our environment set upCheck the data type of our date columnConvert our date columns to DateTimeSelect just the day of the month from our columnPlot the day of the month to check the date parsing

- Character encodings

Get our environment set upWhat are the encodings?Reading in files with encoding problemsSaving your files with UTF-8 encoding

- Inconsistent Data Entry

Get our environment set upDo some preliminary text pre-processingUse fuzzy matching to correct inconsistent data entry

What is Data Cleaning?

Data cleansing or data cleaning is the process of detecting and correcting (or removing) corrupt or inaccurate records from a record set, table, or database and refers to identifying incomplete, incorrect, inaccurate or irrelevant parts of the data and then replacing, modifying, or deleting the dirty or coarse data.[1] Data cleansing may be performed interactivelywith data wrangling tools, or as batch processing through scripting. Refer to learn more Wikipedia

Why Data Cleaning is Required?

Data cleansing is a valuable process that can help companies save time and increase their efficiency. Data cleansing software tools are used by various organisations to remove duplicate data, fix and amend badly-formatted, incorrect and amend incomplete data from marketing lists, databases and CRM’s.

Some Advantages are:-

- Improves the Efficiency of Customer Acquisition Activities

- Streamlines Business Practices

- Increases Revenue

- Increases Productivity

- Improves Decision Making Process ( Refer to this Article)

How to clean the data?

As you know, data cleaning is an important phase and require a good time to clean up the data. But, how to clean the data? How are data looks like? What are those ways if in our dataset we have missing values? What if in our data we have categorical missing values? What if our data is inconsistent? What if in our dataset dates are missing?

In this blog, I’ll try to answer and explain in detail about each and every case how to deal with unclean data and how to solve them.

Let’s get started!!Handling Missing Values

The first thing we’ll need to do is load in the libraries we’ll be using. I’ll be using a dataset of events that occurred in American Football games for demonstration.

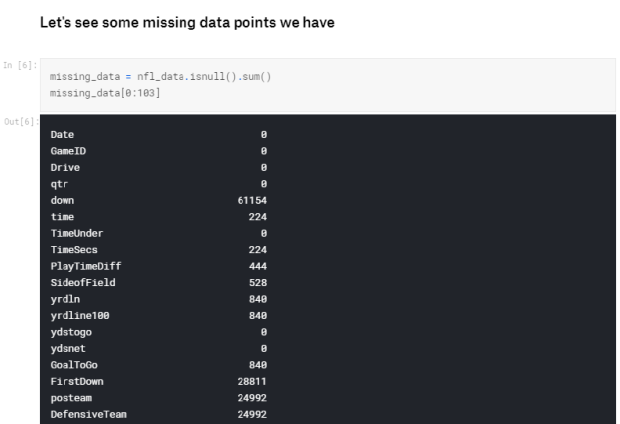

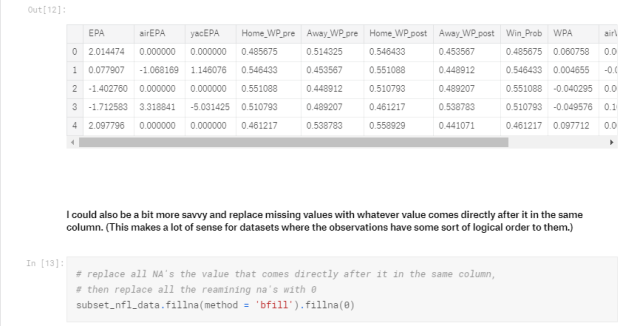

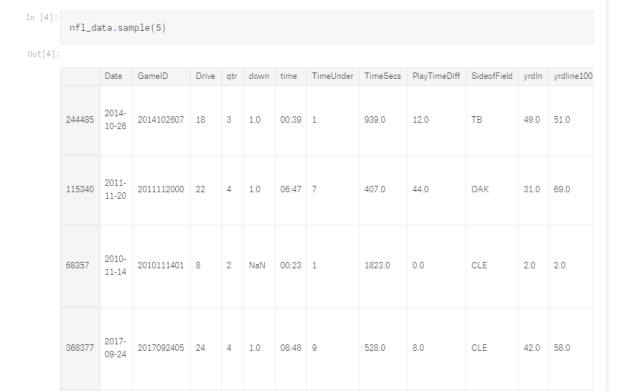

The first thing I do when I get a new dataset is to take a look at some of it. This lets me see that it all read in correctly and get an idea of what’s going on with the data. In this case, I’m looking to see if I see any missing values, which will be represented with NaN or None.

Step 1: Import the libraries and Dataset ( NFL DATASET)

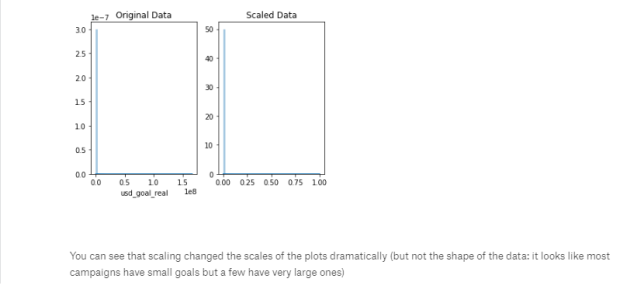

Scaling vs. Normalization: What’s the difference?

One of the reasons that it’s easy to get confused between scaling and normalization is because the terms are sometimes used interchangeably and, to make it even more confusing, they are very similar! In both cases, you’re transforming the values of numeric variables so that the transformed data points have specific helpful properties. The difference is that, in scaling, you’re changing the range of your data while in normalization you’re changing the shape of the distribution of your data. Let’s talk a little more in-depth about each of these options.

Scaling

This means that you’re transforming your data so that it fits within a specific scale, like 0–100 or 0–1. You want to scale data when you’re using methods based on measures of how far apart data points, like support vector machines, or SVM or k-nearest neighbors, or KNN. With these algorithms, a change of “1” in any numeric feature is given the same importance.

For example, you might be looking at the prices of some products in both Yen and US Dollars. One US Dollar is worth about 100 Yen, but if you don’t scale your prices methods like SVM or KNN will consider a difference in price of 1 Yen as important as a difference of 1 US Dollar! This clearly doesn’t fit with our intuitions of the world. With currency, you can convert between currencies. But what about if you’re looking at something like height and weight? It’s not entirely clear how many pounds should equal one inch (or how many kilograms should equal one meter).

By scaling your variables, you can help compare different variables on equal footing. To help solidify what scaling looks like, let’s look at a made-up example. (Don’t worry, we’ll work with real data in just a second, this is just to help illustrate my point.)

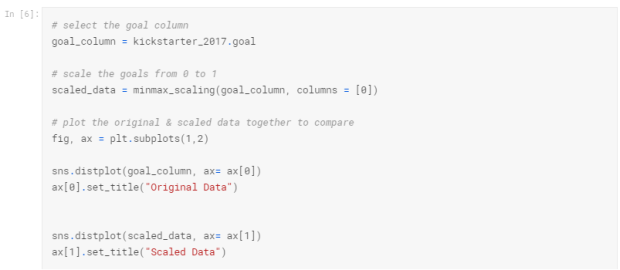

Step 2: Use Min-Max Scaling to Scaled Data

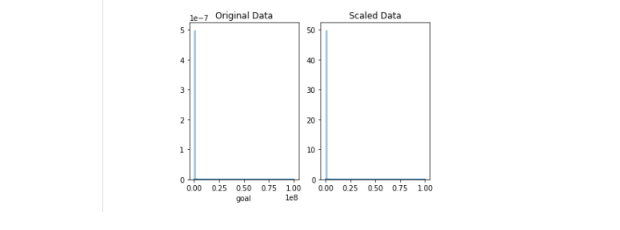

Normalization

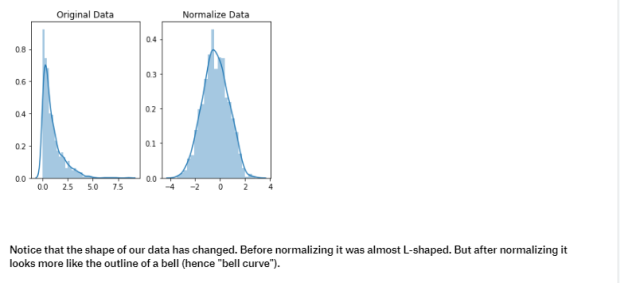

Scaling just changes the range of your data. Normalization is a more radical transformation. The point of normalization is to change your observations so that they can be described as a normal distribution.

Normal distribution: Also known as the “bell curve”, this is a specific statistical distribution where a roughly equal observations fall above and below the mean, the mean and the median are the same, and there are more observations closer to the mean. The normal distribution is also known as the Gaussian distribution.

In general, you’ll only want to normalize your data if you’re going to be using a machine learning or statistics technique that assumes your data is normally distributed. Some examples of these include t-tests, ANOVAs, linear regression, linear discriminant analysis (LDA) and Gaussian naive Bayes. (Pro tip: any method with “Gaussian” in the name probably assumes normality.)

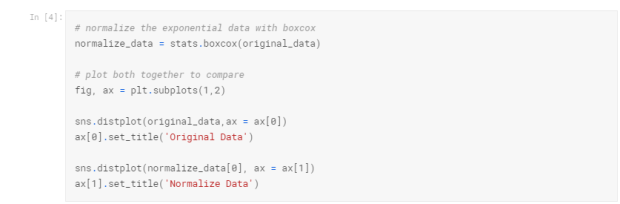

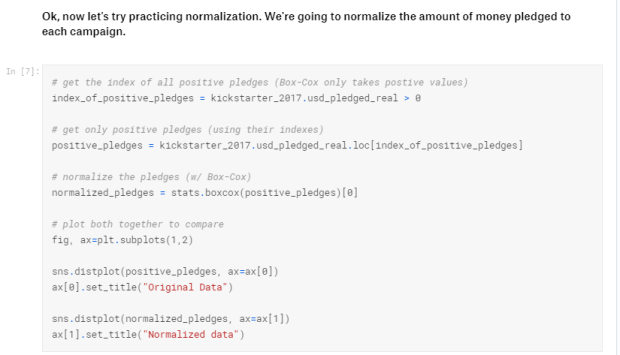

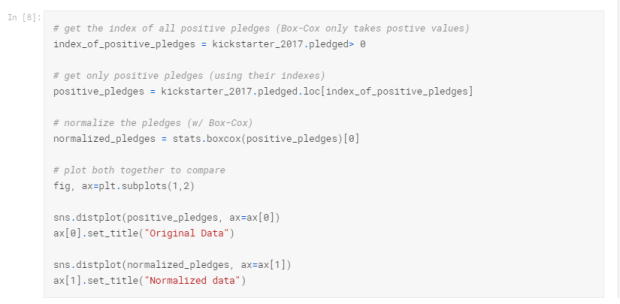

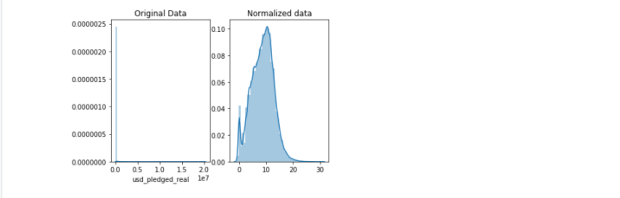

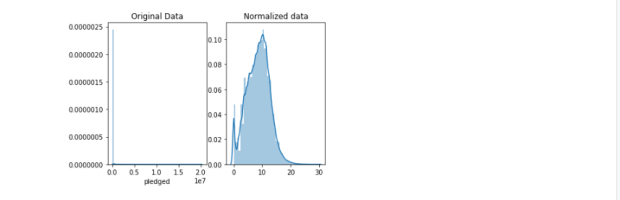

The method we’re using to normalize here is called the Box-Cox Transformation. Let’s take a quick peek at what normalizing some data looks like:

Step 3: Use Box-Cox Transformation for Normalization

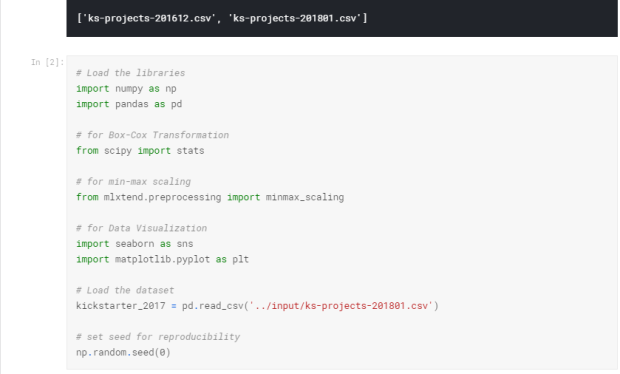

The first thing we’ll need to do is load in the libraries and datasets we’ll be using. For today, we’ll be working with datasets: containing information on landslides that occurred between 2007 and 2016.

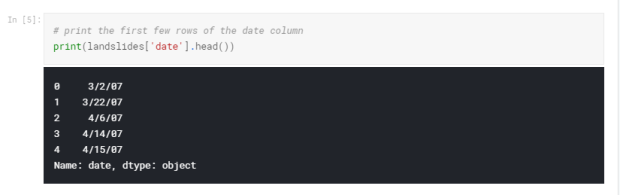

For this part of the challenge, I’ll be working with the date column from the landslides data frame. The very first thing I’m going to do is take a peek at the first few rows to make sure it actually looks like it contains dates.

Yep, those are dates! But just because I, a human, can tell that these are dates doesn’t mean that Python knows that they’re dates. Notice that the at the bottom of the output of head(), you can see that it says that the data type of this column is “object”.

Pandas uses the “object” dtype for storing various types of data types, but most often when you see a column with the dtype “object” it will have strings in it.

If you check the pandas dtype documentation here, you’ll notice that there’s also a specific datetime64 dtypes. Because the dtype of our column is object rather than datetime64, we can tell that Python doesn’t know that this column contains dates.

We can also look at just the dtype of your column without printing the first few rows if we like:

You may have to check the numpy documentation to match the letter code to the dtype of the object. “O” is the code for “object”, so we can see that these two methods give us the same information.

Step 3: Convert our date columns to datetime

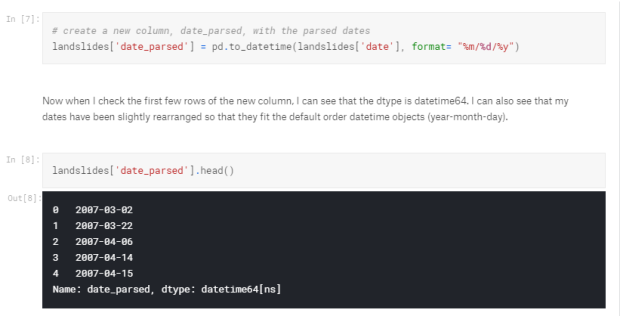

Now that we know that our date column isn’t being recognized as a date, it’s time to convert it so that it is recognized as a date. This is called “parsing dates” because we’re taking in a string and identifying its component parts.

We can pandas what the format of our dates are with a guide called as “strftime directive”, which you can find more information on at this link. The basic idea is that you need to point out which parts of the date are where and what punctuation is between them. There are lots of possible parts of a date, but the most common are %d for day, %m for a month, %y for a two-digit year and %Y for a four-digit year.

Some examples:

1/17/07 has the format “%m/%d/%y” 17–1–2007 has the format “%d-%m-%Y”

Looking back up at the head of the date column in the landslides dataset, we can see that it’s in the format “month/day/two-digit year”, so we can use the same syntax as the first example to parse in our dates:

Now that our dates are parsed correctly, we can interact with them in useful ways.

What if I run into an error with multiple date formats? While we’re specifying the date format here, sometimes you’ll run into an error when there are multiple date formats in a single column. If that happens, you have pandas try to infer what the right date format should be. You can do that like so: landslides[‘date_parsed’] = pd.to_datetime(landslides[‘Date’], infer_datetime_format=True)

Why don’t you always use infer_datetime_format = True? There are two big reasons not to always have pandas guess the time format. The first is that pandas won’t always be able to figure out the correct date format, especially if someone has gotten creative with data entry. The second is that it’s much slower than specifying the exact format of the dates.

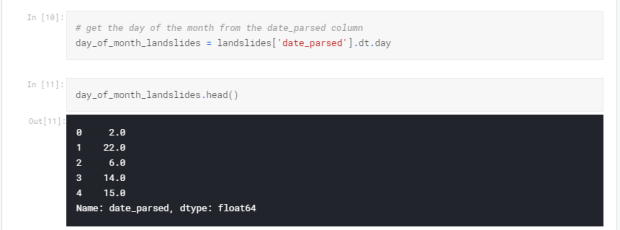

Select just the day of the month from our column

“This messing around with data types is fine, I guess, but what’s the point?” To answer your question, let’s try to get information on the day of the month that a landslide occurred on from the original “date” column, which has an “object” dtype:

We got an error! The important part to look at here is the part at the very end that says AttributeError: Can only use .dt accessor with datetimelike values. We’re getting this error because the dt.day() function doesn’t know how to deal with a column with the dtype “object”. Even though our data frame has dates in it, because they haven’t been parsed we can’t interact with them in a useful way.

Luckily, we have a column that we parsed earlier, and that lets us get the day of the month out no problem:

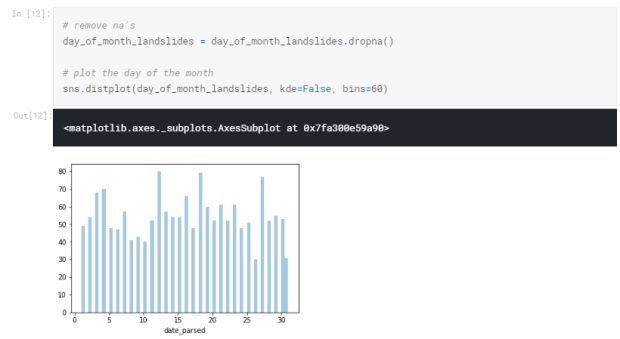

One of the biggest dangers in parsing dates is mixing up the months and days. The to_datetime() function does have very helpful error messages, but it doesn’t hurt to double-check that the days of the month we’ve extracted make sense.

To do this, let’s plot a histogram of the days of the month. We expect it to have values between 1 and 31 and since there’s no reason to suppose the landslides are more common on some days of the month than others, a relatively even distribution. (With a dip on 31 because not all months have 31 days.) Let’s see if that’s the case:

What are encodings?

Character encodings are specific sets of rules for mapping from raw binary byte strings (that look like this: 0110100001101001) to characters that make up human-readable text (like “hi”). There are many different encodings, and if you tried to read in the text with a different encoding than the one it was originally written in, you ended up with a scrambled text called “mojibake” (said like mo-gee-bah-kay). Here’s an example of mojibake:

æ–‡å — 化ã??

You might also end up with an “unknown” characters. There are what gets printed when there’s no mapping between a particular byte and a character in the encoding you’re using to read your byte string in and they look like this:

����������

Character encoding mismatches are less common today than they used to be, but it’s definitely still a problem. There are lots of different character encodings, but the main one you need to know is UTF-8.

UTF-8 is the standard text encoding. All Python code is in UTF-8 and, ideally, all your data should be as well. It’s when things aren’t in UTF-8 that you run into trouble.

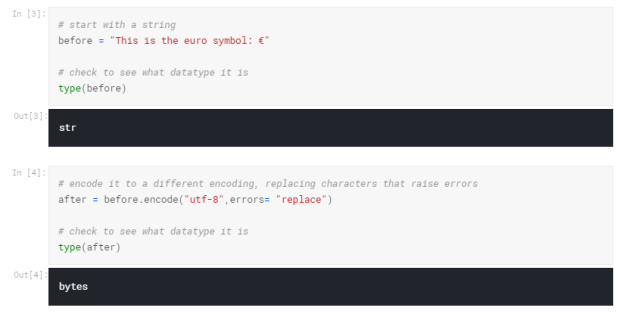

It was pretty hard to deal with encodings in Python 2, but thankfully in Python 3 it’s a lot simpler. (Kaggle Kernels only use Python 3.) There are two main data types you’ll encounter when working with text in Python 3. One is is the string, which is what text is by default.

If you look at a bytes object, you’ll see that it has a b in front of it, and then maybe some text after. That’s because bytes are printed out as if they were characters encoded in ASCII. (ASCII is an older character encoding that doesn’t really work for writing any language other than English.) Here you can see that our euro symbol has been replaced with some mojibake that looks like “\xe2\x82\xac” when it’s printed as if it were an ASCII string.

However, when we try to use a different encoding to map our bytes into a string,, we get an error. This is because the encoding we’re trying to use doesn’t know what to do with the bytes we’re trying to pass it. You need to tell Python the encoding that the byte string is actually supposed to be in.

You can think of different encodings as different ways of recording music. You can record the same music on a CD, cassette tape or 8-track. While the music may sound more-or-less the same, you need to use the right equipment to play the music from each recording format. The correct decoder is like a cassette player or a cd player. If you try to play a cassette in a CD player, it just won’t work.

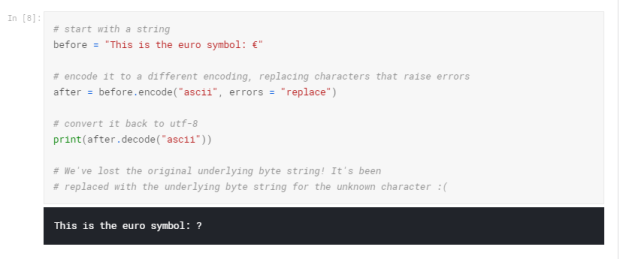

We can also run into trouble if we try to use the wrong encoding to map from a string to bytes. Like I said earlier, strings are UTF-8 by default in Python 3, so if we try to treat them like they were in another encoding we’ll create problems.

For example, if we try to convert a string to bytes for ascii using encode(), we can ask for the bytes to be what they would be if the text was in ASCII. Since our text isn’t in ASCII, though, there will be some characters it can’t handle. We can automatically replace the characters that ASCII can’t handle. If we do that, however, any characters not in ASCII will just be replaced with the unknown character. Then, when we convert the bytes back to a string, the character will be replaced with the unknown character. The dangerous part about this is that there’s no a way to tell which character it should have been. That means we may have just made our data unusable!

This is bad and we want to avoid doing it! It’s far better to convert all our text to UTF-8 as soon as we can and keep it in that encoding. The best time to convert non UTF-8 input into UTF-8 is when you read in files, which we’ll talk about next.

Reading in files with encoding problems Most files you’ll encounter will probably be encoded with UTF-8. This is what Python expects by default, so most of the time you won’t run into problems. However, sometimes you’ll get an error like this:

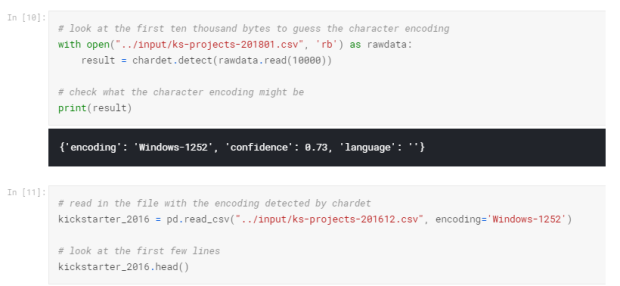

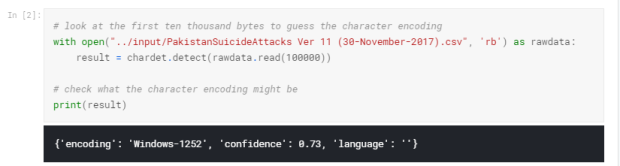

I’m going to just look at the first ten thousand bytes of this file. This is usually enough for a good guess about what the encoding is and is much faster than trying to look at the whole file. (Especially with a large file this can be very slow.) Another reason to just look at the first part of the file is that we can see by looking at the error message that the first problem is the 11th character. So we probably only need to look at the first little bit of the file to figure out what’s going on.

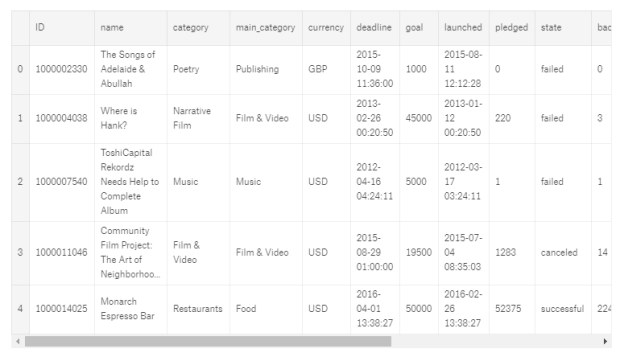

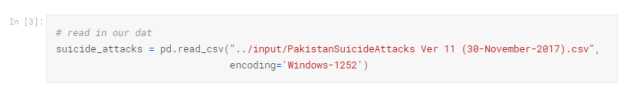

Yep, looks like chardet was right! The file reads in with no problem (although we do get a warning about datatypes) and when we look at the first few rows it seems to be fine.

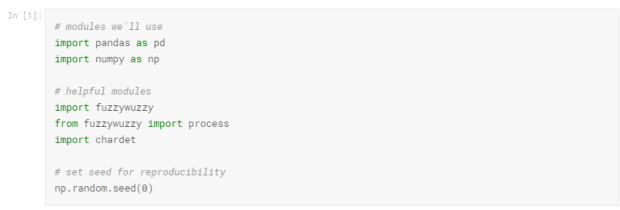

Inconsistent Data EntryStep 1: Load the libraries and Dataset ( Pakistan Suicide Attacks)

When I tried to read in the PakistanSuicideAttacks Ver 11 (30-November-2017).csvfile the first time, I got a character encoding error, so I’m going to quickly check out what the encoding should be.

Now we’re ready to get started! You can, as always, take a moment here to look at the data and get familiar with it.

Do some preliminary text pre-processing

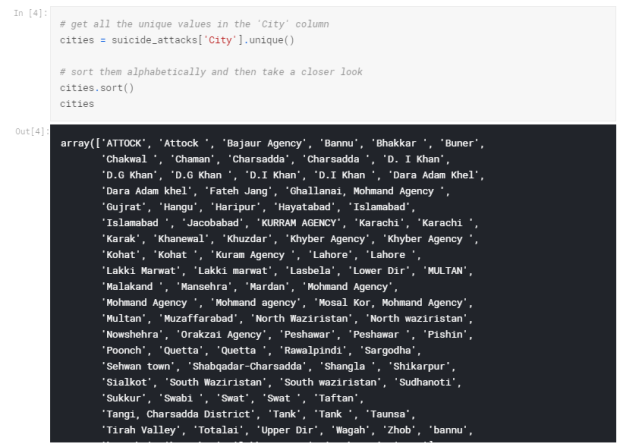

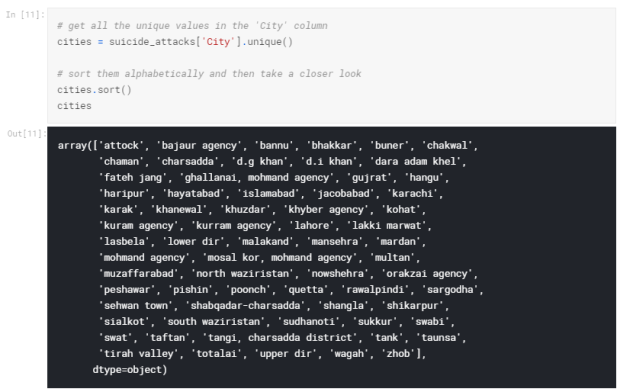

For this exercise, I’m interested in cleaning up the “City” column to make sure there’s no data entry inconsistencies in it. We could go through and check each row by hand, of course, and hand-correct inconsistencies when we find them. There’s a more efficient way to do this though!

Just looking at this, I can see some problems due to inconsistent data entry: ‘Lahore’ and ‘Lahore ‘, for example, or ‘Lakki Marwat’ and ‘Lakki marwat’.

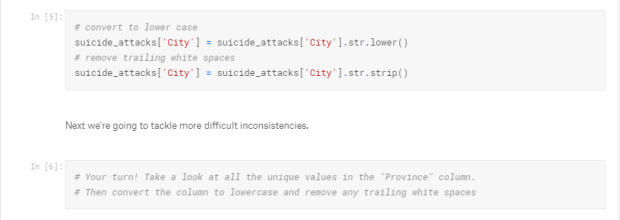

The first thing I’m going to do is make everything lower case (I can change it back at the end if I like) and remove any white spaces at the beginning and end of cells. Inconsistencies in capitalizations and trailing white spaces are very common in text data and you can fix a good 80% of your text data entry inconsistencies by doing this.

Use fuzzy matching to correct inconsistent data entry

Alright, let’s take another look at the city column and see if there’s any more data cleaning we need to do.

It does look like there are some remaining inconsistencies: ‘d. i khan’ and ‘d.i khan’ should probably be the same. (I looked it up and ‘d.g khan’ is a seperate city, so I shouldn’t combine those.)

I’m going to use the fuzzywuzzy package to help identify which string are closest to each other. This dataset is small enough that we could probably could correct errors by hand, but that approach doesn’t scale well. (Would you want to correct a thousand errors by hand? What about ten thousand? Automating things as early as possible is generally a good idea. Plus, it’s fun!

Fuzzy matching: The process of automatically finding text strings that are very similar to the target string. In general, a string is considered “closer” to another one the fewer characters you’d need to change if you were transforming one string into another. So “apple” and “snapple” are two changes away from each other (add “s” and “n”) while “in” and “on” and one change away (rplace “i” with “o”). You won’t always be able to rely on fuzzy matching 100%, but it will usually end up saving you at least a little time.

Fuzzywuzzy returns a ratio given two strings. The closer the ratio is to 100, the smaller the edit distance between the two strings. Here, we’re going to get the ten strings from our list of cities that have the closest distance to “d.i khan”.

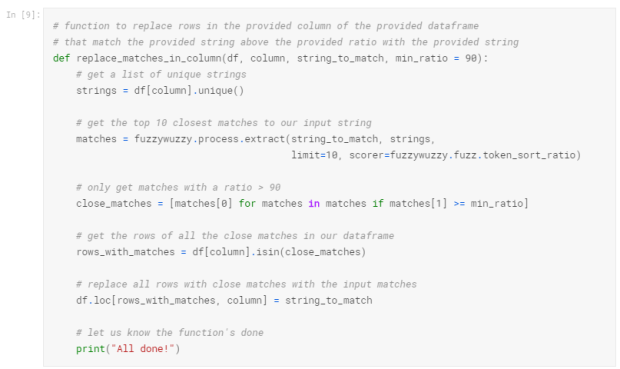

We can see that two of the items in the cities are very close to “d.i khan”: “d. i khan” and “d.i khan”. We can also see the “d.g khan”, which is a seperate city, has a ratio of 88. Since we don’t want to replace “d.g khan” with “d.i khan”, let’s replace all rows in our City column that have a ratio of > 90 with “d. i khan”.

To do this, I’m going to write a function. (It’s a good idea to write a general purpose function you can reuse if you think you might have to do a specific task more than once or twice. This keeps you from having to copy and paste code too often, which saves time and can help prevent mistakes.)

If you like this tutorial do like, share and comment as well as subscribe to my blogs

TheMenYouWantToBe

Data Cleaning was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.

Disclaimer

The views and opinions expressed in this article are solely those of the authors and do not reflect the views of Bitcoin Insider. Every investment and trading move involves risk - this is especially true for cryptocurrencies given their volatility. We strongly advise our readers to conduct their own research when making a decision.