
By Rostislav Rumenov and Daniel Sharifi

The update call is a core operation in the Internet Computer Protocol, enabling users to change the state of canisters (smart contracts hosted on the Internet Computer). This post explores each stage of the update call lifecycle and highlights how the Tokamak milestone has reduced its end-to-end latency.

Background

To fully understand the update call lifecycle, it helps to cover a few foundational components of the Internet Computer’s architecture:

1. Canisters

Canisters are smart contracts on the Internet Computer Protocol (ICP) that store state and execute code. Users interact with canisters by submitting update calls, which initiate actions on the smart contract.

2. Subnets

Subnets are groups of nodes that host and manage canisters. Each subnet functions as an independent blockchain network, enabling ICP to scale by distributing load across multiple subnets, with each managing a unique set of canisters. A smart contract on one subnet can communicate with another smart contract on a different subnet by sending messages.

3. Replicas

Within each subnet, nodes — called replicas — store the code and data of every canister on that subnet. Each replica also executes the canister’s code. This replication of storage and computation ensures fault tolerance, allowing canister smart contracts to operate reliably even if some nodes crash or become compromised by malicious actors.
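The reason replication leaves every replica with the same canister state can be sketched in a few lines of Python. This is a toy model under stated assumptions: a hypothetical `ToyCanister` holding a single counter, and a message order the subnet has already agreed on. Because execution is deterministic, applying the same ordered messages on every replica yields identical state, which is what makes it meaningful to compare results across replicas.

```python
from dataclasses import dataclass, field


@dataclass
class ToyCanister:
    """Hypothetical canister state: a single counter plus an applied-message log."""
    counter: int = 0
    log: list = field(default_factory=list)

    def apply(self, message: tuple) -> None:
        # Deterministic execution: the same message always has the same effect.
        op, amount = message
        if op == "add":
            self.counter += amount
        elif op == "sub":
            self.counter -= amount
        self.log.append(message)


def replicate(messages: list, n_replicas: int) -> list:
    """Apply one agreed-upon message order on every replica of a toy subnet."""
    replicas = [ToyCanister() for _ in range(n_replicas)]
    for msg in messages:
        for replica in replicas:
            replica.apply(msg)
    return replicas


replicas = replicate([("add", 5), ("sub", 2), ("add", 10)], n_replicas=4)
print({r.counter for r in replicas})  # → {13}
```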

4. Boundary Nodes

Boundary Nodes are responsible for routing requests to the appropriate subnet and balancing the load across replicas within that subnet.

Life of an Update Call

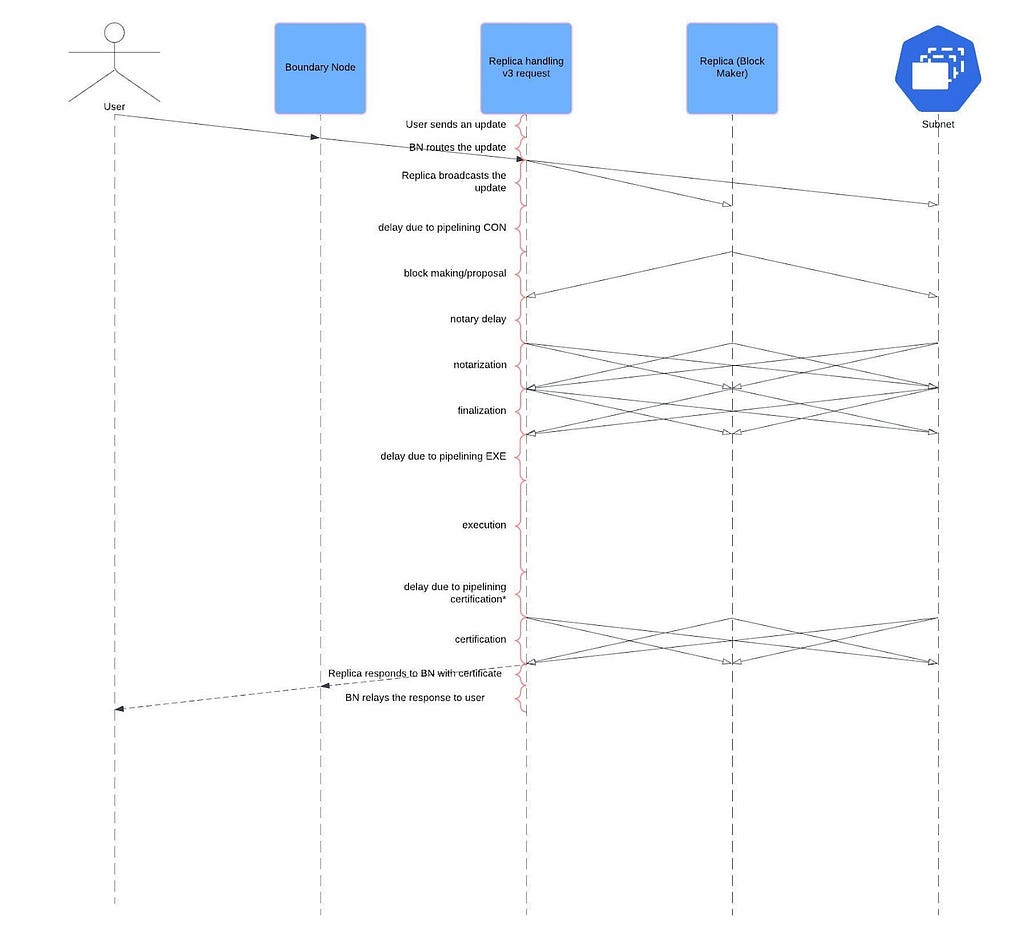

The diagram above outlines the life cycle of an update call on the Internet Computer Protocol.

1. Internet Computer (IC) Receives the Update Call

The update call begins with the user, who sends the request using an IC agent implementation (e.g., agent-rs or agent-js). These libraries provide a user-friendly interface for interacting with ICP, handling request formatting, signing, and communication protocols. The request is then sent to a Boundary Node, whose address is resolved through DNS.

2. Routing Through the Boundary Node

The Boundary Node routes the update call to a replica within the subnet hosting the target canister. A round-robin selection approach distributes the request load across F+1 replicas to ensure performance and reliability. Here, F represents ICP’s fault tolerance threshold — the maximum number of faulty replicas that can be tolerated in each subnet. For more on fault tolerance in the Internet Computer, refer to this link.
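To make F concrete: the usual Byzantine fault tolerance bound, which we assume here, is n ≥ 3F + 1 for a subnet of n replicas, so F = (n - 1) // 3 in integer arithmetic, and a 13-node subnet tolerates F = 4 faulty replicas. Since at most F replicas can be faulty, any F + 1 replicas include at least one honest one. The sketch below pairs that arithmetic with a hypothetical `RoundRobinRouter`; the class, the replica names, and the choice of the first F + 1 replicas are illustrative and do not reflect the Boundary Node's actual routing code.

```python
from itertools import cycle


def fault_threshold(n: int) -> int:
    """Largest F such that n >= 3F + 1 (the usual BFT bound, assumed here)."""
    return (n - 1) // 3


class RoundRobinRouter:
    """Hypothetical router that cycles through a fixed set of F + 1 replicas."""

    def __init__(self, replicas: list):
        f = fault_threshold(len(replicas))
        # Any F + 1 replicas include at least one honest replica,
        # since at most F of them can be faulty.
        self.targets = replicas[: f + 1]
        self._cursor = cycle(self.targets)

    def next_replica(self) -> str:
        return next(self._cursor)


subnet = [f"replica-{i}" for i in range(13)]
print(fault_threshold(13))  # → 4
router = RoundRobinRouter(subnet)
print([router.next_replica() for _ in range(6)])
# → ['replica-0', 'replica-1', 'replica-2', 'replica-3', 'replica-4', 'replica-0']
```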

3. Broadcasting the Update Call

Once a replica receives the update call, it broadcasts the request to other replicas in the subnet using an abortable broadcast primitive. This method ensures strong delivery guarantees, even in cases of network congestion, peer or link failure, and backpressure.

The abortable broadcast is essential for efficient inter-replica communication in a Byzantine Fault Tolerant (BFT) environment. It preserves bandwidth, ensures all data structures remain bounded even in the presence of malicious peers, and maintains reliable communication for consistent update processing within ICP. For more technical details, you can refer to the paper explaining the abortable broadcast solution here.
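Two of the properties above, bounded data structures and the ability to abort, can be illustrated with a heavily simplified model. The `AbortableSender` class below is hypothetical and is not the protocol from the paper or the replica implementation: it caps the number of in-flight artifacts per peer, so a flood of messages cannot grow memory without bound, and it lets the sender abort an artifact it no longer needs to deliver, freeing its slot.

```python
class AbortableSender:
    """Hypothetical per-peer sender with a bounded number of in-flight slots."""

    def __init__(self, max_slots: int):
        self.max_slots = max_slots
        self.slots = {}  # artifact id -> payload still being broadcast

    def publish(self, artifact_id: str, payload: bytes) -> bool:
        # Refuse new work when every slot is taken (backpressure) instead of
        # letting the queue grow without bound.
        if len(self.slots) >= self.max_slots:
            return False
        self.slots[artifact_id] = payload
        return True

    def abort(self, artifact_id: str) -> None:
        # Stop broadcasting an artifact that has become obsolete and free its
        # slot; aborting an unknown id is a no-op.
        self.slots.pop(artifact_id, None)

    def pending(self) -> list:
        return sorted(self.slots)


sender = AbortableSender(max_slots=2)
print(sender.publish("msg-a", b"..."), sender.publish("msg-b", b"..."))  # → True True
print(sender.publish("msg-c", b"..."))  # → False (all slots in use)
sender.abort("msg-a")
print(sender.publish("msg-c", b"..."), sender.pending())  # → True ['msg-b', 'msg-c']
```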

4. Block Proposal (Block Making)

One replica, designated as the Block Maker, takes on the responsibility of creating a new block that includes the update call. The Block Maker then proposes this block to other replicas in the subnet for processing.
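How can replicas agree on the Block Maker without an extra round of messages? The sketch below assumes, following ICP's consensus design, that replicas are ranked each round using a shared random beacon, with the rank-0 replica acting as Block Maker. The hypothetical `rank_replicas` function models this by seeding Python's `random.Random` with a hash of the beacon; the real protocol derives its ranking differently. The point is only that every replica computing from the same beacon obtains the same order.

```python
import hashlib
import random


def rank_replicas(replicas: list, beacon: bytes) -> list:
    """Hypothetical ranking: shuffle replicas with a PRNG seeded from the beacon.

    Every replica that sees the same beacon computes the same order, so they
    all agree on who the rank-0 Block Maker is.
    """
    seed = int.from_bytes(hashlib.sha256(beacon).digest(), "big")
    order = sorted(replicas)  # start from a canonical order
    random.Random(seed).shuffle(order)
    return order


replicas = ["r0", "r1", "r2", "r3"]
ranking = rank_replicas(replicas, beacon=b"round-42-beacon")
block_maker = ranking[0]
# The input order does not matter; only the beacon does.
print(ranking == rank_replicas(list(reversed(replicas)), b"round-42-beacon"))  # → True
```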

Steps 4 through 7 make up the phases of a consensus round on the Internet Computer, where replicas work together to reach agreement on the proposed block. For a detailed explanation of the consensus mechanism, you can read more here.

5. Notary Delay

A brief delay, known as the notary delay, is introduced to synchronize the network and give all replicas time to receive the block proposal. This delay is critical for maintaining a consistent state across replicas.
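We assume here, following ICP's consensus design, that the wait before notarizing a proposal grows with the rank of its Block Maker, so the rank-0 proposal normally wins. The sketch uses a hypothetical linear rule, `base_delay + rank * per_rank_delay`, with made-up values rather than ICP's configured parameters. It also shows why a shorter notary delay, which the Tokamak milestone made possible, shortens a consensus round when the proposal arrives quickly.

```python
def notary_delay(rank: int, base_delay: float, per_rank_delay: float) -> float:
    """Hypothetical notary delay (seconds) that grows with Block Maker rank."""
    return base_delay + rank * per_rank_delay


def earliest_notarization(proposals: dict, base_delay: float, per_rank_delay: float):
    """Given {rank: arrival_time}, return the (rank, time) notarized first.

    A proposal can be notarized once it has arrived AND its rank's delay has
    elapsed since the start of the round.
    """
    ready = {
        rank: max(arrival, notary_delay(rank, base_delay, per_rank_delay))
        for rank, arrival in proposals.items()
    }
    return min(ready.items(), key=lambda item: item[1])


# Rank-0 proposal arrives at 0.15 s, rank-1 at 0.10 s (illustrative numbers).
print(earliest_notarization({0: 0.15, 1: 0.10}, base_delay=0.3, per_rank_delay=1.0))
# → (0, 0.3)
print(earliest_notarization({0: 0.15, 1: 0.10}, base_delay=0.1, per_rank_delay=1.0))
# → (0, 0.15)
```

With the longer base delay, the round waits for the delay even though the proposal arrived at 0.15 s; with the shorter one, notarization can proceed as soon as the proposal is in.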

6. Notarization

In the notarization phase, replicas review the proposed block for validity and agree to notarize it. Notarization is an initial consensus step, signaling that the block meets ICP’s criteria.

7. Finalization

Once notarized, the block undergoes finalization — a process where all replicas in the subnet agree on its validity, ensuring it is accepted and added to the chain. Finalization guarantees that all nodes acknowledge the block, securing consensus across the network.
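Notarization and finalization both complete once enough replicas have signed. Assuming a threshold of n - F shares (the common choice when n ≥ 3F + 1, which we believe ICP follows), any two quorums overlap in at least n - 2F ≥ F + 1 replicas, so they always share an honest one. The functions below are hypothetical helpers that model shares as sets of replica names rather than real threshold signatures.

```python
def quorum(n: int) -> int:
    """Shares needed, assuming a threshold of n - F with F = (n - 1) // 3."""
    f = (n - 1) // 3
    return n - f


def is_notarized(shares: set, n: int) -> bool:
    """A block counts as notarized once enough distinct replicas signed it."""
    return len(shares) >= quorum(n)


def is_finalized(finalization_shares: set, notarized: bool, n: int) -> bool:
    # Finalization is only considered for a block that is already notarized.
    return notarized and len(finalization_shares) >= quorum(n)


n = 13
print(quorum(n))  # → 9
notary_shares = {f"replica-{i}" for i in range(9)}
notarized = is_notarized(notary_shares, n)
print(notarized, is_finalized({f"replica-{i}" for i in range(8)}, notarized, n))
# → True False
```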

8. Execution

Upon finalization, the block enters the execution phase, where the canister’s state is updated according to the update call. Several factors influence the latency of this phase, including:

- Canister Code Complexity: The complexity of a canister’s code directly impacts execution speed. More complex logic or data-heavy operations may introduce additional latency.

- Subnet Load: Since each subnet hosts multiple canisters, execution resources are shared. High load on a subnet can increase latency as canisters compete for computational resources.

Even simple operations can experience latency depending on subnet activity. During peak usage, an update call may face delays while waiting for resources.
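The effect of subnet load on an otherwise cheap call can be illustrated with a toy scheduler. This sketch assumes, for illustration only, that all messages on the subnet share one FIFO queue and that each execution round has a fixed instruction budget; ICP's real scheduler is more sophisticated (for example, it executes canisters in parallel). The hypothetical `completion_rounds` function reports the round in which each message finishes.

```python
def completion_rounds(costs: list, round_budget: int) -> list:
    """Return the (1-based) execution round in which each queued message finishes.

    Hypothetical model: one FIFO queue, at most `round_budget` instructions per
    round, and a message is never split across rounds (if it does not fit in
    the current round, it waits for the next one).
    """
    rounds = []
    current_round, used = 1, 0
    for cost in costs:
        if cost > round_budget:
            raise ValueError("message exceeds the per-round budget")
        if used + cost > round_budget:
            current_round += 1
            used = 0
        used += cost
        rounds.append(current_round)
    return rounds


# The same cheap message (cost 1) finishes later when heavier work is queued first.
print(completion_rounds([1], round_budget=10))           # → [1]
print(completion_rounds([6, 6, 6, 1], round_budget=10))  # → [1, 2, 3, 3]
```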

9. Certification Sharing

Once executed, replicas share certification information within the subnet, verifying that the update call was executed accurately and that the resulting state change is consistent.
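Certification can be pictured as replicas vouching for a hash of the same resulting state. In this sketch, each replica's share is modeled as a plain state hash, and a hash counts as certified once at least n - F replicas report it (assuming F = (n - 1) // 3). The `state_hash` and `certify` helpers are hypothetical; ICP uses threshold signatures rather than bare hashes.

```python
import hashlib
from collections import Counter


def state_hash(state: dict) -> str:
    # Hash a canonical (sorted) encoding so equal states hash equally.
    encoded = repr(sorted(state.items())).encode()
    return hashlib.sha256(encoded).hexdigest()


def certify(hashes_by_replica: dict, n: int):
    """Return the certified state hash, or None if no hash reaches the quorum."""
    if not hashes_by_replica:
        return None
    threshold = n - (n - 1) // 3
    best_hash, votes = Counter(hashes_by_replica.values()).most_common(1)[0]
    return best_hash if votes >= threshold else None


honest = state_hash({"counter": 13})
faulty = state_hash({"counter": 99})
shares = {f"replica-{i}": honest for i in range(3)}
shares["replica-3"] = faulty  # one faulty replica in a 4-replica subnet
print(certify(shares, n=4) == honest)  # → True
```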

10. Replica Responds with Certificate

After certification, the replica sends a response containing the certificate to the Boundary Node, signaling the successful completion of the update call.

11. Boundary Node Relays the Response

Finally, the Boundary Node relays the certified response to the user, marking the end of the update call lifecycle.

Tokamak Milestone

The streamlined flow of the update call lifecycle described above was significantly enhanced by the Tokamak milestone, which introduced several key improvements to the Internet Computer:

- Abortable Broadcast over QUIC: The abortable broadcast primitive, implemented on top of the QUIC protocol, now manages all replica-to-replica communication, providing reliable and efficient message delivery across the network. This solution allowed for a significant reduction in the notary delay, thereby speeding up consensus without sacrificing reliability.

- Enhanced Boundary Node Routing: Improved routing logic in Boundary Nodes optimizes the distribution of update calls to replicas, as seen in the second stage of the life cycle.

- Synchronous Update Calls: The introduction of synchronous update calls allows for direct responses to users immediately after certification, simplifying and speeding up the final stages of the life cycle.
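The benefit of synchronous update calls is easiest to see by comparing two client strategies. The sketch assumes a hypothetical client that, without synchronous calls, checks for the result at fixed intervals after submitting, so the observed latency is rounded up to the next check; with a synchronous call, the response is returned as soon as the call is certified. The `poll_interval` and `return_time` values are illustrative, not measured.

```python
import math


def polled_latency(certified_at: float, poll_interval: float, return_time: float) -> float:
    """Latency seen by a hypothetical client that polls for the result.

    Polls happen at poll_interval, 2 * poll_interval, ...; the first poll at or
    after certification picks up the response, which then takes `return_time`
    to reach the client.
    """
    polls_needed = math.ceil(certified_at / poll_interval)
    return polls_needed * poll_interval + return_time


def synchronous_latency(certified_at: float, return_time: float) -> float:
    """Latency when the response is sent back as soon as the call is certified."""
    return certified_at + return_time


# Illustrative numbers (seconds): the call is certified 1.25 s after submission.
print(polled_latency(1.25, poll_interval=1.0, return_time=0.25))  # → 2.25
print(synchronous_latency(1.25, return_time=0.25))                # → 1.5
```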

These advancements have collectively boosted the efficiency, speed, and reliability of update calls on the Internet Computer, creating a more seamless user experience and a more robust protocol.

Key Factors Affecting Update Call Latency

End-to-end latency on the Internet Computer is influenced by several prominent factors:

- Subnet Topology: The physical and network layout of a subnet affects the round-trip time (RTT) between replicas. Shorter RTTs facilitate faster communication, whereas larger geographical distances between replicas can increase latency.

- Subnet Load: The number of canisters and the volume of messages processed on a subnet impact latency. Since the IC operates as shared infrastructure, canisters co-located on a heavily loaded subnet can experience higher latency due to competing demands on the same resources.

- Pipelined Architecture: ICP’s architecture is designed to maximize throughput by pipelining consensus and execution stages. This design allows multiple processes to run concurrently, but it introduces a tradeoff: while throughput is increased, each stage in the pipeline may experience additional latency as it waits for the previous stages to complete.
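The pipelining tradeoff can be seen in a two-stage model. The sketch assumes a hypothetical pipeline in which consensus takes 1.0 s and execution 1.5 s per block, with each stage handling one block at a time; the numbers are illustrative, not ICP measurements.

```python
def pipeline_finish_times(arrivals: list, stage_times: list) -> list:
    """Finish time of each block in a linear pipeline (hypothetical model).

    Each stage handles one block at a time; a block enters a stage only when
    it has left the previous stage AND the stage has finished the prior block.
    """
    free_at = [0.0] * len(stage_times)  # when each stage becomes idle
    finishes = []
    for arrival in arrivals:
        t = arrival
        for i, duration in enumerate(stage_times):
            start = max(t, free_at[i])  # may wait for a busy stage
            t = start + duration
            free_at[i] = t
        finishes.append(t)
    return finishes


arrivals = [0.0, 1.0, 2.0, 3.0]  # one new block per second
stages = [1.0, 1.5]              # e.g. consensus, then execution
done = pipeline_finish_times(arrivals, stages)
print(done)                                     # → [2.5, 4.0, 5.5, 7.0]
print([d - a for d, a in zip(done, arrivals)])  # → [2.5, 3.0, 3.5, 4.0]
```

Because consensus on the next block overlaps with execution of the previous one, the four blocks finish by t = 7.0 s, whereas running each block through both stages before starting the next would take 4 × 2.5 = 10.0 s. The cost is that later blocks queue behind the slower execution stage, so their individual latency grows from 2.5 s to 4.0 s.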

ICP’s design prioritizes high throughput and scalability, balancing these requirements with the performance tradeoffs inherent in a distributed, decentralized network.

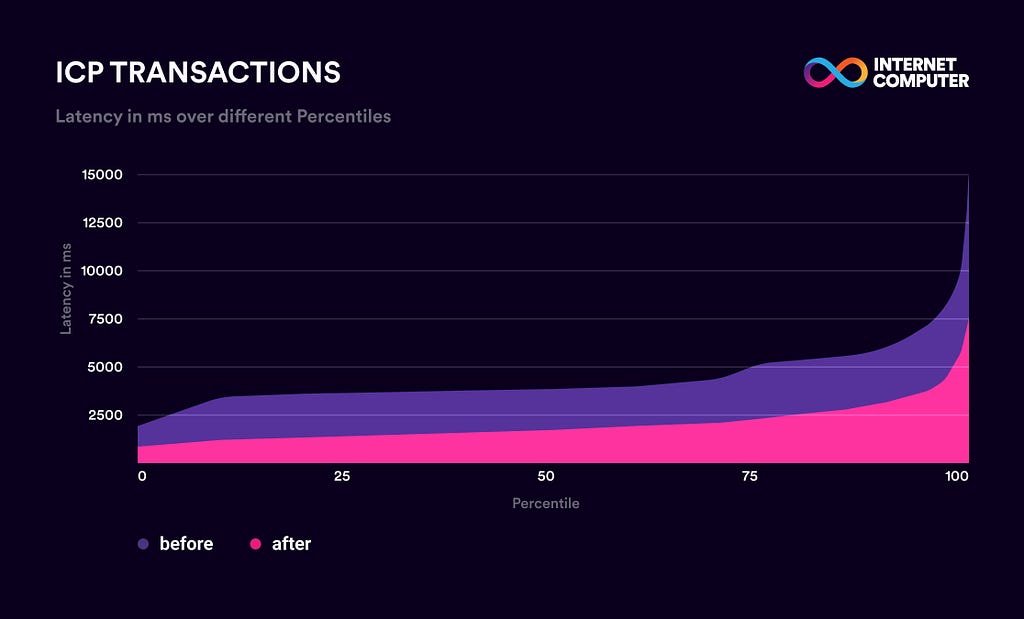

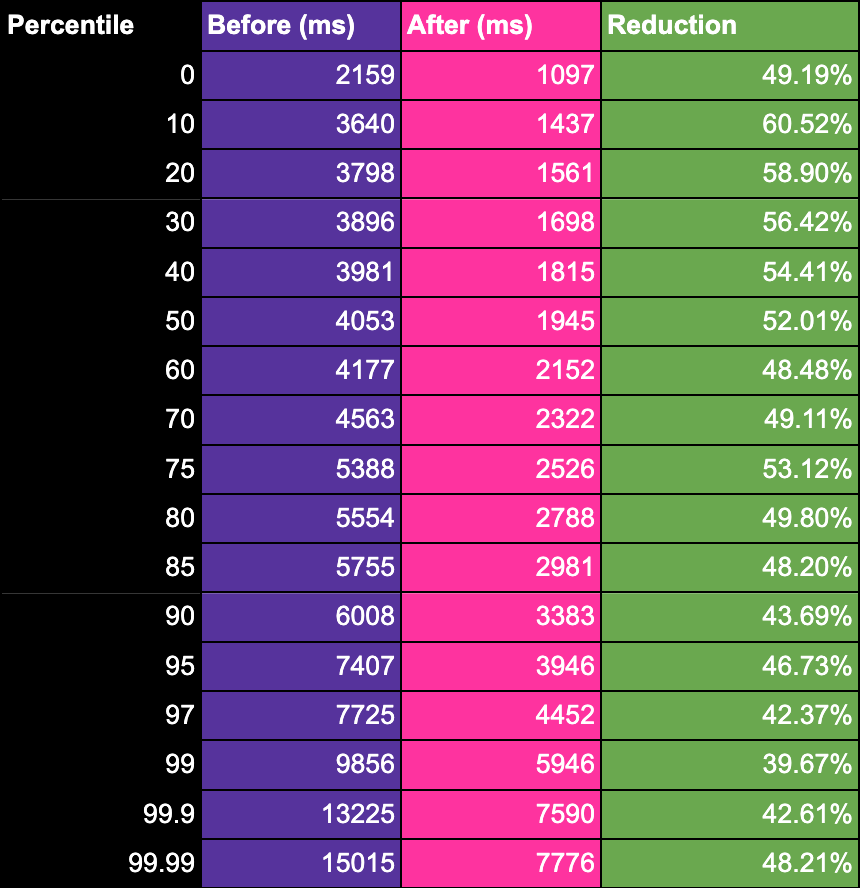

Benchmarks of ICP before and after Tokamak

To quantify the impact of the Tokamak milestone, we measured the end-to-end (E2E) latency for three different smart contracts hosted on ICP. As a baseline, we ran the benchmarks before the Tokamak milestone was rolled out, then repeated them after the milestone was completed. The results are very exciting and show that users can expect much lower latencies from ICP going forward, leading to a better user experience.

For each use case we benchmarked, we've attached a table and a figure showing the E2E latency from the 0th to the 99.99th percentile.
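As a reminder of what the percentile figures mean, here is a minimal sketch of the nearest-rank percentile definition applied to a list of E2E latency samples. The sample values are invented, and we do not know which percentile estimator the benchmark tooling actually used.

```python
import math


def percentile(samples: list, p: float) -> float:
    """Nearest-rank percentile, p in [0, 100]; p = 0 returns the minimum."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


latencies = [2.1, 2.3, 1.9, 2.2, 5.0, 2.0, 2.4, 2.2, 2.1, 9.8]  # seconds, made up
for p in (0, 50, 90, 99.99):
    print(p, percentile(latencies, p))
```

One consequence of this definition: with fewer than 10,000 samples, the 99.99th percentile is simply the slowest sample, which is why the tail percentiles benefit from long benchmark runs.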

ICP Ledger

The ICP ledger is a smart contract hosted on the NNS subnet that serves as the ledger for ICP tokens. Users can interact with the ledger in many ways, but the most popular dapp and frontend is the NNS dapp, which is also hosted on the NNS subnet.

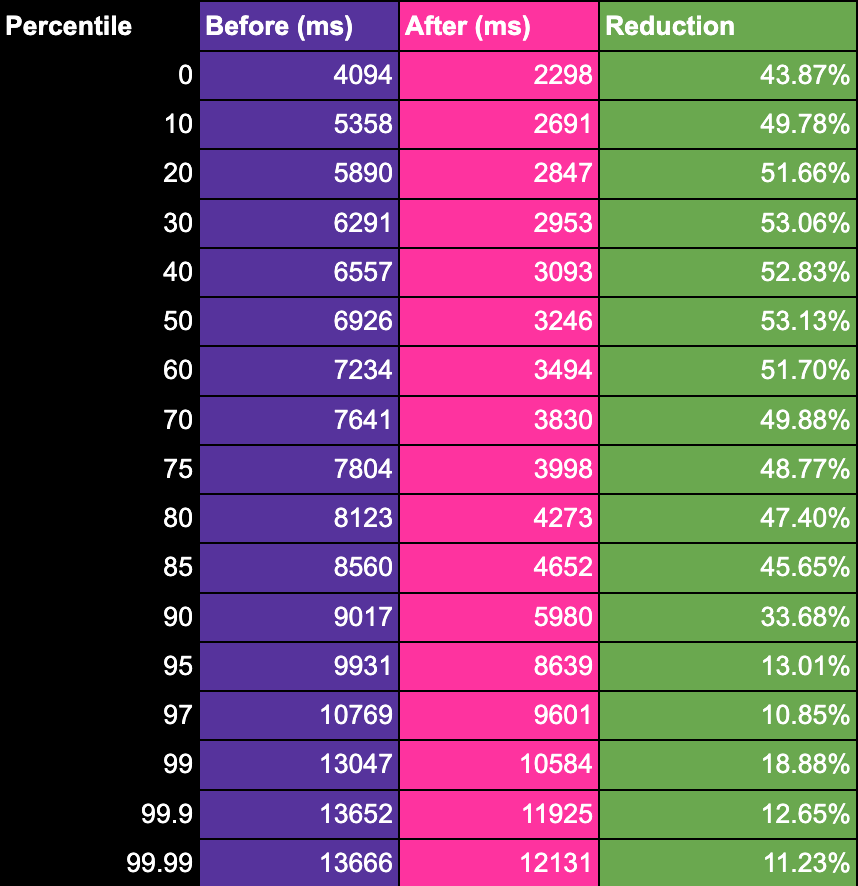

We ran benchmarks over several days, during which we sent ICP tokens in a loop and recorded the time from submitting a transaction to receiving a response from ICP, together with a certificate proving that the tokens were sent. Average latency decreased by 51%, from 4.57 seconds to 2.23 seconds.

Figure 1

Table 1

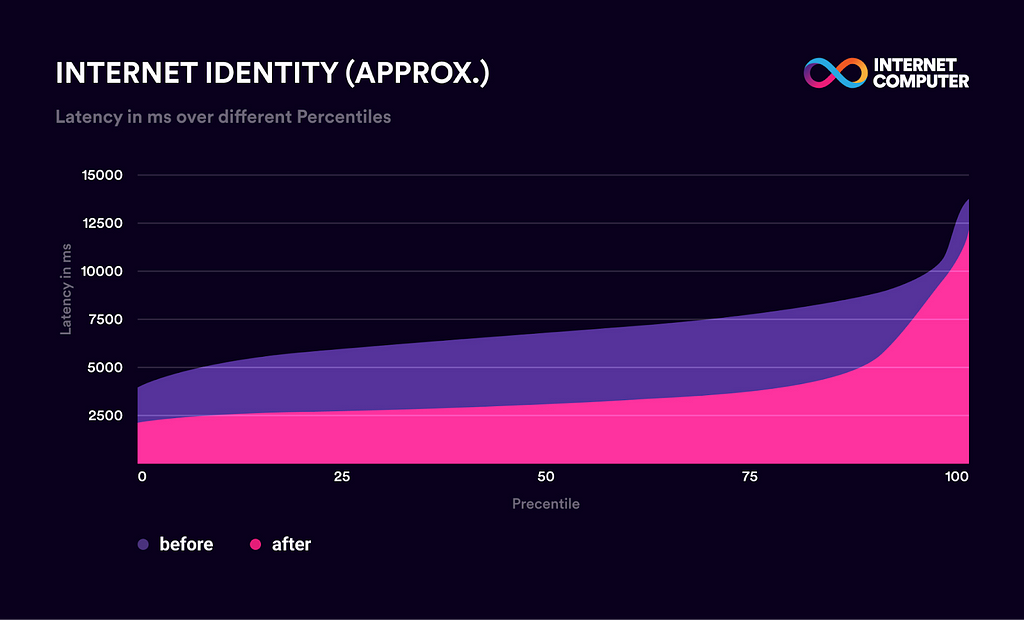

Internet Identity

The Internet Identity dapp, hosted on the Internet Identity subnet, is a federated authentication service running on the Internet Computer. If you have interacted with dapps on ICP, you have most likely signed in with Internet Identity. This benchmark measures the time it takes to sign in with the Internet Identity service.

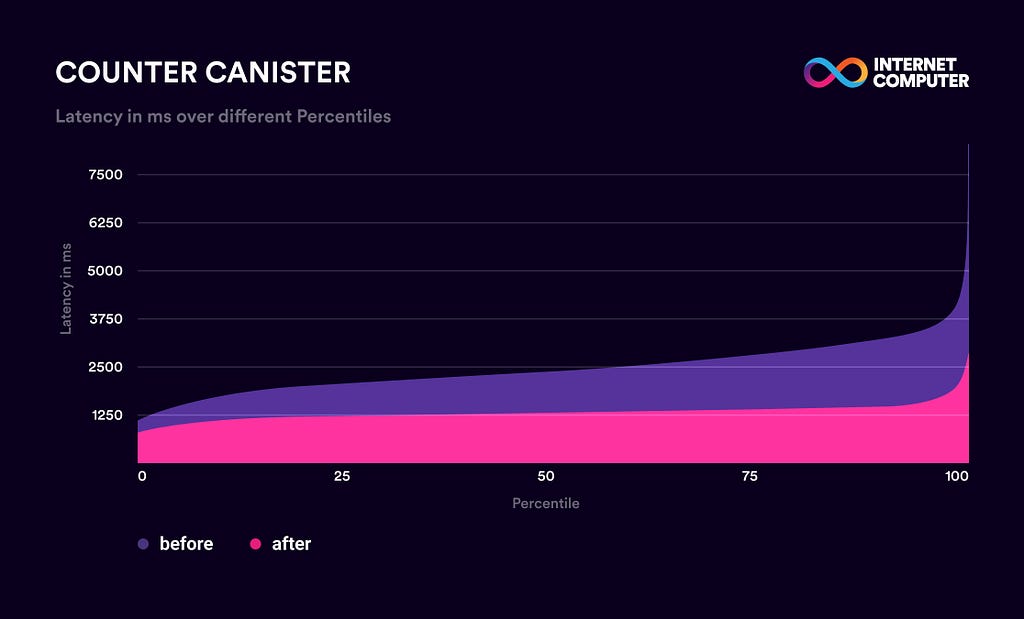

Our results show that the average sign-in latency was reduced from 7.12 seconds to 3.9 seconds, a 45.2% reduction! Figure 2 below shows the before and after results for different percentiles. At the 50th percentile, i.e. the median, sign-in time was reduced from 6.9 seconds to 3.2 seconds. The purple area highlights the time saved at each percentile. Table 2 gives a more detailed breakdown of the results for each percentile.

Figure 2

Table 2

Application Subnets

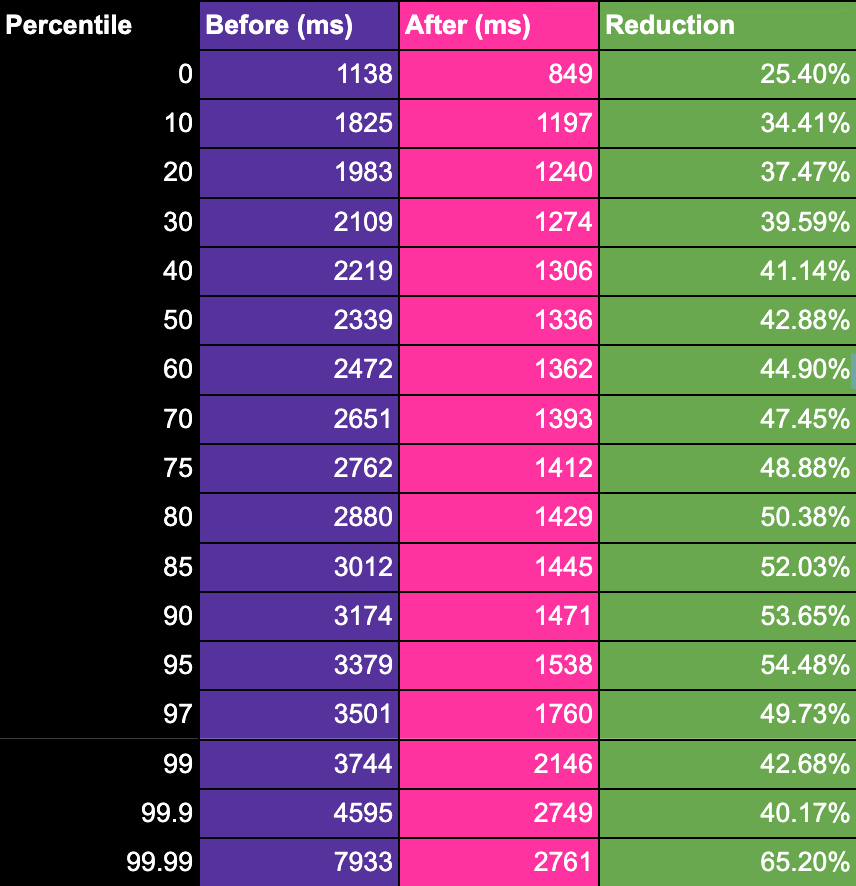

We have a canister hosted on snjp, a 13-node application subnet, which lets us test how 13-node application subnets improved after the Tokamak milestone. Our benchmarks show that average E2E latency decreased from 2.43 seconds to 1.35 seconds, a reduction of about 44%.

Figure 3

Table 3
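The percentage reductions quoted for the three benchmarks follow directly from the reported averages (and, for Internet Identity, the reported median). A short Python check, using only the numbers given in the text:

```python
def reduction_pct(before: float, after: float) -> float:
    """Relative reduction in percent: (before - after) / before * 100."""
    return (before - after) / before * 100


# E2E latencies (seconds) reported in this post, before and after Tokamak.
benchmarks = {
    "ICP ledger, average": (4.57, 2.23),
    "Internet Identity sign-in, average": (7.12, 3.9),
    "Internet Identity sign-in, median": (6.9, 3.2),
    "13-node application subnet, average": (2.43, 1.35),
}
for name, (before, after) in benchmarks.items():
    print(f"{name}: {reduction_pct(before, after):.1f}% lower")
```

This prints reductions of about 51.2%, 45.2%, 53.6%, and 44.4%. The median figure for Internet Identity is not stated as a percentage in the text but follows from the 6.9 s and 3.2 s medians.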

Tokamak: A Significant Improvement in End-to-End Latency was originally published in The Internet Computer Review on Medium, where people are continuing the conversation by highlighting and responding to this story.
