
Here I show you how to visualize contract information in a Word document in a web browser.

For context, suppose you have an AI pipeline which processes Word or PDF documents. Maybe they are legal documents; contracts perhaps.

How do you present the results to a human user?

This is, of course, a critical client-facing UI design choice: how do your customers interact with your product? Is it a joy to use? Does the document look the way it does in Word?

But document visualization is equally important in any initial AI training phase, and in online learning.

In the initial training phase, you’ll need to prepare training data sets. How easy can you make this? Then, when you are evaluating the results, same question: can QA easily see what the AI got right and wrong?

At Native Documents, we’ve built an API which makes it easy for you to present results against the Word document in your web app. In this article, we’ll demonstrate interacting with LexNLP, the only open source NLP library we know of which is designed for working with legal text.

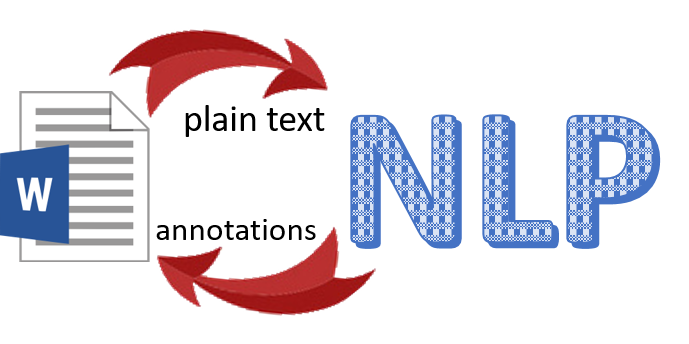

The general pattern is as follows:

- get the document as text or rich text

- push it through your NLP pipeline

- use our ranges API to annotate the document with the NLP results. The results will appear as Word-style comments

- optionally, attach any custom user actions to the comments (e.g. accept, dismiss, flag/dispute)

- optionally, export the annotated document to Word, with the annotations as Word comments

What can we do with it?

There are lots of AI applications in law. These include AI-assisted contract drafting, review and negotiation. Until a contract is signed, you typically need to be able to edit it, which means you need a Word format document, not a PDF.

Here we’ll work through an “entity extraction” example.

An entity is an item of interest in the document. For example, a party name or date: the sorts of things you need to find if you are ingesting a contract into a contract management system, or scrubbing sensitive information.
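To make the idea concrete, here is a toy extractor (not LexNLP) that finds dates written like "June 1, 2019" using a regular expression and reports each one with its character range. The function name `find_dates` and the date pattern are our own illustrative assumptions; the point is the output shape, a label plus start and end offsets into the plain text, since those offsets are what later get mapped back onto the Word document.

```python
import re

# Hypothetical toy extractor: matches dates such as "June 1, 2019" only.
MONTHS = ("January|February|March|April|May|June|July|"
          "August|September|October|November|December")
DATE_RE = re.compile(r"\b(?:" + MONTHS + r")\s+\d{1,2},\s+\d{4}\b")


def find_dates(text):
    """Return (label, matched_text, (start, end)) tuples; end is exclusive."""
    return [("DATE", m.group(0), (m.start(), m.end())) for m in DATE_RE.finditer(text)]


sample = "This Agreement is effective as of June 1, 2019 and ends December 31, 2020."
for label, value, (start, end) in find_dates(sample):
    print(label, value, start, end)
```

A real pipeline would use a proper NLP engine, but whatever it is, it needs to give you character offsets like these.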

Enter LexNLP

Here we’ll use LexNLP’s definition extraction capability. Definitions are useful for contract drafting assistants and for knowledge management/precedent search applications.

Preparation

I assume you have a functional Python installation.

To follow along, you’ll need a Native Documents dev id and secret. Get yours at https://developers.nativedocuments.com/

Then visit https://canary.nativedocuments.com and enter your dev id and secret. (There are several ways your dev id and secret can be handled, but for the purposes of this walkthrough, this is the easiest. In production, you’d compile them into your JavaScript.)

Next, install our Python tool:

```shell
pip install --upgrade http://downloads.nativedocuments.com/python/ndapi.tar.gz
```

On Windows, create C:\Users\name\.ndapi\config.default-config; on Linux/Mac, create ~/.ndapi/config.default-config.

The config.default-config file should have the form:

```json
{
  "dev_id": "YOUR DEV ID GOES HERE",
  "dev_secret": "YOUR DEV SECRET GOES HERE",
  "service_url": "https://canary.nativedocuments.com",
  "api_ver": "DEV"
}
```

That’s the Native Documents config done.
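If you would rather create that file from Python, the sketch below writes the same JSON to ~/.ndapi/config.default-config. On Windows, `Path.home()` normally resolves to C:\Users\name, so the same code covers both cases. The helper name `write_ndapi_config` and its `home` parameter (there so you can point it at a scratch directory) are our own; only the file location and the four fields come from the instructions above.

```python
import json
from pathlib import Path


def write_ndapi_config(dev_id, dev_secret, name="default-config", home=None):
    """Write <home>/.ndapi/config.<name> with the fields shown in the article."""
    home = Path(home) if home is not None else Path.home()
    config_dir = home / ".ndapi"
    config_dir.mkdir(parents=True, exist_ok=True)
    config = {
        "dev_id": dev_id,
        "dev_secret": dev_secret,
        "service_url": "https://canary.nativedocuments.com",
        "api_ver": "DEV",
    }
    path = config_dir / ("config." + name)
    path.write_text(json.dumps(config, indent=2))
    return path
```

For example, `write_ndapi_config("YOUR DEV ID", "YOUR DEV SECRET")` creates the default config in your home directory.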

Next, install LexNLP, with something like:

```shell
git clone https://github.com/LexPredict/lexpredict-lexnlp.git
cd lexpredict-lexnlp
python setup.py install
```

Now you can check that everything works:

```
$ wget http://downloads.nativedocuments.com/python/extractDefinitions.py
$ python
>>> exec(open("extractDefinitions.py").read())
>>> extractDefs("default-config", "https://www.ycombinator.com/docs/YC_Form_SaaS_Agreement.doc")
```

If you load the resulting URL in Chrome, you should see that document with its definitions identified. If you don’t see the document or the definitions, please comment below or at https://helpdesk.nativedocuments.com/

In what follows, we’ll walk through what just happened.

To do this, first you need a sample document (Word .doc or .docx format). We’ll assume you have saved a copy of YC_Form_SaaS_Agreement.doc in your working directory.

Overview

To recap, here’s what we need to do:

- load a document into Native Documents and export as text

- get the items of interest (using NLP)

- create annotation instructions from the results and attach them to the document

Then you can visualize the results, and/or get the result as a .docx.

Step 1: load a document

```
$ python
Python 3.6.8 (default, Jun 1 2019, 16:00:10)
>>> import ndapi
>>> ndapi.init(user="default-config")
True
>>> mydocx = open("./YC_Form_SaaS_Agreement.doc", "rb")
>>> sessionInfo = ndapi.upload_document_to_service(mydocx)
>>> print(sessionInfo)
{'nid': 'DEV7BDVM9BGGAIMAUI487AB49QUQS000000000000000000000000002I0THD1D829MQUI4EVGNMFDM860000', 'author': 'eyJ0eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9.eyJuYW1lIjoiTmF0aXZlIERvY3VtZW50cyIsIm5pY2tuYW1lIjoibmQifQ.tN0R0W3YjpwjnFBUmAj8dxfEMRtcNsXA677QcDtHb2s', 'rid': 'I2522'}
```

Notice the nid. That’s what we need to interact with our document.

```
>>> nid = sessionInfo['nid']
```

Aside: At this point you could look at your document in our editor.

Here’s a little function to compute the URL to open your document.

```python
def printEditUrl(nid, author):
    # Editor URL for the uploaded document, with the author token as a query parameter
    print("https://canary.nativedocuments.com/edit/" + nid + "?author=" + author)
```

To get the URL:

```python
printEditUrl(sessionInfo['nid'], sessionInfo['author'])
```

Go ahead and open that URL if you want to see the document.

Didn’t work? Have you visited https://canary.nativedocuments.com and entered your dev id and secret?
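If you would rather get the editor URL back as a string (to log it, or to open it with Python’s `webbrowser` module), a small variation of `printEditUrl` works. `edit_url` is our own helper, not part of ndapi. It builds the same URL but passes both values through `urllib.parse.quote`, which leaves values made only of letters, digits, `.`, `-` and `_` (like the nid and author token shown above) unchanged and percent-encodes anything else.

```python
from urllib.parse import quote

EDITOR_BASE = "https://canary.nativedocuments.com/edit/"


def edit_url(nid, author):
    """Return the same editor URL that printEditUrl prints, with both values URL-quoted."""
    return EDITOR_BASE + quote(nid, safe="") + "?author=" + quote(author, safe="")
```

For example, after `import webbrowser`, calling `webbrowser.open(edit_url(sessionInfo['nid'], sessionInfo['author']))` opens the document in your default browser.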

Step 2: export as plain text

Like most NLP/AI, LexNLP consumes plain text. So let’s get that.

```
>>> ndRawText = ndapi.download_document_from_service(nid, mime_type="application/vnd.nativedocuments.raw.text+text")
>>> print(ndRawText.data)
b"urn:nd:stream:5591RH3HVNOMMJM0LOH9DIDRTENGG00000…Jf0seo4p6vv-sf\nSAAS SERVICES ORDER FORM\nCustomer:\t\t\nContact: \nAddress:\nPhone: \n \nE-Mail: \n\nServices: [Name and briefly describe services here]
```

You can see the result consists of a stream header and then the text of the document, separated by a newline character.

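Let’s put the header to one side for the moment. Splitting at the first newline only gives us the header in splits[0] and the document text in splits[1]:

```python
splits = ndRawText.data.decode().split('\n', 1)
```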
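To see what that split does without calling the service, here is the same operation on a shortened sample. The stream header below is made up and much shorter than a real one. Because `split('\n', 1)` splits only at the first newline, the newlines inside the document text stay intact, and any character offsets LexNLP later reports are relative to the start of `splits[1]`, not to the whole download.

```python
# Made-up, shortened stand-in for ndRawText.data (real headers are longer).
raw = b"urn:nd:stream:EXAMPLEHEADER\nSAAS SERVICES ORDER FORM\nCustomer:\t\t\nContact: \n"

splits = raw.decode().split("\n", 1)  # split at the first newline only
header, body = splits

print(repr(header))
print(repr(body))
```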

Step 3: talk to the NLP

Here we talk to LexNLP to get the definitions:

```python
import lexnlp.extract.en.definitions

print(list(lexnlp.extract.en.definitions.get_definitions_explicit(splits[1])))
```

```
[('Service(s)', 'Services: [Name and briefly describe services here] ___________ (the “Service(s)”).', (140, 158)), ..., ('Support Hours', 'EXHIBIT C\nSupport Terms\n[OPTIONAL]\nCompany will provide Technical Support to Customer via both telephone and electronic mail on weekdays during the hours of 9:00 am through 5:00 pm Pacific time, with the exclusion of Federal Holidays (“Support Hours”).', (22449, 22466))]
```

For each definition LexNLP finds, you can see it returns the defined term, the meaning, and the character range at which the defined term was found.

We simply re-use that character range to tell Native Documents where to attach the annotation.
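Before sending ranges anywhere, it can be worth checking what each range actually covers. The sketch below builds one fake result in the same `(term, meaning, (start, end))` shape, using `str.find` on a made-up sentence, then slices the text with the range. `range_slices` is our own helper; with real output you would pass `splits[1]` and the list returned by `get_definitions_explicit(splits[1])`.

```python
def range_slices(text, definitions):
    """Return (term, covered_text) pairs by slicing text with each (start, end) range."""
    return [(term, text[start:end]) for term, _meaning, (start, end) in definitions]


# Made-up text and a hand-built result in the same tuple shape as LexNLP's output.
text = 'Acme Corp. ("Company") will provide the Services.'
start = text.find('"Company"')
fake_definitions = [("Company", text, (start, start + len('"Company"')))]

for term, covered in range_slices(text, fake_definitions):
    print(repr(term), "->", repr(covered))
```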

Step 4: convert NLP response to annotation instruction

For Native Documents to display the annotations, we need to send it the stream header followed by one JSON-style entry per annotation, of the form:

```
urn:nd:stream:5591RH3HVNOMMJM0LOH9DIDRTENGG00000…Jf0seo4p6vv-sf
"[140..157):0.MEANING": { "meaning": "Services: [Name and briefly describe services here].. (the Service(s))"}
...
"[22449..22465):17.MEANING": { "meaning": "EXHIBIT C Support Terms [OPTIONAL] Company will provide.. Holidays (Support Hours)"}
```

To craft this, let’s use a couple of helper functions:

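```python
import lexnlp.extract.en.definitions


class ndComment:
    """One range annotation: [start..end), a slot number, a label and the comment text."""

    def __init__(self, start, end, slot, mng, content):
        self.start = start
        self.end = end
        self.slot = slot
        self.mng = mng
        # Flatten newlines and tabs and drop double quotes so the text fits in one quoted value
        self.content = content.replace('\n', ' ')
        self.content = self.content.replace('\t', ' ')
        self.content = self.content.replace('"', '')

    def toString(self):
        # e.g. "[140..157):0.MEANING": { "meaning": "..."} followed by a newline
        return f'"[{self.start}..{self.end}):{self.slot}.{self.mng}": {{ "meaning": "{self.content}"}} \n'


def constructPayload():
    # splits comes from Step 2: splits[0] is the stream header, splits[1] the document text
    definitions = lexnlp.extract.en.definitions.get_definitions_explicit(splits[1])
    # Each definition is (term, meaning, (start, end)); the end offset is reduced by one
    results = [ndComment(d[2][0], d[2][1] - 1, ind, "MEANING", d[1]).toString()
               for ind, d in enumerate(definitions)]
    # Stream header, a newline, then one annotation entry per line
    return splits[0] + "\n" + ''.join(results)
```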

Now we can use that to attach the annotations to the document:

```python
annotations = constructPayload()
ndapi.upload_document_to_service(annotations, "ranges", nid=nid,
                                 mime_type="application/vnd.nativedocuments.raw.text+text")
```
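Because `constructPayload` reads the global `splits` and calls LexNLP itself, it is awkward to try out on its own. One possible refactoring, sketched below, takes the stream header and the definition tuples as arguments instead. The name `build_payload` and its parameters are our own. It follows the same conventions as `ndComment.toString` (slot = position in the list, end offset reduced by one, newlines and tabs flattened, double quotes removed from the meaning), on the assumption that the service expects exactly that format.

```python
def build_payload(header, definitions, label="MEANING"):
    """Return the ranges payload: stream header, then one annotation entry per line.

    definitions: iterable of (term, meaning_text, (start, end)) tuples, as LexNLP returns them.
    """
    entries = []
    for slot, (_term, meaning_text, (start, end)) in enumerate(definitions):
        # Same clean-up as ndComment: newlines/tabs become spaces, double quotes are dropped
        meaning = meaning_text.replace("\n", " ").replace("\t", " ").replace('"', "")
        # Same range convention as constructPayload: end offset reduced by one
        entries.append(f'"[{start}..{end - 1}):{slot}.{label}": {{ "meaning": "{meaning}"}} \n')
    return header + "\n" + "".join(entries)


# Made-up header and one hand-built definition in LexNLP's tuple shape
payload = build_payload("urn:nd:stream:EXAMPLE", [("Fees", 'The "Fees"\nare due.', (4, 10))])
print(payload)
```

In the walkthrough you would call it as `build_payload(splits[0], lexnlp.extract.en.definitions.get_definitions_explicit(splits[1]))` and upload the result in the same way.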

Visualizing the Annotated Document

Now let’s look at the document in the editor.

First, please visit https://canary.nativedocuments.com if you haven’t already, then enter your dev id and secret.

Once you have done that, get the URL to load with:

```python
printEditUrl(sessionInfo['nid'], sessionInfo['author'])
```

If you look at it in Chrome, you may notice some debugging output in the annotations; in a follow-up article, I’ll show you how to customize the appearance of the comment annotations.

Putting it all together

You can find the code at http://downloads.nativedocuments.com/python/extractDefinitions.py

It contains a function extractDefs which takes two arguments: the name of your .ndapi config (e.g. “default-config”) and the path or URL of a Word document (.doc or .docx). For example:

```
$ python
>>> exec(open("extractDefinitions.py").read())
>>> extractDefs("default-config", "https://www.ycombinator.com/docs/YC_Form_SaaS_Agreement.doc")
```

It returns a URL you can open in your web browser. To make it easy to share a working URL with colleagues (but not for production!), the URL includes your dev id as a parameter.

You can modify it to extract other info supported by LexNLP, or indeed, to substitute some other NLP engine entirely.
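As a rough illustration of swapping engines, anything that produces tuples in LexNLP’s `(term, meaning, (start, end))` shape can feed the same payload code. The function below is a hypothetical stand-in engine: a regular expression for capitalized terms defined in quotes inside parentheses, such as ("Customer") or (“Customer”). It is far cruder than LexNLP, and it returns only the matched parenthetical as its text, not the surrounding sentence.

```python
import re

# Hypothetical stand-in engine: finds ("Term") definitions, with straight or curly quotes.
QUOTED_TERM_RE = re.compile(r'\(["“]([A-Z][A-Za-z ]*)["”]\)')


def regex_definitions(text):
    """Yield (term, matched_text, (start, end)) tuples, mirroring LexNLP's output shape."""
    for m in QUOTED_TERM_RE.finditer(text):
        yield m.group(1), m.group(0), (m.start(), m.end())


text = 'Acme Inc. ("Customer") and Beta LLC (“Company”).'
for term, matched, (start, end) in regex_definitions(text):
    print(term, matched, start, end)
```

For example, constructPayload could call `regex_definitions(splits[1])` in place of `get_definitions_explicit(splits[1])`, since it only uses the second and third element of each tuple.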

Feedback and comments welcome.

Hooking up an AI pipeline to a Word document in Python was originally published in HackerNoon.com on Medium, where people are continuing the conversation by highlighting and responding to this story.
