
Photo by Patrick Hendry on Unsplash


AI systems are moving from development and testing into production. At their heart, AI systems are simply computation, so deploying a system remotely amounts to providing remote computation. If the system involves multiple steps of computation, there is a lot to gain from providing that computation as composable chunks that can be combined.

I’m describing a concept that is usually called a pipeline. You package each bit of computation into a component. Then you send data through multiple components in sequence. Pipelines can be a very valuable deployment tool. They allow us to reuse bits of computation and make our architecture more flexible, allowing us to respond to new demands with agility.
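As a minimal sketch of the idea, the snippet below treats each component as a plain function and sends data through a list of them in order. The names `run_pipeline`, `strip_whitespace`, and `to_upper` are made up for illustration; in a real deployment each component would be a remote call rather than a local function.

```python
from functools import reduce
from typing import Callable, Iterable

# A component is anything that takes one input and returns one output.
Component = Callable[[object], object]

def run_pipeline(components: Iterable[Component], data: object) -> object:
    """Send data through each component in order, feeding each output to the next."""
    return reduce(lambda value, component: component(value), components, data)

# Hypothetical stand-in components.
def strip_whitespace(text: str) -> str:
    return text.strip()

def to_upper(text: str) -> str:
    return text.upper()

result = run_pipeline([strip_whitespace, to_upper], "  cat  ")
print(result)  # CAT
```

Because every component shares the same one-in, one-out shape, swapping one implementation for another only means changing an entry in the list.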

This post is divided into two parts. The first (which you are reading now) explains ways to architect Machine Learning pipelines with a concrete example. The second part dives into all the glorious details of implementing the different architectures with GraphPipe, including an implementation of the real-world example.

Why Pipeline?

Constructing pipelines provides many of the same advantages that decoupling does in software development. These advantages include:

- Flexibility: Units of computation are easy to replace. If you discover a better implementation for one chunk, you can replace it without changing the rest of the system.

- Scalability: Each bit of computation is exposed via a common interface. If any part becomes a bottleneck, you can scale that component independently. Common scaling techniques might involve a load balancer or additional backends.

- Extensibility: When the system is divided into meaningful pieces, it creates natural points of extension for new functionality.

Classy Image Recognition

Pipelining becomes much clearer with a concrete example. Let's discuss how we might build the backend of an example application. Our example app is Classy, a mobile app that classifies images. This application allows a user to select one or more images and it determines the class of each image. It also allows the user to enter one or more urls to get classifications for images from the web. We are targeting users with older phones and limited mobile bandwidth, so the majority of our computation must be done on the server side. We are going to architect (and pipeline!) the server components of Classy.

For classification, we will use an implementation of the venerable VGG model (a convolutional classification model that placed at the top of the 2014 ImageNet competition). We might start by deploying the VGG model with a GraphPipe server. Normally, VGG accepts images as a 224x224x3 array of floating point values. Additionally, it expects the image to be preprocessed: the channels must be in BGR order (instead of the normal RGB), and each channel must be normalized by subtracting a constant value that is the average value for that channel across the entire ImageNet data set.
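The two preprocessing steps can be sketched in a few lines of plain Python. The mean values below are the ones commonly quoted for the original Caffe VGG weights; they are an assumption here and should be checked against the model you actually deploy. Resizing to 224x224 is left out, and the function names are hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]

# Assumed per-channel ImageNet means, listed in BGR order.
VGG_MEAN_BGR = (103.939, 116.779, 123.68)

def preprocess_pixel(rgb: Pixel) -> Pixel:
    """Reorder one RGB pixel to BGR and subtract the per-channel mean."""
    r, g, b = rgb
    bgr = (b, g, r)
    return tuple(value - mean for value, mean in zip(bgr, VGG_MEAN_BGR))

def preprocess_image(image: List[List[Pixel]]) -> List[List[Pixel]]:
    """Apply the pixel transform to every pixel of a rows-by-columns image."""
    return [[preprocess_pixel(pixel) for pixel in row] for row in image]

# A tiny 1x2 "image" instead of a full 224x224 one.
tiny = [[(255.0, 128.0, 0.0), (0.0, 0.0, 0.0)]]
processed = preprocess_image(tiny)
print(processed)
```

A production preprocessor would do the same arithmetic on whole arrays at once, but the per-pixel form makes the channel swap and the subtraction easy to see.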

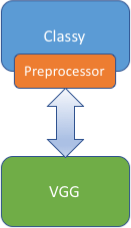

Initial Architecture

Classy Initial Architecture

The simplest architecture for our system is to do the preprocessing on the mobile side. The application loads images from internal storage or downloads them from urls, does the preprocessing internally, and then sends the data to the remote VGG for inference.

There are a few drawbacks to our initial architecture:

- Sending uncompressed pixel data is inefficient. A 224x224x3 array of 32-bit floats is about 600 KB (224 x 224 x 3 x 4 bytes). It would be much better if we could just send compressed jpgs (a few KB) or urls (a few bytes) directly.

- Preprocessing on the phone uses cpu and may drain the battery (admittedly loading a jpg is not very processor-intensive, but one could imagine more complex preprocessing steps where this would be a concern).

- Preprocessing on the client side couples the client to the server implementation. If you decide to replace VGG with another model like Inception (which has different preprocessing requirements), you will have to update your client code.
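The payload size in the first drawback is easy to check. The sketch below assumes the values are sent as 32-bit floats; the example url is made up.

```python
HEIGHT, WIDTH, CHANNELS = 224, 224, 3
BYTES_PER_FLOAT32 = 4  # assumption: each value travels as a 32-bit float

# Size of one uncompressed, preprocessed image.
raw_bytes = HEIGHT * WIDTH * CHANNELS * BYTES_PER_FLOAT32
print(raw_bytes)  # 602112

# A url is tiny by comparison (hypothetical example url).
url_bytes = len("https://example.com/cat.jpg".encode("utf-8"))
print(raw_bytes // url_bytes)  # roughly how many times larger the array is
```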

Our refined architectures will attempt to remove these drawbacks.

Client Sequencing

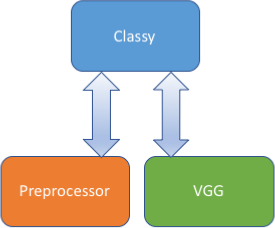

The first win is to move the preprocessor onto the server side. This will become a component that takes one or more jpgs and does the preprocessing necessary to prepare them for VGG. Note that we could include the preprocessing in the VGG model itself, but keeping it separate allows for other preprocessors to be plugged into the backend; we may want to support pngs or gifs, for example.

Client Sequencing Architecture


In our new architecture, Classy loads the image, sends it to the preprocessor, and sends the result to VGG. We refer to this pattern as client sequencing. Each model provides some computation, and the client makes remote requests to each model in turn. Client sequencing is the most flexible approach: The computations can be used individually, or even reordered.
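A rough sketch of client sequencing follows. Everything here is a stand-in: the endpoint urls are invented, `remote_call` simulates a network request with a dictionary lookup, and the "models" are toy functions rather than a real preprocessor or VGG.

```python
from typing import Callable, Dict, List

PREPROCESSOR_URL = "http://preprocessor.example/"  # hypothetical endpoint
VGG_URL = "http://vgg.example/"                    # hypothetical endpoint

def fake_preprocessor(jpg_bytes: bytes) -> List[float]:
    """Stand-in for the preprocessor: turn bytes into a list of floats."""
    return [float(b) for b in jpg_bytes]

def fake_vgg(pixels: List[float]) -> str:
    """Stand-in for VGG: a toy rule instead of a real classifier."""
    return "bright" if sum(pixels) / len(pixels) > 127 else "dark"

ENDPOINTS: Dict[str, Callable] = {
    PREPROCESSOR_URL: fake_preprocessor,
    VGG_URL: fake_vgg,
}
calls: List[str] = []  # records every request that crosses the simulated network

def remote_call(url: str, payload):
    """Simulated remote request; a real client would make an HTTP call here."""
    calls.append(url)
    return ENDPOINTS[url](payload)

def classify_client_sequenced(jpg_bytes: bytes) -> str:
    # The client drives both steps and carries the intermediate result itself.
    pixels = remote_call(PREPROCESSOR_URL, jpg_bytes)
    return remote_call(VGG_URL, pixels)

label = classify_client_sequenced(bytes([200, 220, 240]))
print(label, calls)
```

The client makes two round trips, and the intermediate `pixels` value passes through the client each time. That is what makes the steps reusable and reorderable.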

With this new architecture, Classy now has no specific logic tying it to the server classification implementation. It also doesn’t need to burn cpu to do preprocessing. Unfortunately, the bandwidth requirements have actually gotten worse! Classy now has to send the jpg, receive the full preprocessed array, and then send that array on to VGG, which is more data than it moved in the initial architecture. We’ll solve this problem in the next iteration.

Server Sequencing

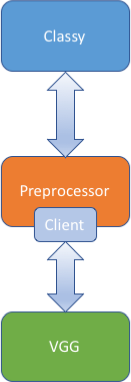

Rather than sending the data to two different models, it would be much more efficient for Classy to send the jpgs to the preprocessor, and then have the preprocessor send the data to VGG, retrieve the classes, and return them.

Server Sequencing Architecture


We call this architecture server sequencing. One advantage of this approach is that the logic for communicating with VGG is hidden behind the preprocessor. As far as Classy is concerned, it is only interacting with a single model that expects jpgs and returns classes. This comes at the cost of embedding the code to communicate with VGG inside the preprocessor.
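In code, server sequencing might look something like the sketch below. The downstream model is fixed when the preprocessor is built, so the client sees one call that takes jpg bytes and returns a class. The function names and the toy classifier are assumptions for illustration only.

```python
from typing import Callable, List

def fake_vgg(pixels: List[float]) -> str:
    """Stand-in for VGG: a toy rule instead of a real classifier."""
    return "bright" if sum(pixels) / len(pixels) > 127 else "dark"

def make_preprocessor(call_vgg: Callable[[List[float]], str]) -> Callable[[bytes], str]:
    """Build a preprocessor that forwards its output to a fixed downstream model."""
    def handle(jpg_bytes: bytes) -> str:
        pixels = [float(b) for b in jpg_bytes]  # stand-in for real decoding
        return call_vgg(pixels)                 # server-to-server hop
    return handle

# The coupling to VGG is decided on the server side, not by the client.
preprocessor = make_preprocessor(fake_vgg)

label = preprocessor(bytes([10, 20, 30]))  # the client's single request
print(label)  # dark
```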

In general, server sequencing can be much more efficient than client sequencing, especially if the first and second components are physically close together, as in our design. It does sacrifice a bit of flexibility, however. The preprocessor is tightly coupled to VGG. In our example this makes sense, but there are times when we would prefer something a bit more flexible.
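To put rough numbers on the efficiency difference, the sketch below counts the bytes that cross the mobile link for one image under each architecture so far. The jpg and label sizes are assumed values chosen only for illustration, and protocol overhead is ignored.

```python
RAW_ARRAY_BYTES = 224 * 224 * 3 * 4  # one preprocessed image as 32-bit floats
JPG_BYTES = 5_000   # assumed size of a compressed jpg
LABEL_BYTES = 16    # assumed size of a returned class label

mobile_link_bytes = {
    # Phone uploads the raw array, gets a label back.
    "initial": RAW_ARRAY_BYTES + LABEL_BYTES,
    # Phone uploads the jpg, downloads the array, uploads it again, gets a label.
    "client sequencing": JPG_BYTES + 2 * RAW_ARRAY_BYTES + LABEL_BYTES,
    # Phone uploads the jpg, gets a label back.
    "server sequencing": JPG_BYTES + LABEL_BYTES,
}

for name, size in mobile_link_bytes.items():
    print(f"{name}: {size} bytes")
```

Under these assumptions the array still travels between the preprocessor and VGG in the server-sequenced design, but that hop stays inside the data center instead of using the phone's connection.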

Hybrid Sequencing

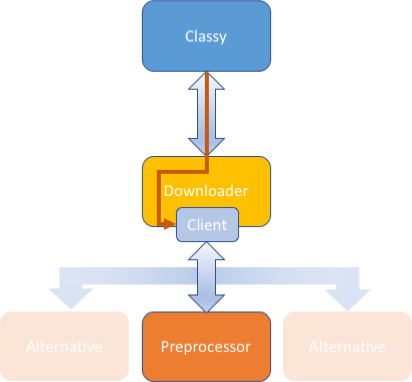

There is one other inefficiency in our system. When we are classifying images based on web urls, we must download them first. We could save bandwidth with a component that downloads the images for us and passes them along to the preprocessor. This component takes a list of urls, and returns the classifications that it gets from the preprocessor.

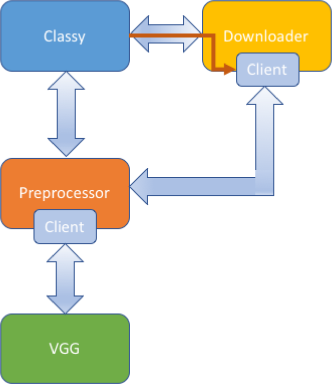

Hybrid Sequencing Architecture


We could use server sequencing for this component, but downloading images and passing them along to other components could be useful in many pipelines. We therefore will use a modification called hybrid sequencing.

In this architecture, the downloader sends data to the preprocessor just as in server sequencing. The difference is that Classy is expected to provide configuration specifying where the preprocessor is located. This enables the downloader to be used by other clients in other pipelines as well.

Essentially, the client sends its data along with information that says: “Do your computation, then send the results to the model located over there and return the results to me”. This keeps the performance of server sequencing, but returns some of the flexibility of client sequencing. It does have some limitations when compared to the client sequencing approach. For example, intermediate results from the first model cannot be retrieved and used locally.
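A small sketch of hybrid sequencing is shown below. The request carries both the data and the location of the next stage, and the downloader forwards to whatever it is told. The request keys (`urls`, `next_stage`), the endpoint url, and the fake web contents are all hypothetical.

```python
from typing import Callable, Dict, List

# Pretend web: maps urls to image bytes so the example needs no network.
FAKE_WEB: Dict[str, bytes] = {
    "http://images.example/a.jpg": bytes([250, 240]),
    "http://images.example/b.jpg": bytes([5, 15]),
}

def fake_preprocessor(images: List[bytes]) -> List[str]:
    """Stand-in for the server-sequenced preprocessor and VGG pair."""
    return ["bright" if sum(img) / len(img) > 127 else "dark" for img in images]

ENDPOINTS: Dict[str, Callable[[List[bytes]], List[str]]] = {
    "http://preprocessor.example/": fake_preprocessor,
}

def downloader(request: dict) -> List[str]:
    """Fetch every url, then forward the images to the stage named in the request."""
    images = [FAKE_WEB[url] for url in request["urls"]]
    next_stage = ENDPOINTS[request["next_stage"]]  # chosen by the client
    return next_stage(images)

labels = downloader({
    "urls": ["http://images.example/a.jpg", "http://images.example/b.jpg"],
    "next_stage": "http://preprocessor.example/",
})
print(labels)  # ['bright', 'dark']
```

The downloader contains no reference to a particular preprocessor, so another client could reuse it with a different `next_stage`. The downloaded images never return to the client, which is the limitation noted above.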

Putting it Together

Classy Final Architecture

We will combine the last two sequencing architectures into our final architecture. Classy needs to support local images as well as remote urls, so in this version Classy can communicate with the downloader or the preprocessor. Note that because we are using a standard protocol for remote communication, the downloader can use the same client code to talk to the preprocessor that Classy uses internally.

This architecture is flexible: Classy can send local jpgs to the preprocessor, or it can send urls to the downloader. When it talks to the downloader, it sends the location of the preprocessor as the next stage. If we decide to replace VGG with an alternative implementation, we can simply replace VGG and the preprocessor; the downloader and the client do not need to be changed.

This architecture is scalable: all of the communication is GraphPipe over HTTP. We can colocate models for efficient transmission, or we can put load balancers and multiple backends into any part of the pipeline that is getting overloaded.

Finally, this architecture is extensible: we could add another preprocessor to handle pngs or gifs and use the same VGG model behind it. We could even use the same downloader for urls if we provide config pointing to the new preprocessor along with our request.
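The extensibility claim can be sketched as follows: two preprocessors sit in front of the same stand-in classifier, and the downloader reaches either one depending on the location passed with the request. The endpoint urls, the toy "png" format with a one-byte header, and all function names are invented for this example.

```python
from typing import Callable, Dict, List

def fake_vgg(pixels: List[float]) -> str:
    """Stand-in for VGG, shared by every preprocessor."""
    return "bright" if sum(pixels) / len(pixels) > 127 else "dark"

def make_preprocessor(decode: Callable[[bytes], List[float]]):
    """Build a preprocessor for one image format in front of the shared model."""
    def handle(images: List[bytes]) -> List[str]:
        return [fake_vgg(decode(image)) for image in images]
    return handle

def decode_jpg(data: bytes) -> List[float]:
    return [float(b) for b in data]

def decode_png(data: bytes) -> List[float]:
    return [float(b) for b in data[1:]]  # toy format: skip a one-byte header

ENDPOINTS = {
    "http://jpg-preprocessor.example/": make_preprocessor(decode_jpg),
    "http://png-preprocessor.example/": make_preprocessor(decode_png),
}
FAKE_WEB = {"http://images.example/a.png": bytes([0, 200, 250])}

def downloader(urls: List[str], next_stage: str) -> List[str]:
    """Unchanged downloader: it forwards to whichever stage the request names."""
    return ENDPOINTS[next_stage]([FAKE_WEB[url] for url in urls])

local = ENDPOINTS["http://jpg-preprocessor.example/"]([bytes([10, 20])])
remote = downloader(["http://images.example/a.png"], "http://png-preprocessor.example/")
print(local, remote)  # ['dark'] ['bright']
```

Adding the png path only required registering one more preprocessor; the downloader and the classifier were left untouched.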

Conclusion

This post provided an overview of machine learning model pipelining using a real example application. Pipelining will become more common as we see AI systems broadly deployed into production. Part II in this series shows how to implement the three sequencing approaches discussed above and walks through the implementation of Classy.

Machine Learning Model Pipelines: Part I was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
