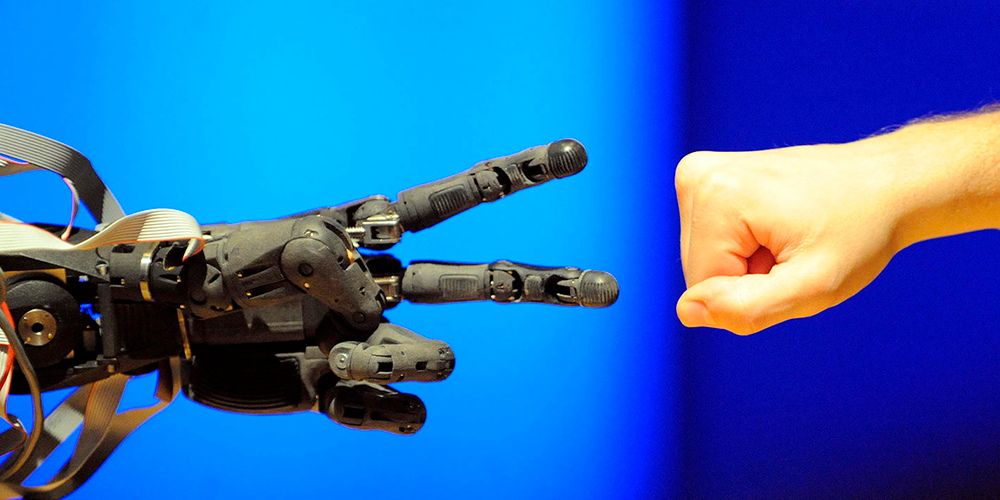

Over the last five years, the development of artificial intelligence has gained incredible speed. In the 2000s we did not even think about autopilot in cars, AI in smartphone cameras, or teaching robots to backflip (hey there, Boston Dynamics!). At the same time, more talk about the need to restrain artificial intelligence has started to pop up. Not only authors in dedicated media but also leading entrepreneurs such as Elon Musk have spoken about the topic numerous times.

“Until people see robots going down the street killing people, they don’t know how to react because it seems so ethereal. AI is a rare case where I think we need to be proactive in regulation instead of reactive. Because I think by the time we are reactive in AI regulation, it’s too late.”

This sounds convincing and scary at the same time. But right now artificial intelligence restrains itself. For robots to go out on the streets and kill people, they would first need to get past this restraint, and that is not something that can be done in 10 minutes.

Artificial intelligence is math based on probabilities. This means there will always be errors during task execution. Many fields cannot tolerate incorrect task execution: self-driving cars, law, medicine. For a better understanding, let’s look at the example of self-driving cars in the form in which they currently exist. Artificial intelligence can identify objects in the car’s path with 97.5% accuracy, so the probability of error is 2.5%. If every such error led to an accident, then out of 1,000 rides with passengers, 25 could end in a car crash. Releasing cars with such an error rate is like gathering data about Facebook users and hoping no one will notice. This probability of error is the restriction on artificial intelligence. If we look more closely, we see that the technology is most popular in areas that do not suffer from errors: camera masks, messengers, and other entertainment apps.
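The arithmetic behind the 1,000-rides figure can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions that the article implies rather than states: each ride is treated as a single, independent identification, and every misidentification is counted as a crash. The function names are hypothetical and chosen for this example.

```python
def expected_errors(accuracy: float, rides: int) -> float:
    """Expected number of rides with a misidentification.

    Assumption: one independent identification per ride.
    """
    return (1.0 - accuracy) * rides


def prob_at_least_one_error(accuracy: float, rides: int) -> float:
    """Probability that at least one of `rides` rides contains an error.

    Assumption: rides are independent of each other.
    """
    return 1.0 - accuracy ** rides


if __name__ == "__main__":
    # 97.5% accuracy over 1,000 rides, the numbers used in the article.
    print(round(expected_errors(0.975, 1000), 2))
    # Chance of at least one error in just 10 rides.
    print(round(prob_at_least_one_error(0.975, 10), 4))
```

Under these assumptions the expected count comes out at 25 rides per 1,000, and the chance of at least one error grows quickly as the number of rides increases, which is why even a small per-ride error rate matters at scale.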

Artificial intelligence will appear in the most important aspects of our lives only when the probability of error has been reduced to zero. Otherwise it is an unjustified risk that only an idiot would take. The next 5–10 years will be spent on getting past this restriction. And it would not be so bad to live in a world where we can create a doctor who works with zero probability of error. But if a person decides to create a perfect robot whose main function is killing, that person would be no different from a weapons maker. Maybe we should focus on restricting such people instead.

Artificial Intelligence Restriction was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
