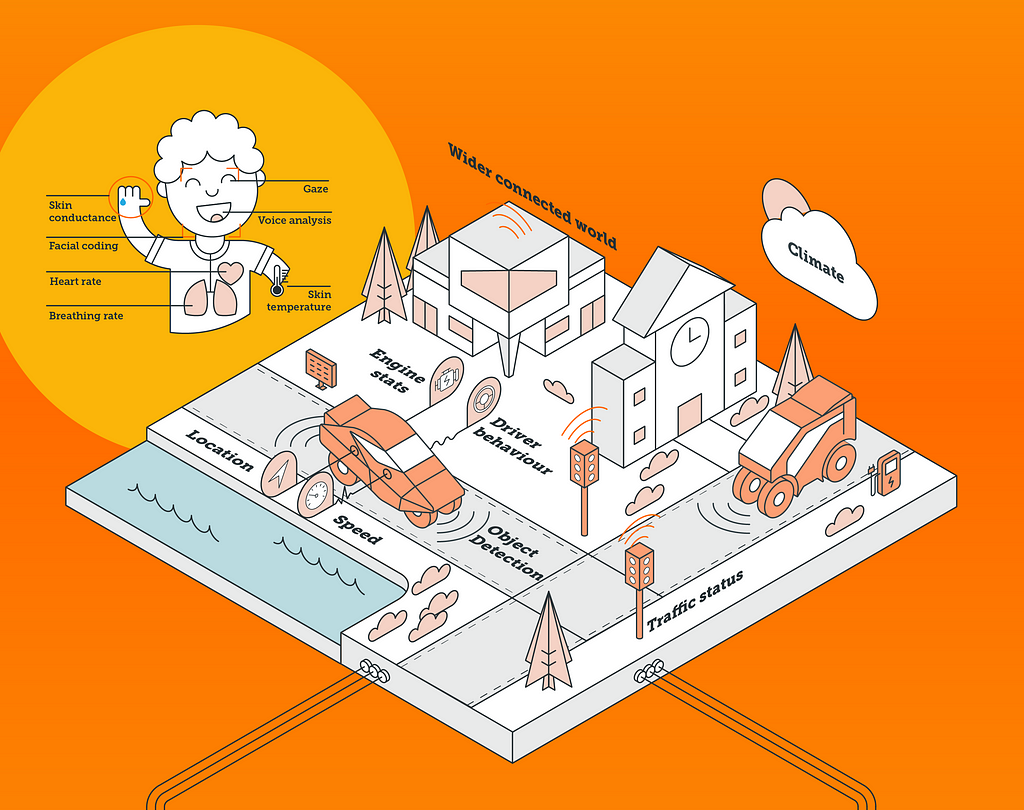

As we and others have already stated, our vehicles will soon know how we feel and respond to our emotions in real time. So what kind of technology and processes will underpin this new era of emotionally intelligent transport solutions?

See the accompanying infographic for a quick tour, or read on to get a little deeper into the weeds.

Take a tour through the world of empathic mobility in the infographic accompanying this story – see link above.

Without diving too deep into the foundations of how people’s emotions are measured, let’s be clear on the basic premise:

Changes in a person’s physiology can indicate changes in their emotional state.

In other words, we can infer an emotional response from something changing in or on the body: heart rate, facial expression, voice tone, breathing rate, and so on. And increasingly affordable and accurate sensors now allow us to measure these signals, and so infer emotions, in almost any environment.
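To make the premise concrete, here is a minimal Python sketch that flags a physiological change by comparing a new reading with a person's own resting baseline. The z-score approach, the 2.0 threshold and the sample heart-rate values are illustrative assumptions. This article does not describe this method.

```python
from statistics import mean, stdev
from typing import Sequence


def deviation_from_baseline(baseline: Sequence[float], current: float) -> float:
    """How many standard deviations `current` lies from the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation; needs 2+ values
    if sigma == 0:
        return 0.0
    return (current - mu) / sigma


def is_notable_change(baseline: Sequence[float], current: float,
                      threshold: float = 2.0) -> bool:
    """Flag a reading that sits unusually far from this person's baseline."""
    return abs(deviation_from_baseline(baseline, current)) >= threshold


# Hypothetical resting heart-rate samples (beats per minute).
resting_hr = [62, 64, 63, 65, 61, 63]
print(is_notable_change(resting_hr, 64))  # False
print(is_notable_change(resting_hr, 80))  # True
```

A flagged change only says that something is different. Interpreting *why* it changed still needs the context discussed later in this piece.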

By feeding human data into our vehicles, we can train them to respond to our emotional state. This can provide many benefits, from life-saving safety features to comfort and entertainment. Your ride could be very different if it knows you are happy, anxious, distracted, tired, drunk or having a medical episode.

This is empathic mobility, and here is a quick run-through of some of the key technologies in the empathic mobility stack.

Firstly, Gather your Data

While there are many data signals that can be used to infer an emotional response, the in-vehicle environment has some unique restrictions and opportunities. The main data types currently being integrated into the next generation of transport include:

Facial Coding

Video from a camera pointed at the occupant’s face can be processed to detect their facial expressions. This has the advantage of not requiring a big leap forward in hardware: you simply embed a camera in the cockpit, facing the occupant.

On the downside, the data signal can be affected by various factors, including lighting conditions, skin colour/ethnicity, glasses, facial hair and head movement. Any meaningful signal is also limited by how often we make facial expressions while driving, when most of the time there’s (hopefully) nobody in front of us to express our feelings towards.

‘Internal’ Biometrics

There are various hidden, nonconscious changes in our bodies that can give a continuous, high-frequency readout of a person’s emotional state. In our experience, the top players on the list are heart rate, skin conductance and breathing rate. These are typically measured using consumer- or medical-grade wearables. They can be insightful sources of information, but we can’t expect everyone to don a wristband or chest strap every time they want to travel somewhere.

The detection of biometric signals is starting to move away from the body and into less intrusive formats, such as sensors in the seat. There are even remote, non-contact ways to measure things like heart rate and breathing, typically by bouncing a signal off the user’s body. The leading technologies in this space include infrared cameras, lasers and radar. We’ve spoken to several automakers who are exploring how to integrate this kind of tech into their next range of vehicles. We have also spoken to tech companies whose data could be added to the mix to build a unified solution.
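Heart rate is usually captured as a series of RR intervals, which are the times between successive heartbeats. Both mean heart rate and heart rate variability (HRV) can be derived from these intervals. The sketch below computes RMSSD (root mean square of successive differences), which is one common time-domain HRV measure. The article does not specify which HRV measure any system uses, and the interval values are made up.

```python
import math
from typing import Sequence


def mean_heart_rate_bpm(rr_intervals_ms: Sequence[float]) -> float:
    """Average heart rate implied by a list of RR intervals in milliseconds."""
    mean_rr = sum(rr_intervals_ms) / len(rr_intervals_ms)
    return 60_000 / mean_rr


def rmssd(rr_intervals_ms: Sequence[float]) -> float:
    """Root mean square of successive differences between RR intervals (ms)."""
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Hypothetical RR intervals from a seat sensor or wearable (ms).
rr = [800, 810, 790, 805, 795]
print(mean_heart_rate_bpm(rr))  # 75.0
print(round(rmssd(rr), 1))      # 14.4
```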

Voice

A microphone is all you need to send a user’s voice to software that can analyse it for patterns that indicate emotional states. As with cameras, this has the advantage of requiring fairly low-fi sensor hardware. However, some fancy software juju is still needed to eliminate background noise, separate multiple voices and so on. Also, in the same way that we don’t always show facial expressions, voice analysis only works when the occupants are speaking.
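Voice analysis only helps while someone is talking, so a pipeline would typically gate the audio first. Here is a deliberately crude Python sketch of an energy-threshold voice activity detector. It splits the samples into fixed-length frames and keeps only those frames whose root-mean-square (RMS) level reaches a threshold. The frame length, threshold and toy signal are assumptions. Production systems use far more robust voice activity detection and noise suppression.

```python
import math
from typing import List, Sequence


def frame_rms(samples: Sequence[float], frame_len: int) -> List[float]:
    """RMS level of each complete, non-overlapping frame."""
    levels = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        levels.append(math.sqrt(sum(x * x for x in frame) / frame_len))
    return levels


def speech_frames(samples: Sequence[float], frame_len: int = 4,
                  threshold: float = 0.1) -> List[int]:
    """Indices of frames loud enough to be worth sending for emotion analysis."""
    return [i for i, level in enumerate(frame_rms(samples, frame_len))
            if level >= threshold]


# Toy signal: quiet, then a burst of "speech", then quiet again.
signal = [0.01, -0.02, 0.01, 0.0,
          0.5, -0.4, 0.45, -0.5,
          0.02, 0.0, -0.01, 0.01]
print(speech_frames(signal))  # [1]
```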

Context

Even if your next motor is riddled with weapons-grade physiological sensors, no AI system can flawlessly interpret your body’s behaviour for useful insights. A sudden spike in heart rate or a frowning face doesn’t necessarily mean much on its own. You could be approaching a potential accident, or maybe you just remembered that you forgot to put the cat out.

This is why we need all the context we can get our hands on. I already argued this point in more detail recently — see: Sensor Fusion: The Only Way to Measure True Emotion — so I won’t push it too hard here. But let’s take a brief skim through some of the context data types an empathic vehicle could use to flavour its understanding of the occupants. We can break them roughly into two main camps:

Vehicle. Sensors throughout the cockpit and under the hood can already tell us a great deal about what the vehicle is doing. These include engine performance stats, in-vehicle climate conditions, the status of the infotainment system, as well as information about the vehicle’s movement and its driver’s behaviour, such as speed, location, acceleration, braking, etc.

Environment. Outside the metal box on wheels, there is a vast ecosystem of data available to help us further understand what the people inside the box are feeling and doing. Consider how much more accurate and relevant the AI in the vehicle will become when external sources are feeding it data about other vehicles or pedestrians in the vicinity, the current weather conditions, the state of traffic up ahead, its occupants’ daily schedules or recent sleep patterns… the list is endless.

An emerging terminology for this matrix of data connections, which spans from inside the occupants’ bodies to the global IoT and cloud infrastructure, helps illustrate the network structure:

- V2H: vehicle-to-human.

- V2V: vehicle-to-vehicle.

- V2I: vehicle-to-infrastructure.

- V2X: vehicle-to-‘everything/anything’.

Oh, but there are so many more being thrown about already. How about:

- V2P: vehicle-to-pedestrian.

- V2D: vehicle-to-device.

- V2G: vehicle-to-grid.

- V2H (again!): vehicle-to-home.

- V2R: vehicle-to-road.

- V2S: vehicle-to-sensor.

- V2I (again!): vehicle-to-internet.

You get the idea.

Jump on over to our recent story if you want to dig a little deeper into the techniques and challenges of Measuring Emotion in the Driver’s Seat.

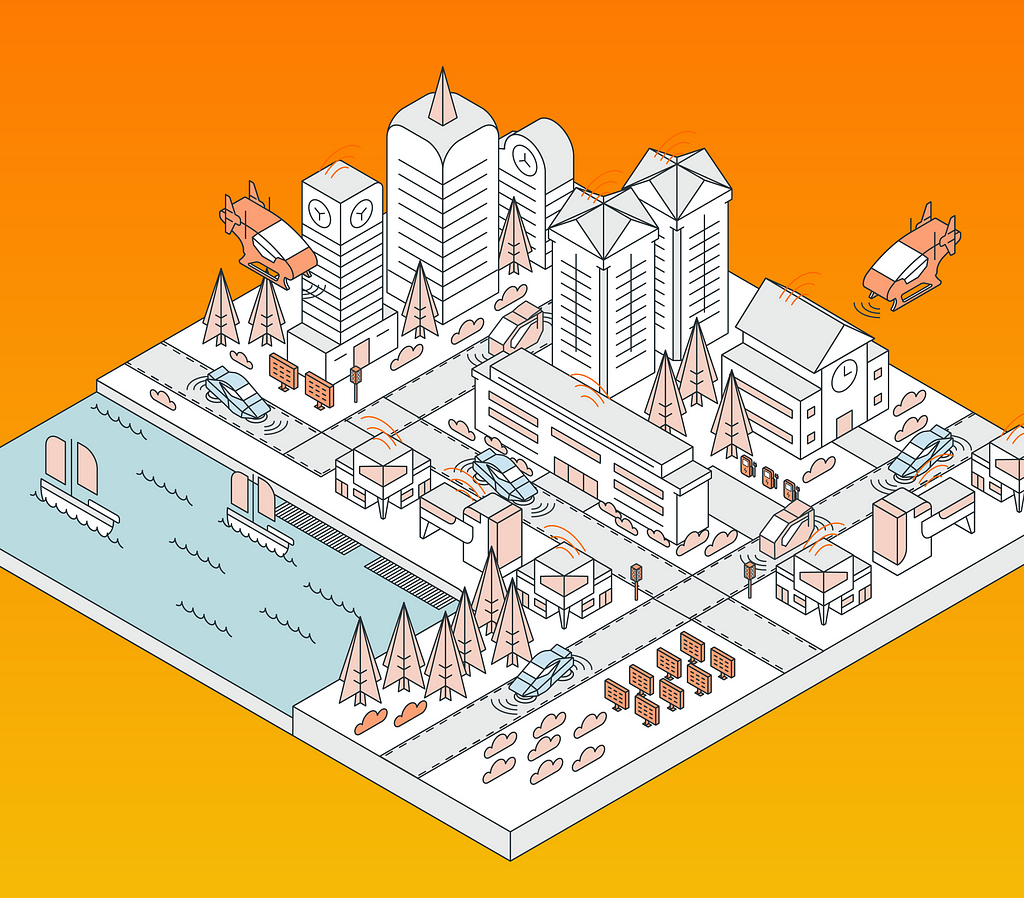

In the near future, vehicles will be empathic machines, connected to the data-rich world around them.

Next, Gently Stir your Data Streams

The current set of sensors running in a modern vehicle is already complicated enough. Over the automakers’ next few production cycles, we’re likely to see an explosion of sensors. This will mean not just more sensors, but also more types and greater volumes of collected data. As early as 2015, Hitachi claimed that ‘today, the amount of data generated by a connected vehicle exceeds 25GB/hour’. As vehicles get smarter, that digital traffic is likely to pile up fast.
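To give a sense of scale, Hitachi's 25GB/hour figure can be converted into a sustained data rate. This sketch assumes decimal units, where 1 GB is 10^9 bytes. With binary units the numbers shift slightly.

```python
from typing import Tuple


def hourly_volume_to_rates(gb_per_hour: float) -> Tuple[float, float]:
    """Convert a data volume in GB/hour to (megabytes/s, megabits/s), decimal units."""
    bytes_per_second = gb_per_hour * 1e9 / 3600
    megabytes_per_second = bytes_per_second / 1e6
    megabits_per_second = bytes_per_second * 8 / 1e6
    return megabytes_per_second, megabits_per_second


mb_s, mbit_s = hourly_volume_to_rates(25)
print(round(mb_s, 2), round(mbit_s, 2))  # 6.94 55.56
```

So 25GB/hour works out to a sustained stream of roughly 7 MB/s, or about 56 Mbit/s.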

To put each data type in its right place for subsequent processing, the vehicle needs to act as a sensor fusion platform. Its onboard computer does the heavy lifting of synchronising all the different types and frequencies of incoming data. Then, bandwidth permitting, the synchronised data can be passed to onboard or external processors, which derive insights about the situation of the vehicle and its occupants.
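One simple way to synchronise streams sampled at different rates is to resample them all onto a shared clock. Each stream's most recent reading is carried forward to every clock tick, which is known as a zero-order hold. The Python sketch below assumes that each stream is a list of (timestamp, value) pairs already sorted by time. The stream names and rates are hypothetical. Real fusion platforms also have to deal with clock drift, latency and missing data.

```python
from bisect import bisect_right
from typing import Dict, List, Optional, Tuple

Sample = Tuple[float, float]  # (timestamp in seconds, value)


def latest_value_at(stream: List[Sample], t: float) -> Optional[float]:
    """Most recent value at or before time t, or None if nothing has arrived yet."""
    times = [ts for ts, _ in stream]
    i = bisect_right(times, t)
    return stream[i - 1][1] if i > 0 else None


def align_streams(streams: Dict[str, List[Sample]], tick_s: float,
                  duration_s: float) -> List[Dict[str, Optional[float]]]:
    """Build one row per clock tick containing the latest value from every stream."""
    rows = []
    n_ticks = int(round(duration_s / tick_s)) + 1
    for k in range(n_ticks):
        t = k * tick_s
        row: Dict[str, Optional[float]] = {"t": t}
        for name, stream in streams.items():
            row[name] = latest_value_at(stream, t)
        rows.append(row)
    return rows


streams = {
    "heart_rate": [(0.0, 70.0), (1.0, 72.0), (2.0, 75.0)],  # ~1 Hz
    "breathing_rate": [(0.0, 14.0), (2.0, 15.0)],          # ~0.5 Hz
}
for row in align_streams(streams, tick_s=0.5, duration_s=2.0):
    print(row)
```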

Now Cook your Data

The emerging generation of emotion AI processing software is designed to churn through this deluge of sensor data for useful emotional insights. It comes in many flavours, but the basic idea is the same. The software outputs values (e.g. how happy, sad or anxious an occupant is) and events (e.g. a change of mood or behaviour), and these prompt the vehicle to execute an appropriate response.
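To illustrate the distinction between values and events, here is a small Python sketch. It takes a timeline of per-state scores (the values) and emits an event whenever the highest-scoring state changes. The state names, the scores and the "top state changed" rule are all hypothetical. Real emotion AI software may define events quite differently.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class MoodEvent:
    t: float                 # time of the change (seconds)
    previous: Optional[str]  # None for the first observed state
    current: str


def detect_mood_changes(timeline: List[Tuple[float, Dict[str, float]]]) -> List[MoodEvent]:
    """Emit an event each time the highest-scoring state differs from the last one."""
    events: List[MoodEvent] = []
    previous: Optional[str] = None
    for t, scores in timeline:
        current = max(scores, key=scores.get)
        if current != previous:
            events.append(MoodEvent(t, previous, current))
            previous = current
    return events


timeline = [
    (0.0, {"calm": 0.7, "anxious": 0.2, "happy": 0.1}),
    (1.0, {"calm": 0.6, "anxious": 0.3, "happy": 0.1}),
    (2.0, {"calm": 0.3, "anxious": 0.6, "happy": 0.1}),
]
for event in detect_mood_changes(timeline):
    print(event)
```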

Whether the signal from the occupant was a change in facial expression, heart rate variability, voice tone or other physiological response, the emotion AI processor would detect an event. As I’ve already mentioned, these physiological changes could be cross-correlated with other context data, such as the time of day, and weather or lighting conditions. The AI in the system makes probabilistic inferences about the situation, based on the available data, and informs the vehicle of its conclusion.
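The article doesn't say how the probabilistic inference is performed. The sketch below uses one textbook option, which is not necessarily what any emotion AI product uses. It combines a context-based prior with evidence from several sensor streams using naive Bayes in log-odds form. This assumes the streams are conditionally independent. All of the probabilities and likelihood ratios are invented for illustration.

```python
import math
from typing import Iterable


def to_log_odds(p: float) -> float:
    return math.log(p / (1 - p))


def to_probability(log_odds: float) -> float:
    return 1 / (1 + math.exp(-log_odds))


def fuse_evidence(prior: float, likelihood_ratios: Iterable[float]) -> float:
    """Posterior probability of a state, given a prior and one likelihood ratio
    P(evidence | state) / P(evidence | not state) per sensor stream."""
    log_odds = to_log_odds(prior)
    for ratio in likelihood_ratios:
        log_odds += math.log(ratio)
    return to_probability(log_odds)


# Hypothetical evidence: a heart-rate change (ratio 2.0) and a facial cue (ratio 3.0).
evidence = [2.0, 3.0]
print(round(fuse_evidence(0.10, evidence), 3))  # 0.4   (hypothetical daytime prior)
print(round(fuse_evidence(0.25, evidence), 3))  # 0.667 (hypothetical late-night, rainy prior)
```

The same sensor evidence yields a noticeably higher posterior when the context already makes the state more likely. This is the kind of effect that cross-correlating physiology with time of day or weather is meant to capture.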

Overall, this is a process of scanning multiple sources and types of incoming data for patterns, and comparing them with existing knowledge about the user and the current context. Sound familiar? It’s what we humans do all day long. And our capability to understand and respond to the situation is what we call empathy.

Empathy is a type of intelligence that varies between people and can be developed over time. It’s part of being smart. And in an analogous way, we are training smart technology to develop its own form of empathy, to provide useful or entertaining responses to our current mood and behaviour.

Serving Time

To clarify the process from end-to-end, I will illustrate a potential user scenario and give you a basic overview of the way our system at Sensum would deal with it.

Let’s say you’re driving home late, after a busy day of office work and subsequent dinner with clients. Rain is pelting the windscreen, causing you to squint into the blurry lights ahead. You are starting to nod off but are too tired to notice yet.

A camera in the dashboard is recording a video of your facial expression, gaze direction, and head & body position. At the same time, a microphone is listening for changes in your voice tone. Contact sensors in the seat, and remote sensors throughout your car’s cocoon, are collecting data on your heart rate, breathing, skin temperature and so on. All this data is constantly being streamed to the vehicle’s onboard AI chipset.

The system should detect a potential issue as it sees changes in, say, your heart rate variability and breathing rate. As you get sleepier, your eyelids might start to droop and your head to nod, all of which shows up in the facial coding stream. These combined data points may not be enough for the computer to make a decision. Even so, it can adjust its assessment using external data telling it that the weather is poor, it’s late, and you had a busy day (inferred from your calendar).
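The article doesn't name a specific facial-coding metric for drowsiness. One measure widely used in driver-monitoring research is PERCLOS, which is the proportion of time within a window during which the eyes are at least 80% closed. The Python sketch below assumes that the facial-coding stream already provides a per-frame eye-openness value between 0 (closed) and 1 (fully open). The sample values are made up.

```python
from typing import Sequence


def perclos(eye_openness: Sequence[float], max_openness: float = 0.2) -> float:
    """Fraction of frames in which the eye is at least 80% closed
    (openness at or below `max_openness`)."""
    if not eye_openness:
        raise ValueError("empty window")
    closed = sum(1 for value in eye_openness if value <= max_openness)
    return closed / len(eye_openness)


# Hypothetical 10-frame window; real PERCLOS windows are usually much longer.
window = [1.0, 0.9, 0.15, 0.1, 0.95, 0.05, 1.0, 0.9, 0.1, 1.0]
print(perclos(window))  # 0.4
```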

Our system processes incoming data streams by comparing the data types it recognises against each other. It then builds a probabilistic model of the user’s emotional state across multiple ‘dimensions’ of emotional scoring, such as arousal (excited/relaxed), valence (positive/negative) and dominance (control/submission). Depending on which ‘zone’ of this dimensional space the data clusters in, the system can infer a likely emotional state such as drowsiness. Drowsiness might be signified by something like low arousal, neutral valence and low dominance.
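As a rough illustration of ‘zones’ in this dimensional space, the Python sketch below places a few hypothetical prototype points in arousal-valence-dominance space. It scores a new reading by its distance to each prototype and turns the distances into soft probabilities with a softmax. Only the ‘drowsy’ prototype follows the description above (low arousal, neutral valence, low dominance). The other states, all coordinates and the temperature parameter are assumptions, and this is not Sensum's actual model.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float, float]  # (arousal, valence, dominance), each in [-1, 1]

# Hypothetical prototype locations for a few states.
PROTOTYPES: Dict[str, Point] = {
    "drowsy": (-0.8, 0.0, -0.5),   # low arousal, neutral valence, low dominance
    "stressed": (0.7, -0.6, -0.4),
    "content": (-0.2, 0.6, 0.3),
    "excited": (0.8, 0.6, 0.4),
}


def state_probabilities(point: Point, temperature: float = 0.25) -> Dict[str, float]:
    """Softmax over negative distances: closer prototypes get higher probability."""
    weights = {name: math.exp(-math.dist(point, proto) / temperature)
               for name, proto in PROTOTYPES.items()}
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


def most_likely_state(point: Point) -> str:
    probs = state_probabilities(point)
    return max(probs, key=probs.get)


reading = (-0.7, 0.1, -0.4)
print(most_likely_state(reading))  # drowsy
```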

More on how our own system works here if you’re keen to learn.

OK, so now the onboard AI system is informing your car that you are probably drowsy. Quite how the car responds to this information could vary across vehicle models, manufacturers and their software providers. For instance, it could be any combination of the following:

- Notification: a voice notification from your onboard voice assistant or a warning displayed on the dashboard.

- Climate control: reduce the temperature of the cocoon and switch on the airflow towards your face.

- Media: switch your music over to a more upbeat playlist.

- Driver control: if your car has autonomous capabilities it could request partial or complete control of the vehicle.

- Journey planning: the car might search for the nearest fuel station and recommend pulling over for a coffee or a nap.
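How the car responds is up to each manufacturer, so the following Python sketch shows only one hypothetical policy. It maps a drowsiness probability, together with whether the vehicle has autonomous capabilities, to the response types listed above. The 0.5 and 0.8 thresholds and the escalation order are assumptions.

```python
from typing import List


def drowsiness_responses(p_drowsy: float, has_autonomy: bool,
                         warn_at: float = 0.5, escalate_at: float = 0.8) -> List[str]:
    """Escalate from gentle nudges to stronger interventions as confidence grows."""
    actions: List[str] = []
    if p_drowsy >= warn_at:
        actions += ["notification", "climate_control", "media"]
    if p_drowsy >= escalate_at:
        actions.append("journey_planning")  # e.g. suggest a coffee stop
        if has_autonomy:
            actions.append("driver_control")
    return actions


print(drowsiness_responses(0.3, has_autonomy=True))   # []
print(drowsiness_responses(0.9, has_autonomy=False))  # ['notification', 'climate_control', 'media', 'journey_planning']
```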

That’s a quick tour of the empathic mobility tech stack as we currently see it. There is widespread investment in emotion-related technologies, particularly from the mobility sector, so it probably won’t be long before this kind of empathic human-machine interaction (HMI) hits the road. Major automakers, including Toyota (Yui) and Audi (Aicon), have already built demo vehicles. We may therefore see significant empathic HMI in the next cycle of production models, two or three years from now.

Further Serving Suggestions

- The infographic for this story: A Tour of Empathic Mobility.

- Sensum mobility solutions.

- The Role of Human Emotions in the Future of Transport.

- Measuring Emotion in the Driver’s Seat: Insights from the Scientific Frontline.

- Sensor Fusion: The Only Way to Measure True Emotion.

How to Build Emotionally Intelligent Transportation was originally published in Hacker Noon on Medium.
