Photo by Alfonso Reyes on Unsplash

I recently watched a YouTube video on integrated circuits (ICs) and Moore’s Law, which featured an interesting ASCII graphic of Jack Kilby and others. I wanted to re-create this effect as an image filter for webcam video in the browser.

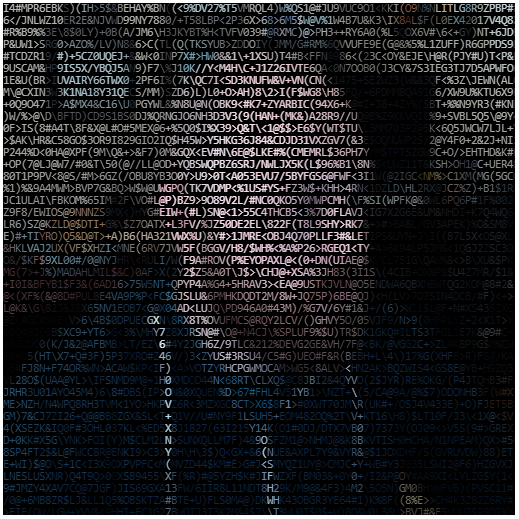

This is what we will be building today:

Our camera filter in action! 🎥

You can try it out for yourself here: https://mariotacke.github.io/blog-real-time-ascii-camera-filter/. Let’s go! 🚀

Step 1: Asking for permission

Before we can apply our filter magic, we need to access the camera video stream. Getting access to the camera video is fairly straightforward, thanks to the browser’s MediaDevices API.

First, we call the navigator’s getUserMedia function, passing a constraints object that specifies which devices we want to access, along with a success and a failure callback. (This callback form is the legacy navigator.getUserMedia, which is deprecated; the current navigator.mediaDevices.getUserMedia takes only the constraints object and returns a Promise that resolves to the stream.) We request video access, which prompts the browser to ask the user for permission. If the user accepts, we receive a MediaStream object, which we can use as the source of a <video> element.

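Finally, we start the video by calling play() on the video element and subscribe to its play event to start our rendering loop. For the loop itself we use requestAnimationFrame rather than setInterval: the browser invokes the callback in sync with the display refresh, and most browsers pause it while the tab is in the background, so no work is spent on frames nobody will see.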
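A minimal sketch of this step, written against the promise-based navigator.mediaDevices.getUserMedia rather than the callback form. The names startCamera and onFrame are hypothetical, and mediaDevices is a parameter (defaulting to navigator.mediaDevices) only so the function can be exercised with a stand-in object outside the browser.

```javascript
// Minimal sketch (hypothetical names): ask for camera access, attach the
// stream to a <video> element, and call onFrame once per animation frame.
async function startCamera(video, onFrame, mediaDevices = navigator.mediaDevices) {
  // Triggers the browser's permission prompt; rejects if the user declines
  // or no camera is available.
  const stream = await mediaDevices.getUserMedia({ video: true, audio: false });

  // A MediaStream is assigned to srcObject rather than src.
  video.srcObject = stream;

  // Start a render loop whenever playback begins.
  video.addEventListener("play", () => {
    const tick = () => {
      // Stop scheduling frames once the video is paused or has ended;
      // a later "play" event starts a fresh loop.
      if (video.paused || video.ended) return;
      onFrame(video);
      requestAnimationFrame(tick);
    };
    requestAnimationFrame(tick);
  });

  await video.play();
  return stream;
}
```

In a page, this could be called as startCamera(videoElement, render), where render is your per-frame function, followed by a .catch() handler: the promise rejects (for example with a NotAllowedError) when the user denies permission. Browsers also only expose getUserMedia in secure contexts such as HTTPS or localhost.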

Step 2: Accessing the camera video feed

Conceptually, a video filter is just an image filter which is executed for every frame of a video. So in order for this to work, we need to access the pixel data for each frame, run it through our image filter, and write it back out to a canvas.

For this, we will use one <video> element and two canvases. Why two canvases? To extract the video frame data, we have to first draw it onto a canvas. Only then can we extract pixel data from the canvas context, manipulate it, and draw it onto another canvas.

processFrame is our main render loop function. Once we have access to the video width and height, we draw the video with drawImage onto the hiddenContext 2D context. This lets us read the pixels back later via its getImageData function. Great, now we have an exact copy of the current video frame on our hidden <canvas> element.
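The article’s processFrame performs this copy; below is a sketch of just that step under a hypothetical name, drawVideoFrame, with the filter passed in as a callback. The willReadFrequently option is a real 2D-context setting that hints the browser to optimize for frequent getImageData calls, but whether the original project used it is not stated.

```javascript
// Sketch (hypothetical names): copy the current video frame onto a hidden
// canvas so its pixels can be read, then hand both contexts to a filter.
function drawVideoFrame(video, hiddenCanvas, outputCanvas, filter) {
  const width = video.videoWidth;
  const height = video.videoHeight;
  // videoWidth/videoHeight stay 0 until the video's metadata has loaded.
  if (width === 0 || height === 0) return false;

  // Resize only when needed: assigning width or height clears the canvas
  // and resets its context state.
  for (const canvas of [hiddenCanvas, outputCanvas]) {
    if (canvas.width !== width) canvas.width = width;
    if (canvas.height !== height) canvas.height = height;
  }

  // willReadFrequently hints that getImageData will be called every frame.
  const hiddenContext = hiddenCanvas.getContext("2d", { willReadFrequently: true });
  const outputContext = outputCanvas.getContext("2d");

  // Draw the frame; its pixels are now readable via getImageData.
  hiddenContext.drawImage(video, 0, 0, width, height);
  filter(hiddenContext, outputContext, width, height);
  return true;
}
```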

Step 3: Pixelate!

Let’s talk strategy for a moment: our goal is to draw characters onto the screen that resemble the input video. First, most fonts are proportional (each character has its own width), which would complicate our calculations, so let’s pick a monospace font instead. I chose Consolas. This lets us determine the character width once, which simplifies the next step.

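Fortunately, the canvas context provides a measureText function, which accepts a string and returns a TextMetrics object whose width property is the rendered width in pixels. It has no plain height property, but for a single line of text the font size serves as a reasonable cell height. Since we picked a monospace font, we only need to measure one character and can reuse its width throughout our algorithm.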
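A small sketch of measuring the character cell once. The helper name measureCell and the 10px default size are hypothetical, and using the font size as the cell height is an assumption rather than something measureText reports.

```javascript
// Sketch (hypothetical helper): measure one character cell of a monospace
// font. In a monospace font every glyph has the same advance width.
function measureCell(context, fontSize = 10, fontFamily = "Consolas, monospace") {
  context.font = `${fontSize}px ${fontFamily}`;
  // Any glyph works for a monospace font; "@" is used here.
  // Round up so cells tile the frame in whole pixels.
  const width = Math.ceil(context.measureText("@").width);
  // Assumption: the font size doubles as the cell (line) height.
  return { width, height: fontSize };
}
```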

Second, we need to give our characters some color. I chose to use the average color of the source image rectangle on which each character is drawn. This requires us to loop through that section of the input frame, sum up the R, G, and B values, and divide each sum by the number of pixels in the section.

This is what it would look like if we simply filled each section with its average color:

Simple pixelation filter. Feels like the internet in the nineties 🙊…

Let’s start with the loop. We step through the image frame data by incrementing our y position by the font height and our x position by the character width. Think: one character at a time. Next, we extract the section of the frame the character covers. The context’s getImageData function is a great fit here, since it takes an x/y offset plus a width and height. We then run this frameSection through our getAverageRGB function, which returns a single RGB value.
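The stepping described above can be sketched as a pure function that lists the cell rectangles. The name getCells is hypothetical, and clipping the right and bottom edge cells is a design choice of this sketch: getImageData returns transparent black for pixels outside the canvas, which would otherwise darken the average of partial cells.

```javascript
// Sketch (hypothetical helper): split a frame into character cells,
// stepping x by the character width and y by the character height.
function getCells(width, height, cellWidth, cellHeight) {
  if (!(cellWidth > 0 && cellHeight > 0)) {
    throw new RangeError("cellWidth and cellHeight must be positive");
  }
  const cells = [];
  for (let y = 0; y < height; y += cellHeight) {
    for (let x = 0; x < width; x += cellWidth) {
      cells.push({
        x,
        y,
        // Clip edge cells so they never extend past the frame.
        width: Math.min(cellWidth, width - x),
        height: Math.min(cellHeight, height - y),
      });
    }
  }
  return cells;
}
```

In the render loop, each cell would then be read with hiddenContext.getImageData(cell.x, cell.y, cell.width, cell.height) and passed on to the averaging step.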

Image data is stored as four bytes per pixel: red (R), green (G), blue (B), and alpha (A). Thus we iterate through the frame section in steps of four. To calculate the average color, we sum up R, G, and B individually and divide each sum by the number of pixels in the section (the length of the data array divided by four).
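The averaging described above might look like this. The name getAverageRGB comes from the article, but this signature (an ImageData-like object with a flat data array in, an { r, g, b } object out) is an assumption. Alpha is ignored, which is reasonable for an opaque camera frame.

```javascript
// Sketch of an average-color helper. Accepts anything shaped like ImageData
// (a flat RGBA `data` array) and returns the mean R, G and B values.
function getAverageRGB(frameSection) {
  const data = frameSection.data;
  const pixelCount = data.length / 4; // four bytes (R, G, B, A) per pixel
  if (pixelCount === 0) return { r: 0, g: 0, b: 0 };

  let r = 0;
  let g = 0;
  let b = 0;
  for (let i = 0; i < data.length; i += 4) {
    r += data[i];
    g += data[i + 1];
    b += data[i + 2];
    // data[i + 3] is alpha and is ignored here.
  }

  // Round to whole 0-255 channel values.
  return {
    r: Math.round(r / pixelCount),
    g: Math.round(g / pixelCount),
    b: Math.round(b / pixelCount),
  };
}
```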

Step 4: Bringing it all together

Now that we have calculated the average RGB value for the frame section we want to draw, we can pick a character to represent it.
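Drawing a character in the cell’s average color could look roughly like this. The helper name drawCharacter is hypothetical; setting textBaseline to "top" is an assumption of this sketch so that (x, y) refers to the cell’s top-left corner, the same coordinates passed to getImageData.

```javascript
// Sketch (hypothetical helper): draw one character in the average color of
// the cell it covers, with (x, y) as the cell's top-left corner.
function drawCharacter(context, character, x, y, { r, g, b }) {
  context.textBaseline = "top"; // anchor text at the top of the cell
  context.fillStyle = `rgb(${r}, ${g}, ${b})`;
  context.fillText(character, x, y);
}
```

Before each new frame is drawn, the output canvas would typically be cleared (for example with clearRect) so characters from the previous frame do not linger.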

And finally, our output:

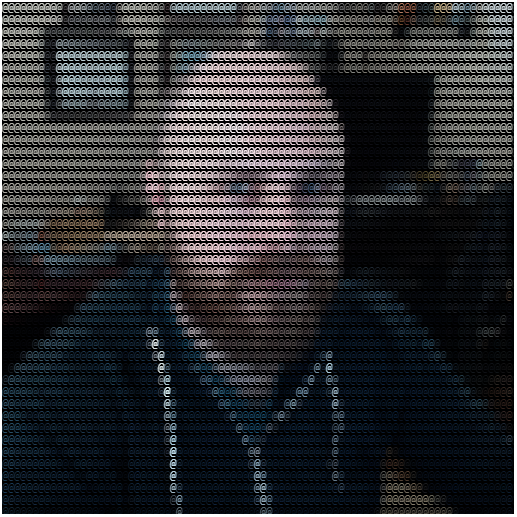

Using the @ character to represent each frame section

Wait, that’s pretty boring. Let’s add a variety of characters instead of simple @ characters. How about the English alphabet or a Matrix-style Japanese alphabet? Just pick one 💡!
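The article does not say how characters are chosen from the alphabet; this sketch assumes a uniformly random pick per cell. The ALPHABETS object and randomCharacter helper are hypothetical, and the random source is a parameter only so the choice can be made deterministic.

```javascript
// Sketch (hypothetical helper): pick a random character from an alphabet.
// Array.from splits by code point, so non-Latin alphabets work too.
const ALPHABETS = {
  latin: "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz",
  katakana: "アイウエオカキクケコサシスセソタチツテトナニヌネノハヒフヘホマミムメモヤユヨラリルレロワヲン",
};

function randomCharacter(alphabet, random = Math.random) {
  const characters = Array.from(alphabet);
  // random() is in [0, 1), so the index is always within bounds.
  return characters[Math.floor(random() * characters.length)];
}
```

Because a new character is picked for every cell on every frame, the output will shimmer; deriving the choice from the cell’s position instead would keep each character fixed.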

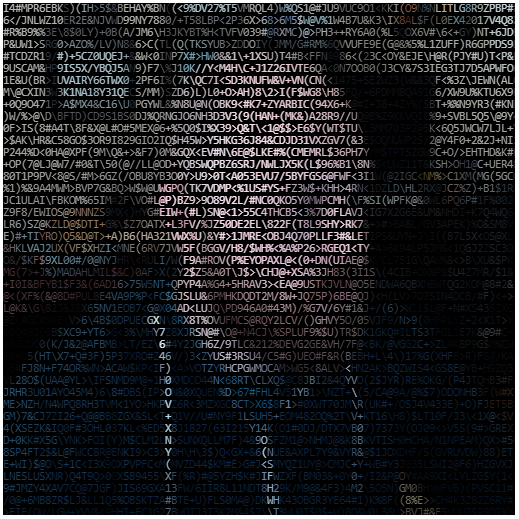

The final product. A beautiful mess of various characters. 😅

Conclusion

In this article I described my process for creating an ASCII art camera filter on top of a live camera stream. We worked through asking the user for permission to access the camera feed, setting up a rendering loop, and finally implementing an image filter with canvas. Until next time! 👋

Left: original video stream, right: ASCII output

Thank you for taking the time to read through my article. If you enjoyed it, please hit the Clap button a few times 👏! If this article was helpful to you, feel free to share it!

For more from me, be sure to follow me on Twitter, here on Medium, or check out my website!

References

- Code repository: https://github.com/mariotacke/blog-real-time-ascii-camera-filter

- MDN: MediaDevices

- MDN: CanvasRenderingContext2D

- Video: Integrated Circuits & Moore’s Law

Building a real-time ASCII Camera Filter in plain Javascript was originally published in HackerNoon.com on Medium.
