Last year, Google blew the world away with Duplex, an AI assistant that can make phone calls for you. The demo went viral and raised a lot of ethical and philosophical questions.

Last month, Google announced an update for Duplex: it can now use websites for you.

This announcement might not have been as controversial as last year’s, but I believe it is a transformative step towards the future of user interfaces. To understand why, let’s have a quick look back at the history of human-computer interaction (HCI).

From the command line interface to AI

Ever since they were invented, computers have been weird and hard to use. The history of technology has been a constant battle towards making them more human.

Early computers were a nightmare. Their hardware-based input meant you would operate them by cranking a heavy metallic handle, feeding them perforated punch cards, or moving physical switches and cables around. This was tedious and slow.

In the 1950s, we transitioned to the command line interface. This was the first step towards making computers more human, because we could now operate them using language: by writing to them.

However, computers could only understand their own language: code. Despite decades of effort to simplify programming languages, learning to code remains beyond the reach of most people.

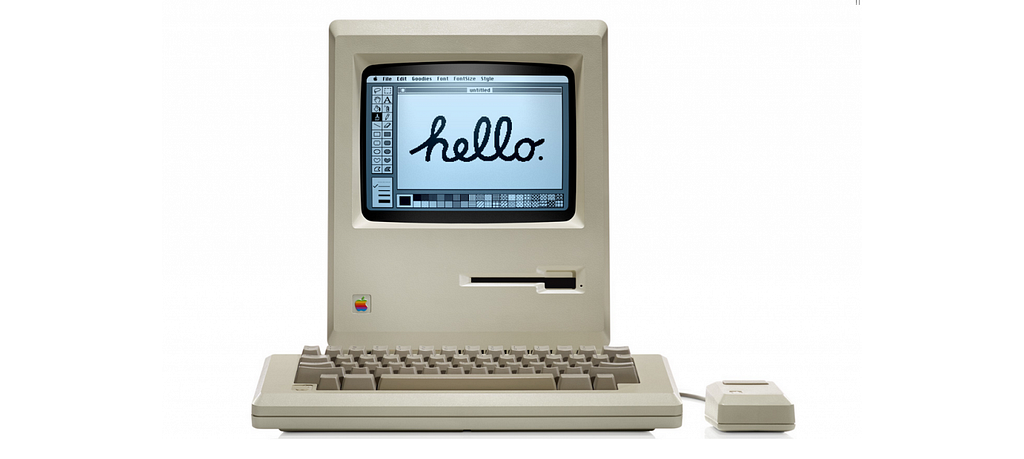

Apple’s Macintosh popularized the GUI

In the 80s, we fixed that with the graphical user interface (GUI) and the mouse. This enabled people to use computers without having to code, and it brought computers to a much larger audience.

Despite being much easier to use, the GUI and the mouse are not perfectly intuitive. Some people still struggle with them. To get these people to properly use computers, we needed yet another leap in innovation.

Steve Jobs introducing the first iPhone.

This leap was the smartphone. More precisely, it was the touch screen, which allowed us to use computers with something we are all born with: our fingers.

I believe the touch screen struck a deeply human chord. The fact that one-year-old children can use a smartphone is proof of that.

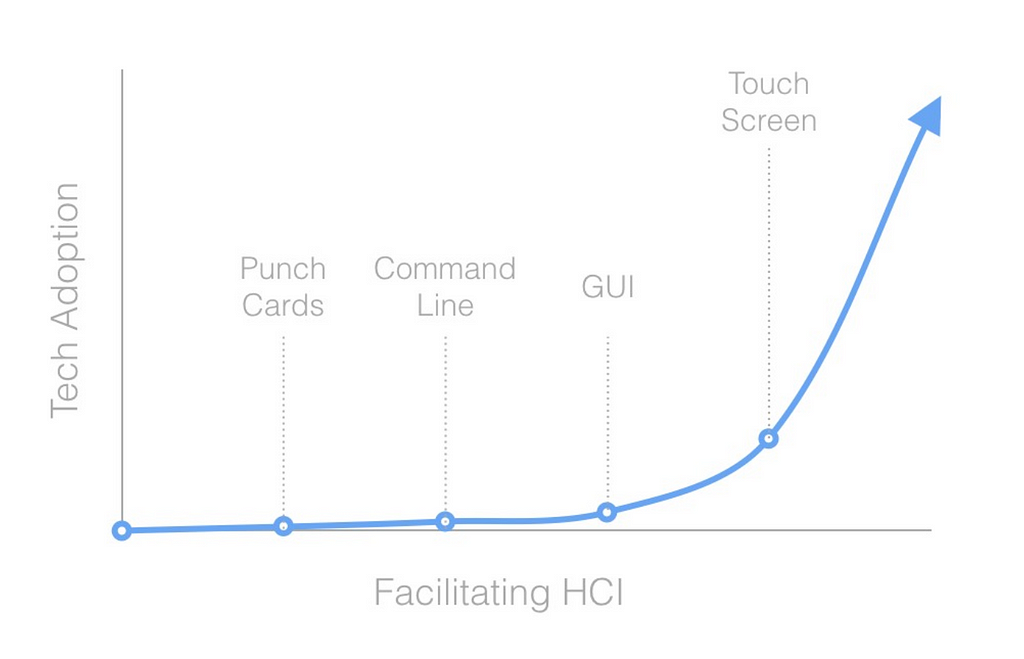

Technology becomes more widespread as it becomes easier to use.

From the command line interface to the GUI and the touch screen, the history of technology has been a journey to make computers more intuitive — closer to our human experience.

At every step of the way, we’ve also made computers accessible to a larger audience. While the first computers could only be used by a handful of specialists, today, children as young as one can easily use smartphones. This is a testament to the progress we’ve made.

AI is the new UI

As I have said before, I believe AI is the next step in this journey of humanizing computers. Duplex for Web is the perfect example of that.

In the demo, the assistant navigates a car rental website. It fills in the form and makes choices for you based on your history.

Duplex for Web uses AI to interact with websites.

As a designer, I spent years learning how to make websites pleasant and easy to use. Watching the AI instantly fill in the form felt weird. I couldn’t help but think about all the work that went into implementing it, only for the user to barely look at it.

In a way, Duplex is making websites redundant. Designers like me are now faced with the possibility that we could optimize the experience by removing it altogether and have the AI interact with the server instead. Sure, the form provides visual feedback about what decisions were made, but that can also be done within the assistant — possibly making the current structure of design roles redundant.

With Duplex for Web, websites, menus, forms, and other UI elements are becoming a hindrance — a bunch of slow and unnecessary obstacles between you and what you are trying to achieve. Why spend time going through them, when you can tell the computer exactly what you want, in plain English?

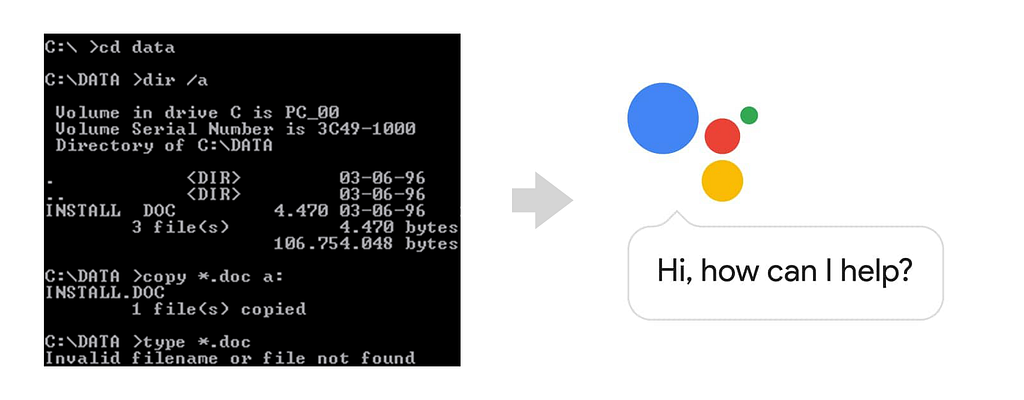

In a way, AI assistants are the new command line interfaces. Only now, the language is English instead of code.

One of the main problems with command line interfaces was discoverability: it was very hard for the people using them to know what actions they could take. The GUI solved this by visually showing the files, folders, and possible actions. Discoverability is also the main problem with chatbots today.

Duplex for Web combines the best of both worlds. The form shows you what you can do in a visual way, and you can tell the computer what you want in plain English.

Conclusion

The point of user interfaces is to tell computers what to do. AI allows us to do it in much more human ways. Advances in computer vision, speech processing, and NLP now enable computers to see, listen, understand and even talk back to us. In this world, AI will slowly become the new operating system.

Just like the punch cards and the command line interfaces from decades ago, the GUI will eventually become redundant. Designers will have to adapt to that change. Duplex for Web is an early example of this trend. It gives us a glimpse at what the future of user interfaces will look like.

In the end, AI won’t kill the GUI, but what comes afterward definitely will. However, that is a subject for another article.

I am not affiliated with the Duplex project. These are my opinions and they do not reflect those of my employer.

This article was first published on The Next Web on 06/29/19.

“Google’s Duplex for Web gives us a glimpse into the future of AI-powered interfaces” was originally published in Hacker Noon on Medium.
