
We previously discussed how you can implement an ad-hoc scheduling system using DynamoDB TTL, as well as with CloudWatch Events. Now, let’s see how you can implement the same system using AWS Step Functions, and weigh the pros and cons of this approach.

As before, we will assess this approach using the following criteria:

- Precision: how close to my scheduled time is the task executed? The closer, the better.

- Scale (number of open tasks): can the solution scale to support many open tasks, i.e. tasks that are scheduled but not yet executed?

- Scale (hotspots): can the solution scale to execute many tasks around the same time? E.g. millions of people set a timer to remind themselves to watch the Super Bowl, so all the timers fire close to kickoff time.

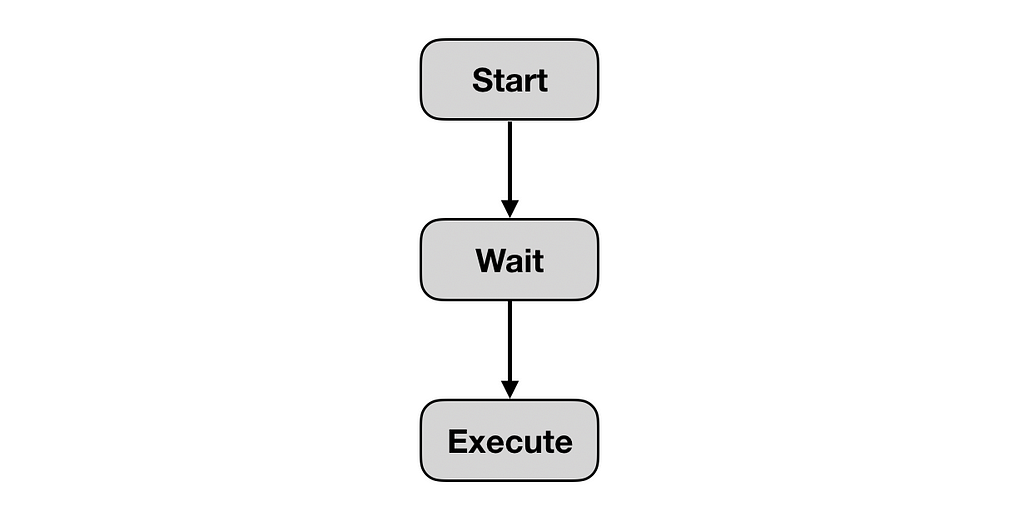

Waiting with Step Functions

One of the understated superpowers of Step Functions is the wait state. It allows you to pause a workflow for up to an entire year!

Normally, idle waiting is very difficult to do with Lambda. But with Step Functions, it’s as easy as a few lines of JSON:

"wait_ten_seconds": { "Type": "Wait", "Seconds": 10, "Next": "NextState" }

You can also wait until a specific UTC timestamp:

"wait_until": { "Type": "Wait", "Timestamp": "2016-03-14T01:59:00Z", "Next": "NextState" }

You can also parameterize how long to wait for (or until) by swapping out Seconds for SecondsPath, or Timestamp for TimestampPath. This lets you control the wait duration using the state machine input.

For example, I can pass an expirydate field in the state machine input and use it to control when the workflow continues:

"wait_until": { "Type": "Wait", "TimestampPath": "$.expirydate", "Next": "NextState" }

To schedule an ad-hoc task, I can start a state machine execution and use a wait state to pause the execution until the specified date and time.

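Putting the pieces together, a complete scheduling state machine might look like the following. This is a minimal sketch in Python that only builds the Amazon States Language definition and the execution input; the state names, the Lambda ARN and the `taskId` field are hypothetical. Wait timestamps are expected in UTC, in RFC 3339 form with a trailing `Z`, as in the Timestamp example above.

```python
import json
from datetime import datetime, timedelta, timezone

# Hypothetical ARN for illustration only; substitute your own Lambda function.
TASK_LAMBDA_ARN = "arn:aws:lambda:us-east-1:123456789012:function:run-scheduled-task"


def build_definition(lambda_arn: str) -> dict:
    """Amazon States Language definition: wait until $.expirydate, then invoke a Lambda."""
    return {
        "StartAt": "wait_until",
        "States": {
            "wait_until": {
                "Type": "Wait",
                # Read the wake-up time from the execution input.
                "TimestampPath": "$.expirydate",
                "Next": "run_task",
            },
            "run_task": {
                "Type": "Task",
                "Resource": lambda_arn,
                "End": True,
            },
        },
    }


def build_input(run_at: datetime, payload: dict) -> dict:
    """Execution input; the wake-up time is formatted as UTC with a trailing 'Z'."""
    run_at_utc = run_at.astimezone(timezone.utc)
    return {"expirydate": run_at_utc.strftime("%Y-%m-%dT%H:%M:%SZ"), **payload}


if __name__ == "__main__":
    definition = json.dumps(build_definition(TASK_LAMBDA_ARN), indent=2)
    run_at = datetime.now(timezone.utc) + timedelta(minutes=5)
    execution_input = json.dumps(build_input(run_at, {"taskId": "reminder-42"}))
    print(definition)
    print(execution_input)
```

The definition is supplied once, when the state machine is created; the serialized input is what each StartExecution call would carry.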
Precision

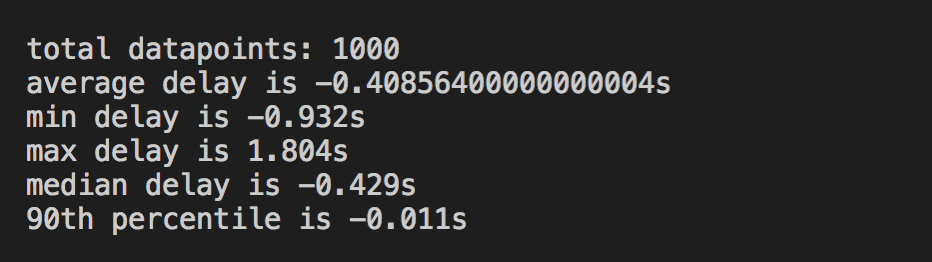

I started 1000 state machine executions, each waiting between 1 and 10 minutes before invoking a Lambda function. I then compared the timestamp of each actual invocation against its intended timestamp.

As you can see, the precision of this approach is spot on.
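The measurement harness isn't shown in the post. A minimal sketch of how such a comparison could be computed, assuming each execution carries its intended run time in its input and the Lambda function records when it actually started, might look like this (function names are hypothetical):

```python
from datetime import datetime, timedelta, timezone
from statistics import mean


def delays_ms(pairs):
    """Delay in milliseconds between each intended run time and the actual invocation."""
    return [(actual - intended) / timedelta(milliseconds=1) for intended, actual in pairs]


def summarize(pairs):
    """Min / mean / max delay; a negative value would mean the task ran early."""
    d = delays_ms(pairs)
    return {"min_ms": min(d), "mean_ms": mean(d), "max_ms": max(d)}


if __name__ == "__main__":
    # Illustrative values only, not measurements.
    due = datetime(2019, 6, 27, 12, 0, tzinfo=timezone.utc)
    pairs = [(due, due + timedelta(milliseconds=120)), (due, due + timedelta(milliseconds=480))]
    print(summarize(pairs))
```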

Scalability (hotspots)

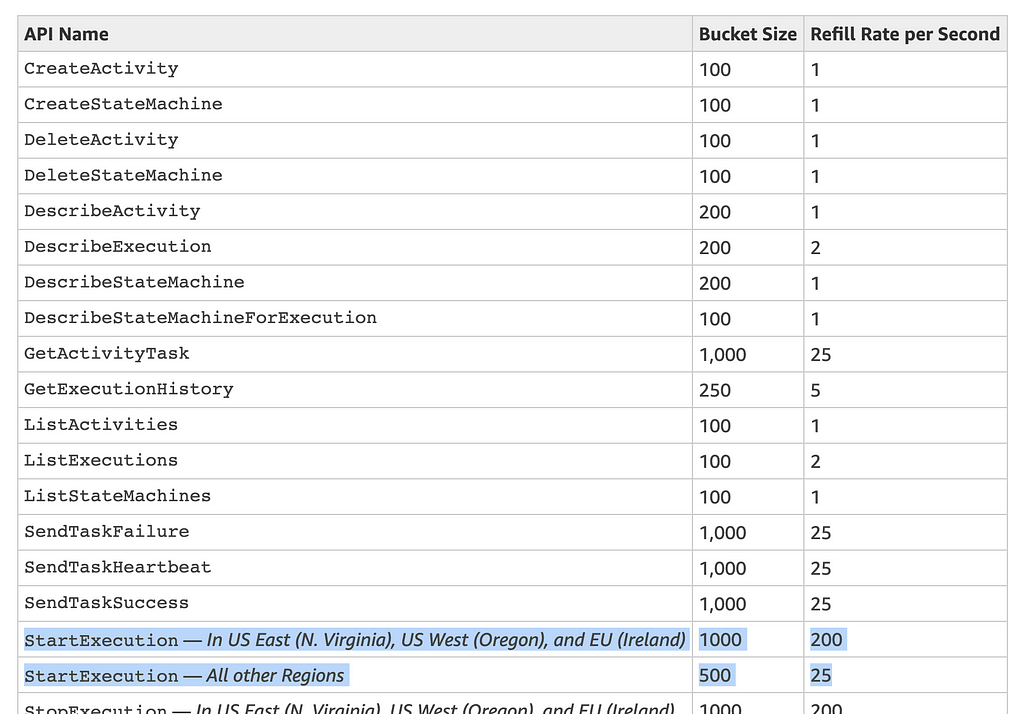

If you need to schedule a large number of tasks in a burst, then you need to consider the API limit on StartExecution requests. In most regions, this limit allows a lowly initial burst of 500 requests and refills at 25 per second.

Yes, it’s a soft limit, so you can ask for a limit raise via the support centre. But given how low the default limits are, it’s unlikely that you’d be able to raise them significantly. So if you need to schedule tens of thousands of tasks per second, then this approach might not be for you.
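To get a feel for how restrictive this is, here is a back-of-the-envelope sketch that treats StartExecution throttling as a simple token bucket. The defaults (a burst of 500, refilled at 25 per second) are the figures quoted above; check the current limits for your region, and note that the sketch ignores retries and network latency.

```python
def seconds_to_start(n: int, burst: int = 500, refill_per_sec: float = 25.0) -> float:
    """Minimum time to issue n StartExecution calls under a token bucket that starts
    full with `burst` tokens and refills at `refill_per_sec` tokens per second."""
    if n <= burst:
        return 0.0  # the initial bucket absorbs the whole burst
    # Every call beyond the initial burst has to wait for a refilled token.
    return (n - burst) / refill_per_sec


if __name__ == "__main__":
    for n in (500, 10_000, 50_000):
        print(f"{n:>6} executions -> {seconds_to_start(n):.0f}s")
```

With these defaults, starting 10,000 executions takes at least 380 seconds, and 50,000 takes at least 1,980 seconds (33 minutes).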

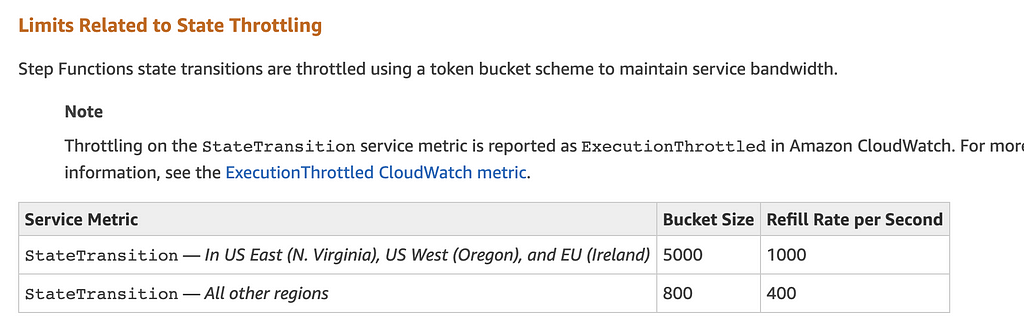

When the wait state expires, our scheduled task executes. However, if you have scheduled a large number of tasks to execute at the same time, they could be throttled, because there is a hard limit on the rate of state transitions (see above).

As you can see, this limit is generous and would not pose a problem for most workloads. However, if you need to schedule ad-hoc tasks that tend to cluster together, then you need to take it into consideration. For example, a system that lets users schedule ad-hoc reminders can experience large hotspots around notable events: the Super Bowl, the Game of Thrones finale, the Champions League final, etc.
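If you know (or can estimate) when tasks are due, you can check for hotspots before they hit the limit. A minimal sketch that buckets run times by whole second; `limit_per_second` is a placeholder parameter because the actual quota varies by region and over time, and the function names are hypothetical.

```python
from collections import Counter
from datetime import datetime, timedelta, timezone


def peak_transitions(run_times, transitions_per_task=3):
    """Return (epoch_second, transitions) for the busiest second among the run times.

    transitions_per_task defaults to 3, the figure used in the cost section of this
    post; only some of those happen at wake-up time, so this is a conservative bound."""
    if not run_times:
        return None, 0
    per_second = Counter(int(t.timestamp()) for t in run_times)
    second, tasks = per_second.most_common(1)[0]
    return second, tasks * transitions_per_task


def may_throttle(run_times, limit_per_second, transitions_per_task=3):
    """True if the busiest second would exceed the (hypothetical) transition quota."""
    _, peak = peak_transitions(run_times, transitions_per_task)
    return peak > limit_per_second
```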

Scalability (number of open tasks)

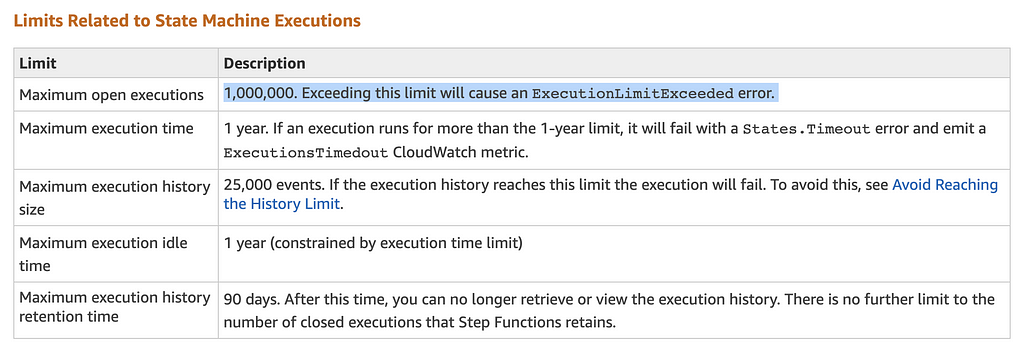

Step Functions also has a hard limit of 1,000,000 open executions — that is, executions that have started but not yet finished. This will be the maximum number of open tasks we can schedule with Step Functions.

One million open tasks should be plenty for most use cases. However, because you can wait for up to a year, open tasks have a large time window in which to accumulate, and many use cases need to schedule many millions of open tasks.
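A quick way to see how fast open tasks accumulate is Little's law (my addition, not from the original post): in a steady state, the number of open executions equals the rate at which tasks are scheduled multiplied by the average wait. A sketch, assuming a steady scheduling rate; the helper names are hypothetical.

```python
SECONDS_PER_DAY = 86_400


def open_executions(rate_per_second: float, avg_wait_seconds: float) -> float:
    """Little's law (steady state): open executions = arrival rate x average wait."""
    return rate_per_second * avg_wait_seconds


def max_rate_per_day(open_limit: int, avg_wait_days: float) -> float:
    """Highest steady scheduling rate (tasks/day) that keeps open executions under open_limit."""
    return open_limit / avg_wait_days


if __name__ == "__main__":
    for days in (1, 30, 365):
        print(f"avg wait {days:>3} days -> at most {max_rate_per_day(1_000_000, days):,.0f} tasks/day")
```

With an average wait of 30 days, the 1,000,000 cap allows only about 33,000 new tasks per day; with an average wait of a year, fewer than 2,750.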

Fortunately, there are workarounds to this limit. Instead of using Step Functions to provide an end-to-end solution, we can use it as the “last mile” solution. Kudos to David Wells for this excellent suggestion.


@ben11kehoe Nice post! @theburningmonk I used dynamo TTL and step functions for this. Expire dynamo item 48 hours early and set scheduled items to send at a given timestamp with step functions `Wait` type and unix timestamp

This workaround also alleviates the limit on starting too many executions in a burst. Instead of starting a Step Functions execution right away, we write each task to DynamoDB with a TTL set to expire 48 hours before the scheduled time, and start the execution only when the item expires.
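A minimal sketch of the item written at scheduling time, following the tweet's suggestion of expiring it 48 hours early. DynamoDB TTL reads an epoch-seconds value from a Number attribute; the attribute names `taskId`, `runAt` and `ttl` are hypothetical, and the TTL attribute name must match the one configured on the table.

```python
from datetime import datetime, timedelta, timezone

# Expire the item 48 hours before the task is due, as suggested in the tweet.
TTL_LEAD = timedelta(hours=48)


def build_item(task_id: str, run_at: datetime) -> dict:
    """Hypothetical item shape for a scheduled task parked in DynamoDB."""
    run_at = run_at.astimezone(timezone.utc)
    return {
        "taskId": task_id,
        # Same UTC format that a Wait state's TimestampPath can consume later.
        "runAt": run_at.strftime("%Y-%m-%dT%H:%M:%SZ"),
        # DynamoDB TTL expects epoch seconds.
        "ttl": int((run_at - TTL_LEAD).timestamp()),
    }
```

With boto3, an item like this could be written through the table resource's `put_item(Item=...)`.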

DynamoDB is very scalable and there’s no limit to the number of open tasks. Its lack of precision (the official documentation only confirms that data is typically deleted within 48 hours) is addressed by letting Step Functions carry the “last mile” of the execution. This combination allows the two approaches to neatly complement each other.
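When the item expires, a Lambda function subscribed to the table's stream can start the Step Functions execution. TTL deletions appear in DynamoDB Streams as REMOVE events whose `userIdentity` is the DynamoDB service principal. A sketch of the filtering step, assuming the stream includes old images (OLD_IMAGE or NEW_AND_OLD_IMAGES) and a hypothetical item with `taskId` and `runAt` string attributes:

```python
def ttl_expired_tasks(event: dict) -> list:
    """Turn TTL deletions in a DynamoDB Streams event into execution inputs."""
    inputs = []
    for record in event.get("Records", []):
        identity = record.get("userIdentity", {})
        is_ttl_delete = (
            record.get("eventName") == "REMOVE"
            and identity.get("type") == "Service"
            and identity.get("principalId") == "dynamodb.amazonaws.com"
        )
        if not is_ttl_delete:
            continue  # ignore inserts, updates and deletes made by users
        old = record["dynamodb"]["OldImage"]  # DynamoDB JSON, e.g. {"S": "..."}
        # Map the run time onto the field the Wait state reads via $.expirydate.
        inputs.append({"taskId": old["taskId"]["S"], "expirydate": old["runAt"]["S"]})
    return inputs
```

Each returned input could then be passed as a JSON string to StartExecution, for example via boto3's `start_execution(stateMachineArn=..., input=...)`.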

It’s not bulletproof, however. Since you’re using Step Functions to execute the scheduled task, you’re still constrained by the hard limit on state transitions. Also, if your tasks are scheduled to execute within 48 hours (and you care about precision), then you can’t rely on DynamoDB TTL at all.
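One way to handle that caveat, sketched here as an assumption rather than part of the original design, is to route each task by its lead time: tasks due within 48 hours go straight to Step Functions (and count against the limits discussed earlier), while the rest are parked in DynamoDB. The function name and return values are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# DynamoDB only says expired items are typically deleted within 48 hours.
TTL_LEAD = timedelta(hours=48)


def choose_path(run_at: datetime, now: datetime) -> str:
    """Tasks due within the TTL lead time skip DynamoDB and start an execution directly."""
    if run_at - now <= TTL_LEAD:
        return "stepfunctions"
    return "dynamodb"
```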

Cost

Another caveat to keep in mind is that Step Functions is one of the most expensive services on AWS. A million state transitions cost $25, which is many times the cost of a million Lambda invocations.

The simplest state machine for a scheduled task requires 3 state transitions, so a million scheduled tasks would cost you $75 just for Step Functions! On top of that, you still have to factor in the cost of Lambda and other peripheral services such as CloudWatch Logs.
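The arithmetic is simple enough to keep as a helper when estimating a budget. A sketch using the $25 per million price and 3 transitions per task quoted above; prices change, so treat both as inputs rather than constants.

```python
PRICE_PER_MILLION_TRANSITIONS = 25  # USD, the figure quoted above


def step_functions_cost(tasks: int, transitions_per_task: int = 3) -> float:
    """Step Functions charge only; Lambda, CloudWatch Logs etc. are extra."""
    return tasks * transitions_per_task * PRICE_PER_MILLION_TRANSITIONS / 1_000_000


if __name__ == "__main__":
    print(step_functions_cost(1_000_000))  # 75.0
```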

Summary

Step Functions brings a lot to the table when it comes to scheduling ad-hoc tasks:

- you can schedule tasks to be executed up to a year later

- the timing of the scheduled task is very precise

- all the usual visualization, logging and audit capabilities you get from Step Functions

However, it’s not without its limitations. There are a number of soft and hard limits on Step Functions. While these limits should be sufficiently high for most use cases, it does mean that you can only scale to a certain point.

You can, however, work around some of these if you combine the use of Step Functions with DynamoDB TTL:

- the limit on the number of open executions

- the limit on StartExecution API requests

Finally, unlike with the other approaches, cost is a much bigger concern here, because Step Functions is a comparatively expensive service. That is why I generally recommend using Step Functions mainly for business-critical workloads.

Read about other approaches

- DynamoDB TTL as an ad-hoc scheduling mechanism

- Using CloudWatch and Lambda to implement ad-hoc scheduling

Hi, my name is Yan Cui. I’m an AWS Serverless Hero and the author of Production-Ready Serverless. I have run production workloads at scale in AWS for nearly 10 years, and I have been an architect or principal engineer in a variety of industries, ranging from banking, e-commerce and sports streaming to mobile gaming. I currently work as an independent consultant focused on AWS and serverless.

You can contact me via Email, Twitter and LinkedIn.

Check out my new course, Complete Guide to AWS Step Functions.

In this course, we’ll cover everything you need to know to use the AWS Step Functions service effectively, including basic concepts, HTTP and event triggers, activities, design patterns and best practices.

Get your copy here.

Come learn about operational BEST PRACTICES for AWS Lambda: CI/CD, testing & debugging functions locally, logging, monitoring, distributed tracing, canary deployments, config management, authentication & authorization, VPC, security, error handling, and more.

You can also get 40% off the face price with the code ytcui.

Get your copy here.

Originally published at https://theburningmonk.com on June 27, 2019.

Schedule ad-hoc tasks with Step Functions was originally published in Hacker Noon on Medium.
