
Photo by Jeffrey F Lin on Unsplash


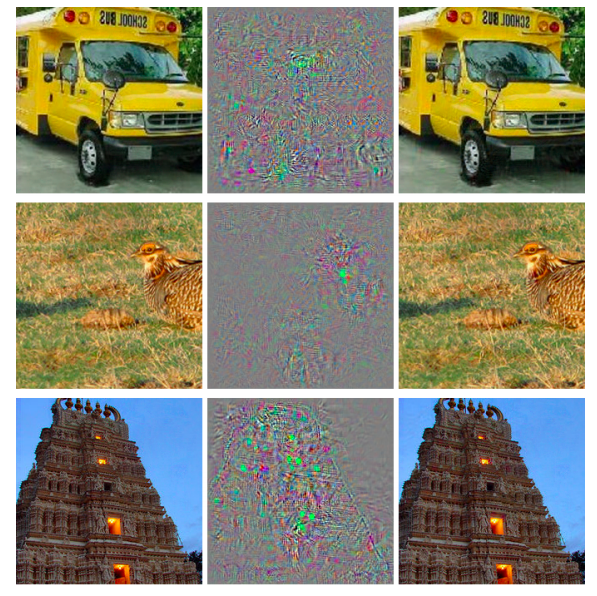

An adversarial attack is a way to fool a model with deliberately perturbed input. Szegedy et al. (2013) introduced the idea in the computer vision field. Given a set of normal pictures, a strong image classification model classifies them correctly. However, the same model can no longer classify those inputs once carefully crafted (not random) noise is added to them.

Left: Original input. Middle: Difference between left and right. Right: Adversarial input. The image classification model classifies the three left inputs correctly but classifies all of the right inputs as “ostrich”. (Szegedy et al. 2013)


In the natural language processing (NLP) field, we can also generate adversarial examples to test how well an NLP model resists adversarial attacks. Pruthi et al. use character-level errors (misspellings) to simulate such attacks. Their word recognition model achieves a 32% relative (3.3% absolute) error reduction over the vanilla semi-character model.

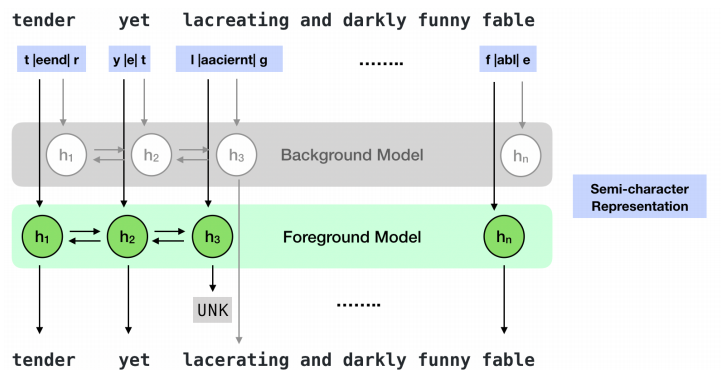

Architecture

Pruthi et al. use a semi-character RNN (ScRNN) architecture to build a word recognition model. A sequence of words is fed into the RNN. Rather than consuming each word as a whole, the model splits it into a prefix, a body and a suffix:

- Prefix: First character

- Body: Second character to second-to-last character

- Suffix: Last character
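In the semi-character representation, the prefix and suffix are one-hot encoded and the body is encoded as an unordered bag of characters. Because the body is unordered, swapping internal characters does not change the input. The sketch below builds that input vector for one word. The lowercase ASCII alphabet and the function names are assumptions for illustration, and the RNN that consumes these vectors is omitted.

```python
from collections import Counter
import string

# Assumption for this sketch: a lowercase ASCII alphabet only.
ALPHABET = string.ascii_lowercase
INDEX = {ch: i for i, ch in enumerate(ALPHABET)}


def one_hot(ch: str) -> list[int]:
    """One-hot vector for a single character (all zeros if empty or unknown)."""
    vec = [0] * len(ALPHABET)
    if ch in INDEX:
        vec[INDEX[ch]] = 1
    return vec


def bag_of_chars(chars: str) -> list[int]:
    """Character counts for the body; character order is deliberately discarded."""
    counts = Counter(chars)
    return [counts.get(ch, 0) for ch in ALPHABET]


def semi_character_vector(word: str) -> list[int]:
    """Concatenate prefix one-hot, body bag-of-characters and suffix one-hot."""
    word = word.lower()
    prefix = word[0] if word else ""
    suffix = word[-1] if len(word) > 1 else ""
    body = word[1:-1]
    return one_hot(prefix) + bag_of_chars(body) + one_hot(suffix)


if __name__ == "__main__":
    # An internal swap ("model" -> "mdoel") yields an identical vector.
    print(semi_character_vector("model") == semi_character_vector("mdoel"))
```

The script prints `True`. A swap that moves the last character ("model" -> "modle") does change the vector, because the suffix differs.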

In addition, there are two word recognition models. The foreground model is trained on domain-specific data, whose volume is relatively small. The background model is trained on a larger, general-purpose dataset. In the back-off-to-background setting, the background model's prediction is used whenever the foreground model predicts UNK.

There are three ways to handle an unknown prediction (i.e. UNK):

- Pass-through: Keep the (possibly misspelled) input word as is.

- Backoff to neutral word: Replace it with a neutral word such as ‘a’, which has a similar distribution across classes.

- Backoff to background model: Replace it with the background model's prediction.
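The foreground/background setup and the three UNK strategies above can be sketched as a single dispatch function. This is a minimal illustration under stated assumptions. The two "models" are hypothetical lookup tables standing in for trained ScRNNs, and `UNK`, `recognize` and the strategy names are invented for the example.

```python
from typing import Callable, Optional

UNK = "<unk>"  # hypothetical token the foreground model emits for unknown words


def recognize(
    word: str,
    foreground: Callable[[str], str],
    strategy: str,
    background: Optional[Callable[[str], str]] = None,
    neutral_word: str = "a",
) -> str:
    """Recognize one word, backing off when the foreground model predicts UNK."""
    prediction = foreground(word)
    if prediction != UNK:
        return prediction
    if strategy == "pass-through":
        # Keep the (possibly misspelled) input word as is.
        return word
    if strategy == "neutral":
        # Substitute a word with a similar distribution across classes.
        return neutral_word
    if strategy == "background":
        if background is None:
            raise ValueError("the background strategy needs a background model")
        # Defer to the model trained on the larger, general-purpose corpus.
        return background(word)
    raise ValueError(f"unknown strategy: {strategy!r}")


# Hypothetical stand-ins for the two trained models (lookup tables only).
FOREGROUND_VOCAB = {"mdoel": "model", "godo": "good"}
BACKGROUND_VOCAB = {"mdoel": "model", "godo": "good", "mvoie": "movie"}


def foreground(word: str) -> str:
    return FOREGROUND_VOCAB.get(word, UNK)


def background(word: str) -> str:
    return BACKGROUND_VOCAB.get(word, UNK)


if __name__ == "__main__":
    for strategy in ("pass-through", "neutral", "background"):
        print(strategy, recognize("mvoie", foreground, strategy, background))
```

For the misspelling "mvoie", which only the background table knows, the three strategies return `mvoie`, `a` and `movie` respectively. Words the foreground model recognizes are returned directly, whatever the strategy.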

For comparison, After The Deadline (ATD), an open-source spell checker, is also evaluated in the experiments.

ScRNN Architecture (Pruthi et al. 2019)


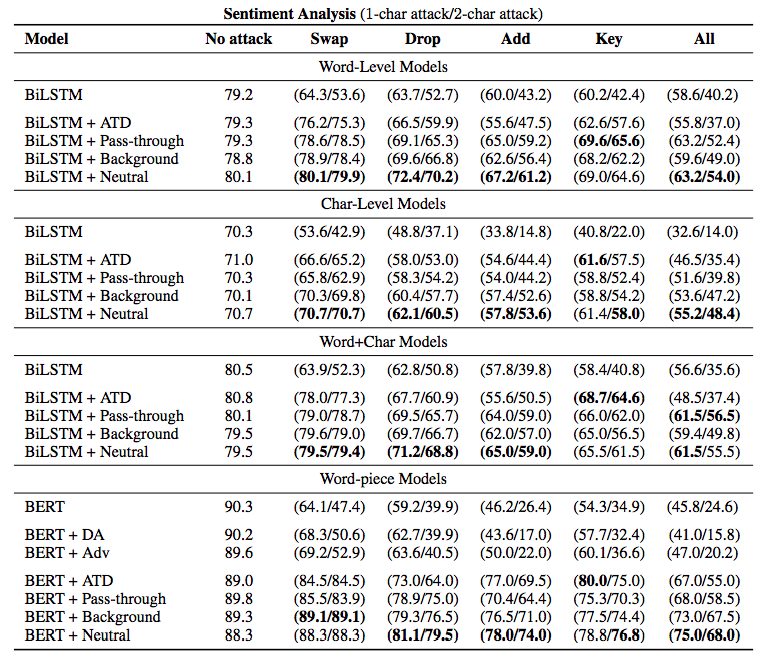

Experiment

Pruthi et al. compare different models and back-off strategies under the following adversarial attacks:

- Swap: Randomly swap two adjacent characters

- Drop: Randomly delete a character

- Add: Randomly insert a character

- Key: Replace a character with a neighboring key on the keyboard (keyboard-distance error)

- All: Combine all of the above attacks
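The four character-level attacks above can be sketched as small string functions. This is a sketch under assumptions, not the paper's code. It leaves the first and last characters untouched, a common choice when attacking semi-character models. The keyboard neighbour map is a hypothetical, partial QWERTY table, and "all" is interpreted here as picking one of the four attacks at random.

```python
import random

# Hypothetical, partial QWERTY neighbour map used by the "key" attack.
KEYBOARD_NEIGHBOURS = {
    "a": "qwsz", "s": "awedxz", "d": "serfcx", "e": "wsdr",
    "o": "iklp", "i": "ujko", "n": "bhjm", "t": "rfgy",
}
LETTERS = "abcdefghijklmnopqrstuvwxyz"


def swap(word: str, rng: random.Random) -> str:
    """Swap two adjacent internal characters."""
    if len(word) < 4:
        return word
    i = rng.randrange(1, len(word) - 2)
    return word[:i] + word[i + 1] + word[i] + word[i + 2:]


def drop(word: str, rng: random.Random) -> str:
    """Delete one internal character."""
    if len(word) < 3:
        return word
    i = rng.randrange(1, len(word) - 1)
    return word[:i] + word[i + 1:]


def add(word: str, rng: random.Random) -> str:
    """Insert one random letter between the first and last characters."""
    if len(word) < 2:
        return word
    i = rng.randrange(1, len(word))
    return word[:i] + rng.choice(LETTERS) + word[i:]


def key(word: str, rng: random.Random) -> str:
    """Replace one internal character with a neighbouring keyboard key."""
    positions = [i for i in range(1, len(word) - 1) if word[i] in KEYBOARD_NEIGHBOURS]
    if not positions:
        return word
    i = rng.choice(positions)
    return word[:i] + rng.choice(KEYBOARD_NEIGHBOURS[word[i]]) + word[i + 1:]


ATTACKS = {"swap": swap, "drop": drop, "add": add, "key": key}


def attack(word: str, kind: str, rng: random.Random) -> str:
    """Apply one attack; 'all' picks one of the four attacks at random."""
    if kind == "all":
        kind = rng.choice(sorted(ATTACKS))
    return ATTACKS[kind](word, rng)


if __name__ == "__main__":
    rng = random.Random(0)
    for kind in ("swap", "drop", "add", "key", "all"):
        print(kind, attack("adversarial", kind, rng))
```

Passing an explicit `random.Random` keeps the perturbations reproducible, which helps when comparing models on the same attacked test set.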

Using the above perturbations to generate synthetic training data helps defend against these attacks: the approach recovered over 76% of the original accuracy.
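The augmentation step described above amounts to pairing corrupted text with its clean original so that a word recognizer can learn to map one to the other. The sketch below generates such pairs. The (noisy, clean) token-list format, the per-word corruption probability, the number of copies and all function names are assumptions for illustration, not the paper's pipeline. Any attack function with the same `(word, rng)` signature can be passed as `perturb`.

```python
import random
from typing import Callable, List, Tuple


def drop_internal(word: str, rng: random.Random) -> str:
    """Default perturbation: delete one internal character (first/last kept)."""
    if len(word) < 3:
        return word
    i = rng.randrange(1, len(word) - 1)
    return word[:i] + word[i + 1:]


def make_training_pairs(
    sentences: List[str],
    perturb: Callable[[str, random.Random], str] = drop_internal,
    copies: int = 3,
    word_prob: float = 0.5,
    seed: int = 0,
) -> List[Tuple[List[str], List[str]]]:
    """Create (noisy_tokens, clean_tokens) pairs for training a word recognizer.

    Each clean sentence is corrupted `copies` times; each word is perturbed
    independently with probability `word_prob`.
    """
    rng = random.Random(seed)
    pairs = []
    for sentence in sentences:
        clean = sentence.split()
        for _ in range(copies):
            noisy = [perturb(w, rng) if rng.random() < word_prob else w for w in clean]
            pairs.append((noisy, clean))
    return pairs


if __name__ == "__main__":
    for noisy, clean in make_training_pairs(["the movie was surprisingly good"]):
        print(" ".join(noisy), "->", " ".join(clean))
```

Keeping `word_prob` below 1 leaves some words clean, so the recognizer also learns to pass correctly spelled words through unchanged.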

Model performance comparison (Pruthi et al. 2019)


Take Away

- Data augmentation cannot replace real training data. It only helps generate synthetic data that makes the model more robust.

- Do not generate synthetic data blindly. Understand your data's patterns and select an appropriate way to increase the training data volume.

- The Drop, Add and Key methods are available in the nlpaug package (version 0.0.1 and later), while the Swap method is available from version 0.0.4. You can generate augmented data in a few lines of code.

Like to learn?

I am a Data Scientist in the Bay Area, focusing on the state of the art in Data Science and Artificial Intelligence, especially NLP and platform-related work. Feel free to connect with me on LinkedIn or GitHub.

Further Reading

- Data Augmentation in NLP

- Data Augmentation for Text

- Data Augmentation for Audio

- Data Augmentation for Spectrogram

Reference

- C. Szegedy, W. Zaremba, I. Sutskever, J. Bruna, D. Erhan, I. Goodfellow and R. Fergus. Intriguing properties of neural networks. 2013.

- D. Pruthi, B. Dhingra and Z. C. Lipton. Combating Adversarial Misspellings with Robust Word Recognition. 2019.

“Does your NLP model able to prevent adversarial attack?” was originally published in Hacker Noon on Medium.
