
It is commonly assumed that algorithms make decisions faster and better than humans. It is also assumed that they can work tirelessly, learn from mistakes, and get better over time. That is the ideal, and it is typically true. As long as it holds, everything goes smoothly. When the assumption fails and things go astray, the blame game begins!

For example, in the world of high-speed trading, ML and AI are being used aggressively to recommend and execute trades, with the goal of outperforming human hedge fund managers by an order of magnitude.

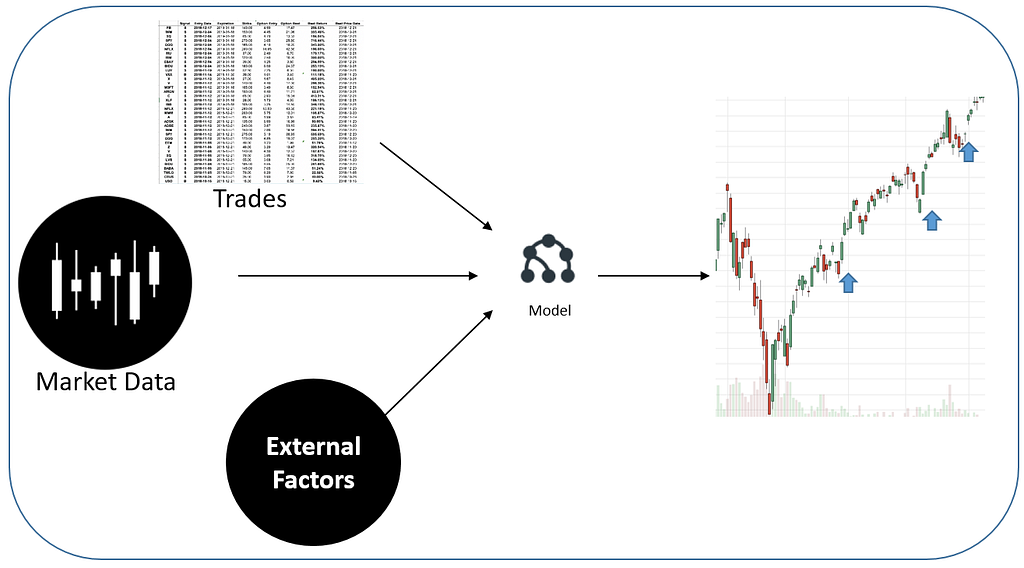

These algorithms are designed to look for time-sensitive patterns, evaluate their win rates and risk-reward ratios, and exploit those patterns with fast execution. Although the patterns are mined automatically, a human still reviews the risk involved and the validity of the patterns.
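To make the win-rate and risk-reward ratio mentioned above concrete, here is a minimal sketch of how they might be computed from a pattern's historical trade results. The helper `pattern_stats`, the `PatternStats` type, and the added expectancy figure are illustrative assumptions, not something the article or any particular trading system defines.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class PatternStats:
    win_rate: float     # fraction of trades that made money
    risk_reward: float  # average win divided by average loss (both positive)
    expectancy: float   # average profit per trade, in the units of the input


def pattern_stats(pnls: Sequence[float]) -> PatternStats:
    """Summarize the profit/loss history of one pattern (hypothetical helper)."""
    wins = [p for p in pnls if p > 0]
    losses = [-p for p in pnls if p < 0]  # stored as positive amounts
    if not wins or not losses:
        raise ValueError("need at least one winning and one losing trade")

    win_rate = len(wins) / len(pnls)
    loss_rate = len(losses) / len(pnls)
    avg_win = sum(wins) / len(wins)
    avg_loss = sum(losses) / len(losses)

    # Expectancy: what the pattern earned per trade on average.
    expectancy = win_rate * avg_win - loss_rate * avg_loss
    return PatternStats(win_rate, avg_win / avg_loss, expectancy)


if __name__ == "__main__":
    # Made-up results for four trades, purely for illustration.
    print(pattern_stats([100.0, -50.0, 200.0, -50.0]))
```

A pattern with a low win rate can still be worth trading if its risk-reward ratio is high enough, which is why the two numbers are usually read together. This is also the kind of summary a human reviewer could check before a pattern goes live.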

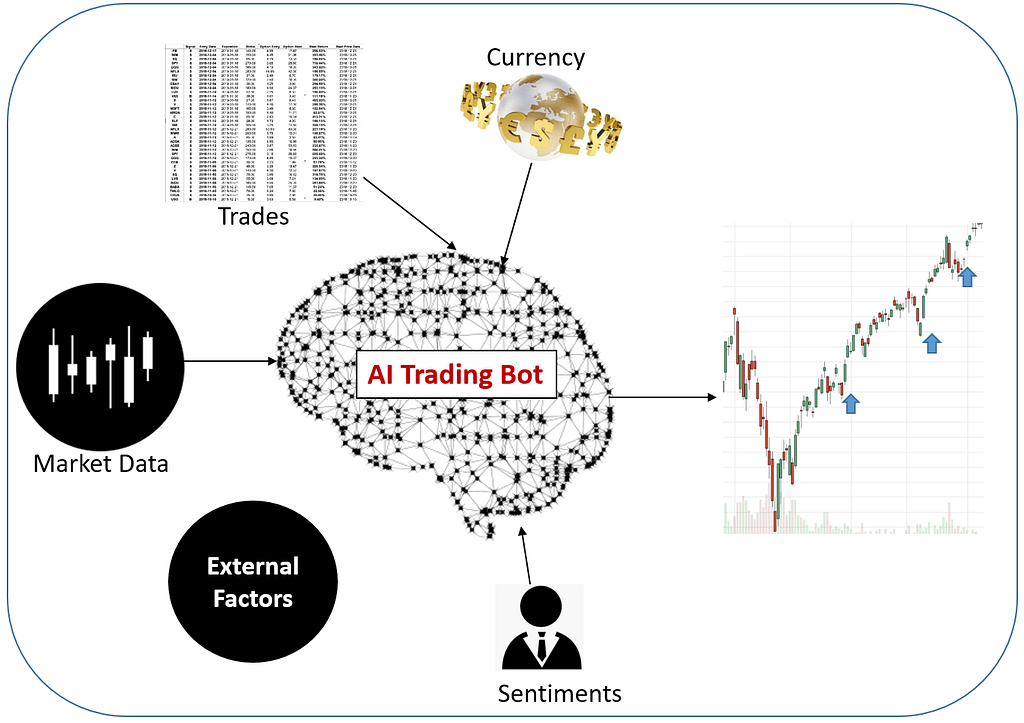

As the trend moves toward AI, the system not only learns patterns (as ML does) but also improves from experience and starts to reason, much like a human, about the best course of action. It acquires knowledge and applies it in a way similar to how a human brain works. At some point, as the AI develops a keen and powerful trading mind of its own, it will become difficult for anyone to understand why it behaves and decides the way it does.

It could start trading the same instrument, or several instruments, on both the long and short side in different accounts to exploit short-term opportunities, and hedge those positions with other related instruments. As it learns more, it may start to execute very complex multi-stage strategies that are impossible for a human mind to comprehend.

All of this is great as long as the strategy continues to make money. But what happens when the strategy backfires and starts to lose, either because the market changes or because another AI bot plays a counter-game and outsmarts the agent being used?

Who is responsible for the loss made by the bot? That remains an open question. Machine learning learns from its input data and parameters, so if the input data is biased, the predictions will be affected.

AI, on the other hand, learns, adapts, and changes its strategy based on what happens. In such a situation, who is responsible for the decisions it makes? Where are the checks and balances? You are dealing with a situation where you have no idea of the hypothesis behind a given long or short position. There is also the question of how much money is invested and how it is diversified, decisions that can be made either by the agent or by a human.

This is an area with no clear answers. As more and more of these automated money managers expand their offerings, we will start to see some real pain, and that pain will create a strong drive for algorithmic trading compliance.

Summary: Continuous monitoring using ethical metrics

All of this points to the need for creative compliance: validating that the AI solution is behaving correctly and is not going rogue. It is not a one-time process. It has to be done continuously, because the AI bot keeps learning and adapting every day as it improves against its success metrics. All the metrics we have today are success metrics that measure the performance of predictions. We need new ethical metrics that measure whether the model is behaving correctly and ethically.
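As a rough illustration of what continuous monitoring could look like, the sketch below runs a daily check on the bot's behaviour rather than on its prediction accuracy. The article does not define any specific ethical metric, so everything here is an assumption made for the example: the `Limits` thresholds, the choice of checks (drawdown, gross exposure, and holding the same instrument long and short across accounts), and all function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

# (account, instrument, signed position value); negative means short.
Position = Tuple[str, str, float]


@dataclass(frozen=True)
class Limits:
    """Hypothetical limits; real values would come from a compliance policy."""
    max_drawdown: float = 0.10       # tolerated peak-to-trough loss, as a fraction
    max_gross_exposure: float = 2.0  # sum of |position value| divided by equity


def max_drawdown(equity_curve: Sequence[float]) -> float:
    """Largest peak-to-trough decline; assumes a non-empty, positive curve."""
    peak = equity_curve[0]
    worst = 0.0
    for value in equity_curve:
        peak = max(peak, value)
        worst = max(worst, (peak - value) / peak)
    return worst


def opposing_positions(positions: Sequence[Position]) -> List[str]:
    """Instruments that are held long somewhere and short somewhere else."""
    longs = {inst for _, inst, value in positions if value > 0}
    shorts = {inst for _, inst, value in positions if value < 0}
    return sorted(longs & shorts)


def daily_check(equity_curve: Sequence[float],
                positions: Sequence[Position],
                limits: Limits = Limits()) -> List[str]:
    """Return human-readable alerts; an empty list means nothing was flagged."""
    alerts = []
    drawdown = max_drawdown(equity_curve)
    if drawdown > limits.max_drawdown:
        alerts.append(f"drawdown {drawdown:.1%} exceeds limit")
    gross = sum(abs(value) for _, _, value in positions) / equity_curve[-1]
    if gross > limits.max_gross_exposure:
        alerts.append(f"gross exposure {gross:.2f}x exceeds limit")
    for inst in opposing_positions(positions):
        alerts.append(f"{inst} is held both long and short across accounts")
    return alerts
```

An alert here does not mean the bot has done anything wrong. It only routes the case to a human reviewer, which keeps a person in the loop for the question of accountability raised above.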

Who is accountable when algorithms get it wrong? was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
