Photo by imgix on Unsplash

About a year ago, Forbes was reporting that we are creating about 2.5 quintillion bytes of data every day. That’s a two, a five, and SEVENTEEN zeros! By 2020, every person on earth will create about 1.7 MB of data per second.

Let’s look at that for a second.

1.7 MB * 86,400 (the number of seconds in a day) = 146,880 MB of data per person, per day!

Now let’s add it all up to see how much new data the whole world will create each day, sometime next year.

143.4375 GB per person, per day (146,880 MB divided by 1024) * 7,584,821,144 (the estimated population in 2020) = 1,087,947,782,842.5 GB of new data ingested.

Every.

Day.

Bruh!
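To double-check the arithmetic above, here is a small Python sketch that reproduces it. It uses `Decimal` so that 1.7 * 86,400 doesn’t pick up floating-point noise, and, like the article, it divides by 1024 to go from MB to GB (strictly speaking, that gives GiB). The inputs are the article’s projected figures, not measurements.

```python
from decimal import Decimal

# Figures quoted in the article; both are projections for 2020, not measurements.
MB_PER_PERSON_PER_SECOND = Decimal("1.7")
POPULATION_2020 = 7_584_821_144
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400
MB_PER_GB = 1024                # binary convention, as used in the article


def daily_mb_per_person(mb_per_second: Decimal) -> Decimal:
    """MB one person produces in a day."""
    return mb_per_second * SECONDS_PER_DAY


def worldwide_daily_gb(mb_per_second: Decimal, population: int) -> Decimal:
    """GB the whole population produces in a day."""
    per_person_gb = daily_mb_per_person(mb_per_second) / MB_PER_GB
    return per_person_gb * population


per_person_mb = daily_mb_per_person(MB_PER_PERSON_PER_SECOND)
per_person_gb = per_person_mb / MB_PER_GB
world_gb = worldwide_daily_gb(MB_PER_PERSON_PER_SECOND, POPULATION_2020)

print(f"{per_person_mb} MB per person per day")
print(f"{per_person_gb} GB per person per day")
print(f"{world_gb} GB worldwide per day")
```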

Come to think about it, the title of the article should be:

Where do you store 1,087,947,782,842.5 GB?

I work for Dashbird, and we handle a lot of data on the daily, but we don’t come close to even a fraction of that number, and we constantly need to upgrade our infrastructure to handle more data as we grow. As you can imagine, this number has been stuck in my head ever since I realized just how much data will be floating around.

Ok, now you’re thinking: where does it all go, who has to sift through it, and how much of that data is images of genitalia?

Oh, so you weren’t thinking that? Maybe it’s just me…

But the other two questions remain: how is this data generated, and who processes it?

Who creates all that data?

IoT is by far the biggest culprit for all the data that’s created. By 2020 we’ll have about 30 billion connected devices: 30 billion toasters, fridges, Siris and Android-powered toilets outputting millions and millions of gigs of data.

It might sound hard to believe, and 30 billion devices is a BIG number, but have a look around you. Sitting in your coffee shop drinking your favorite $8 cup of coffee, how many iPhones, MacBooks, smartphones, Teslas and Hue lights do you see? What about all the search queries happening at any given moment, the thousands of Uber rides, or every social media interaction? Or that stupid Roomba your dog is, for some weird reason, terrified of, even though the dog weighs 80 pounds. It all adds up.

And while personal IoT devices, like the ones we are used to, have only really started to grow exponentially over the past few years, IoT has played a growing role in manufacturing, agriculture and healthcare (among many other sectors) for years, and it’s only picking up speed.

Think about it: you always hear about people losing jobs to machines, or about factories automating their processes and letting people go. Those machines produce a HUGE share of the data I’m talking about today.

Where does it all go?

To oversimplify it, all that data goes to the cloud.

But if I were to attempt an explanation, I would have to put the spotlight on the biggest Big Data culprits: Google, Facebook, Apple, Microsoft, Amazon, IBM, Cisco, etc. The list could go on for quite a while, so I’ll stop here.

The way they handle big data storage is absolutely fascinating. Most rely on services like AWS Glacier or Azure Data Lake Storage, which under the hood run on vast networks of servers built around direct-attached storage (DAS). These setups are also known as hyperscale computing environments.

They use a blend of PCIe flash storage, to cut latency as much as possible, and HDDs for redundancy. If one machine breaks, they usually swap out the whole unit, and because the data is always backed up on a separate machine, nothing is lost. On top of this hardware they run software such as Apache Hadoop, NoSQL databases like Cassandra, and query engines like Presto, and they build their analytics systems on top of those.
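The idea of always keeping data backed up on a separate machine is usually implemented as replication: every block of data is written to several distinct machines, so losing one of them loses nothing. The sketch below is a generic illustration, not how any particular company does it. It assumes a replication factor of 3 (HDFS, for example, defaults to 3) and uses rendezvous hashing to decide which hypothetical nodes hold each block.

```python
import hashlib

REPLICATION_FACTOR = 3  # assumption; HDFS, for example, defaults to 3 copies


def place_replicas(block_id, nodes, k=REPLICATION_FACTOR):
    """Choose k distinct nodes for a block via rendezvous (highest-random-weight) hashing."""
    def weight(node):
        digest = hashlib.sha256(f"{block_id}:{node}".encode()).hexdigest()
        return int(digest, 16)
    # The k nodes with the highest weight for this block hold its copies.
    return sorted(nodes, key=weight, reverse=True)[:k]


def live_replicas(placement, failed_nodes):
    """For every block, keep only the copies that sit on machines still running."""
    return {
        block: [node for node in replicas if node not in failed_nodes]
        for block, replicas in placement.items()
    }


# Hypothetical cluster: 8 machines holding 100 blocks.
nodes = [f"node-{i}" for i in range(8)]
placement = {f"block-{i}": place_replicas(f"block-{i}", nodes) for i in range(100)}

# Break any single machine: every block still has k - 1 live copies,
# so the failed unit can be swapped out and its data re-copied from the survivors.
for dead in nodes:
    survivors = live_replicas(placement, {dead})
    assert all(len(copies) >= REPLICATION_FACTOR - 1 for copies in survivors.values())
print("no block lost after any single-machine failure")
```

Rendezvous hashing is just one convenient choice here: every node can compute the same placement independently, and removing a node only moves the blocks that lived on it.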

Data handling

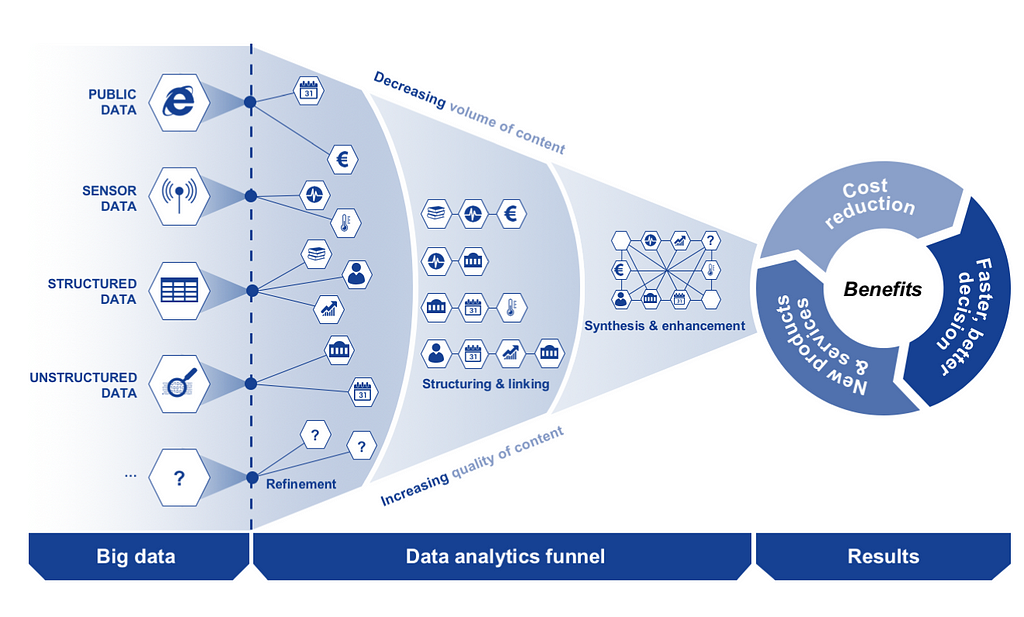

As all this data comes in, it is usually analyzed in real time. This is another fascinating aspect of the process: storing the data is hard enough, but having the compute power to analyze it is a whole other level.

The toughest task, however, is running fast (low-latency) or real-time ad-hoc analytics on a complete big data set. In practice, that means scanning terabytes (or even more) of data within seconds, which is only possible when the data is processed with high parallelism.
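A quick back-of-the-envelope calculation shows why parallelism is unavoidable. The sketch below assumes each worker can read about 500 MB/s (a hypothetical figure, roughly one fast SSD) and that the work splits perfectly evenly across workers, which real systems never quite achieve.

```python
TB = 10**12  # decimal terabyte, in bytes
MB = 10**6   # decimal megabyte, in bytes


def workers_needed(data_bytes, target_seconds, bytes_per_second_per_worker):
    """Minimum number of workers that must scan in parallel to read data_bytes in target_seconds."""
    bytes_per_worker = target_seconds * bytes_per_second_per_worker
    # Integer ceiling division: a partial share of the work still needs a whole worker.
    return -(-data_bytes // bytes_per_worker)


def single_worker_seconds(data_bytes, bytes_per_second_per_worker):
    """How long one worker alone would take to scan the same data."""
    return data_bytes / bytes_per_second_per_worker


# Hypothetical target: scan 10 TB in 10 seconds at an assumed 500 MB/s per worker.
print(workers_needed(10 * TB, 10, 500 * MB))
print(single_worker_seconds(10 * TB, 500 * MB) / 3600)  # hours for a single worker
```

Under those assumptions, scanning 10 TB in 10 seconds takes 2,000 workers reading in parallel, while a single worker would need more than five hours.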

There are many architectures for processing real-time data, and there’s no one perfect solution, but some stand out.

Serverless architecture has brought about a revolution in this space, and for good reason. Besides the cool factor, serverless has a lot of benefits for big data: you don’t have to manage the infrastructure, a task that is now fully the service provider’s responsibility; you only pay for what you use; and you get on-demand scaling that responds quickly to large influxes of data.
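To make the point about not managing infrastructure concrete, here is a minimal sketch of an AWS Lambda function in Python triggered by a Kinesis stream. The `handler(event, context)` entry point and the base64-encoded `record["kinesis"]["data"]` field follow Lambda’s Kinesis event format; the JSON payload and its `device_id` field are hypothetical. The code only handles one batch of records; when traffic spikes, Lambda, not this code, decides how many invocations run concurrently.

```python
import base64
import json
from collections import Counter


def handler(event, context):
    """Count readings per device in one batch of Kinesis records."""
    per_device = Counter()
    for record in event.get("Records", []):
        # Kinesis hands Lambda each payload base64-encoded.
        payload = json.loads(base64.b64decode(record["kinesis"]["data"]))
        per_device[payload["device_id"]] += 1  # hypothetical payload field
    # A real pipeline would persist these aggregates; returning them keeps the sketch testable.
    return {"records": sum(per_device.values()), "per_device": dict(per_device)}


def fake_kinesis_record(payload):
    """Build a minimal stand-in for one Kinesis record (only the field the handler reads)."""
    data = base64.b64encode(json.dumps(payload).encode()).decode()
    return {"kinesis": {"data": data}}


# Local usage example with a hand-built event instead of a real stream.
sample_event = {
    "Records": [
        fake_kinesis_record({"device_id": "fridge-42", "temp_c": 4}),
        fake_kinesis_record({"device_id": "fridge-42", "temp_c": 5}),
        fake_kinesis_record({"device_id": "toaster-7", "state": "on"}),
    ]
}
print(handler(sample_event, None))
```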

So how long do they hold on to my data?

While they take steps to anonymize your data after a while, your data NEVER gets fully deleted. The EU has taken some steps to ensure that you, as an EU citizen, have some say in the matter, but it’s way too early to tell whether the GDPR has any real merit, since there are still loopholes companies can use to circumvent it.

Nate Anderson, at Ars Technica, reports:

Search data is mined to “learn from the good guys,” in Google’s parlance, by watching how users correct their own spelling mistakes, how they write in their native language, and what sites they visit after searches. That information has been crucial to Google’s famously algorithm-driven approach to problems like spell check, machine language translation, and improving its main search engine. Without the algorithms, Google Translate wouldn’t be able to support less-used languages like Catalan and Welsh.

Data is also mined to watch how the “bad guys” run link farms and other Web irritants so that Google can take countermeasures.

Google eventually anonymizes the data:

The last octet of the IP address is wiped after nine months, which means there are 256 possibilities for the IP address in question. After 18 months, Google anonymizes the unique cookie data stored in these logs.

This isn’t especially ambitious; Europe’s data protection supervisors have called for IP anonymization after six months and competing search engines like Bing do just that (and Bing removes the entire IP address, not just the last octet). Yahoo scrubs its data after 90 days.
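The last-octet scheme in the quote is easy to illustrate with Python’s standard `ipaddress` module: zeroing the last 8 bits of an IPv4 address leaves a /24 network, and any of its 256 addresses could have been the original. The helper names are hypothetical, and the sketch covers IPv4 only.

```python
import ipaddress


def anonymize_last_octet(ip: str) -> str:
    """Wipe the last octet of an IPv4 address, e.g. 203.0.113.57 -> 203.0.113.0."""
    # strict=False lets us pass a host address and get its enclosing /24 network.
    network = ipaddress.IPv4Network(f"{ip}/24", strict=False)
    return str(network.network_address)


def possible_originals(ip: str) -> int:
    """Number of full addresses that map to the same anonymized value."""
    return ipaddress.IPv4Network(f"{ip}/24", strict=False).num_addresses


address = "203.0.113.57"  # documentation-range example address
print(anonymize_last_octet(address))
print(possible_originals(address))
```

Removing the entire address, as the quote says Bing does, leaves no prefix at all, which is why it is the stronger form of anonymization.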

The new oil?

Data has become a commodity, just like oil, gold, and lumber, and it’s growing in value as we produce and use more and more of it. Since the market is still young, you won’t see data traded on a stock exchange anytime soon. Then again, companies whose entire business relies on buying and selling user data are publicly listed, so one might argue that we are already doing that to some extent.

Some may try to vilify Big Data companies, but I’m not one of them. In fact, I’m among the people anxiously waiting to see what’s in store for us in the coming years and how ‘data’ will improve our daily lives.

Do You Know What Happens to Your Data? was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.

Disclaimer

The views and opinions expressed in this article are solely those of the authors and do not reflect the views of Bitcoin Insider. Every investment and trading move involves risk - this is especially true for cryptocurrencies given their volatility. We strongly advise our readers to conduct their own research when making a decision.