Img Source: https://blog.ladder.io/ab-testing/

I have been a digital analytics and optimization consultant for the last 4–5 years. After more than 100 A/B tests with different companies, I have gained a few valuable insights into managing a Digital Optimization Program. There’s a repeatable process of ideation that is rarely discussed in regular A/B testing articles. This article is about those learnings.

Let’s look at some of those challenges and solutions in the following scenarios.

Scenario 1: Under-testing of Less Prominent Features

A website, even one built for a single product, relies on many supporting elements to deliver a complete experience. Together, those elements shape the website’s key experiences and its conversion metrics.

Some of these elements are highly visible, while others are easy to miss if you’re not focused enough on your conversion.

Landing pages and Request for Information (RFI) forms are often the big components that get tested most, especially once tests of CTA text, color, and hero images are exhausted. This is especially true for B2B and B2C companies that are driven by large single purchases, such as car dealerships, education, overseas travel and so on.

So the first instinct of many teams is to test for lifts in RFI form KPIs. Other aspects like a menu, information about products, or a random download button tend to be overlooked. But teams that follow a cyclical approach to site conversion can consistently optimize their organization’s website over a period of time.

Here’s an example of a small test cycle:

1. Incremental RFI tests (2–3 tests at most): If the very first test gives you a lift, great! Implement the winning change and move on to the next part of the website. If not, still move on to another section after 2–3 tests, and return later to test new RFI variations.

2. Optimizing the visitor journey after a key KPI conversion: No matter your business type, there can be better ways to bid goodbye to your visitor, especially when a key conversion has happened. Visitors are definitely going to leave your website, but you can always leave them with an enhanced experience. How you plan their exit, before or after a purchase, can decide whether they return. For example, in the case of an automaker, imagine an RFI has been submitted or a meeting has been set up.

Now you can offer them a review video of the car in the same visit or in an email later. You can also inform them about potential freebies at local dealerships or other unique features of the car. Usually, there’s good potential here for every industry and business type.

3. Testing the not-so-visible components of your website: A/B testing is not limited to gauging visitors’ reactions to the visible aspects of the website. An optimization team can help shape the navigation structure of your website. Testing website pathing, menus, and navigation options can make engagement simpler for your visitors.

Scenario 2: Book-keeping of Campaigns

Let’s take a leaf from accounting and maintain a profit-loss statement of all tests done quarterly or annually!

It’s very common to test, implement and forget. It is rare for teams to maintain a record of the tests that were conducted over the last 6 months or year. A/B tests are usually short-term projects. But a combined reflection on all test campaigns from the last 6 months or year can yield an enormous amount of learning. It also minimizes the reliance on the next rock-star idea and gives the marketing team the ability to plan for ‘seasonal’ tests.

It also helps in sequencing the components that you want to test, rather than testing in an unorganized way or over-testing one idea.

To do this, a simple proactive approach of recording the final result of each test can go a long way. You can use an Excel sheet or any shared document to record the result of each test. It costs nothing except an extra manual step and some discipline. In an Excel sheet like the one shown below, one can easily rename columns to record the performance of past campaigns, sample sizes, running duration, and other key factors.

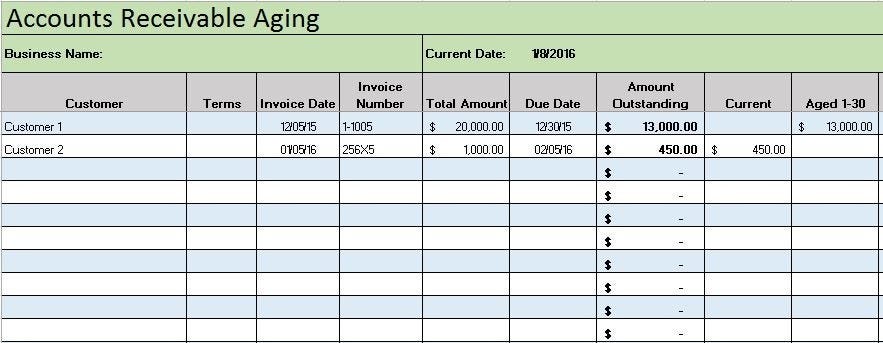
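For teams that prefer a script over a hand-edited sheet, the same record-keeping can be sketched in a few lines of Python. This is only an illustration: the `ExperimentRecord` class, its field names, and the `to_csv` helper are all assumptions made for this example and are not part of any tool mentioned in this article. Relative lift is computed here as the difference between variant and control conversion rates, divided by the control rate.

```python
import csv
import io
from dataclasses import dataclass, asdict, fields
from datetime import date


@dataclass
class ExperimentRecord:
    """One row of the test log. All field names are illustrative."""
    name: str
    component: str            # e.g. "RFI form", "navigation"
    start: date
    end: date
    control_visitors: int
    control_conversions: int
    variant_visitors: int
    variant_conversions: int
    decision: str             # e.g. "implemented", "rejected", "inconclusive"

    @property
    def duration_days(self) -> int:
        # Inclusive of both the start and the end day.
        return (self.end - self.start).days + 1

    @property
    def relative_lift(self) -> float:
        # Assumes the control recorded at least one visitor and one conversion.
        control_rate = self.control_conversions / self.control_visitors
        variant_rate = self.variant_conversions / self.variant_visitors
        return (variant_rate - control_rate) / control_rate


def to_csv(records):
    """Serialise the log as CSV text that any spreadsheet can open."""
    out = io.StringIO()
    columns = [f.name for f in fields(ExperimentRecord)]
    columns += ["duration_days", "relative_lift"]
    writer = csv.DictWriter(out, fieldnames=columns)
    writer.writeheader()
    for record in records:
        row = asdict(record)
        row["duration_days"] = record.duration_days
        row["relative_lift"] = round(record.relative_lift, 4)
        writer.writerow(row)
    return out.getvalue()
```

Appending one `ExperimentRecord` per finished test and exporting the log once a quarter gives the combined view described above, with duration and lift derived rather than typed in by hand.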

Scenario 3: Testing Calendar

Having a testing calendar is the other side of book-keeping of the tests. As the team begins to mature, they usually have some sort of calendar planned for tests. However, the challenge lies in following it with discipline.

Two common reasons teams deviate from the calendar are:

1. Change in Management or Leadership emphasis: This often happens based on some new performance report. A new feature launch, or an existing section that falters, can change testing priorities immediately. In some cases, a more flexible approach is required. We must let go of some pre-decided tests to make way for ones based on new learnings.

However, it’s important to remember not to abandon all the test ideas that were decided earlier as part of a larger strategy. An internal priority order can be maintained, and low-priority ideas can be phased out for new ones.

2. Constraints by IT Resources: This is more of an operational issue. It can be ‘planned’ effectively in mid-size to large organizations, which usually have a dedicated IT resource to work on site optimization. If not, you need to make a case to hire one for the routine. Popular A/B testing tools like Optimizely, VWO, and ABTasty can help minimize the dependency on IT. But there can still be many tests that need IT help. As an indicator, tests that involve pathing ideas, menus, integrating FAQs, or chat, among other things, may require more IT help than a standard tool can provide.
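The idea of keeping an internal priority order while phasing out low-priority tests can be sketched as a small re-planning helper. Everything here is hypothetical: the `PlannedTest` fields, the 1-is-highest priority scale, and the fixed number of `slots` per quarter are assumptions chosen for illustration, not a prescribed process.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlannedTest:
    name: str
    priority: int          # 1 = highest; the scale is an assumption
    needs_it: bool = False  # flag tests that depend on IT resources


def replan(calendar, new_ideas, slots):
    """Merge new ideas into the calendar and keep the top `slots` by priority.

    Returns (kept, phased_out). sorted() is stable, so on a priority tie
    the tests already on the calendar stay ahead of the new ideas.
    """
    ranked = sorted(list(calendar) + list(new_ideas), key=lambda t: t.priority)
    return ranked[:slots], ranked[slots:]
```

With three slots, a calendar of priorities 1, 3 and 2, and one new idea at priority 2, the priority-3 test is the one phased out, while the rest of the earlier plan survives.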

Scenario 4: Analysis of Campaign

Analysis is an over-discussed term, yet under-utilized in practice. It’s important to be neutral in the analysis process and thorough at the same time.

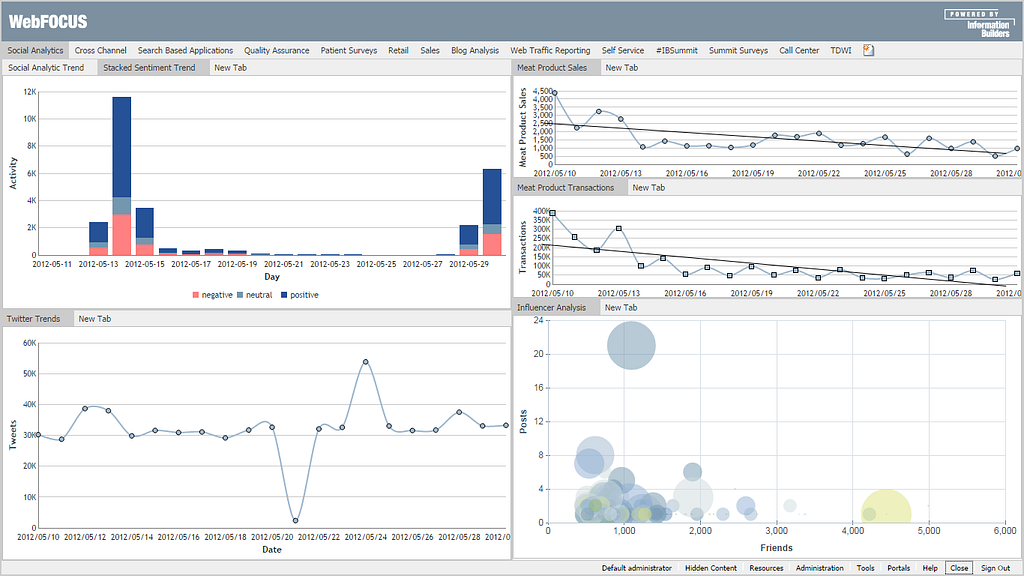

Image Credit- http://www.informationbuilders.nl/blog/dan-grady/18573

I have found that the analysis of an A/B test seldom finishes in one neat report. When the results are out, different managers can ask for several breakdowns and segmentation reports. This can put an analyst in a difficult spot, stuck in an endless cycle of reports that do not help drive a decision.

One approach I have found useful in limiting this is to come up with a ‘Measurement Plan’ before the start of the test. Collaboration is fundamental to an analyst’s job. Depending on how your organization works, you can share a prospective measurement plan with the relevant people to solicit their feedback. Even if you are working by yourself, a plan on paper gives you something to refer back to when actually analyzing the result, and it avoids re-work.
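A measurement plan does not need special tooling; it mainly needs to be written down before the test starts. As a minimal sketch, it could be captured as a small structure like the one below. The `MeasurementPlan` class, its fields, and the `covers` check are hypothetical names invented for this example.

```python
from dataclasses import dataclass, field


@dataclass
class MeasurementPlan:
    """What will be measured and reported, agreed before the test starts."""
    test_name: str
    hypothesis: str
    primary_metric: str
    secondary_metrics: list = field(default_factory=list)
    segments: list = field(default_factory=list)
    decision_rule: str = ""

    def covers(self, metric, segment=None):
        """True if a requested report was part of the agreed plan."""
        metric_ok = (
            metric == self.primary_metric or metric in self.secondary_metrics
        )
        segment_ok = segment is None or segment in self.segments
        return metric_ok and segment_ok
```

When a new breakdown is requested after the results are out, checking it against the plan makes it explicit whether it was agreed up front or is an exploratory extra.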

Scenario 5: Taking a Decision & Post Implementation Monitoring of the Result

Customizing industry standards to your own organization remains the key. Also, the last 5% of the testing process is not finished until you monitor the results.

Image Credit: https://smartbear.com/blog/test-and-monitor/web-performance-monitoring-101/

There’ll be times when the result of a test is not clear and there is a round of voting on recommendations. In such situations, it’s usual for everyone to recommend and advocate for past industry standards. While they are a good reference point, I believe they shouldn’t be treated as a holy grail, simply because they are often too old to be relevant. Also, what applies to your organization can be different from what has been recommended for an industry. Hence, understanding your immediate business objective and your own audience, and customizing a recommendation accordingly, is the key in such situations.

Finally, whatever decision gets implemented, do monitor! It’s the simplest, most intuitive and yet the most easily overlooked part of the process. It can feel like the test is complete and it is time to prep for the next one. But the process is still just 95% complete, and the last 5% remains.

Normally, winning variations behave as expected when deployed to 100% of traffic, but occasionally the performance might not be that good, for a multitude of reasons. So the result needs to be monitored on a consistent basis after the 100% launch, with updates shared with all stakeholders.
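One simple way to make this monitoring routine is to compare the live conversion rate against the rate the winning variation showed during the test. The sketch below is an assumption-laden illustration, not a standard from any testing tool: the function name `post_launch_check` is made up, and it uses a normal-approximation (Wald) interval around the observed rate, which is reasonable only for large visitor counts.

```python
import math


def post_launch_check(expected_rate, visitors, conversions, z=1.96):
    """Compare the live conversion rate with the rate seen during the test.

    Builds a normal-approximation interval around the observed rate;
    z=1.96 corresponds to roughly 95% confidence. The variation is
    flagged as underperforming when the expected rate lies above the
    upper bound of that interval.
    """
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    observed = conversions / visitors
    margin = z * math.sqrt(observed * (1 - observed) / visitors)
    low, high = observed - margin, observed + margin
    return {
        "observed_rate": observed,
        "interval": (low, high),
        "underperforming": expected_rate > high,
    }
```

Running a check like this weekly after launch, and sharing the output with stakeholders, covers the remaining 5% of the process without much extra effort.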

Conclusion

There are many other points, but I’ll rest my case here. Hopefully, some of the points discussed above will resonate with you and help you in your ongoing testing process. Many of you will have different opinions, and they might be more effective than the ones discussed in this article. So it would be great if you could share any feedback or process ideas that have consistently worked for you. I would love to discuss the subject and learn from agreeing as well as dissenting points of view.

After all, that’s what A/B test campaigns are all about!

Pankaj Singh

P.S: If you’re interested in free online courses in technology and design, you can visit this platform.

Intermediate Guide to A/B Testing- Happy Web Optimization was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
