Latest news about Bitcoin and all cryptocurrencies. Your daily crypto news habit.

How we Reduced the Time Complexity from 18 days to 4.5 minutes.

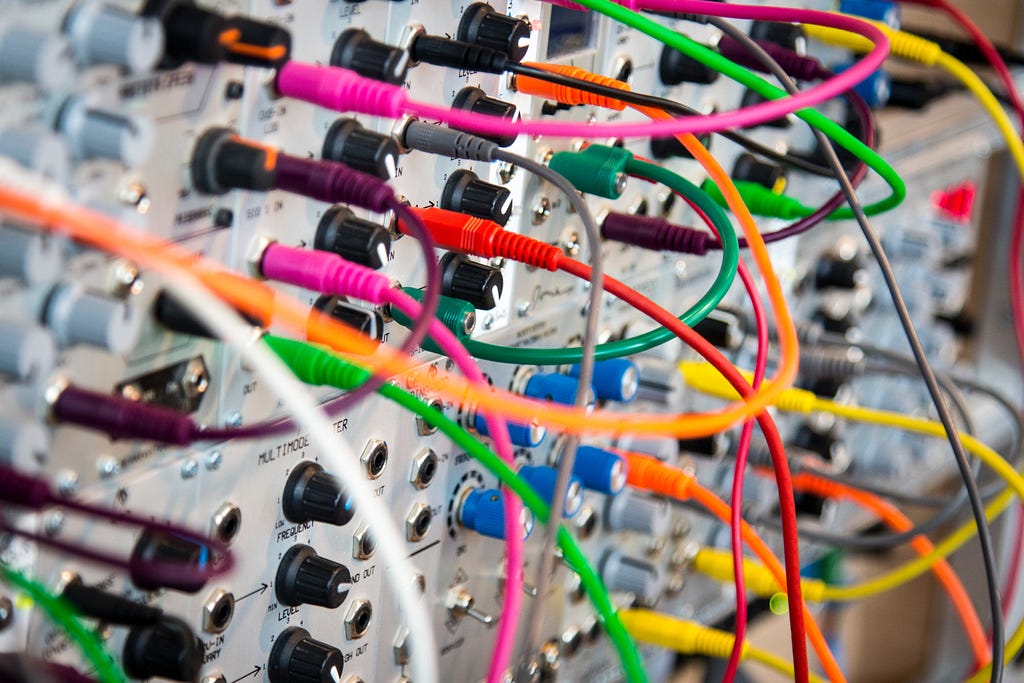

Photo on Unsplash

Photo on Unsplash

Garbage is literally everywhere. It is no different from computers. If we think about it, we get a lot of garbage inside a purse even we didn’t pay for it.

Data processing is generally considered as extracting a pattern from a variety of text using automated scripts and requests. Great commands for data mining using python can be learned from here

Data processing is often considered a pain for many persons. Extracting tags out of the file of smaller size takes efforts but is relatively easier when it is compared with the corpus containing a million entries.

By the way, I am the curator of CodesMyth, an online platform for Simplifying code and breaking Myths.

This posts require no prior knowledge and hence is a lengthy one. So, please bear with me.

Our Situation

Our work impacts the domain of telecommunications, i.e. Phone calls and internet related stuff. So, to make it easier, when a person from anywhere in the world calls to any other person in the world, a Call Detail Record (CDR) is dumped after their conversation is done so as to charge them suitably on the basis of services availed. In our case, a CDR is a single line containing the number of fields like who called to whom, duration, arrival time, end time etc.

Photo by Mario Caruso on Unsplash

Photo by Mario Caruso on Unsplash

When a subscriber from one service provider, say A calls to another subscriber of some other service provider, say B, an LRN value is dumped in the CDR.

As per Google, Location Routing Number (LRN) is a 10-digit number in a database called a Service Control Point (SCP) that identifies a switching port for a local telephone exchange.

Basically, LRN is a unique value that is mapped to the area code and service provider of the caller.

INPUTS —

a) A corpus, say C1 [XML format], which consists of nearly 8 Million Records distributed among multiple files present in multiple directories. Each record consisting of approximately 30 entries some of which among it were Caller Number, Called Number, Originating area code, terminating area code, LRN, facility etc.

b) Another corpus, say C2 which consisted of 85 Million Entries of CDR distributed among multiple files in multiple folders. This was the Corpus which was to be corrected.

Photo by Pankaj Patel on Unsplash

Photo by Pankaj Patel on Unsplash

OUR TASK—

We were to substitute a value of a field [LRN] from corpus C1 into corpus C2 based on the called number of corpus C2.

In short, all we wanted to do was to extract a “Called Number” parameter from every single CDR of corpus C2, then searched for “LRN” parameter inside of the whole corpus C1 on the basis of the “Called Number” parameter of corpus C2 and finally substitute the LRN parameter inside of C2 for that particular CDR. This process was to be executed for nearly 85 million records.

PREVIOUS SCRIPT —

Somebody, at our organization, made a script (in C programming language) for the above task and ended up using Brute force Algorithm to implement it. Just imagine the time that would be taken for the following procedure —

1 — Extract the called number from C2.

2 — One by one, open files from the subdirectories of C1 and then search for the required LRN for a corresponding called Numbers. [8 Million Records]

3 — Once we have it, substitute LRN inside the CDR of C1 for the corresponding called number.

4 — Repeat this for 85 Million Records.

- Not to Mention a lot of unnecessary prints, redundant variables assignment, the inclusion of unnecessary libraries regarding the flow of control along with unnecessary logs that were dumped. Printing to the console can also take up a lot of unnecessary time and resource.

HOW DID WE OPTIMISE IT?

Photo by Nate Grant on Unsplash

Photo by Nate Grant on Unsplash

After running the original script for one day, it had processed nearly 0.5 GB of CDRs and the total volume of CDR being 9GB. One simple mathematical calculation tells us that it will take nearly 18–20 days provided smooth and unintruppted which is impossible provided that there are resource chrunch in most of the organizations.

We all agreed that there was no point in running this script and no one has the time to wait for such a long time. The whole process would have to be started again from scratch. Besides, the corpus contained a lot of unnecessary data and there was the possibility of the wrong substitution. If the above happened, all the work and resource utilization would have been done for nothing.

Step — 1:

Sometimes, what you’re looking for is already there.- Aretha Franklin

There was no need to read the corpus C1 again and again. It was like re-reading the holy book but like a million times. This corpus C1 existed in XML format. An XML parser already existed with us.

Here’s what we did —

- Every time we read a record in a file in Corpus C1, we searched for the XML tags “Called_Number” and “LRN”, then extracted them and printed the output into a file in the following format separated via a semicolon(“;”). We can consider (“;”) as a separator between fields.

So instead of reading an entire record, we would read a line for that record in the format illustrated below.

Called_Number;LRN

- We did the same thing with all the other records and ended up creating the output file with nearly 8 Million lines since there were 8 million Records. Let say that file is (A).

- “A” consisted of many redundancies which were expected like — 1- There were redundant characters in between the number and at the end of the numbers.2- For some Called Numbers, there were no LRN Values.3- Size of Caller number were not uniform which was to be 10. They were like garbage values printed at Called Number field.4- There were duplicate values among the files.

- To Remove them, we made the following checks while parsing the corpus C1 and creating the output file(“A”).* The string “Called_number” should be converted into an integer using “atoi()” function in C. If failed, we will not write the output to the output file(“A”). (Handling case 1)* The length of string “Called_number” should be 10. (Handling case 3)

- Once we created the output file(“A”), we edited it in vim and handled (Case 2 and Case 4) using two vim internal commands which were —

:g/;$/d [Deleting all the lines that are ending with “;”]:sort u [For sorting in ascending order and removing the duplicate lines]

Removing the duplicate lines from a file is often easy in a text editor. For eg., Atom.

Photo by Caspar Camille Rubin on Unsplash

Photo by Caspar Camille Rubin on Unsplash

Some may say, we can just write a simple program for it. But one should use an approach or tool if is already built to fix your problem instead of starting from scratch. And from the time and efforts saved, create something new on the top of your existing application/product/platform thus providing other a stepping stone and help the community grow. This is basic concept of OpenSource.

This output file (“A”) was our final file which will be used from a Corpus C1 of 8 Million Entries. The total entries which remained in it after duly processing it were merely 2.2 Million Entries.

From a whopping 8 Million records(Each record consisting of 30 entries), we narrowed down to 2.2 Million entries.

Scaled the size of Corpus = (8000000*30)/2200000 = 109 times.

which is significant if we think about it after analysing the resources available to us.

Next step was to optimize the processing of CDR of Corpus C2 —

As in the case of a CDR, we were searching for a particular key in the whole file (“A”) linearly [using Linear search]. Since our newly generated file from corpus C1 (“A”) was already sorted, we decided to implement the binary search.

Hence, converting the complexity from O(N) to O(logN).

CDRs from Corpus C2 were distributed among multiple directories each for different area code( around 252 directories). Earlier, When we were processing the CDR, we were jumping to the next directory only if the previous directory was processed.[Processing one directory at a time].

This means that the entire process was running on a single user thread. Threads are a basic concept of OS and are used when parallel processing is implemented in a software or application.

Hence we implemented multithreading on a multi-core processor. The blades on which the scripts were executing consisted of 80 cores. Hence, at parallel, after implementing the threading via shell, we were able to execute the CDRs from 80 directories simultaneously. Thus our total time was reduced by a factor of 80.

We just created a simple shell script which did just —

./script_which_process_cdr [Directory Name] [Output Directory Name] &

The next challenge was that although the processing time was reduced disastrously, each script for every directory was created with a new process ID and had no correlation whatsoever with the process ID of the process running simultaneously of another thread. Hence, the file, (“A”) from Corpus C1 was loaded into the memory for nearly 242 times which was quite the wastage of resources.

So, instead of applying the multithreading via shell, we decided to implement the multithreading in C in which our script was originally coded.

What we decided is that, once the corpus file was successfully loaded, then we would start threading. Using pthread, it was quite easy and also there was no issue with the modification of data of the file(“A”) by threads since none of the process executing included writing to the file (“A”).

Briefly summarizing, all we did was getting the output of the corpus (C1) in a text file (A) after removing certain redundancies, then loading the file into the memory of our blades into a 2-D Array. If loading into memory was successful, the binary search was used to identify and substitute the key-value pair. To make the process even faster, multi-threading was implemented and threads were created to process a large number of directories in parallel.

The whooping time of execution calculated from the time Function was

$ time process_cdr.sh <Directory of the path were CDR’s were present>$ Execution completed in 4 minutes 27 seconds.

From a whopping 18 days of processing, our processing of data was completed in just 4 minutes 27 seconds.

From the above event, I felt the cruciality of time complexity. Time is the only thing that we cannot purchase and cannot get back. From the above example, I believe that no matter how our script is coded, there is always someplace or some dimensions to optimize it, be it space or time complexity or documentation. We just need to give it some more time to analyze and think about it without any external hindrances.

Happy Coding and More power to you.

Photo by Austin Distel on Unsplash

Photo by Austin Distel on Unsplash

How we reduced the time complexity from 18 days to 4.5 minutes. was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.

Disclaimer

The views and opinions expressed in this article are solely those of the authors and do not reflect the views of Bitcoin Insider. Every investment and trading move involves risk - this is especially true for cryptocurrencies given their volatility. We strongly advise our readers to conduct their own research when making a decision.