
Making a build or buy decision at the start of any data science project can seem daunting — let’s review a


Almost every major cloud provider now offers a custom machine learning service, and computer vision is no exception: examples include Google Cloud’s AutoML Vision Beta, Microsoft Azure’s Custom Vision Preview, and IBM Watson’s Visual Recognition service.

Perhaps your team has faced this build-or-buy predicament?

From a marketing perspective, these managed ML services are positioned for companies that are just building up their data science teams or whose teams are primarily composed of data analysts, BI specialists, or software engineers (who might be transitioning to data science).

However, even small-to-mid-sized data science teams can get value from evaluating these ‘machine learning as a service’ (MLaaS) offerings. Because these vendors produce and have access to so much data, they can build and train their own machine learning models in house, and these pretrained models can reach high performance quickly. MLaaS offerings are also useful as baselines to compare against in-house models.

This post will provide you with a clear framework on how to evaluate these different vendors and their MLaaS offerings.

The inspiration for this post came from an interesting episode of Sam Charrington’s This Week in Machine Learning & AI Podcast featuring Tom Szumowski, a data scientist at URBN (Urban Outfitters’ parent company), on ‘Benchmarking Custom Computer Vision Services’.

Benchmarking Custom Computer Vision Services at Urban Outfitters - Talk #247

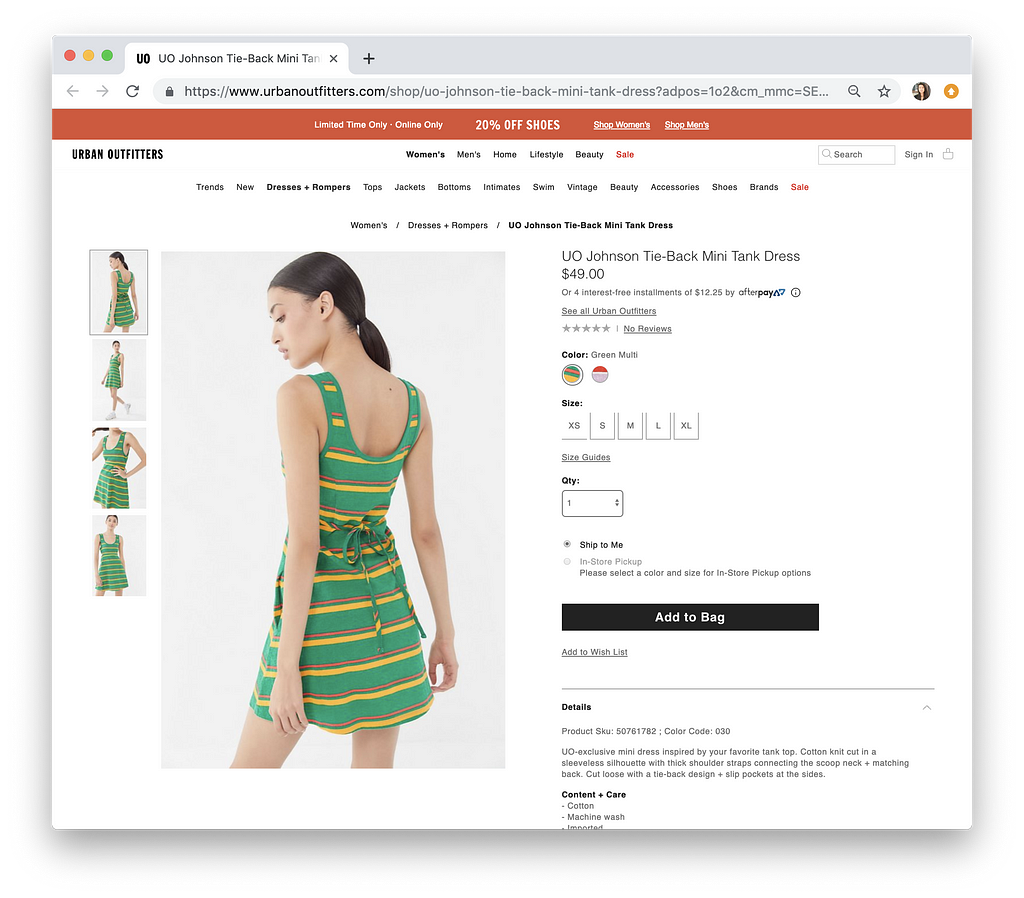

In the interview, Tom describes his team’s efforts to build a machine learning pipeline to automate fashion product attribution tagging. This essentially means taking a product like this dress:

and being able to automatically and accurately generate attributes about it such as its sleeve length (none), neckline (scoop neck), length (mini dress), pattern (stripes), color (green, yellow, red), etc…
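To make that output concrete, here is a minimal Python sketch of how per-attribute classifier scores might be turned into tags. It assumes a hypothetical setup, not URBN’s actual pipeline: single-valued attributes such as neckline take the highest-scoring label, while multi-valued attributes such as color keep every label above a threshold. The attribute names, scores, and the 0.5 threshold are all illustrative.

```python
from typing import Dict, List, Union

# Hypothetical per-attribute scores a vision model might return for one product.
Scores = Dict[str, Dict[str, float]]

# Attributes that can hold several values at once (an assumption for illustration).
MULTI_VALUED = {"color"}


def scores_to_tags(scores: Scores, threshold: float = 0.5) -> Dict[str, Union[str, List[str]]]:
    """Convert raw per-label scores into product attribute tags."""
    tags: Dict[str, Union[str, List[str]]] = {}
    for attribute, label_scores in scores.items():
        if attribute in MULTI_VALUED:
            # Keep every label whose score clears the threshold, highest score first.
            ranked = sorted(label_scores.items(), key=lambda kv: kv[1], reverse=True)
            tags[attribute] = [label for label, score in ranked if score >= threshold]
        else:
            # Single-valued attribute: take the highest-scoring label.
            tags[attribute] = max(label_scores, key=label_scores.get)
    return tags


# Hypothetical scores for the striped mini dress described above.
example = {
    "sleeve_length": {"none": 0.91, "short": 0.06, "long": 0.03},
    "neckline": {"scoop": 0.80, "v_neck": 0.15, "crew": 0.05},
    "length": {"mini": 0.72, "midi": 0.20, "maxi": 0.08},
    "pattern": {"stripes": 0.88, "floral": 0.07, "solid": 0.05},
    "color": {"green": 0.81, "yellow": 0.66, "red": 0.58, "blue": 0.04},
}
print(scores_to_tags(example))
# {'sleeve_length': 'none', 'neckline': 'scoop', 'length': 'mini', 'pattern': 'stripes', 'color': ['green', 'yellow', 'red']}
```

Every new attribute adds another entry to this mapping (and, typically, another model or output head to maintain), which is part of why the number of attributes matters when weighing build against buy.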

Using machine learning, and specifically computer vision, to handle this product attribute classification process makes sense for URBN because of the vast, diverse catalog of products they offer. Access to these attributes helps the business across several key initiatives:

- personalization around content and product recommendations

- improving discoverability and search in the user experience

- forecasting / planning for inventory management

The URBN team anticipated that the number of attributes would continue to grow and identified the opportunity costs of an in-house solution (in terms of team time spent), so they were interested in finding a scalable solution for managing the models for all those attributes.

Here’s an overview of the custom vision service vendors that their team evaluated:

Vendors included: Google Cloud AutoML Vision Beta, Clarifai, Salesforce Einstein Vision, Microsoft Azure Custom Vision Preview, IBM Watson Visual Recognition, In-House: Keras ResNet and In-House: Fast.ai ResNet


Tom Szumowski has a great write-up with the specific details of the case study, Exploring Custom Vision Services for Automated Fashion Product Attribution (Part I and Part II), and he shared the presentation too.

How does this apply to my data science team?

While URBN’s specific use case was interesting, it was the thought process and framework the URBN team used to evaluate these MLaaS products that proved extremely useful, and that is the focus of the rest of this post.

Framework for Evaluating MLaaS offerings

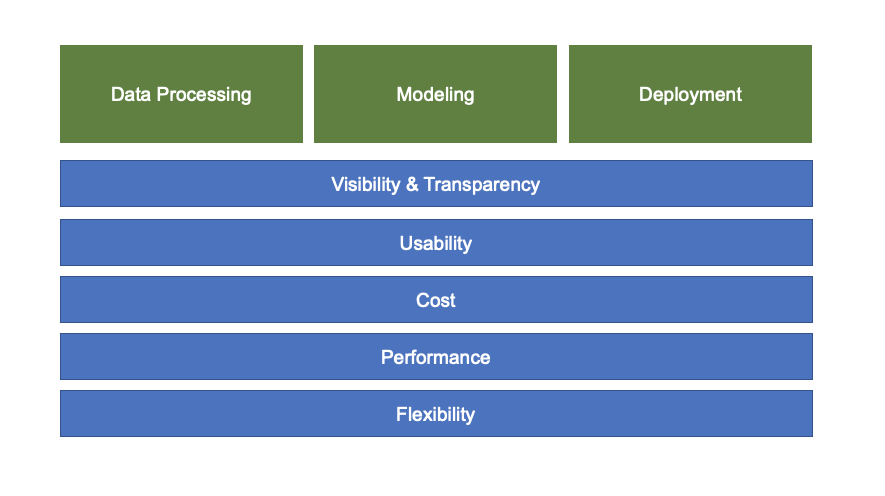

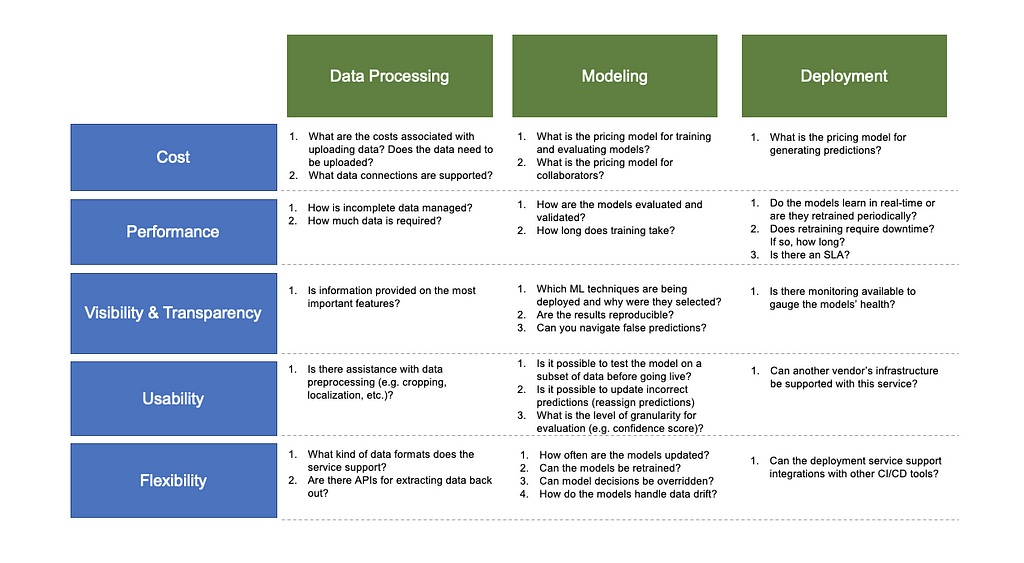

Here’s a starting framework for evaluating these different MLaaS products. You’ll notice how the attributes for visibility, usability, flexibility, cost, and performance span across each portion of the workflow. We’ll dive into each section more deeply below.

This framework and its questions will help you understand whether an MLaaS product is the right approach for your team, from the data you feed into the MLaaS offering to the model results you receive. To keep these systems from becoming expensive black boxes, ask how the evaluation actually happens and whether the results can be updated.

See this diagram in slide format here
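One way to turn the framework into a decision is a weighted scorecard over its criteria. The Python sketch below is an illustration, not the URBN team’s method: the weights and the 1-to-5 ratings are hypothetical placeholders that your team would replace with its own assessments of each option.

```python
from typing import Dict

# Criteria from the framework; these weights are hypothetical and sum to 1.
WEIGHTS: Dict[str, float] = {
    "visibility": 0.15,
    "usability": 0.20,
    "flexibility": 0.20,
    "cost": 0.20,
    "performance": 0.25,
}


def weighted_score(ratings: Dict[str, float], weights: Dict[str, float] = WEIGHTS) -> float:
    """Combine per-criterion ratings (1 = poor, 5 = strong) into one weighted score."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return sum(weights[criterion] * ratings[criterion] for criterion in weights)


# Hypothetical ratings for two placeholder options.
options = {
    "vendor_a": {"visibility": 2, "usability": 5, "flexibility": 3, "cost": 3, "performance": 4},
    "in_house": {"visibility": 5, "usability": 3, "flexibility": 5, "cost": 2, "performance": 4},
}

ranking = sorted(options, key=lambda name: weighted_score(options[name]), reverse=True)
for name in ranking:
    print(f"{name}: {weighted_score(options[name]):.2f}")
# in_house: 3.75
# vendor_a: 3.50
```

Writing the weights down forces the team to agree up front on what matters most, for example whether cost should outweigh visibility into how the model was trained.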


Perhaps you’re evaluating these MLaaS options against in-house models and approaches like the URBN team did?

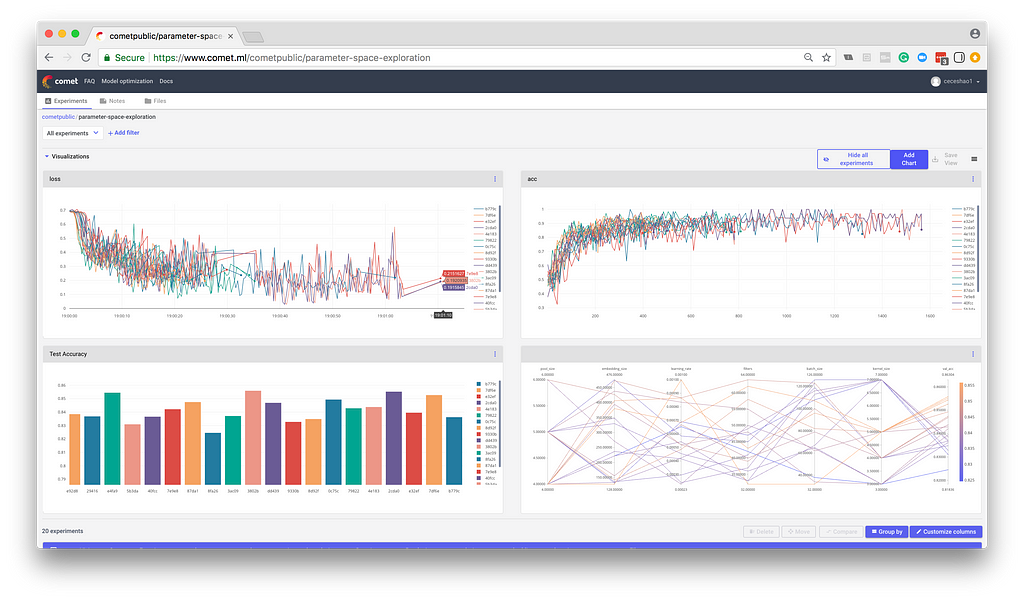

If so, it’s critical to have experiment comparability. Comparability means keeping the data, environment, and model consistent so that you can make a fair assessment across these options (after all, even different library versions can affect performance). Tools like Comet.ml can automatically track the hyperparameters, results, model code, and environment details of these experiments. With visualizations across these experiments, your team can directly compare how different models performed and identify the best approach.

Project-level visualizations in Comet.ml make it easy to identify which model and parameter set performed the best. You can share these visualizations as reports to your manager, procurement team, or fellow data scientists!
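The core of comparability can be sketched without any particular tool. The stdlib-only Python example below assigns every image to train or test with a stable hash of its ID, so each vendor and in-house model is scored on exactly the same held-out set, and it snapshots environment details such as library versions. The image IDs, the 20% test fraction, and the package names are hypothetical; a tracking tool such as Comet.ml captures this kind of environment information for you.

```python
import hashlib
import platform
from importlib import metadata
from typing import Dict, List, Sequence, Tuple


def split_bucket(item_id: str, test_fraction: float = 0.2) -> str:
    """Assign an item to 'train' or 'test' from a hash of its ID.

    The result does not depend on random seeds or input order, so every
    service under evaluation is scored on the same held-out items.
    """
    digest = hashlib.sha256(item_id.encode("utf-8")).hexdigest()
    position = int(digest[:8], 16) / 16**8  # maps the hash into [0, 1)
    return "test" if position < test_fraction else "train"


def make_split(item_ids: Sequence[str], test_fraction: float = 0.2) -> Tuple[List[str], List[str]]:
    """Split IDs into (train, test) lists using the stable hash bucket."""
    train: List[str] = []
    test: List[str] = []
    for item_id in item_ids:
        if split_bucket(item_id, test_fraction) == "test":
            test.append(item_id)
        else:
            train.append(item_id)
    return train, test


def environment_snapshot(packages: Sequence[str] = ("keras", "fastai")) -> Dict[str, str]:
    """Record the Python version, platform, and selected library versions."""
    snapshot = {
        "python": platform.python_version(),
        "platform": platform.platform(),
    }
    for name in packages:
        try:
            snapshot[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            snapshot[name] = "not installed"
    return snapshot


ids = [f"dress_{i:04d}.jpg" for i in range(1000)]  # hypothetical image IDs
train, test = make_split(ids)
print(len(train), len(test))
print(environment_snapshot())
```

Storing the snapshot next to each run’s metrics makes it easy to spot when two results are not comparable, for example because a library version changed between runs.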


Evaluation in Practice

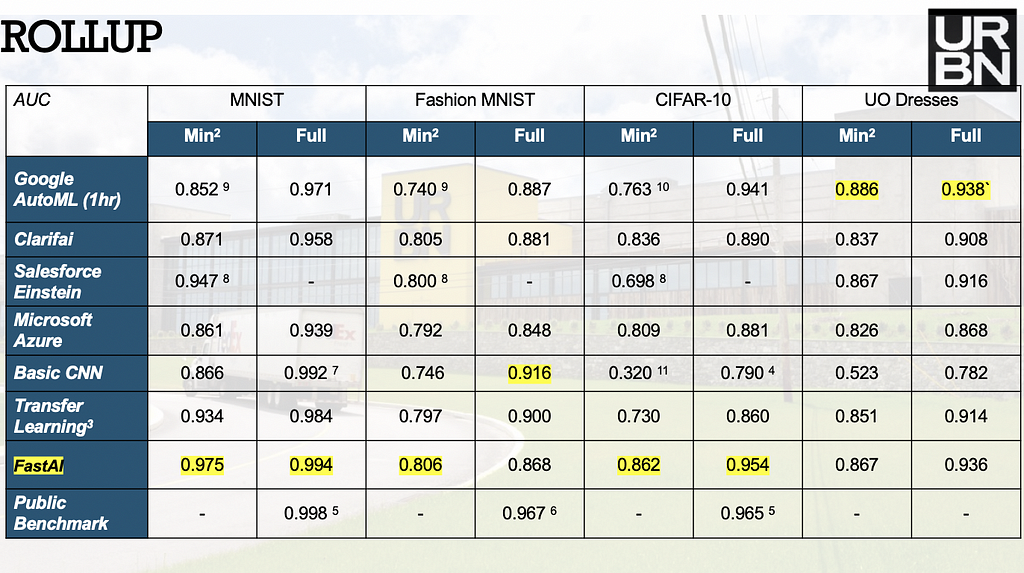

So what were the results of the URBN team’s evaluation? Here’s the table comparing classification accuracy metrics for benchmark datasets like MNIST, Fashion MNIST, CIFAR-10, and the cleaned UO Dresses data:

From the URBN presentation: Google’s AutoML had the best AUC on the UO Dresses set, followed by Einstein and Fast.ai.
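Because AUC is the headline metric in this comparison, it helps to compute it the same way for every option rather than relying on each vendor’s dashboard. The sketch below is a plain-Python ROC AUC for one binary attribute, using the rank-sum (Mann-Whitney) formulation with ties counted as one half; the labels and scores are made-up placeholders, not URBN’s data. scikit-learn’s `roc_auc_score` computes the same quantity for binary labels.

```python
from typing import Dict, Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC as the probability that a random positive outscores a random negative.

    Ties count as half a win. This pairwise version is O(P * N), which is fine
    for a small evaluation set; use a sort-based method for large ones.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical: the same held-out labels scored by two options.
labels = [1, 1, 0, 0, 1, 0]
predictions: Dict[str, Sequence[float]] = {
    "service_a": [0.9, 0.8, 0.3, 0.2, 0.7, 0.4],
    "in_house": [0.6, 0.4, 0.5, 0.1, 0.9, 0.4],
}
for name, scores in predictions.items():
    print(f"{name}: AUC = {roc_auc(labels, scores):.3f}")
# service_a: AUC = 1.000
# in_house: AUC = 0.833
```

For a multi-attribute task like dress tagging, one option is to compute this per attribute (or per label) on the shared test set and then average, reporting the per-attribute numbers alongside the summary.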


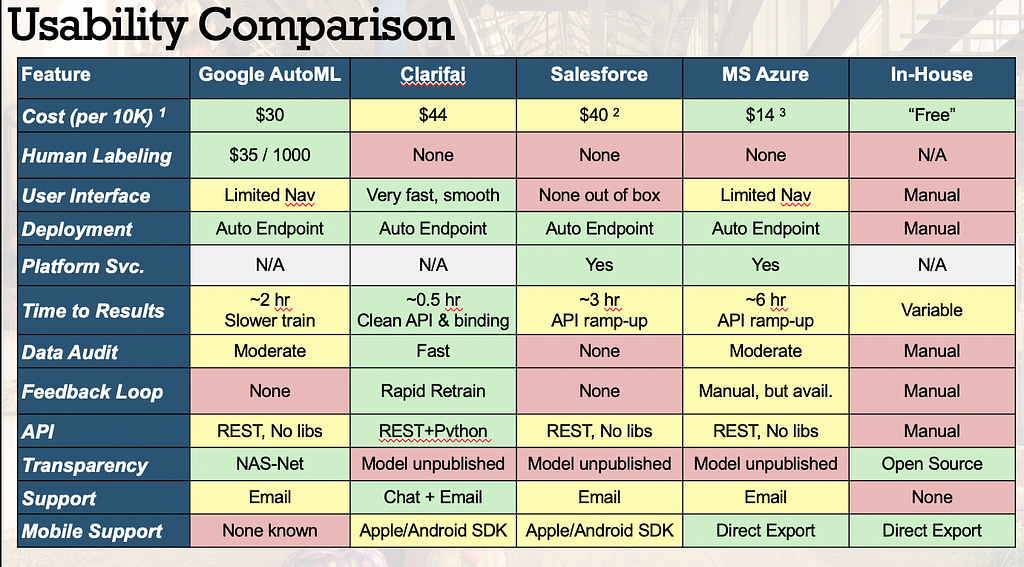

Their team also put together a great usability comparison table where they compared key capabilities like deployment options, retraining options, APIs, and more.

Thanks for reading! I hope you found this framework and example useful for your data science projects. If you have questions or feedback, I would love to hear them at cecelia@comet.ml or in the comments below ⬇️⬇️⬇️

If you haven’t listened to TWiMLAI yet, I highly recommend the entire series. Some of my favorite episodes are (1) Scaling Machine Learning on Graphs at LinkedIn with Hema Raghavan and Scott Meyer, (2) Trends in Natural Language Processing with Sebastian Ruder, and (3) Designing Better Sequence Models with RNNs with Adji Bousso Dieng.

NY AI & ML is hosting a meetup in NYC with Adji in May. Join us 🚀🚀

👉🏼Want more awesome machine learning content? Follow us.

Using managed machine learning services (MLaaS) as your baseline was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.

Disclaimer

The views and opinions expressed in this article are solely those of the authors and do not reflect the views of Bitcoin Insider. Every investment and trading move involves risk - this is especially true for cryptocurrencies given their volatility. We strongly advise our readers to conduct their own research when making a decision.