
When I was younger, I believed that psychologists possessed an uncanny, somewhat mystical ability to read minds and understand what people were thinking and planning to do next. Now that I am a psychologist, people often ask me, “Are you reading my mind?” This question is so common, in fact, that my psych friends and I have commiserated and come up with a joking response: “You’re not paying me enough for that.”

But for those of you who aren’t satisfied by my cheeky response, here’s a straightforward one: no. It turns out that psychologists don’t know what humans are thinking, and we’re actually very bad at predicting behavior, too. Part of the problem is that we are only as complicated as our subject matter: how could a fly fully understand its own nature unless it were a bit more complex than a fly? The bigger problem, though, is that we are trying to understand a subject that seems to behave probabilistically rather than deterministically.

Many of you are probably familiar with Schrödinger’s cat, the one that is both alive and dead until you open the box and observe it in one state or the other. Human behavior works that way too. We can guess whether a person will be generous or greedy at a given moment, but we can never know for certain until we open the proverbial box and observe the behavior. To those who believe in human agency, this makes sense. To those who think of humans as complex, deterministic machines, it is as counter-intuitive as quantum mechanics. How could two different states and behaviors be simultaneously true?

In my niche, moral psychology, scholars have wrestled with what’s called the “situationist challenge”: how can moral character be a meaningful concept, if human behavior changes drastically from situation to situation? Or, put another way, how can we talk about heroes and villains if each person’s behavior is all over the moral map?

Again, it comes back to probabilities. Humans are probabilistic, not deterministic. When we conceptualize behaviors in terms of probability curves, we begin to have a more accurate picture of the individual.

If a person is dishonest at our first meeting, it is tempting to conclude that the person is dishonest. But it is paramount to keep in mind that, statistically, a single data point has no context. The observer has no idea whether that dishonesty was an unfortunate fluke, was characteristic, or was actually uncharacteristically mild compared with an even more egregious history of dishonesty.

Once data have been collected across a variety of settings and times, the resulting probability curve is quite useful for characterizing an individual as generally honest or dishonest. Someone with good character will still fluctuate in their level of honesty from situation to situation, but on average they will be more honest than others across situations. This might sound obvious (saints have their bad days, sinners have their good days), but society frequently acts against this understanding of human nature.
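To make the point about single data points concrete, here is a minimal Python sketch that is not part of the original argument: it treats each observed situation as honest or not and attaches a 95% Wilson score interval to the resulting honesty rate. The function name and the counts are hypothetical; the sketch only shows that one observation leaves the estimate almost unconstrained, while many observations narrow it.

```python
import math


def wilson_interval(honest: int, total: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval for the share of observed
    situations in which a person acted honestly.

    `honest` and `total` are hypothetical counts of observed situations.
    """
    if total <= 0:
        raise ValueError("need at least one observation")
    p = honest / total
    denom = 1 + z**2 / total
    center = (p + z**2 / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z**2 / (4 * total**2)) / denom
    # Clamp to [0, 1] to absorb floating-point rounding at the edges.
    return max(0.0, center - half), min(1.0, center + half)


# One dishonest first meeting: 0 honest acts out of 1 observation.
print(wilson_interval(0, 1))    # roughly (0.0, 0.79): almost anything is still possible
# Fifty observed situations, honest in forty of them.
print(wilson_interval(40, 50))  # roughly (0.67, 0.89): a much narrower picture
```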

The devil is in the details, and the nuance is as simple as this: statistics are good for describing human behavior, not for predicting human behavior. What’s the difference?

Human behavior is best thought of probabilistically, but making a prediction requires collapsing this range of possibilities into a single guess. It forces you to collapse the probability curve and prophesy what state the cat will be in once the box is opened. In the social sciences, this typically means collapsing the curve to that person’s average behavior and guessing that value. Or, worse (and more commonly), collapsing the curve of all people’s behaviors (generalized, not personalized) down to the average of everyone’s behavior.

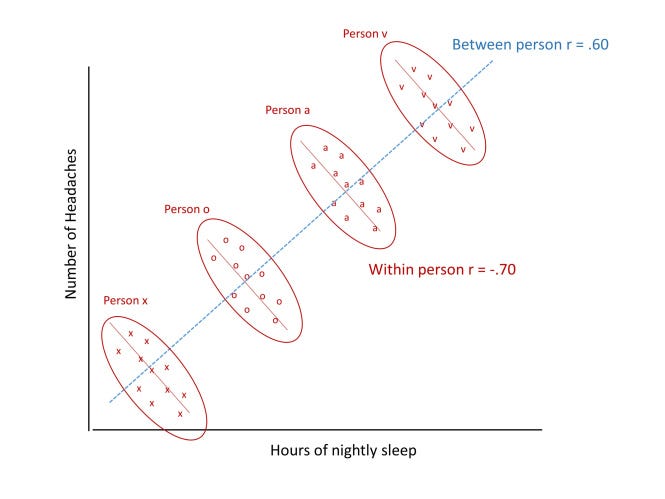

This image illustrates how a model machine-learned from everyone’s aggregated data (the blue line) can reach the exact opposite result from models learned on each person’s data alone (the short red lines). This is known as the Yule-Simpson effect, or Simpson’s paradox.

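The reversal in the figure is easy to reproduce. The following Python sketch uses made-up numbers (three hypothetical people, each observed in three situations, with an abstract situational factor `x` and a behavior `y`) and fits an ordinary least-squares slope for each person and then for the pooled data:

```python
from statistics import mean


def ols_slope(xs: list[float], ys: list[float]) -> float:
    """Ordinary least-squares slope of ys regressed on xs."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var = sum((x - mx) ** 2 for x in xs)
    return cov / var


# Hypothetical data: three people, each observed in three situations.
people = {
    "A": ([1, 2, 3], [3, 2, 1]),
    "B": ([4, 5, 6], [6, 5, 4]),
    "C": ([7, 8, 9], [9, 8, 7]),
}

# Within each person, more x goes with less y (slope -1.0 for everyone).
for name, (xs, ys) in people.items():
    print(name, ols_slope(xs, ys))

# Pooling everyone together reverses the sign of the relationship.
all_x = [x for xs, _ in people.values() for x in xs]
all_y = [y for _, ys in people.values() for y in ys]
print("pooled", ols_slope(all_x, all_y))  # 0.8
```

Each person’s own slope is -1.0, yet the pooled slope is +0.8, mirroring the red and blue lines: the people with higher `x` also start from a higher baseline of `y`, and pooling mistakes that between-person difference for a within-person trend.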

Generalizing from group averages is okay when the stakes are trivial. For instance, it’s not a big deal when Netflix groups me with similar people and then (in)correctly guesses that I’ll like Sharknado. But when the stakes are nontrivial, it’s ethically dubious.

For instance, in pre-hiring evaluations, it’s potentially immoral to rule an individual out of the hiring lineup solely because of their statistical score on a personality or character test. It’s easier to see why when we break the statistical logic down: some of the people who answer X on this question later do Y, and some (but fewer) of the people who don’t answer X later do Y. We are not hiring you because you answered X, and at this job we cannot allow you to do Y.

This should raise red flags; it seems unfair! After all, what if you are one of the people who answered the question “incorrectly” but never would have caused a problem, while the company hired someone who got the question “right” but did go on to cause a problem?
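A bit of arithmetic shows how common that unfair case can be. The Python sketch below uses invented numbers (300 of 1,000 applicants answer X; 20% of them later do Y, versus 10% of everyone else, matching “some, but fewer”) and counts the expected outcomes of a “reject everyone who answers X” rule. The rates and the function name are hypothetical.

```python
def screening_outcomes(
    n_answered_x: int,
    n_answered_not_x: int,
    p_y_given_x: float,
    p_y_given_not_x: float,
) -> dict[str, int]:
    """Expected outcomes of rejecting every applicant who answers X."""
    # Expected number in each group who would later do Y, rounded to whole people.
    x_and_y = round(n_answered_x * p_y_given_x)
    not_x_and_y = round(n_answered_not_x * p_y_given_not_x)
    return {
        "rejected, would have done Y": x_and_y,
        "rejected, never would have": n_answered_x - x_and_y,
        "hired, will do Y": not_x_and_y,
        "hired, never will": n_answered_not_x - not_x_and_y,
    }


# Hypothetical numbers: 300 applicants answer X, 700 do not.
for outcome, count in screening_outcomes(300, 700, 0.20, 0.10).items():
    print(f"{outcome}: {count}")
```

Under these made-up rates, the rule turns away 240 people who never would have caused a problem while still hiring 70 who will.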

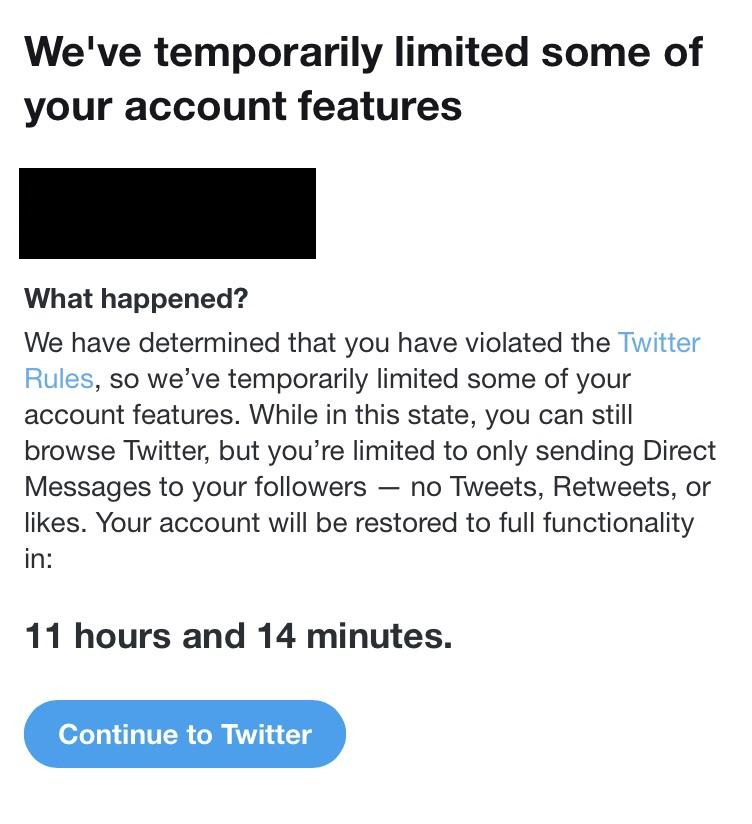

Imagine a similar situation online. Let’s say past Yelp research has revealed that when 10 or more new accounts leave positive reviews for the same business within an hour, 90% of those cases represent a sybil attack (i.e., the business created a batch of dummy accounts to artificially boost its reviews). As a result, Yelp implements an algorithm that detects when 10 or more new accounts rate a business this way and automatically blocks that business for presumed foul play. Is it ethical to let such algorithms run completely unchecked by humans? People’s livelihoods are on the line.
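To show how blunt such a rule is, here is a Python sketch of the hypothetical detector in this scenario. It is not Yelp’s actual system; the `Review` fields, the 7-day cutoff for a “new” account, and the 4-star cutoff for a “positive” review are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Review:
    timestamp: float       # seconds since some reference time
    account_age_days: int  # age of the reviewing account when the review was posted
    stars: int             # 1 to 5


def looks_like_sybil_burst(
    reviews: list[Review],
    threshold: int = 10,
    window_seconds: float = 3600,
    new_account_days: int = 7,  # hypothetical cutoff for a "new" account
    positive_stars: int = 4,    # hypothetical cutoff for a "positive" review
) -> bool:
    """Return True if `threshold` or more positive reviews from new accounts
    fall inside any window of `window_seconds`."""
    times = sorted(
        r.timestamp
        for r in reviews
        if r.account_age_days < new_account_days and r.stars >= positive_stars
    )
    start = 0
    for end, t in enumerate(times):
        # Slide the window's left edge forward until it spans at most one hour.
        while t - times[start] > window_seconds:
            start += 1
        if end - start + 1 >= threshold:
            return True
    return False
```

By the premise of the scenario, about one in ten businesses this rule flags is innocent, so an automatic block would punish those owners with no chance to explain themselves.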

One issue that social media massively struggles with is cyberbullying. If an algorithm automatically detects and removes bullying posts, perhaps the benefits outweigh the costs (especially for tech giants waging an uphill battle to sort through such posts manually). But can it ever be excusable for an algorithm to automatically ban a user altogether on the basis of past bullying? The notion of automated digital exile troubles me.

I bring up these scenarios to show how society really does move beyond statistically describing individuals to making assumptions about a person’s behavior before observing Schrödinger’s cat, and that this assumption comes at an ethical cost.

Now, a formidable criticism of my analysis is a pragmatic one: “Throwing out prediction because of error is throwing the baby out with the bathwater. Betting against stats is unwise.” No doubt, abandoning statistical insight would be nihilistic. But for statistical predictions to be ethical, they must also be rational and relational.

When AI is employed for nontrivial decision-making, its findings should be grounded in human theory and facts. That is, the AI’s assumptions (e.g., this business is performing a sybil attack) should be supported not only empirically but also rationally by humans. A human reviewer might look at the case, see that the business already has a thousand positive reviews, and conclude that it has no need to perform a sybil attack. A quick online search might reveal that a big event is being held at that business today, in which case 10 reviews is no cause for alarm.

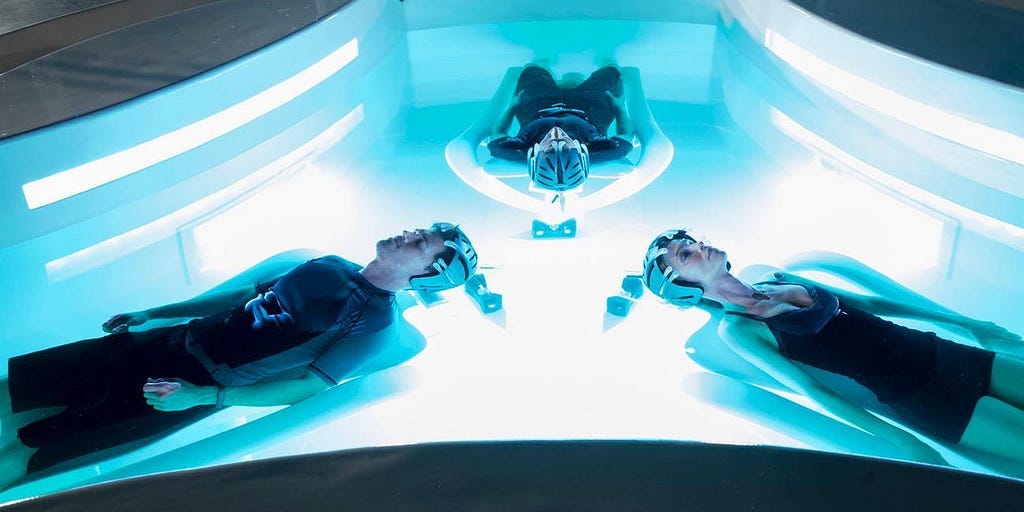

Precogs from The Minority Report


A human reviewer adds an element of surprisability to high-stakes machine learning. (I mean an agentic human here, not one who pulls no decision-making weight and simply follows a protocol handed down from higher ranks.) Humans must always entertain the minority report.

When statistical prediction heavily influences a decision, it should be done as responsibly as possible. Wherever possible, the data in the statistical analyses should predict a person’s future behavior on the basis of that individual’s actions alone. Rather than saying “most people who answer X do Y, so we assume you’ll do Y because you answered X,” the statistics should focus on how often this person has answered X in the past, and how often they have subsequently done Y. Don’t assume everyone is like everyone else; that is not true. You should have as much person-specific data, over as many recent time points, as possible.
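As a rough illustration of person-specific prediction, here is a Python sketch (not a method from the original article) that estimates how often one person did Y after answering X, using only that person’s own hypothetical history of `(answered_x, did_y)` pairs. The `min_observations` cutoff of 5 is an arbitrary assumption.

```python
from typing import Optional


def personal_rate(
    history: list[tuple[bool, bool]], min_observations: int = 5
) -> Optional[float]:
    """Estimate how often this person did Y after answering X, using only
    their own history of (answered_x, did_y) pairs.

    Returns None when the person has answered X too few times to say anything.
    """
    outcomes_after_x = [did_y for answered_x, did_y in history if answered_x]
    if len(outcomes_after_x) < min_observations:
        # Not enough person-specific data; gather more instead of
        # borrowing everyone else's average.
        return None
    return sum(outcomes_after_x) / len(outcomes_after_x)


history = [(True, False)] * 6 + [(True, True)] * 2 + [(False, False)] * 3
print(personal_rate(history))         # 0.25
print(personal_rate([(True, True)]))  # None
```

Returning `None` instead of falling back to a population average is a deliberate choice in this sketch: when there is too little personal data, the honest answer is that the person has not been observed enough yet.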

Furthermore, despite the adage to the contrary, people do change, which is why the data should be relatively recent. If Netflix used my ratings from 10 years ago, I’d hate most of its recommendations. More importantly, convicts and drug addicts can rehabilitate. Haters can learn to love. Using recent data provides an avenue for that rehabilitation and redemption.
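One common way to favor recent data is exponential decay weighting, sketched below in Python. The half-life, the yearly timeline, and the function name are assumptions for illustration: an event that is one half-life old counts half as much as one happening now.

```python
def recency_weighted_rate(
    events: list[tuple[float, bool]], now: float, half_life: float
) -> float:
    """Share of events in which a behavior occurred, weighting each event
    by how recent it is.

    `events` is a hypothetical list of (time, behavior_occurred) pairs.
    """
    weights = [0.5 ** ((now - t) / half_life) for t, _ in events]
    total = sum(weights)
    if total == 0:
        raise ValueError("no events to weight (or all weights underflowed)")
    return sum(w for w, (_, occurred) in zip(weights, events) if occurred) / total


# Dishonest in years 0-4, honest in years 5-10.
events = [(year, year < 5) for year in range(11)]
print(recency_weighted_rate(events, now=10, half_life=2))  # about 0.105
```

If every year counted equally, the rate would be 5/11, about 0.45; with a two-year half-life, the old behavior fades to about 0.105, leaving room for the change the paragraph describes.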

And last but not least, I believe the avenue for redemption should be available at all times. In short, I don’t think users should be banned from platforms, or that job-seekers should be blocked from re-applying at the next opportunity. Of course, in cases of malicious cyberbullying or similar behavior, trust must be rebuilt in a reasonable manner. Perhaps an individual’s access to a platform is limited, and access is regained or further restricted commensurate with their recent behavior. This is a demanding process. The alternatives, however, are unethical in my estimation.
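A graduated approach like this could look something like the following Python sketch. Everything in it is hypothetical: the four access tiers, the 30-day window for earning a tier back, and the rule that each violation drops one tier. The key property is that the lowest tier is restricted access rather than a ban, so the path back always stays open.

```python
from dataclasses import dataclass

TIERS = ["read-only", "comment", "post", "full access"]  # hypothetical access levels


@dataclass
class Account:
    tier: int = len(TIERS) - 1
    clean_days: int = 0  # consecutive days without a violation


def record_day(account: Account, violations: int, days_to_regain: int = 30) -> Account:
    """Update access after one day of behavior; no restriction is permanent."""
    if violations > 0:
        # Restrict access in proportion to recent behavior, but never below read-only.
        account.tier = max(0, account.tier - violations)
        account.clean_days = 0
    else:
        account.clean_days += 1
        if account.clean_days >= days_to_regain and account.tier < len(TIERS) - 1:
            # A sustained stretch of good behavior earns back one tier.
            account.tier += 1
            account.clean_days = 0
    return account


acct = Account()
record_day(acct, violations=2)
print(TIERS[acct.tier])  # comment
for _ in range(30):
    record_day(acct, violations=0)
print(TIERS[acct.tier])  # post
```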

Humans are probabilistic, not deterministic. If we want to remain open-minded, we can’t prophesy life or death and nail the box shut. We have to actually observe Schrödinger’s cat.

I am building out the egalitarian infrastructure of the decentralized web with ERA.

What Schrodinger’s Cat Taught Me About Being Human was originally published in Hacker Noon on Medium.
