
As we explored in our recent article on AI-based algorithm development, automation is quickly changing from something that developers work on into something that can do developers’ work. While the full extent of this shift remains to be seen, one major focus today is how AI can take over the repetitive and tedious parts of application development so that human developers can focus their efforts elsewhere.

Now, developers at Xianyu (闲鱼), Alibaba’s second-hand trading platform, have launched the UI2CODE project, which applies deep learning to convert visual user interface images into client-side code. Because UI designs have clear component, position, and layout features that fall within the scope of machine learning, UI vision research is an especially promising area for exploring automatic code generation.

In this introduction to Xianyu’s ongoing efforts, we explore how UI2CODE analyzes GUI elements and UI layout structures to generate Flutter code for applications’ overall design.

Fundamentals of the Project

Work on UI2CODE started in March 2018, when the Xianyu Technical Team began researching its technical feasibility. Since then the project has gone through three rounds of refactoring, trying out several machine-learning-based code generation approaches in order to meet the requirements of commercial use.

UI2CODE’s central principle is divide and conquer: UI development is split into separate problems so as to avoid a too-many-eggs-in-one-basket scenario. To this end, each candidate solution has been evaluated against three core demands. First, visual restoration must be precise: the output cannot deviate from the design by even a single pixel. Second, although machine learning is inherently probabilistic, the results must meet a 100 percent accuracy standard. Third, the output must be easy to maintain; being understandable and modifiable by engineers is only a starting point, and a well-reasoned layout structure is essential for the interface to run smoothly.
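To make the first demand concrete, a pixel-exact check could compare the design draft with a screenshot of the generated UI. The sketch below is only an illustration, assuming both images are already loaded as NumPy arrays of identical shape; `pixel_diff` is a hypothetical helper, not part of UI2CODE.

```python
import numpy as np


def pixel_diff(design: np.ndarray, rendered: np.ndarray):
    """Compare a design image with a rendered screenshot pixel by pixel.

    Returns (number of mismatching pixels, bounding box of the mismatches as
    (top, left, bottom, right)), or (0, None) if the images are identical.
    Inputs may be grayscale (H, W) or color (H, W, C) arrays of the same shape.
    """
    if design.shape != rendered.shape:
        raise ValueError(f"shape mismatch: {design.shape} vs {rendered.shape}")
    diff = design != rendered
    if diff.ndim == 3:
        diff = diff.any(axis=2)  # a pixel differs if any channel differs
    ys, xs = np.nonzero(diff)
    if ys.size == 0:
        return 0, None
    return int(ys.size), (int(ys.min()), int(xs.min()), int(ys.max()), int(xs.max()))
```

Under the “not a single pixel” rule, only a result of `(0, None)` would count as a pass; the bounding box simply tells an engineer where to look when it fails.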

Running Results

After several rounds of refactoring, the Xianyu team settled on automatically generating feed stream cards as UI2CODE’s key function, though the tool can also generate code at the page level. The following video shows the UI2CODE plug-in in action:

The following sections explore the design and process principles which enable UI2CODE to generate these results.

Architectural Design and Process Breakdown

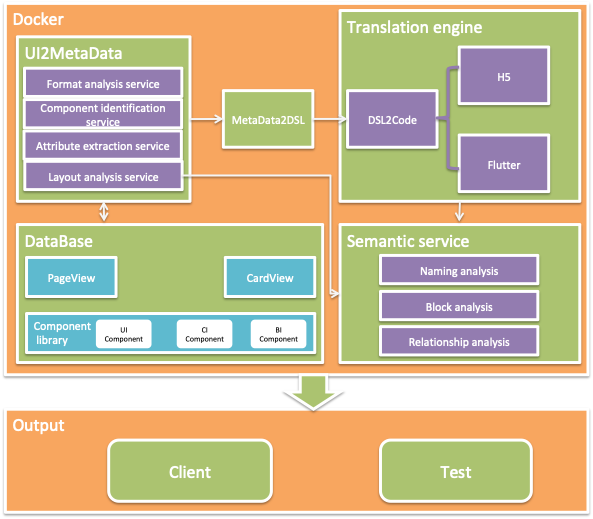

The following diagram offers an overview of UI2CODE’s architectural design.

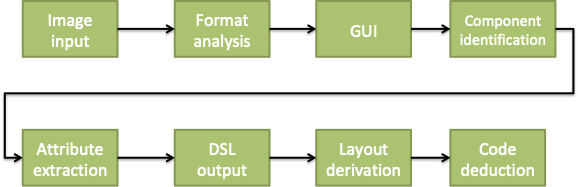

With it, the system’s process can be briefly summarized in the steps shown below:

This process breaks down into four steps. First, GUI elements are extracted from the visual draft using deep learning. Next, deep learning is used to identify the type of each GUI element. Third, a DSL (domain-specific language) description of the UI is generated using recurrent neural network technology. Finally, the corresponding Flutter code is generated by matching the syntax tree against code templates.
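The four steps can be pictured as a chain of functions, each consuming the previous step’s output. The sketch below only illustrates that data flow: `GuiElement`, `run_pipeline`, and the toy stage functions are hypothetical names, and each real stage in UI2CODE is a trained model or a rule set rather than a one-line lambda.

```python
from dataclasses import dataclass
from typing import Any, Callable, List


@dataclass
class GuiElement:
    """One sliced GUI element: its position, size, and (later) its type."""
    x: int
    y: int
    width: int
    height: int
    kind: str = "unknown"  # filled in by the identification stage


def run_pipeline(
    image: Any,
    extract: Callable[[Any], List[GuiElement]],
    classify: Callable[[GuiElement], str],
    build_dsl: Callable[[List[GuiElement]], dict],
    generate_code: Callable[[dict], str],
) -> str:
    """Chain the four stages: extraction, identification, DSL, code generation."""
    elements = extract(image)             # 1. slice GUI elements out of the draft
    for element in elements:
        element.kind = classify(element)  # 2. identify each element's type
    dsl = build_dsl(elements)             # 3. describe the layout as a DSL tree
    return generate_code(dsl)             # 4. render Flutter code from the DSL


if __name__ == "__main__":
    # Toy stand-ins for the real models and templates.
    code = run_pipeline(
        image=None,
        extract=lambda img: [GuiElement(0, 0, 100, 20)],
        classify=lambda element: "Text",
        build_dsl=lambda els: {"type": "Column", "children": [{"type": e.kind} for e in els]},
        generate_code=lambda dsl: "Column(children: ["
        + ", ".join(c["type"] + "()" for c in dsl["children"])
        + "])",
    )
    print(code)
```

Passing the stages in as callables keeps each one replaceable, which mirrors how the project swapped approaches across its refactoring rounds.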

The following sections discuss key steps in the UI2CODE system in detail.

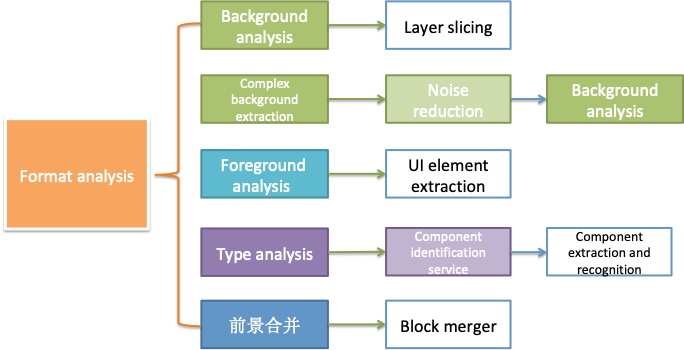

Background/Foreground Analysis

Background/foreground analysis in UI2CODE serves a single purpose: slicing. The quality of the slicing directly determines the accuracy of UI2CODE’s output.

The following white-background UI offers a straightforward example:

After reading this UI into memory, binarization is performed on it as follows:

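```python
import numpy as np
from PIL import Image


def image_to_matrix(filename, threshold=255):
    im = Image.open(filename)
    width, height = im.size
    im = im.convert("L")  # 8-bit grayscale: 0 is black, 255 is white
    gray = np.asarray(im)
    # Assumed binarization rule: pixels darker than `threshold` are foreground (1),
    # so a pure white background becomes 0.
    matrix = (gray < threshold).astype(np.uint8)
    return matrix, width, height
```

The result is a two-dimensional matrix in which the white background of this UI has the value zero: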

Only five cuts are needed to separate all of the GUI elements. There are various ways to make these cuts; the cross-cut snippet below is slightly simpler than the actual cutting logic, which is essentially a recursive process:

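```python
import numpy as np


def cut_by_col(cut_num, _im_mask):
    """Scan the rows of a binarized mask (background == 0) and append to cut_num
    the index of the first row of each blank run, ignoring a run at row 0."""
    zero_start = None
    zero_end = None
    end_range = len(_im_mask)
    for x in range(end_range):
        im = _im_mask[x]
        if np.all(im == 0):
            # Blank row: open a blank run if one is not already open.
            if zero_start is None:
                zero_start = x
        elif zero_start is not None and zero_end is None:
            # First content row after a blank run closes that run.
            zero_end = x
        if zero_start is not None and zero_end is not None:
            # A complete blank run: its first row is a cut point.
            if zero_start > 0:
                cut_num.append(zero_start)
            zero_start = None
            zero_end = None
        if x == end_range - 1 and zero_start is not None and zero_start > 0:
            # A blank run that reaches the last row is also recorded.
            cut_num.append(zero_start)
            zero_start = None
```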

The client UI is basically a vertical flow layout, for which a cross cut followed by a vertical cut can be made:

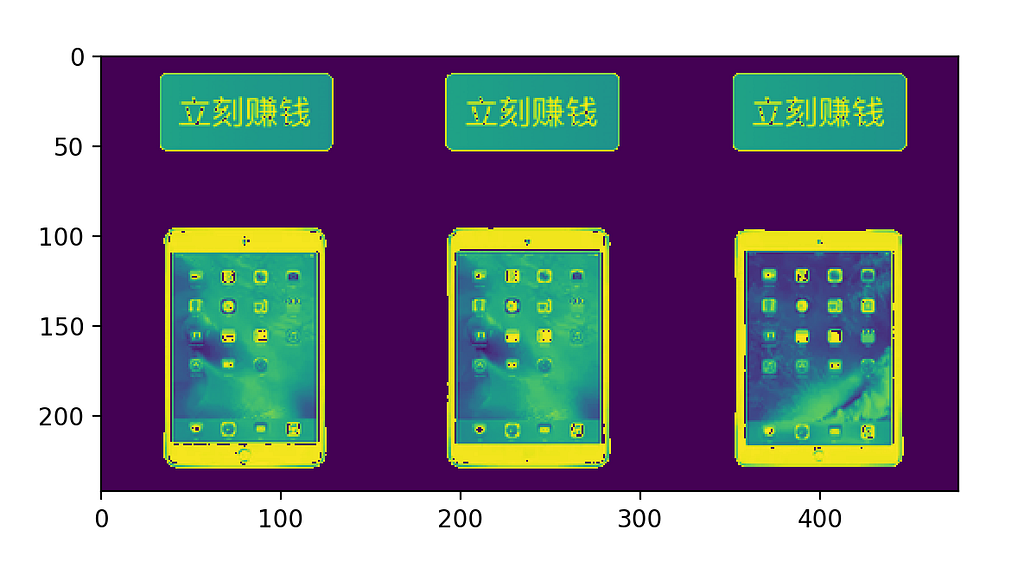

At this point, the X and Y coordinates of each cut point are recorded; they form the core of the components’ positional relationships. Slicing thus yields two sets of data: six GUI element images and their corresponding coordinates. In subsequent steps, these elements are identified using a classification neural network.
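The recursive process mentioned above can be illustrated with a classic recursive XY-cut: split the region wherever a fully blank row (or column) appears, then recurse on each piece with the opposite direction. This is a sketch of the general technique, assuming a mask binarized so that the background is 0; it is not UI2CODE’s actual slicing code, and `xy_cut` and `content_segments` are hypothetical names.

```python
import numpy as np


def content_segments(profile):
    """Return (start, end) half-open intervals where a 1-D profile is non-zero."""
    segments, start = [], None
    for i, value in enumerate(profile):
        if value and start is None:
            start = i
        elif not value and start is not None:
            segments.append((start, i))
            start = None
    if start is not None:
        segments.append((start, len(profile)))
    return segments


def xy_cut(mask, top=0, left=0, horizontal=True):
    """Recursively slice a binarized mask (background == 0) into element boxes.

    Tries a cut in the current direction first (horizontal = split rows), then the
    other one; recursion alternates directions. Returns (x, y, width, height) boxes
    in the coordinates of the original image.
    """
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        return []
    # Trim the region to the bounding box of its foreground pixels.
    mask = mask[ys.min():ys.max() + 1, xs.min():xs.max() + 1]
    top, left = top + int(ys.min()), left + int(xs.min())
    for split_rows in (horizontal, not horizontal):
        # Per-row sums when splitting rows, per-column sums when splitting columns.
        segments = content_segments(mask.sum(axis=1 if split_rows else 0))
        if len(segments) > 1:
            boxes = []
            for start, end in segments:
                if split_rows:
                    boxes += xy_cut(mask[start:end, :], top + start, left, False)
                else:
                    boxes += xy_cut(mask[:, start:end], top, left + start, True)
            return boxes
    # No blank row or column separates the content: treat it as one element.
    height, width = mask.shape
    return [(left, top, width, height)]
```

Starting with horizontal cuts matches the vertical-flow layout of client UIs described above, and the returned coordinates are exactly the positional records that later steps rely on.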

In actual production, background/foreground analysis becomes considerably more complicated, mainly because of complex backgrounds.

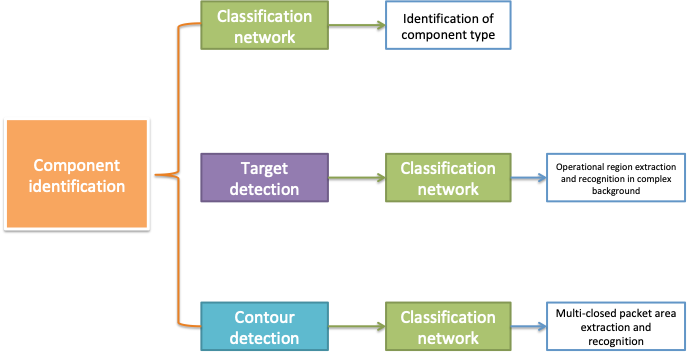
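The article does not describe how UI2CODE handles complex backgrounds. As one simple illustration, and not the team’s method, a solid but non-white background can be handled by treating the most frequent color as the background and marking everything sufficiently different from it as foreground. The `tolerance` value and the name `foreground_mask` are assumptions; gradients or photographic backgrounds would need far more than this.

```python
import numpy as np


def foreground_mask(rgb, tolerance=12):
    """Binarize an RGB image against its dominant (most frequent) color.

    Assumes the background is one solid color covering more pixels than any
    other color. Pixels within `tolerance` of it on every channel become 0
    (background); all other pixels become 1 (foreground).
    """
    pixels = rgb.reshape(-1, rgb.shape[-1])
    colors, counts = np.unique(pixels, axis=0, return_counts=True)
    background = colors[counts.argmax()].astype(np.int16)
    distance = np.abs(rgb.astype(np.int16) - background).max(axis=-1)
    return (distance > tolerance).astype(np.uint8)
```

The output has the same 0/1 convention as the binarized matrix above, so the same cutting logic can be applied to it.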

Component Identification

Before components can be identified, sample components must be collected for training. At this stage, the CNN and SSD models provided in TensorFlow are used for incremental training.

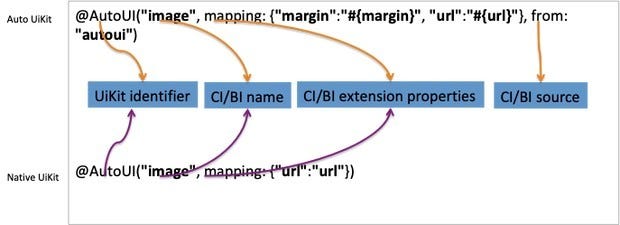

UI2CODE classifies GUI elements into a wide variety of types, including Image, Text, Shape/Button, Icon, and Price. These types are further grouped into UI components, CI components, and BI components. UI components are mainly Flutter-native components; CI components are mainly components from Xianyu’s custom UIKIT; and BI components are mainly feed stream cards with specific business relevance.
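The three-level grouping can be sketched as a lookup applied after an element’s type is recognized. The registries `custom_uikit` and `business_cards`, and the rule of checking the most specific category first, are illustrative assumptions rather than details given in the article.

```python
from enum import Enum


class Category(Enum):
    """The three component categories described above."""
    UI = "Flutter-native component"
    CI = "Xianyu custom UIKIT component"
    BI = "business-specific feed stream card"


def categorize(element_type, custom_uikit, business_cards):
    """Map a recognized element type to UI, CI, or BI.

    `custom_uikit` and `business_cards` are hypothetical registries (sets of type
    names). Business cards are checked first because they are the most specific;
    any type not registered in either falls back to a Flutter-native UI component.
    """
    if element_type in business_cards:
        return Category.BI
    if element_type in custom_uikit:
        return Category.CI
    return Category.UI


if __name__ == "__main__":
    custom_uikit = {"PriceTag"}        # hypothetical custom UIKIT widget
    business_cards = {"ItemFeedCard"}  # hypothetical feed stream card
    for name in ("Text", "PriceTag", "ItemFeedCard"):
        print(name, categorize(name, custom_uikit, business_cards).name)
```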

Component identification also needs feedback from global features, usually obtained with a convolutional neural network, so that classifications can be corrected repeatedly. In the following screenshot, for example, the two characters in crimson red (meaning “Brand new”) make up the richtext portion of the image, and the same shape style may appear in both buttons and icons, so an element cannot always be classified from its own pixels alone.

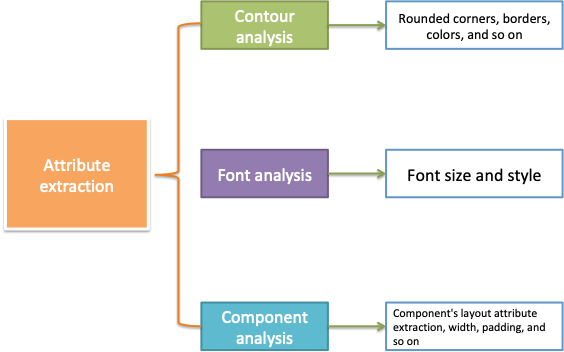
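The article uses a CNN for this global feedback. As a much simpler rule-based stand-in that only illustrates why context matters, a post-pass could inspect neighboring text elements: if adjacent text on the same line uses more than one color, the group is relabeled as rich text. `TextBox`, `merge_rich_text`, and the `max_gap` threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class TextBox:
    """A text element found by slicing (fields are illustrative)."""
    x: int
    y: int
    width: int
    height: int
    color: Tuple[int, int, int]
    text: str


def same_line(a: TextBox, b: TextBox) -> bool:
    """True if two boxes overlap vertically by at least half the smaller height."""
    overlap = min(a.y + a.height, b.y + b.height) - max(a.y, b.y)
    return overlap >= 0.5 * min(a.height, b.height)


def merge_rich_text(boxes: List[TextBox], max_gap: int = 8):
    """Merge adjacent text boxes on one line; multi-colored groups become RichText.

    Returns (label, [span texts]) pairs, line by line and left to right.
    """
    lines: List[List[TextBox]] = []
    for box in sorted(boxes, key=lambda b: b.y):
        for line in lines:
            if same_line(line[0], box):
                line.append(box)
                break
        else:
            lines.append([box])

    result = []
    for line in lines:
        line.sort(key=lambda b: b.x)
        group = [line[0]]
        for box in line[1:]:
            prev = group[-1]
            if box.x - (prev.x + prev.width) <= max_gap:
                group.append(box)  # close enough to read as one run of text
            else:
                result.append(_label(group))
                group = [box]
        result.append(_label(group))
    return result


def _label(group: List[TextBox]):
    kind = "RichText" if len({b.color for b in group}) > 1 else "Text"
    return kind, [b.text for b in group]
```

Looking only at the red box in isolation, a classifier would see ordinary text; it is the relationship to its neighbor that turns the pair into rich text.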

Attribute Extraction

Attribute extraction involves a wide range of technical details, but it can be summarized as addressing three aspects of each component: shape and contour, font attributes, and dimensions.

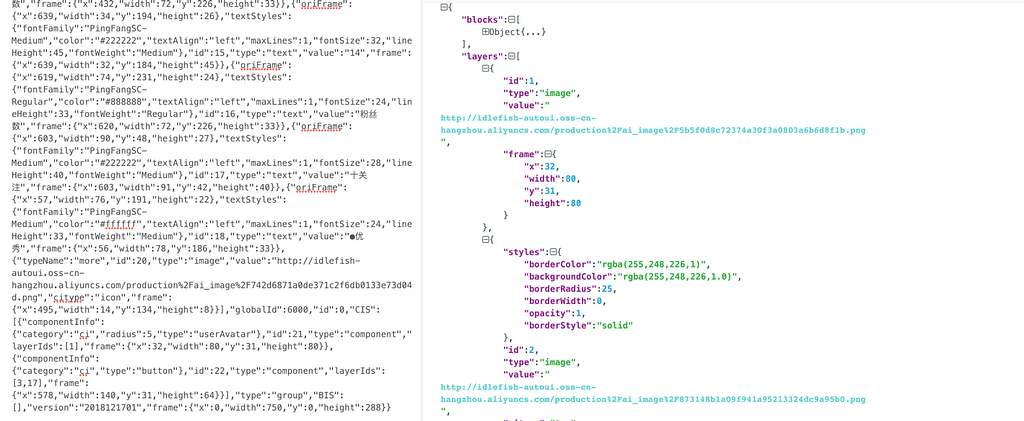
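A few of these attributes can be measured directly from a sliced element and its foreground mask. The sketch below is a simplified illustration, not UI2CODE’s extractor: it reports the bounding-box size, a fill ratio as a crude contour cue (about 1.0 for a filled rectangle, lower for rounded or irregular shapes), and the dominant foreground color. For a single line of text the bounding-box height is at best a rough proxy for font size; real font extraction is much harder. `extract_attributes` is a hypothetical name.

```python
import numpy as np


def extract_attributes(rgb, mask):
    """Measure basic attributes of one sliced GUI element.

    rgb:  (H, W, 3) uint8 crop of the element.
    mask: (H, W) array, 1 where the pixel belongs to the element (foreground).
    Returns a dict with width, height, fill_ratio, and the most common
    foreground color, or None if the mask is empty.
    """
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        return None
    height = int(ys.max() - ys.min() + 1)
    width = int(xs.max() - xs.min() + 1)
    fill_ratio = ys.size / float(width * height)  # share of the box that is filled
    colors, counts = np.unique(rgb[ys, xs], axis=0, return_counts=True)
    dominant = tuple(int(c) for c in colors[counts.argmax()])
    return {"width": width, "height": height, "fill_ratio": fill_ratio, "color": dominant}
```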

After attribute extraction, all of the GUI information has effectively been extracted. The GUI DSL is then generated as follows:

This data makes layout analysis possible. Of all the attributes, text attributes are the most complicated to extract.
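The article does not spell out the DSL format here, so the following is only a hypothetical shape for such a tree: each node carries a type, its position and size, optional text attributes, and its children. The field names in `DslNode` are assumptions made for illustration; serializing to JSON shows how the tree could be handed from layout analysis to code generation.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class DslNode:
    """One node of a hypothetical GUI DSL tree (field names are illustrative)."""
    type: str                      # e.g. "Column", "Row", "Text", "Image"
    x: int = 0
    y: int = 0
    width: int = 0
    height: int = 0
    text: Optional[str] = None
    font_size: Optional[float] = None
    color: Optional[str] = None    # hex string such as "#DC143C"
    children: List["DslNode"] = field(default_factory=list)


def to_json(node: DslNode) -> str:
    """Serialize the tree, dropping fields that are unset (None or empty list)."""
    def clean(d):
        d = {k: v for k, v in d.items() if v is not None and v != []}
        if "children" in d:
            d["children"] = [clean(c) for c in d["children"]]
        return d
    return json.dumps(clean(asdict(node)), ensure_ascii=False)
```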

Layout Analysis

In the early stages of UI2CODE, the Xianyu team trained a four-layer LSTM network to learn layouts, but switched to a rule-based implementation because the sample size was small. Rules also have the advantage of being relatively simple: the order of the five cuts made during slicing directly gives the row and column structure. The disadvantage is that the resulting layout is relatively rigid, so the rules need to be combined with an RNN for advance feedback.

The following video shows the results of predicting the layout structure with the four-layer LSTM. Here, the UI layout structure is akin to the framework of a building, while restoring the UI layer code from GUI attributes is akin to its interior design.

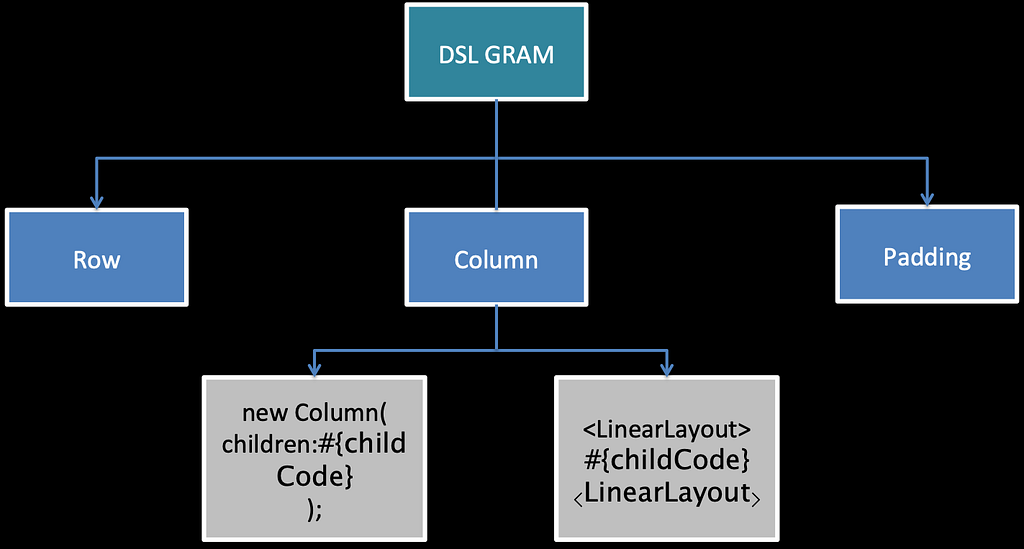
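A minimal sketch of the rule-based idea, assuming each element is already a named bounding box such as those produced by slicing: elements that share a horizontal band form a Row ordered left to right, and the bands stack top to bottom in a Column. `boxes_to_layout` and its nested-dict output are hypothetical; UI2CODE derives the structure from its cut order and adds RNN feedback, neither of which is modeled here.

```python
def boxes_to_layout(boxes):
    """Build a Column-of-Rows layout from (name, x, y, width, height) boxes.

    Boxes whose vertical extents overlap go into the same Row, ordered left to
    right; rows are stacked top to bottom inside a Column. A row holding a
    single element is emitted as that element directly.
    """
    rows = []  # each entry: [top, bottom, [boxes]]
    for box in sorted(boxes, key=lambda b: b[2]):
        _, _, y, _, h = box
        for row in rows:
            if y < row[1] and y + h > row[0]:  # vertical overlap with this row
                row[0], row[1] = min(row[0], y), max(row[1], y + h)
                row[2].append(box)
                break
        else:
            rows.append([y, y + h, [box]])

    children = []
    for _, _, members in rows:
        members.sort(key=lambda b: b[1])  # left to right
        if len(members) == 1:
            children.append(members[0][0])
        else:
            children.append({"Row": [m[0] for m in members]})
    return {"Column": children}
```

The rigidity mentioned above is visible here: a fixed overlap rule cannot tell, for instance, whether a tall image beside two stacked text lines should be a Row containing a Column, which is the kind of case where learned feedback helps.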

Code Generation and Plug-in

AI is essentially a matter of probability, while automatically generated code requires very high visual fidelity and 100 percent accuracy. Because probabilistic output makes this level of accuracy difficult to achieve, an editing tool is needed so that developers can quickly understand and modify the generated UI layout structure.
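A quick calculation shows why probability alone falls short. Assuming, purely for illustration, that each element is handled correctly 99 percent of the time and that errors are independent, a card with 20 elements comes out entirely correct only about 82 percent of the time. The numbers are hypothetical, not measurements from UI2CODE.

```python
def all_correct_probability(per_element_accuracy: float, element_count: int) -> float:
    """Probability that every element is correct, assuming independent errors."""
    return per_element_accuracy ** element_count


print(round(all_correct_probability(0.99, 20), 3))  # 0.818
```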

To this end, the Xianyu team implemented template-based matching for the DSL tree generated by UI2CODE, with the content of each code template defined by experienced Flutter engineers. So far, this has proved to be the best approach to code generation.
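Template matching over a DSL tree can be sketched as a recursive walk that picks a template by node type and fills in its fields. The templates below are minimal hypothetical stand-ins, not Xianyu’s; they do use real Flutter widgets (`Column`, `Row`, `Text`, `TextStyle`, `Color`, `Image.network`), and the DSL node keys (`type`, `text`, `font_size`, `color`, `src`, `width`, `height`, `children`) are assumptions.

```python
from string import Template

# Hypothetical templates for a few DSL node types; real ones would be richer.
TEMPLATES = {
    "Column": Template(
        "Column(crossAxisAlignment: CrossAxisAlignment.start, children: <Widget>[$children])"
    ),
    "Row": Template("Row(children: <Widget>[$children])"),
    "Text": Template(
        "Text('$text', style: TextStyle(fontSize: $font_size, color: Color(0xFF$color)))"
    ),
    "Image": Template("Image.network('$src', width: $width, height: $height)"),
}


def dart_string(value: str) -> str:
    """Escape a value for use inside a single-quoted Dart string literal."""
    return value.replace("\\", "\\\\").replace("'", "\\'").replace("$", "\\$")


def render(node: dict) -> str:
    """Recursively match each DSL node to its template and fill in its fields."""
    fields = {key: value for key, value in node.items() if key != "children"}
    if "text" in fields:
        fields["text"] = dart_string(fields["text"])
    fields["children"] = ", ".join(render(child) for child in node.get("children", []))
    return TEMPLATES[node["type"]].substitute(fields)
```

Keeping the Dart code in templates rather than in the generator is what lets engineers adjust the output style without touching the recognition pipeline; a missing field raises `KeyError`, which surfaces incomplete DSL nodes early.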

Tags are introduced into the code template, and an IntelliJ plug-in uses them to find the corresponding UIKIT components in the Flutter project and substitute them into the generated code, which improves code reuse.
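The article does not describe the tag syntax, so the following is a hypothetical illustration of the retrieve-and-replace idea. It assumes generated code contains tags of the form `{{uikit:Name|call|fallback}}`, scans the project’s Dart sources for widget classes with a regular expression, and swaps in the project widget when it exists or the plain fallback when it does not. The tag format, `find_widgets`, and `replace_uikit_tags` are all invented for this sketch; the actual plug-in runs inside IntelliJ.

```python
import re

# Matches Dart widget class declarations such as "class PriceTag extends StatelessWidget".
WIDGET_CLASS = re.compile(r"class\s+(\w+)\s+extends\s+(?:StatelessWidget|StatefulWidget)\b")
# Hypothetical tag: {{uikit:Name|widget call to use|fallback code}}
TAG = re.compile(r"\{\{uikit:(\w+)\|(.*?)\|(.*?)\}\}")


def find_widgets(dart_sources):
    """Collect widget class names declared in the project's Dart source strings."""
    names = set()
    for source in dart_sources:
        names.update(WIDGET_CLASS.findall(source))
    return names


def replace_uikit_tags(code, widgets):
    """Replace each tag with the project widget call if it exists, else the fallback."""
    def substitute(match):
        name, call, fallback = match.groups()
        return call if name in widgets else fallback
    return TAG.sub(substitute, code)
```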

The plug-in as a whole has to support the custom UIKIT, including retrieval, replacement, verification, creation, and modification, as well as graphical identification for the DSL tree. Much like an ERP system, it will take time to mature.

Key Takeaways

Of the five key components introduced in this article, four are machine vision problems, and they are linked together through AI. Releasing code into a production environment imposes extremely strict requirements, and AI’s probabilistic nature makes those requirements a major challenge. To address these issues, the Xianyu technical team has chosen to build UI2CODE primarily on machine vision capabilities, with AI technologies as a supplement, and will keep applying AI to make UI2CODE an ideal tool for automated code generation.

(Original article by Chen Yongxin 陈永新)


Introducing UI2CODE: An Automatic Flutter UI Code Generator was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
