
We present a way to create a GIF from a comic strip using deep learning. The final result looks like this:

Disclaimer: All the Dilbert comics are the work of Scott Adams and you can find them at dilbert.com

GIFs are better

On mobile devices, GIFs are a much better way to present content than static images. We have also seen an explosion of GIFs thanks to faster internet speeds and the ability to share them quickly with friends through social media apps. For a comic strip, where readers have had to endure zooming in and out, GIFs seem to be a natural progression.

Using deep neural nets for fun

We all love Dilbert and, in some weird way, the Pointy Haired Boss too. Thanks to Scott Adams, we have been thoroughly entertained through all these years. We wondered whether we could convert any comic strip into a GIF. After all, a GIF is just a series of image frames.

To create a GIF from a comic, we cut the comic strip into separate frames and then combine them into a GIF using FFmpeg. That’s the simple and sweet way to get a GIF. But can we do more?
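The cut-and-combine step can be sketched roughly as follows. This is only an illustration, not the code behind the project: it assumes the panels are equally wide and sit side by side, and the helper names `panel_boxes` and `ffmpeg_gif_command`, the frame file pattern and the two-second frame time are all made up for the example.

```python
def panel_boxes(strip_width, strip_height, n_panels):
    """Return (left, top, right, bottom) crop boxes for equally wide panels.

    Assumption: panels are laid out left to right with equal widths.
    """
    boxes = []
    for i in range(n_panels):
        left = i * strip_width // n_panels
        right = (i + 1) * strip_width // n_panels
        boxes.append((left, 0, right, strip_height))
    return boxes


def ffmpeg_gif_command(frame_pattern, output_path, seconds_per_frame=1.0):
    """Build (but do not run) an FFmpeg command that turns numbered frames into a GIF."""
    return [
        "ffmpeg", "-y",                               # overwrite the output if it exists
        "-framerate", str(1.0 / seconds_per_frame),   # input frames per second
        "-i", frame_pattern,                          # e.g. frame_01.png, frame_02.png, ...
        output_path,
    ]


# Hypothetical 900x280 strip with three panels.
boxes = panel_boxes(900, 280, 3)
command = ffmpeg_gif_command("frame_%02d.png", "strip.gif", seconds_per_frame=2.0)
print(boxes)
print(" ".join(command))
```

Each box can be handed to any image library's crop function, and the command list can be passed to `subprocess.run` once the cropped frames are saved under the numbered file names.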

Text Removal

We thought of removing the text and then making it appear after a second, so that it seems as if the character has just spoken the words. It somehow gives life to the characters.

So, the removal of text was the main task for this project.

Training data preparation

Whatever type of deep neural net we choose, we need labelled training data. We prepared ours manually using PixelAnnotationTool. It took me one hour to annotate approximately 200 images. You basically click once inside the bubble and once outside, and you get the following result. The tool uses the Watershed algorithm to do this job.

Manually Masked Speech Bubble

Deciding on deep neural net

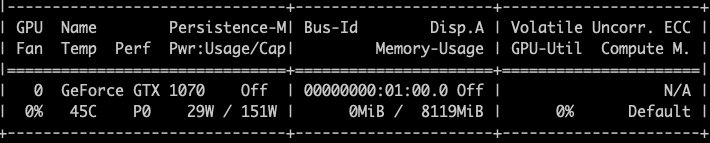

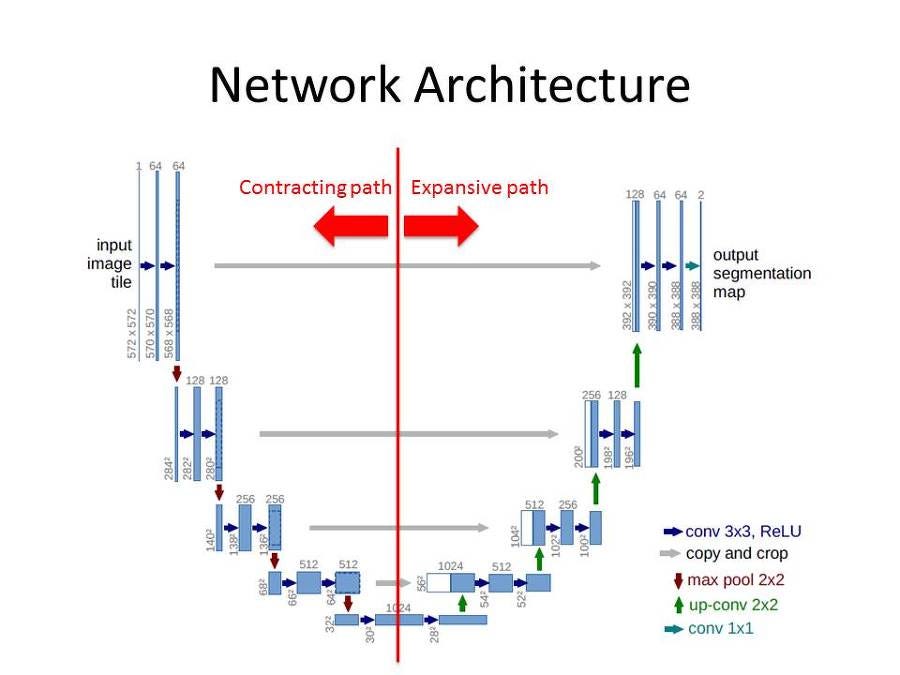

We were looking for architectures capable of image segmentation, the process of partitioning an image into multiple segments. The most notable one is Mask-RCNN, which is used for high-resolution images because it handles even tiny specks of objects well. Mask-RCNN also has a higher GPU memory requirement, somewhere in the range of 8 to 13 gigabytes, and needs a lot of tuning to train in less memory. Our hardware is a GTX 1070, which has only 8 GB of GPU memory.

While Mask-RCNN is more powerful and can be used for detection plus segmentation, we only needed segmentation, which Unet does well enough. We have also had good experience with the model’s performance in the past. So we decided to use the Unet model. It uses VGG as the base model and builds upon it. The model looks like this, with all the layers in their glory:

Unet architecture image from deeplearning.net

Train, Train

After deciding on the model, it was time to train Unet on the training data created by hand with the tool. The data was split into training and validation sets, and training took approximately 15 minutes in total on the GTX 1070.

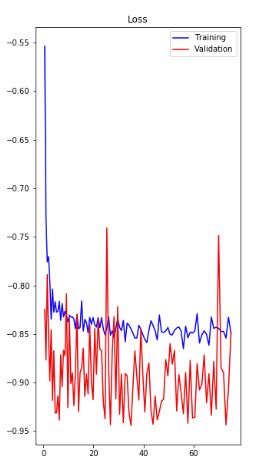

The graph shows the loss, which is the negative of the dice coefficient. It drops sharply within the first 10 iterations of training and then fluctuates around a dice value of -0.85. The binary accuracy and loss for the validation data follow it and settle at around the same level. (The validation loss fluctuates a lot because the validation set is very small.)
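A loss that settles near -0.85 fits a loss defined as the negative dice coefficient, where -1 would mean a perfect overlap between predicted and true masks. Here is a minimal sketch of that idea in plain Python. It works on flattened masks, and the `smooth` term is a common stabiliser that we assume here rather than something stated above.

```python
def dice_coefficient(y_true, y_pred, smooth=1.0):
    """Dice overlap between two flattened masks with values in [0, 1].

    `smooth` (an assumption in this sketch) avoids division by zero
    when both masks are empty.
    """
    intersection = sum(t * p for t, p in zip(y_true, y_pred))
    return (2.0 * intersection + smooth) / (sum(y_true) + sum(y_pred) + smooth)


def dice_loss(y_true, y_pred, smooth=1.0):
    """Negative dice coefficient: lower is better, -1.0 is a perfect match."""
    return -dice_coefficient(y_true, y_pred, smooth)


perfect = dice_loss([1, 0, 1, 1], [1, 0, 1, 1])
disjoint = dice_loss([1, 1, 0, 0], [0, 0, 1, 1])
print(perfect, disjoint)
```

In a real training run the same formula would be written with the tensor operations of the deep learning framework so that gradients can flow through it.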

Time to see the results

Yay! The hard work is over. Time to see the results. Pretty awesome, isn’t it?

Some issues needed attention

The result looks awesome to me, yet it was only 20% of the project. If you look closely above, you will see small white blobs sprinkled over a few of the images, far from the real speech bubbles; those don’t look good. To remove these small artifacts, we found the connected components in the generated mask and removed the ones whose area fell below a threshold.
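The artifact cleanup can be illustrated with a small flood-fill sketch. It is not the project's code: the function name `remove_small_components`, the 4-connectivity and the `min_area` value are assumptions chosen for the example, and a real pipeline would more likely use an image library's connected-components routine.

```python
from collections import deque


def remove_small_components(mask, min_area):
    """Zero out 4-connected blobs of 1s whose pixel count is below min_area.

    mask is a list of rows containing 0/1 values; a cleaned copy is returned.
    """
    height, width = len(mask), len(mask[0])
    cleaned = [row[:] for row in mask]
    seen = [[False] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            if not mask[y][x] or seen[y][x]:
                continue
            # Breadth-first search collects every pixel of this blob.
            component = []
            queue = deque([(y, x)])
            seen[y][x] = True
            while queue:
                cy, cx = queue.popleft()
                component.append((cy, cx))
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < height and 0 <= nx < width and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(component) < min_area:
                for cy, cx in component:
                    cleaned[cy][cx] = 0
    return cleaned


mask = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],  # the lone 1 on the right plays the role of a stray blob
    [0, 0, 0, 0, 0],
]
cleaned = remove_small_components(mask, min_area=2)
print(cleaned)
```

The threshold has to sit below the area of the smallest genuine speech bubble, otherwise real text regions would be erased along with the specks.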

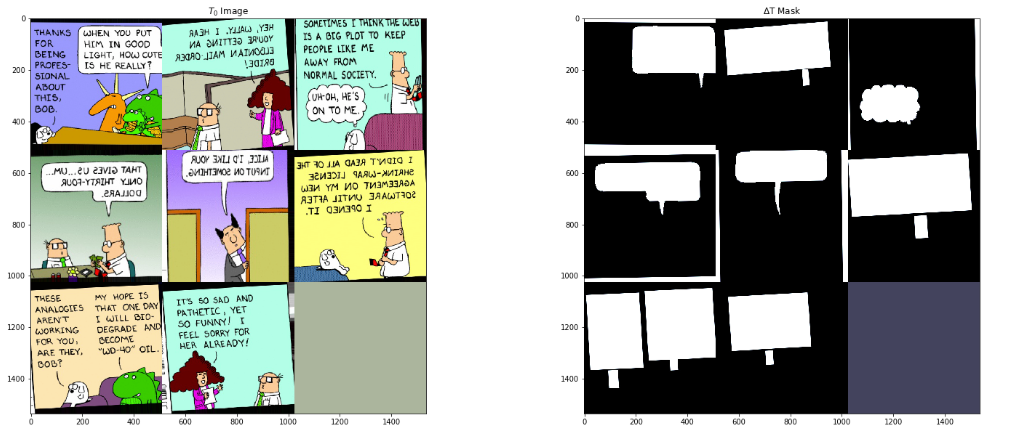

Texts without the speech bubble

In addition, this doesn’t work as well when there is no speech bubble surrounding the text. And if you look at recent Dilbert comics, you will find that most of them have no speech bubbles. To fix that, we needed new training data with masks covering the text itself. So I sat down one more time to create masks, but this time PixelAnnotationTool didn’t help, so I used this tool. The new training data combined with the old solved the issue. As you can see below, the training data now looks like this:

Training data with and without speech bubbles

Refilling the void background

As soon as you mask out an area, that area turns white. For a speech bubble that may look okay, but for text without a speech bubble it looks ugly. So we need to repaint the void left by the masked area with the original background. To do that, for each row of the image (each height from the top) we simply copied a background pixel’s color to every masked pixel in that row. Since every row contains at least one pixel with the actual background color, we can find it and replicate it across that row.
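A row-by-row refill along these lines might look like the sketch below. Which background pixel to sample is not specified above, so taking the first unmasked pixel in each row is our assumption, as is the name `refill_rows`.

```python
def refill_rows(image, mask):
    """Repaint masked pixels with a background color taken from the same row.

    image: list of rows of pixel values (for example RGB tuples).
    mask:  list of rows of 0/1 values, where 1 marks text to erase.
    Assumption: the first unmasked pixel in a row is a fair background sample.
    """
    filled = [row[:] for row in image]
    for y, (pixel_row, mask_row) in enumerate(zip(image, mask)):
        background = next((p for p, m in zip(pixel_row, mask_row) if not m), None)
        if background is None:
            continue  # the whole row is masked, so there is nothing to copy from
        for x, is_masked in enumerate(mask_row):
            if is_masked:
                filled[y][x] = background
    return filled


yellow, grey, black = (255, 255, 0), (10, 10, 10), (0, 0, 0)
image = [
    [yellow, black, yellow],
    [grey, grey, black],
]
mask = [
    [0, 1, 0],
    [0, 0, 1],
]
filled = refill_rows(image, mask)
print(filled)
```

This works well when the background is flat or changes only from top to bottom; a background with detail running across the row would need a proper inpainting method instead.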

Duration for frames

One could give every frame the same duration, but then some frames would display for too long and others for too short a time. To solve this, we count how many positive pixels the Unet model returns for each frame and give each frame a proportional duration.
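The proportional timing can be sketched in a few lines. The total running time, the minimum display time for frames with little or no text and the function name `frame_durations` are assumptions made for this example.

```python
def frame_durations(positive_pixels, total_seconds, min_seconds=0.5):
    """Split total_seconds across frames in proportion to their text pixel counts.

    positive_pixels: number of mask pixels predicted as text for each frame.
    min_seconds (an assumption here) keeps text-free frames visible briefly.
    """
    total = sum(positive_pixels)
    if total == 0:
        # No text anywhere: fall back to equal durations.
        return [total_seconds / len(positive_pixels)] * len(positive_pixels)
    return [max(min_seconds, total_seconds * count / total) for count in positive_pixels]


durations = frame_durations([1000, 3000, 0], total_seconds=8.0)
print(durations)
```

Because of the minimum, the durations can add up to slightly more than `total_seconds`, which seems an acceptable trade for never flashing a frame too quickly to read.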

Future

Starting with Dilbert, the aim is to cover as many comic publications as possible. We believe the trained model can be adjusted for other publications.

Do let us know your comic suggestions.

Without further ado just head over to comic2gif.com and create your own GIF.

Dilbert as a GIF was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
