
This sample app is designed for clubs, gyms, museums (or even Costco): any organization that issues photo membership cards to control access. With just a few serverless tools, we can develop a scalable, durable membership management system that uses facial recognition to keep track of members.

The app allows an administrator to enroll faces in their organization, and then verify new faces against the known membership list. In traditional app development, this would be a major undertaking, but with serverless it’s easier than you might think.

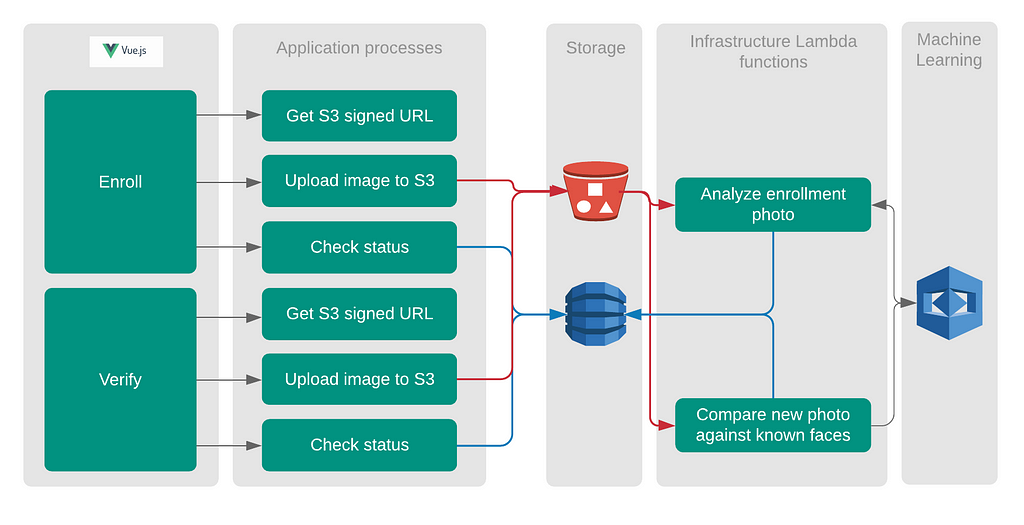

The key takeaways in this project are that serverless lets us clearly delineate the front-end and back-end of a web-app, and by hooking code onto events fired by AWS services, we can benefit from massive scale and reliability with very little actual development. Our design looks like this:

In this exercise:

- We use VueJS on the front-end to create a mobile-friendly web app that will take photos and handle user interaction.

- On the back-end we use API Gateway and Lambda to handle the interactivity with the front-end.

- S3 stores all the images and a DynamoDB table keeps track of the facial data in those images.

- Finally, AWS Rekognition does all the heavy lifting in enrolling and verifying faces.

A quick walk-through of the final web app

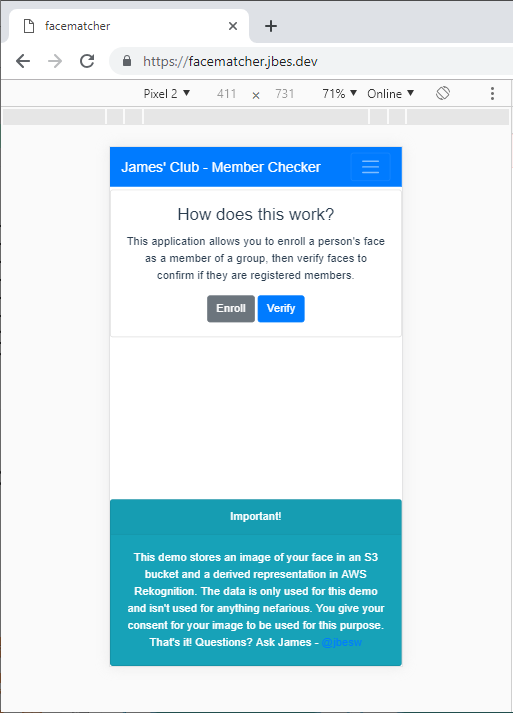

You can test the end result by visiting https://facematcher.jbes.dev/. This is a live application intended for mobile phones and tablets (you can use Chrome tools to force a mobile layout, as shown below).

Please read this first — every enrollment in the live hosted app joins a collection of members and the subsequent verification occurs against that entire population of faces. If you choose to join, you are a permanent member so please don’t enroll your face if you are uncomfortable with having your facial data stored in the demo system.

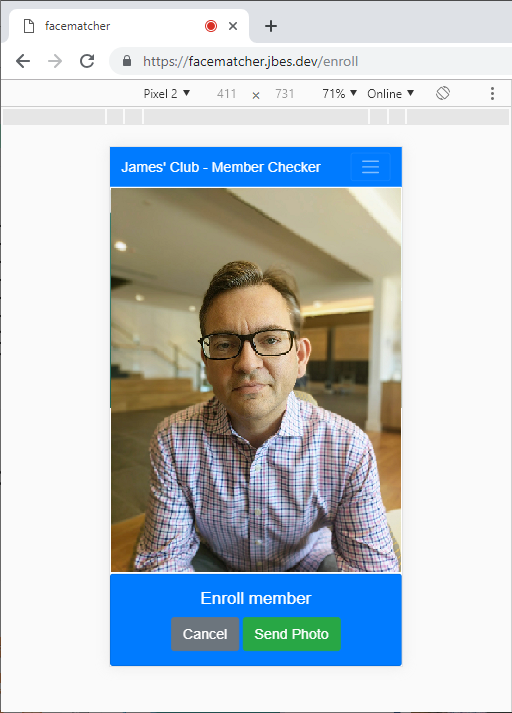

Click ‘Enroll’ to register your face — make sure you’re in a well-lit environment with your face looking forward towards the camera:

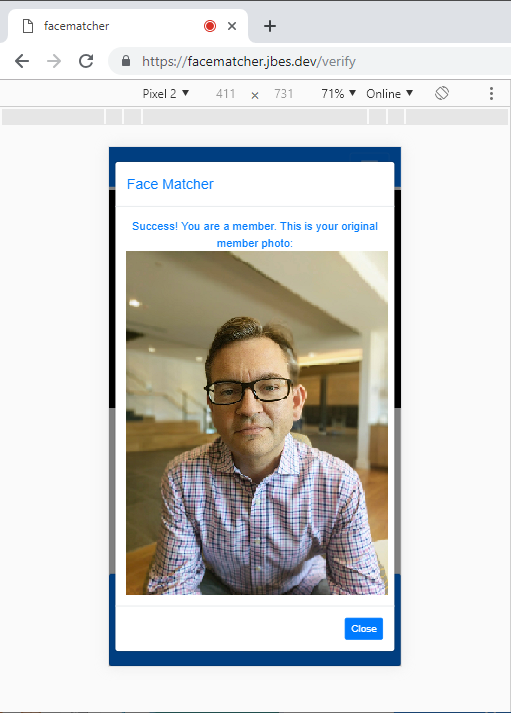

After enrolling, click ‘Verify’ and take a photo of yourself — if it successfully matches, you’ll get a confirmation showing the original enrollment photo:

Just a couple of quick caveats about this process. First, this sample app uses getUserMedia to capture the photo. Some browsers don’t support this (including browsers on iOS), so you will need to use Firefox or Chrome for testing.

Second, the live hosted app above protectively shuts down if it’s used excessively (to save my wallet from unexpected charges), so if the system is unavailable, it’s due to over-use. This is only a demo environment but if you like what it does, you can launch the code in your own AWS account, which is what we’ll be doing in this tutorial.

Everything in this exercise can be completed within the AWS Free Tier, which includes ample facial recognition usage for testing. Having said that, it’s always possible to be charged for usage and you are always responsible for your own bill.

Finally, you can also follow my walk-through at https://www.youtube.com/watch?v=nyNKOYqZ3b4.

Ok, back to the code!

Setting up Rekognition

AWS Rekognition is not supported in every region so for clarity everything in this demo happens in us-east-1 (N. Virginia). You will need to install and configure your AWS CLI first and then open a command prompt on your operating system.

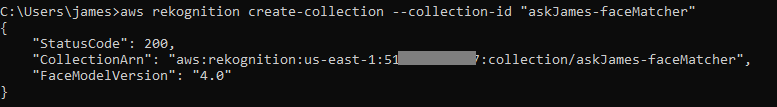

We will be using a single collection within Rekognition called askJames-faceMatcher — to set up the collection, from your command prompt enter:

aws rekognition create-collection --collection-id "askJames-faceMatcher"

You should see the output below, meaning your Rekognition collection is now ready to use.

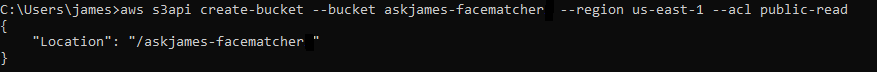

We will need an S3 bucket for our app with public read permissions (for production I would recommend enforcing public access only through a CloudFront distribution but this helps keep the demo simple).

We are going to call the bucket askjames-facematcher-<enter a random code> — this will give you a unique bucket name.

From the same command prompt, type:

aws s3api create-bucket --bucket askjames-facematcher-RANDOMCODE --region us-east-1 --acl public-read

If successful, you will see a JSON response similar to this:

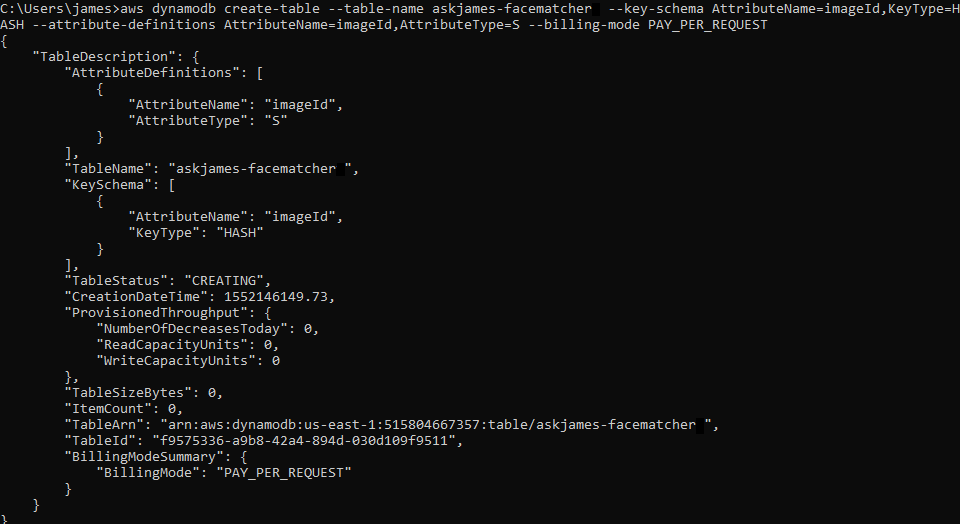

Next, let’s create the DynamoDB table with on-demand capacity by entering the following:

aws dynamodb create-table --table-name askjames-facematcher --key-schema AttributeName=imageId,KeyType=HASH --attribute-definitions AttributeName=imageId,AttributeType=S --billing-mode PAY_PER_REQUEST

You should see a JSON response describing the new table.

The back-end of this system is now set up — next, let’s configure the Lambda functions that will connect these services.

Deploying the back-end glue.

The way this application works, when an image arrives in the S3 bucket, it triggers a Lambda function to fire, which then processes the image with Rekognition.
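As a rough sketch of that hand-off, the function below pulls the bucket name and object key out of a single S3 event record and builds the parameters for Rekognition’s IndexFaces call. The helper names and the MaxFaces setting are illustrative assumptions, not code from the repository; only the collection name comes from this tutorial.

```javascript
// Hypothetical helpers: not taken from the askjames-facematcher-backend repo.
const COLLECTION_ID = 'askJames-faceMatcher';

// S3 event notifications URL-encode object keys and use '+' for spaces.
function decodeS3Key(rawKey) {
  return decodeURIComponent(rawKey.replace(/\+/g, ' '));
}

// Build IndexFaces parameters from one record of an S3 object-created event.
function buildIndexFacesParams(record) {
  const bucket = record.s3.bucket.name;
  const key = decodeS3Key(record.s3.object.key);
  return {
    CollectionId: COLLECTION_ID,
    Image: { S3Object: { Bucket: bucket, Name: key } },
    MaxFaces: 1, // assumption: one member per enrollment photo
  };
}

// Example record, trimmed to the fields used above.
const sampleRecord = {
  s3: {
    bucket: { name: 'askjames-facematcher-123456' },
    object: { key: 'enroll/my+photo.jpg' },
  },
};
const indexParams = buildIndexFacesParams(sampleRecord);
console.log(indexParams.Image.S3Object.Name); // enroll/my photo.jpg
```

Because Rekognition reads the image directly from S3, the function never has to download the photo itself; it only passes along a pointer to the object.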

You need to download my code and deploy it to AWS to make this work, so let’s set that up.

- First, clone the code repository from https://gitlab.com/jbesw/askjames-facematcher-backend into a directory on your machine. It contains all the Lambda functions we will be using.

- Once downloaded, run npm install to set up the packages needed.

- In case you haven’t installed Serverless framework before on your system (or you need to update), also run npm install -g serverless.

- Finally, you must set your bucket name in serverless.yaml — enter your unique bucket name on line 14 (the UPLOAD_BUCKET variable).

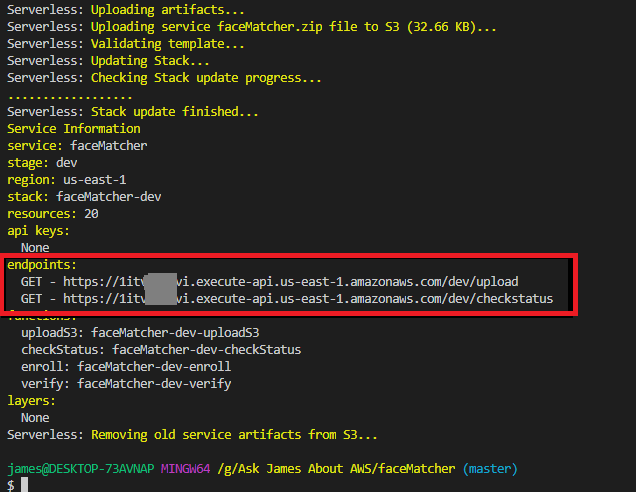

You can now deploy this code with sls deploy, wait a minute for the magic to happen, then run sls s3deploy, since we are using a plugin to hook up the S3 events to some code. Take note of the endpoint listed in the output, since we will need this URL later:

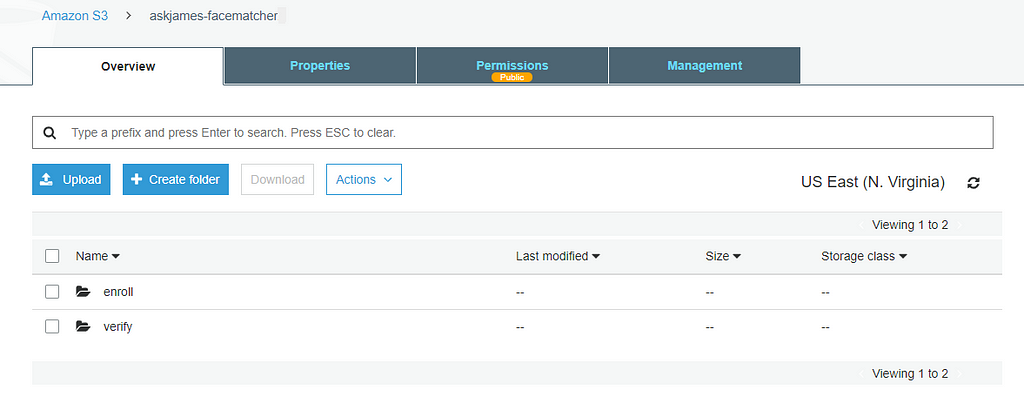

We are now ready to test the whole back-end of the application. Go to the S3 bucket you created earlier and create two folders called ‘enroll’ and ‘verify’. After you’ve finished, it will look like this:

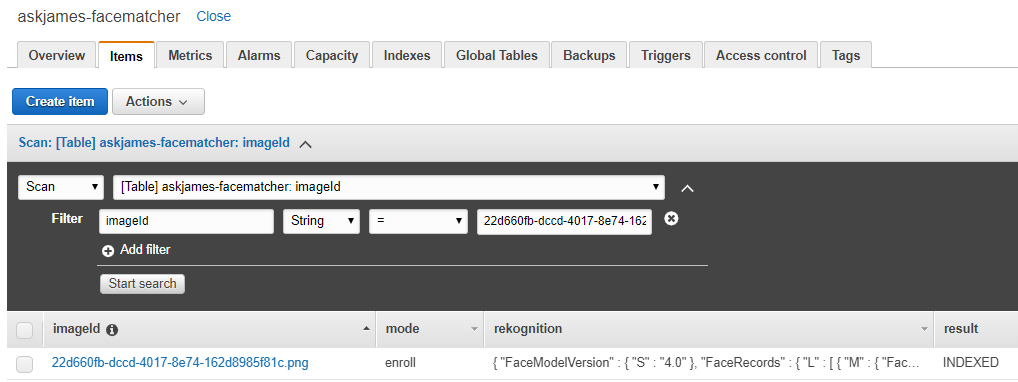

You’ll need two different photos of the same person’s face for the next steps, to test the photo enrollment and verification processes. Upload one of the photos to the enroll folder, and then check on your DynamoDB table to find a new record has appeared a few seconds later:

In the result column, ‘INDEXED’ means the system has successfully found and enrolled the face in the Rekognition collection.

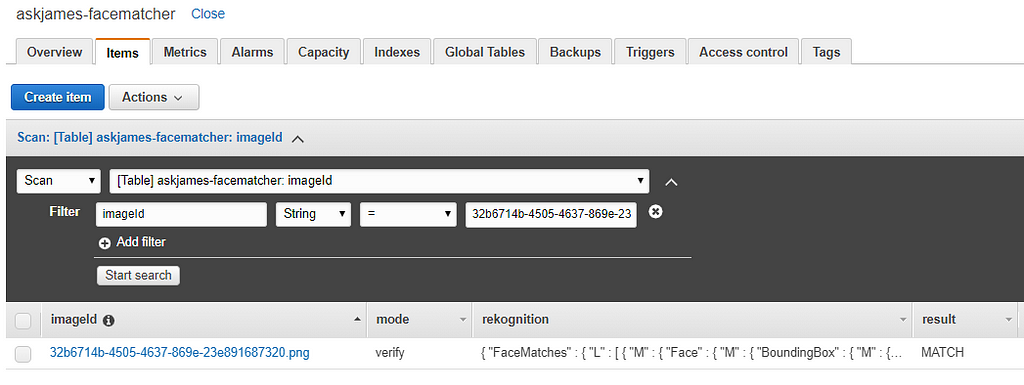

Go back to S3 and upload the other photo of the person into the verify folder, and then refresh the DynamoDB table to see the result:

In the result column, ‘MATCH’ indicates that Rekognition matched the photo with a face in the collection, and this DynamoDB item contains details of that match in the JSON attribute.
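To make the ‘MATCH’ result more concrete, here is a minimal sketch of how a SearchFacesByImage response could be condensed into a table item. The FaceMatches, Similarity and Face.FaceId fields are part of Rekognition’s response; the function name, the ‘NO_MATCH’ value, the item attribute names and the face IDs in the sample are assumptions made for illustration.

```javascript
// Hypothetical helper: turns a SearchFacesByImage response into a table item.
// 'MATCH' is the value shown in the tutorial; 'NO_MATCH' is an assumed name.
function summarizeSearch(imageId, response) {
  const matches = response.FaceMatches || [];
  if (matches.length === 0) {
    return { imageId, result: 'NO_MATCH' };
  }
  // Pick the highest-similarity match regardless of the order returned.
  const best = matches.reduce((a, b) => (b.Similarity > a.Similarity ? b : a));
  return {
    imageId,
    result: 'MATCH',
    faceId: best.Face.FaceId,
    similarity: best.Similarity,
    json: JSON.stringify(response), // full response kept for later inspection
  };
}

// Made-up response with two candidate faces.
const sampleResponse = {
  FaceMatches: [
    { Similarity: 91.2, Face: { FaceId: 'face-a' } },
    { Similarity: 99.1, Face: { FaceId: 'face-b' } },
  ],
};
const verifyItem = summarizeSearch('verify/photo.jpg', sampleResponse);
console.log(verifyItem.result, verifyItem.faceId); // MATCH face-b
```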

What just happened? When you put a new object into the S3 bucket, one of two Lambda functions fires, depending on which folder you targeted. For the enroll folder, the function attempts to find and register a new face in the photo; for the verify folder, it compares any face found against everyone already registered.

The key point is that all of this happens serverlessly and independently of any front-end. Whether you copy 1 object into the bucket or 1,000, these functions will do their respective jobs and you are only constrained by your budget. The core of the app’s functionality has now been completed.
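In the deployed app this routing is presumably handled by the S3 event configuration that the plugin sets up, but the same decision can be sketched in a few lines of code. The function name and return values below are hypothetical.

```javascript
// Hypothetical router: maps an object key to the job that should process it.
function jobForKey(key) {
  // Creating a folder in the S3 console adds a zero-byte object whose key
  // ends in '/', so placeholders like 'enroll/' are skipped.
  if (key.endsWith('/')) return null;
  if (key.startsWith('enroll/')) return 'enroll';
  if (key.startsWith('verify/')) return 'verify';
  return null; // objects outside the two folders are ignored
}

console.log(jobForKey('enroll/alice.jpg')); // enroll
console.log(jobForKey('verify/alice-2.jpg')); // verify
console.log(jobForKey('enroll/')); // null
```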

Connecting the front-end.

FaceMatcher has a VueJS front-end — this is a single-page application that communicates with the back-end via an API.

- To get started, clone my front-end code from https://gitlab.com/jbesw/askjames-facematcher-frontend.

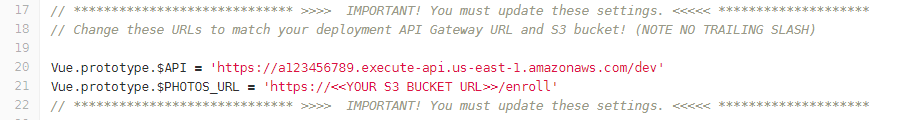

- From the same directory run npm install to download all the packages it needs. Once installed, go to the src folder and open main.js — scroll down to lines 20–21:

- For the API, enter the endpoint URL you saved from deploying the back-end. It will look similar to the one in the screenshot (only the code at the beginning should be different).

- Next, enter the S3 bucket URL — for example, if it was askjames-facematcher-123456, the URL would be http://s3.amazonaws.com/askjames-facematcher-123456/enroll.

To run the front-end application locally, enter npm run dev at the command prompt. Once the build process completes, visit http://localhost:8080 to see the app.

In Chrome tools (press F12), you can toggle the device toolbar to force the page to render as it would on a mobile device (it works best on mobile or tablet layouts).

A quick recap.

In this serverless application, we built out a back-end by initializing the services we wanted to use first (Rekognition, S3 and DynamoDB). We then deployed the ‘glue’ functions that were fired when objects were uploaded to S3 — these events are the core part of the system. These functions stored the responses from Rekognition in DynamoDB.

The front-end actually does very little — it takes a photo with your webcam or phone’s camera, requests an upload URL from S3 and pushes the photo up to the service. It then polls an API which checks with DynamoDB to see if the photo has finished processing, and displays the result.
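The polling step can be sketched as follows. This is not the front-end’s actual code: the function name, the retry limits and the shape of the status item are assumptions, and the network call and delay are passed in as functions so the example runs without a back-end.

```javascript
// Hypothetical polling loop. fetchStatus(imageId) resolves to the item stored
// for that image, or null if processing has not finished yet.
async function pollForResult(imageId, fetchStatus, sleep, maxAttempts = 10, delayMs = 1000) {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const item = await fetchStatus(imageId);
    if (item && item.result) return item;
    if (attempt < maxAttempts) await sleep(delayMs);
  }
  return null; // gave up: still processing, or the upload never arrived
}

// Usage with a fake back-end that has an answer on the third call.
let calls = 0;
const fakeFetch = async () => (++calls < 3 ? null : { imageId: 'abc', result: 'MATCH' });
const noSleep = () => Promise.resolve();
const pollDemo = pollForResult('abc', fakeFetch, noSleep);
pollDemo.then((item) => console.log(item ? item.result : 'timed out'));
```

In a browser, fetchStatus would wrap a request to the API endpoint and sleep would wrap setTimeout in a Promise. Capping the number of attempts keeps the UI from spinning forever if a photo contains no usable face.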

You could deploy the front-end to S3 (as I did in the hosted app) by entering npm run build and copying the files in the dist directory to the bucket. The hosted app uses a CloudFront distribution and a custom domain name to finish out the deployment.

Critically, although this app is simple, it’s extremely scalable and durable. The front end is loaded from S3 and there is no server acting as a single point of failure. The mechanism for facial analysis stores all the images in S3 and the results in DynamoDB. We do not need to back up the images or scale under heavy load.

How does this compare with a non-serverless design?

The most common approach would use a web-server like Flask, Django or Express to generate the front-end code and manage the file uploading process and the interaction with Rekognition. This server would have to then store the images somewhere in a file system and maintain a database of files and their respective Rekognition responses.

The limitations with this approach are scaling, availability and effort — the server is a single point of failure and can only reach a certain capacity before scaling out is required. The amount of coding required to make this work would be considerably greater than the serverless approach illustrated here.

What’s next?

There are many ways to improve the performance of the app, and the UI could be much more useful. In production, you would also want to add authentication to restrict usage.

But with this skeleton application, you can see that the core functionality is simple to build and deploy with serverless, thanks to the ease of using the managed services provided. We have built out a fully-featured member management system using facial recognition with very little actual code.

How to Build a Member App using Facial Recognition and Serverless was originally published in Hacker Noon on Medium.
