Object Detection: Theory

Ever wanted to detect objects in an image or a video? Probably yes! That’s why you are here! This story is divided into two parts. This part deals with the main theory behind Object Detection. In the next part, we will program the components of Object Detection we have seen here and get them to work (detect objects in an image). We will not be using the TensorFlow Object Detection API; instead, we are going to write our own Object Detection program using the YOLO (You Only Look Once) algorithm. I’ll be sharing what I learned from a Coursera course. This story can be pretty lengthy (big things demand more effort). I think this will do for the introductory part.

Let’s check out the requirements:

- YOLO v2 model weights

- TensorFlow (of course!)

Object Detection, as the name suggests, means detecting objects in an image or a video, blah… blah… blah. I think Google has enough on that. Let’s not waste time and jump into some really important components that get this thing to work!

We will be looking at some key components of Object Detection:

- Sliding Windows Algorithm

- Bounding Boxes

- Intersection over Union [IOU]

- Non-max Suppression

- Anchor Boxes

That’s it! At the end, we will use these concepts to understand why the YOLO algorithm works so well. We will first look at the Sliding Windows Algorithm and then see how YOLO solves the problems we have with sliding windows.

Let’s assume we want to detect dogs in an image. For this purpose, we have a training data set of closely cropped images (X), each with a label (Y) of 0 (no dog) or 1 (yes, a dog!).

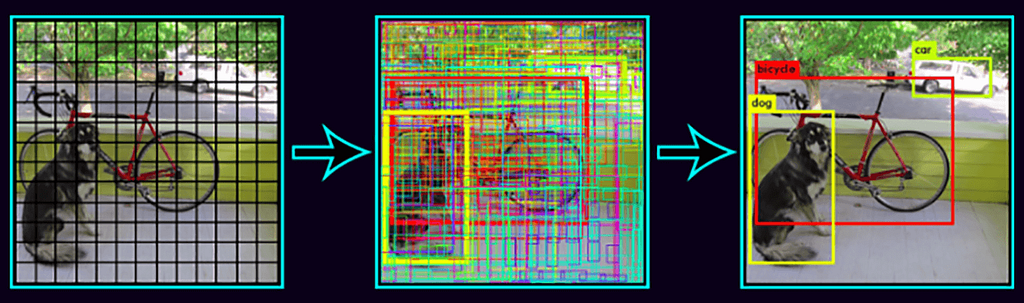

Sliding Windows Algorithm

Now, suppose we trained a Convolutional Neural Network (CNN) on the above data set that outputs 1 if it detects a dog and 0 otherwise. Now we want to test it on real-life images of dogs. Our CNN learned to tell whether there is a dog from closely cropped training images, which is fine as long as the inputs are closely cropped. But what about real-life images (say, a photo taken with our phone)? Such images are not closely cropped. So how do we get our CNN to tell whether there is a dog or not? This is where Sliding Windows comes into play.

We define a window of some size (say w by w) and place it over a region of the image (covering a w by w area, of course!). We then feed this region to our trained CNN and get it to output 0 or 1. We repeat this process for every region of the image, moving the window by some stride each time, hence the name sliding windows.

Once done, we take a bigger window and slide it over the whole image. By repeating this process again and again with increasing window sizes, we will hopefully end up with a window roughly the size of the dog in the image, for which our CNN outputs 1, meaning we detected a dog in that particular region. That is all there is to the Sliding Windows Algorithm.
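The procedure above can be sketched in a few lines of Python. This is a minimal illustration, not the implementation from the next part: `toy_classifier` is a hypothetical stand-in for the trained CNN (it only checks whether every pixel in the window is bright), the 8 by 8 image is made up, and the helper names `sliding_windows`, `crop` and `detect` are mine.

```python
from typing import Callable, Iterator, List, Tuple

Image = List[List[float]]      # a grayscale image stored as rows of pixel values
Window = Tuple[int, int, int]  # (top, left, size) of a square window


def sliding_windows(height: int, width: int, size: int, stride: int) -> Iterator[Window]:
    """Yield every size-by-size window that fits inside the image, moving by `stride`."""
    for top in range(0, height - size + 1, stride):
        for left in range(0, width - size + 1, stride):
            yield (top, left, size)


def crop(image: Image, window: Window) -> Image:
    """Cut the pixels covered by `window` out of `image`."""
    top, left, size = window
    return [row[left:left + size] for row in image[top:top + size]]


def detect(image: Image, classifier: Callable[[Image], int],
           sizes: List[int], stride: int) -> List[Window]:
    """Run the 0/1 classifier on every window, smallest window size first."""
    height, width = len(image), len(image[0])
    hits = []
    for size in sorted(sizes):
        for window in sliding_windows(height, width, size, stride):
            if classifier(crop(image, window)) == 1:  # one CNN call per window
                hits.append(window)
    return hits


# Toy usage: an 8x8 image whose "dog" is a bright 4x4 patch at rows/cols 2..5.
image = [[1.0 if 2 <= r <= 5 and 2 <= c <= 5 else 0.0 for c in range(8)] for r in range(8)]


def toy_classifier(window: Image) -> int:
    # Hypothetical stand-in for the trained CNN: "dog" if every pixel is bright.
    return int(all(p > 0.5 for row in window for p in row))


print(detect(image, toy_classifier, sizes=[2, 4], stride=2))
# → [(2, 2, 2), (2, 4, 2), (4, 2, 2), (4, 4, 2), (2, 2, 4)]
```

Note that several overlapping windows fire on the same "dog"; that kind of messy output is what Non-max Suppression deals with later in this story.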

One of the disadvantages of sliding windows is the computational cost: we crop out many square regions of the image and run each one through the CNN independently. A coarser search (bigger windows or a bigger stride) reduces the number of crops, and therefore the computation, at the cost of accuracy, while a finer search (smaller windows and stride) is more accurate but computationally expensive. One way around this problem is to implement sliding windows convolutionally. Sliding windows also do not localize objects accurately unless the stride and window size are small.
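To put a rough number on that cost, the sketch below counts how many crops (and therefore CNN forward passes) a multi-scale search needs. The 100 by 110 image size, the window sizes of 20, 40 and 60 pixels, and the strides of 4 and 10 are hypothetical values chosen for illustration.

```python
def count_windows(height: int, width: int, size: int, stride: int) -> int:
    """Number of size-by-size windows that fit in a height-by-width image at this stride."""
    if size > height or size > width:
        return 0
    rows = (height - size) // stride + 1
    cols = (width - size) // stride + 1
    return rows * cols


def total_crops(height: int, width: int, sizes: list, stride: int) -> int:
    """Total CNN forward passes needed for a full multi-scale sliding-window search."""
    return sum(count_windows(height, width, s, stride) for s in sizes)


sizes = [20, 40, 60]  # hypothetical window sizes, in pixels
print(total_crops(100, 110, sizes, stride=4))   # → 914
print(total_crops(100, 110, sizes, stride=10))  # → 176
```

Going from a stride of 10 to a stride of 4 multiplies the work by roughly five here, and every one of those crops is a separate CNN evaluation.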

Let’s see how YOLO can help us predict accurate Bounding Boxes.

Bounding-Box Prediction

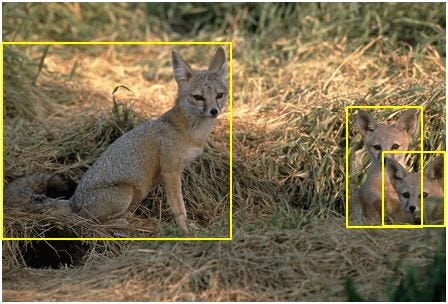

Bounding Boxes are the boxes that enclose the objects in an image. As stated earlier, sliding windows output less accurate bounding boxes (their accuracy depends on the window size and stride). Now, let’s look at another approach to Bounding-Box prediction.

Bounding Boxes (I know they are not dogs, but I guess a fox or something else!)

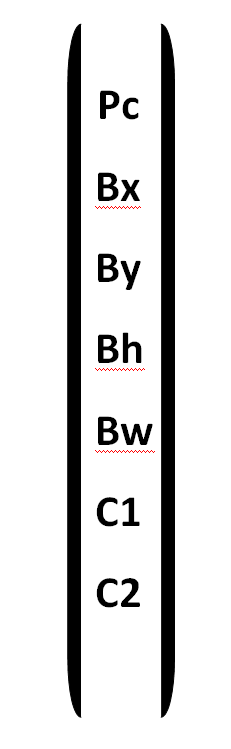

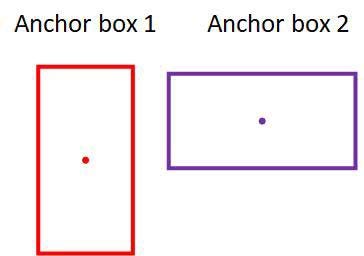

The idea is to divide the image into a grid (say w by w cells) and then, for each grid cell, define our Y label, which earlier was just 0 or 1, as follows:

Pc : the probability that there is an object in the grid cell. In the training labels it is either 0, meaning no object, or 1, meaning an object.

Bx : If Pc is 1, then Bx is the x coordinate of the center (mid-point) of the Bounding Box.

By : If Pc is 1, then By is the y coordinate of the center (mid-point) of the Bounding Box.

Bh : If Pc is 1, then Bh is the height of the Bounding Box.

Bw : If Pc is 1, then Bw is the width of the Bounding Box.

C1 : the probability that the object belongs to class C1.

C2 : the probability that the object belongs to class C2.

One thing to note here is that the number of classes may vary, depending on whether it’s a binary or a multi-class classification problem.

To summarize, if a grid cell contains an object (i.e. Pc = 1), we look at the class of the object and at the Bounding Box for that object in the grid cell.
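As a small sketch of the label for one grid cell, the function below builds the vector in the order listed above (Pc, bx, by, bh, bw, c1, c2, ...). The one-hot encoding of the class entries, the 0-based `class_id`, and the use of `None` for the "don't care" entries of an empty cell are my illustrative choices, and `make_label` is a hypothetical name.

```python
from typing import List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (bx, by, bh, bw), in the order used above


def make_label(box: Optional[Box], class_id: int, num_classes: int) -> List[Optional[float]]:
    """Build the label [Pc, bx, by, bh, bw, c1, ..., cN] for one grid cell.

    `class_id` is 0-based, so class C1 is 0 and C2 is 1.
    """
    if box is None:
        # No object: Pc = 0 and every other entry is a "don't care" value (None here).
        return [0.0] + [None] * (4 + num_classes)
    bx, by, bh, bw = box
    one_hot = [1.0 if k == class_id else 0.0 for k in range(num_classes)]
    return [1.0, bx, by, bh, bw] + one_hot


print(make_label((0.5, 0.6, 0.3, 0.4), class_id=1, num_classes=2))
# → [1.0, 0.5, 0.6, 0.3, 0.4, 0.0, 1.0]
print(make_label(None, class_id=0, num_classes=2))
# → [0.0, None, None, None, None, None, None]
```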

Now, there are a couple of questions we should address.

- What grid size should be used?

- Which grid cell is responsible for outputting the Bounding Box for an object that spans multiple grid cells?

In practice, a 19 by 19 grid is commonly used, and the grid cell responsible for outputting the Bounding Box for a particular object is the one that contains the mid-point of that object. Since each mid-point lies in exactly one cell, exactly one cell is responsible for each object. One more advantage of a fine 19 by 19 grid is that the chance of the mid-points of two different objects falling in the same grid cell is smaller.
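The mid-point rule can be sketched as follows, assuming the mid-point coordinates are normalized by the image width and height to the range [0, 1] (an assumption; the function name `responsible_cell` and the object positions are hypothetical). The usage lines also show why a finer grid helps: two nearby objects share a cell on a coarse 3 by 3 grid but land in different cells on a 19 by 19 grid.

```python
from typing import Tuple


def responsible_cell(x_mid: float, y_mid: float, grid_size: int = 19) -> Tuple[int, int]:
    """Return (row, col) of the grid cell that contains an object's mid-point.

    Assumes x_mid and y_mid are normalized by the image width and height to [0, 1].
    """
    col = min(int(x_mid * grid_size), grid_size - 1)  # clamp 1.0 into the last cell
    row = min(int(y_mid * grid_size), grid_size - 1)
    return row, col


# Two hypothetical objects whose mid-points are close together:
print(responsible_cell(0.50, 0.60), responsible_cell(0.53, 0.62))
# → (11, 9) (11, 10)   different cells on a 19 by 19 grid
print(responsible_cell(0.50, 0.60, 3), responsible_cell(0.53, 0.62, 3))
# → (1, 1) (1, 1)      same cell on a coarse 3 by 3 grid
```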

This was all about Bounding-Boxes Prediction. Now, let’s talk about another component called Intersection over Union.

Intersection Over Union [IOU]

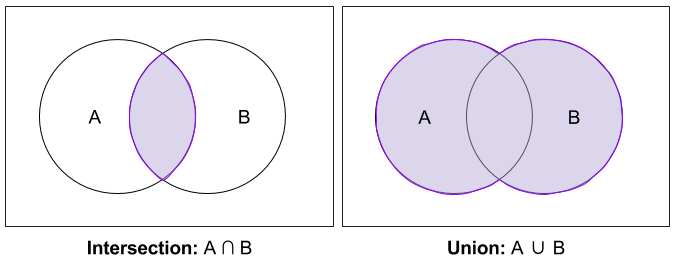

Earlier we talked about predicting Bounding Boxes, but how accurate are these predictions? This is where the concept of Intersection over Union comes in. We may want to revisit set theory here. Let’s take a quick look at intersection and then union:

Suppose we have two sets, A and B. A is the set of teachers in an institution and B is the set of students in the same institution. Their intersection is another set C containing the people who belong to both, such as students who also work as assistant teachers. Their union is a new set D containing all the teachers from set A and all the students from set B.
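Python's built-in sets make the teachers and students example concrete. The names are made up; `&` computes the intersection and `|` the union.

```python
teachers = {"Ana", "Ben", "Chen"}          # set A (hypothetical names)
students = {"Chen", "Dev", "Eli", "Fay"}   # set B; Chen is a student who also teaches

both = teachers & students      # intersection C: people in A and in B
everyone = teachers | students  # union D: people in A or B (or both)

print(sorted(both))       # → ['Chen']
print(sorted(everyone))   # → ['Ana', 'Ben', 'Chen', 'Dev', 'Eli', 'Fay']
print(len(both) / len(everyone))  # size of the intersection over size of the union
```

The last line divides the size of the intersection by the size of the union, which is the same ratio IOU computes for boxes, with areas in place of counts.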

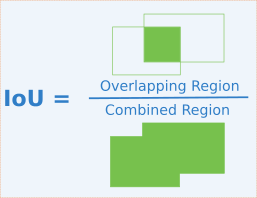

Now, what is Intersection over Union?

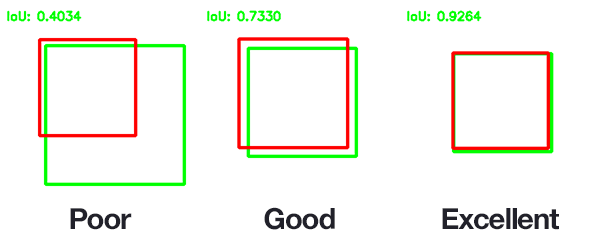

Suppose our algorithm outputs a Bounding Box (say A) for an object in some grid cell. Intersection over Union tells us how close our prediction is to the ground truth (say B): we divide the area of the intersection of A and B by the area of the union of A and B. If the IOU is greater than or equal to a certain threshold (say 0.5), the prediction is considered correct (good enough); otherwise, we need to work more on our algorithm. An IOU of 1 means our prediction is exactly the same as the ground truth.

Note that the greater the IOU, the more accurate the prediction. By convention, we use 0.5 as the threshold, but we are free to use a threshold greater than 0.5 to be stricter.
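Here is a minimal sketch of IOU for two axis-aligned boxes: IOU = area(A ∩ B) / area(A ∪ B), where the union area is area(A) + area(B) − area(A ∩ B). It assumes boxes are given as corner coordinates (x1, y1, x2, y2); `from_midpoint` converts the (bx, by, bh, bw) mid-point format from the Bounding-Box section into corners. Both function names are hypothetical, and the example boxes are made up.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2): top-left and bottom-right corners


def from_midpoint(bx: float, by: float, bh: float, bw: float) -> Box:
    """Convert the (bx, by, bh, bw) mid-point format into corner coordinates."""
    return (bx - bw / 2, by - bh / 2, bx + bw / 2, by + bh / 2)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    # The intersection is itself a box; clamp to 0 when the boxes do not overlap.
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter  # otherwise the overlap would be counted twice
    return inter / union if union > 0 else 0.0


prediction, ground_truth = (1, 1, 4, 4), (2, 2, 5, 5)
print(iou(prediction, ground_truth))    # 4 / 14, about 0.29: below 0.5, so not good enough
print(iou((0, 0, 4, 4), (1, 0, 4, 4)))  # → 0.75
```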

Below is the image depicting this idea:

Congratulations! We have come a long way. Just a couple of topics to go before we are ready to write our very own Object Detection program.

Non-max Suppression

So far, we have noticed that when a single object spans multiple grid cells, each cell may output its own prediction with a probability score (the Pc we saw earlier). This can make our predictions look messy; we probably just want to see a single Bounding Box per object, the one with the maximum probability score (Pc).

How do we do it?

Out of all the Bounding Boxes output by our network, we first discard the ones whose class probability is below a certain threshold (say 0.5). From the remaining boxes, we choose the box with the highest class probability (let’s call it A) and keep it. Then we calculate the IOU of each remaining box with A and discard the boxes whose IOU is greater than or equal to a threshold (say 0.5). We repeat this, picking the highest-probability box among those left, until no boxes remain.

Note that if we have three classes, the right thing to do is to carry out NMS (Non-Max Suppression) independently for each of the three classes.
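The steps above can be sketched as a greedy loop. This is a minimal illustration assuming corner-format boxes (x1, y1, x2, y2) and the 0.5 thresholds used in the text; the function names and the example boxes are hypothetical. `per_class_nms` runs the same procedure separately for each class, as suggested for the three-class case.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) corners


def iou(a: Box, b: Box) -> float:
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: List[Box], scores: List[float],
                        score_threshold: float = 0.5, iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS for one class; returns the indices of the boxes that survive."""
    # Step 1: discard low-probability boxes, then sort the rest by probability.
    candidates = [i for i, s in enumerate(scores) if s >= score_threshold]
    candidates.sort(key=lambda i: scores[i], reverse=True)
    keep = []
    while candidates:
        best = candidates.pop(0)  # Step 2: the highest-probability box left ("A")
        keep.append(best)
        # Step 3: discard boxes that overlap A too much, then repeat.
        candidates = [i for i in candidates if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep


def per_class_nms(boxes: List[Box], scores: List[float], classes: List[int],
                  **thresholds: float) -> List[int]:
    """Run NMS separately per class, so boxes of different classes never suppress each other."""
    keep = []
    for c in sorted(set(classes)):
        idx = [i for i, k in enumerate(classes) if k == c]
        kept = non_max_suppression([boxes[i] for i in idx], [scores[i] for i in idx], **thresholds)
        keep.extend(idx[j] for j in kept)
    return sorted(keep)


boxes = [(0, 0, 4, 4), (1, 0, 4, 4), (10, 10, 14, 14), (0, 0, 4, 4), (0, 0, 4, 4)]
scores = [0.9, 0.8, 0.7, 0.3, 0.6]
classes = [0, 0, 0, 0, 1]  # the last box is a different class on the same spot
print(non_max_suppression(boxes, scores))     # → [0, 2]
print(per_class_nms(boxes, scores, classes))  # → [0, 2, 4]
```

Box 1 is dropped because it overlaps box 0 with an IOU of 0.75, box 3 is dropped by the probability threshold, and box 4 survives only when NMS is run per class.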

That is it! Last but not least, let’s take a look at Anchor Boxes.

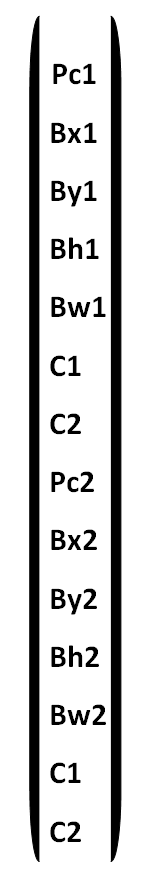

Anchor boxes

From the very start we have come across some problems and tackled them. Just one problem is left to deal with: how do we detect multiple objects in the same grid cell?

The idea is that, instead of defining just one set of Bounding-Box prediction values (Pc, bx, by, bh, bw, c1, c2, etc.) with one shape (say a rectangle), we define two, or even three or more, sets of prediction values with different shapes (say a rectangle and a square), so that they are able to detect objects of different shapes and sizes.

The image shows two Anchor Boxes defined with different Bounding-Box values and, therefore, different shapes.

Now, how do we decide which anchor box is used to predict which object?

Here, again, we make use of Intersection over Union: each object is assigned to the anchor box whose shape has the highest IOU with the shape of the object’s ground-truth Bounding Box, and that anchor box makes the prediction.
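One way to sketch this assignment: treat the object's box and each anchor box as if they shared the same center, so that IOU depends only on their widths and heights. The two anchor shapes (a square and a wide rectangle) and the object sizes below are hypothetical, and the names `shape_iou` and `best_anchor` are mine.

```python
from typing import List, Tuple

Shape = Tuple[float, float]  # (width, height) of a box


def shape_iou(a: Shape, b: Shape) -> float:
    """IOU of two boxes that share the same center, so only their shapes matter."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    union = a[0] * a[1] + b[0] * b[1] - inter
    return inter / union


def best_anchor(obj: Shape, anchors: List[Shape]) -> int:
    """Index of the anchor box whose shape overlaps the object's shape the most."""
    return max(range(len(anchors)), key=lambda k: shape_iou(obj, anchors[k]))


ANCHORS = [(1.0, 1.0), (2.0, 1.0)]  # hypothetical: anchor 0 is a square, anchor 1 a wide rectangle
print(best_anchor((1.8, 0.9), ANCHORS))  # → 1  (IOU 0.81 vs about 0.52)
print(best_anchor((0.9, 1.1), ANCHORS))  # → 0  (IOU about 0.83 vs about 0.43)
```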

So, this was all about Anchor Boxes, a component I consider important. With this, we have covered the theory we need for Object Detection. Congratulations on completing a big journey! The last topic for this story is the YOLO algorithm: we will put together all the components we studied from the start and see how they make this algorithm work so well.

Before that, for a good intuition about Anchor Boxes, consider this image:

For the YOLO algorithm, when preparing our training set, we divide each image into a grid (commonly 19 by 19) and define Anchor Boxes for each grid cell (say 2 anchor boxes per cell). For example, each cell in a 19 by 19 grid will output two Anchor Box predictions. The size of the Y-label will then be 19 by 19 by 2 by (5 + num_of_classes), where 2 corresponds to the number of Anchor Boxes and 5 corresponds to Pc and the bounding-box values (bx, by, bh, bw). Once we are done preparing our training data set this way, we train a CNN on it. This CNN takes input images (say of size 100 by 110) and outputs a volume of size 19 by 19 by 2 by (5 + num_of_classes).
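To make the label size concrete, the sketch below builds an all-zero Y-label as nested Python lists of shape 19 by 19 by 2 by (5 + num_of_classes) and writes one object into it. The two classes, the chosen cell and anchor, and the box values are hypothetical, as are the function names; a real pipeline would normally use a TensorFlow or NumPy array instead of nested lists.

```python
from typing import List, Tuple

Label = List[List[List[List[float]]]]  # indexed as label[row][col][anchor][value]


def label_shape(grid_size: int = 19, num_anchors: int = 2,
                num_classes: int = 2) -> Tuple[int, int, int, int]:
    """Shape of the Y-label: grid x grid x anchors x (5 + num_classes)."""
    return (grid_size, grid_size, num_anchors, 5 + num_classes)


def empty_label(grid_size: int = 19, num_anchors: int = 2, num_classes: int = 2) -> Label:
    """All-zero label: Pc = 0 everywhere (no objects); the other zeros are ignored."""
    s, _, a, d = label_shape(grid_size, num_anchors, num_classes)
    return [[[[0.0] * d for _ in range(a)] for _ in range(s)] for _ in range(s)]


def place_object(label: Label, row: int, col: int, anchor: int,
                 box: Tuple[float, float, float, float], class_id: int) -> None:
    """Write one object's [Pc, bx, by, bh, bw, c1..cN] into the given cell and anchor slot."""
    vec = label[row][col][anchor]
    vec[0] = 1.0             # Pc: there is an object here
    vec[1:5] = list(box)     # bx, by, bh, bw
    vec[5 + class_id] = 1.0  # one-hot class (0-based class_id)


y = empty_label()
place_object(y, row=11, col=9, anchor=1, box=(0.5, 0.6, 0.3, 0.4), class_id=1)
s, _, a, d = label_shape()
print(label_shape(), s * s * a * d)  # → (19, 19, 2, 7) 5054
print(y[11][9][1])                   # → [1.0, 0.5, 0.6, 0.3, 0.4, 0.0, 1.0]
```

With two classes the label holds 5,054 numbers per image; with 80 classes, for example, it would be 19 × 19 × 2 × 85 = 61,370.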

Predictions: when fed a new image, this CNN outputs a Y-label in the format specified above. A couple of things to note here. First, grid cells that did not find any object will have Pc close to 0 (of course!), and their bounding-box and class values are meaningless, so we ignore them. Second, suppose the object is rectangular and we have defined two anchor boxes (one square and one rectangle); the network will then output meaningful values for the rectangular anchor box, while the square one will have some random values we don’t care about.

Last but not least, since each grid cell outputs two bounding boxes (because there are two anchor boxes, i.e. a rectangle and a square), our output will look very messy. To deal with this, we apply Non-max Suppression as described above.
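Putting the prediction step together, here is a minimal sketch that walks over every cell and anchor box of the network output, drops low-confidence boxes, and then applies per-class Non-max Suppression. Scoring each box as Pc times its largest class probability is an assumption on my part (a common choice in YOLO-style post-processing); the 2 by 2 grid, the thresholds, the function name `postprocess` and all values are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) corners
Detection = Tuple[float, int, Box]       # (score, class_id, box)


def iou(a: Box, b: Box) -> float:
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def postprocess(prediction: List, score_threshold: float = 0.5,
                iou_threshold: float = 0.5) -> List[Detection]:
    """Turn a grid x grid x anchors x (5 + N) output into a short list of detections.

    Each prediction[row][col][anchor] is [Pc, bx, by, bh, bw, c1, ..., cN].
    """
    detections: List[Detection] = []
    for row in prediction:
        for cell in row:
            for vec in cell:  # one vector per anchor box
                pc, bx, by, bh, bw = vec[:5]
                class_probs = vec[5:]
                class_id = max(range(len(class_probs)), key=lambda k: class_probs[k])
                score = pc * class_probs[class_id]  # assumed scoring: Pc x best class probability
                if score >= score_threshold:
                    box = (bx - bw / 2, by - bh / 2, bx + bw / 2, by + bh / 2)
                    detections.append((score, class_id, box))
    # Greedy per-class NMS: visit boxes from most to least confident and keep a box
    # only if no already-kept box of the same class overlaps it too much.
    detections.sort(key=lambda d: d[0], reverse=True)
    kept: List[Detection] = []
    for det in detections:
        if all(k[1] != det[1] or iou(k[2], det[2]) < iou_threshold for k in kept):
            kept.append(det)
    return kept


# Hypothetical 2 x 2 grid, 2 anchors, 2 classes; most slots hold a low-confidence filler.
filler = [0.1, 0.5, 0.5, 0.2, 0.2, 0.5, 0.5]
pred = [[[list(filler) for _ in range(2)] for _ in range(2)] for _ in range(2)]
pred[0][0][0] = [0.9, 0.30, 0.3, 0.4, 0.4, 0.9, 0.1]    # a dog (class 0)
pred[0][1][0] = [0.8, 0.32, 0.3, 0.4, 0.4, 0.95, 0.05]  # the same dog, seen from a neighbouring cell
pred[1][1][1] = [0.7, 0.80, 0.8, 0.2, 0.2, 0.0, 1.0]    # a separate object of class 1
for score, class_id, box in postprocess(pred):
    print(round(score, 2), class_id)
# → 0.81 0
#   0.7 1
```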

That’s all I have for this story. I hope I was able to help you and add value to your knowledge. In the next post, we will write our own Object Detection program using TensorFlow and YOLO v2.

Credits: [Images used in this story]

Object Detection: Theory was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
