“What I cannot create, I do not understand.”

— Richard Feynman

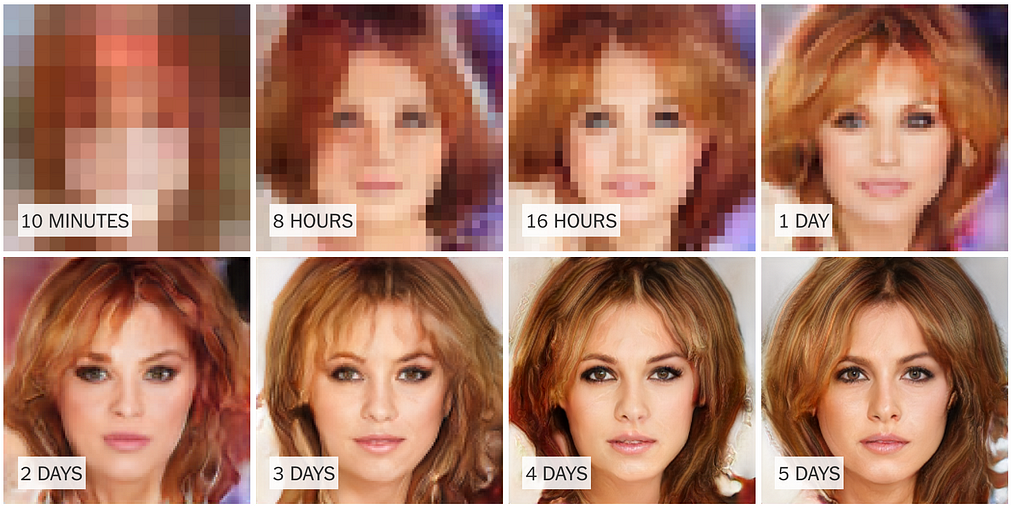

The above images look real, but more than that, they look familiar. They resemble a famous actress that you may have seen on television or in the movies. They are not real, however. A new type of neural network created them.

Generative Adversarial Networks (GANs), sometimes called generative networks, generated these fake images. The NVIDIA research team used this new technique by feeding thousands of photos of celebrities to a neural network. The network produced thousands of pictures, like the ones above, that resemble famous faces. They look real, but machines created them. GANs let researchers build new images that share many of the features of the images the network was fed. A GAN can be fed photographs of anything from tables to animals, and after training, it produces new pictures that resemble the originals.

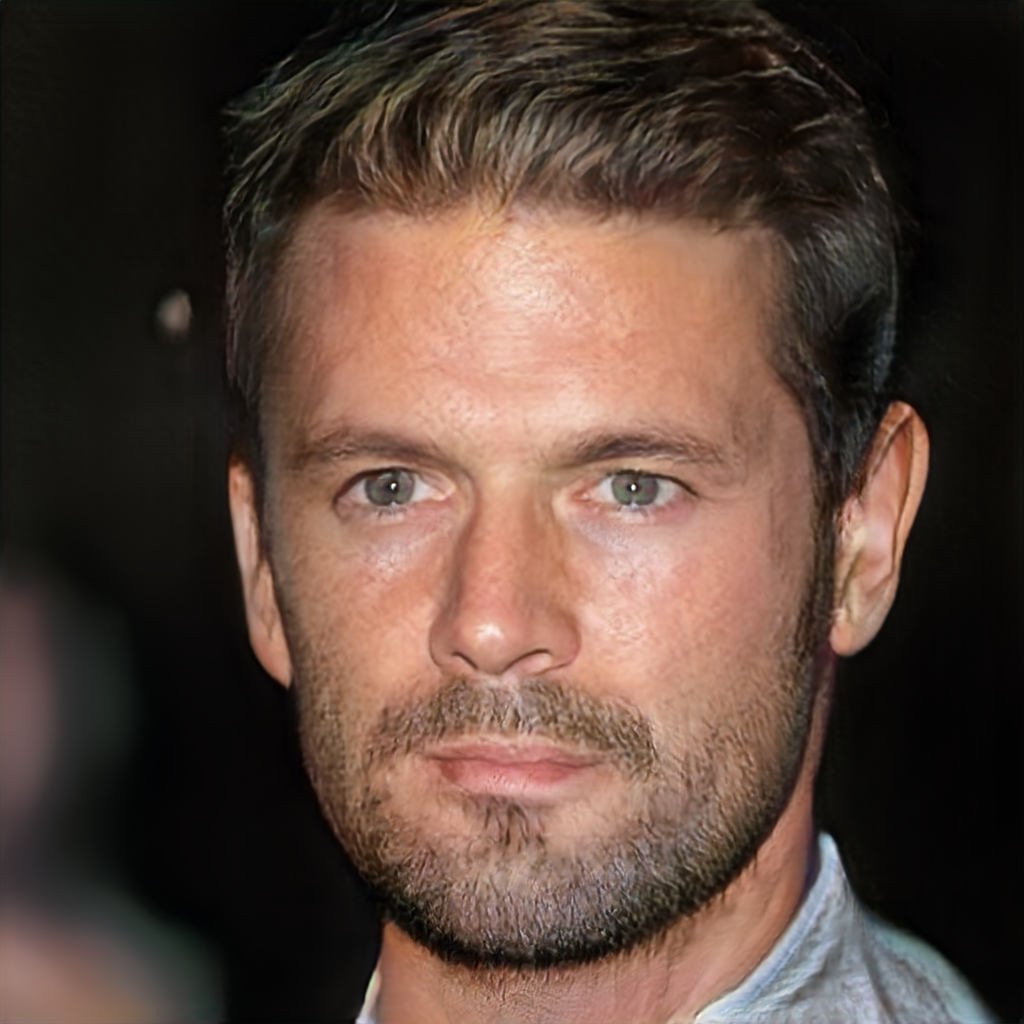

Of the two images above, can you tell the real from the fake?¹

To generate these images, the NVIDIA team set up two neural networks: one that produced the pictures and another that determined whether they were real or fake. Combining these two neural networks produces a GAN. They play a cat-and-mouse game: one creates fake images that look like the real ones fed into it, and the other decides whether they are real. Does this remind you of anything? The Turing Test. Think of the networks as playing a guessing game about whether an image is real or fake.
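
To make the cat-and-mouse game concrete, here is a deliberately tiny sketch in plain Python. It is not NVIDIA's setup, and every detail is an assumption chosen for illustration: the "real data" are numbers drawn from a normal distribution with mean 2.0 instead of photos, the generator can only shift random noise by one learned offset `b`, and the discriminator is plain logistic regression `D(x) = sigmoid(w * x + c)`. Each step, the discriminator is nudged to tell real from fake, and then the generator is nudged to fool it.

```python
import math
import random


def sigmoid(u: float) -> float:
    """Numerically stable logistic function."""
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)


def train_toy_gan(steps: int = 3000, batch: int = 64, lr: float = 0.05,
                  real_mean: float = 2.0, seed: int = 0) -> tuple:
    """Alternate discriminator and generator updates on 1-D toy data.

    Real data:     x ~ Normal(real_mean, 1)
    Generator:     G(z) = z + b, with z ~ Normal(0, 1) and one learned offset b
    Discriminator: D(x) = sigmoid(w * x + c), its estimate that x is real
    Returns the learned parameters (b, w, c).
    """
    rng = random.Random(seed)
    b = 0.0          # generator starts far from the real data
    w = c = 0.0      # discriminator starts out unable to tell anything apart
    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]

        # Discriminator step: gradient descent on
        # -log D(real) - log(1 - D(fake)), averaged over the batch.
        gw = gc = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            gw -= (1.0 - d) * x
            gc -= (1.0 - d)
        for x in fake:
            d = sigmoid(w * x + c)
            gw += d * x
            gc += d
        w -= lr * gw / batch
        c -= lr * gc / batch

        # Generator step: gradient descent on -log D(G(z)), so b moves
        # in whichever direction makes the fakes look more "real" to D.
        gb = 0.0
        for x in fake:
            gb -= (1.0 - sigmoid(w * x + c)) * w
        b -= lr * gb / batch
    return b, w, c


b, w, c = train_toy_gan()
print(f"learned offset b = {b:.2f} (real data mean = 2.0), D weight w = {w:.3f}")
```

If training works, `b` ends up near 2.0, the point where the discriminator can no longer separate the two sources and its weight `w` shrinks toward zero. A real GAN swaps both one-line models for deep networks and works on images, but the alternating updates have the same shape.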

After the GAN is trained, one of its neural networks creates fake images that look like the real ones fed to the GAN, often convincingly enough that they are hard to tell apart. This technique can generate large amounts of synthetic data that may help researchers predict the future or even construct simulated worlds. That is why, for Yann LeCun, Director of Facebook A.I. Research, “Generative Adversarial Networks is the most interesting idea in the last ten years in machine learning.” GANs will be helpful for creating images and perhaps for building software simulations of the real world, where developers can train and test other types of software. For example, companies writing self-driving software for cars can train and test it in simulated worlds.

These simulated worlds and situations are currently hand-crafted by developers, but some believe that in the future GANs will create all of these scenarios. GANs generate new images and videos from very compressed data. Thus, you could use the two neural networks of a GAN to save data and then restore it: instead of zipping your files, you could use one neural network to compress them and the other to regenerate the original videos or images. It is no coincidence that in the human brain, some of the apparatus used for imagination is the same as that used for memory recall. Demis Hassabis, a co-founder of DeepMind, published a paper that “showed systematically for the first time that patients with damage to their hippocampus, known to cause amnesia, were also unable to imagine themselves in new experiences.” The finding established a link between the constructive process of imagination and the reconstructive process of episodic memory recall.

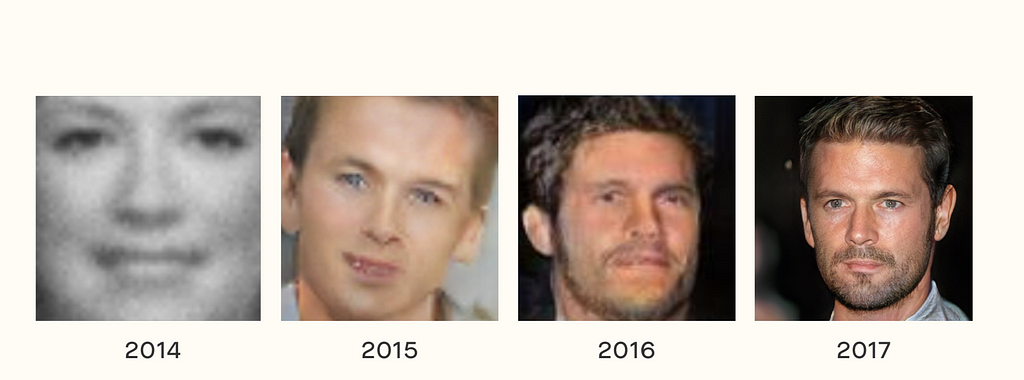

Increasingly realistic synthetic faces generated by variations on Generative Adversarial Networks (GANs)

The Creator of GANs

Ian Goodfellow, the creator of GANs, came up with the idea at a bar in Montreal, where he and fellow researchers were trying to figure out what it would take for a computer to create photographs. The initial plan was to work out the statistics that determine what makes a photo, and then feed them to a machine so that it could produce photos itself. Goodfellow thought that plan would never work because there are far too many statistics involved. So he thought about using a different tool, a neural network: he could teach neural networks to figure out the underlying characteristics of the pictures fed to the machine and to generate new ones.

Goodfellow then pitted two neural networks against each other so that together they could build realistic photographs. One created fake images, and the other determined whether they were real. The idea was that one adversarial network would teach the other how to produce images that could not be distinguished from real ones.
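
For readers who want the formal version, the original GAN paper by Goodfellow and colleagues writes this contest as a two-player minimax game over a value function $V(D, G)$. Here $D(x)$ is the discriminator's estimated probability that $x$ is a real image, and $G(z)$ is the image the generator produces from random noise $z$:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\bigl[\log D(x)\bigr]
  + \mathbb{E}_{z \sim p_z(z)}\bigl[\log\bigl(1 - D(G(z))\bigr)\bigr]
```

The discriminator raises $V$ by scoring real images near 1 and fakes near 0; the generator lowers it by making $D(G(z))$ large. The same paper notes that, in practice, the generator is often trained to maximize $\log D(G(z))$ instead, because that gives it stronger gradients early in training, when the discriminator easily rejects its fakes.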

That same night, he went home a little drunk and stayed up coding the initial concept of a GAN on his laptop. It worked on the first try. A few months later, he and several other researchers published the seminal paper on GANs at a conference. That first GAN was trained on handwritten digits from MNIST, a well-known image dataset.

In the following years, researchers published hundreds of papers using GANs to produce not only images but also videos and other data. Now at Google Brain, Goodfellow leads a group that is working to make this two-network technique reliable to train. This work is yielding services that are far better at generating images and learning sounds, among other things. “The models learn to understand the structure of the world,” Goodfellow says. “And that can help systems learn without being explicitly told as much.”

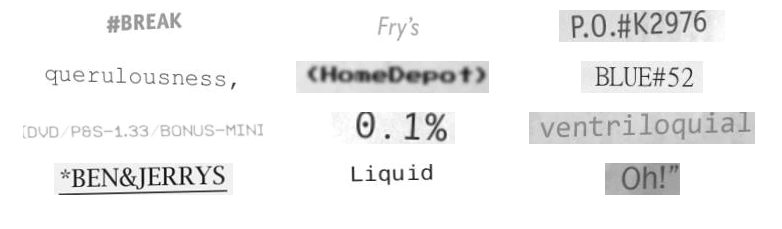

Synthetically generated word images

GANs could eventually help neural networks learn with less data by generating synthetic images that can then be used to train better neural networks. Recently, a group of researchers at Dropbox improved their mobile document scanner by using synthetically generated images. GANs produced new word images that, in turn, were used to train the neural network.

And that is just the start. Researchers believe the same technique can be used to create artificial data that can be shared openly on the internet without revealing the primary source, keeping the original data private. This would allow researchers to create and share healthcare information without sharing sensitive data about patients.

GANs also show promise for predicting the future. It may sound like science fiction now, but that might change over time. LeCun is working on software that can generate video of future situations based on current video. He believes that human intelligence lies in our ability to predict the future, and therefore that GANs will be a powerful force for Artificial Intelligence systems in the future.

The Birthday Paradox Test

Even though GANs generate new images and sounds, some people ask whether GANs generate new information. GANs are trained on a collection of data. Can they produce data that contains information outside of the data given to them? Can they create images that are entirely different from the ones fed to them?

One way to analyze this is with what is called the Birthday Paradox Test. The test takes its name from the birthday paradox: if you put 23 random people in a room (two soccer teams plus a referee), the chance that two of them share a birthday is more than 50%.

This effect happens because, with 365 days in a year, you need only around the square root of that number of people before a duplicate birthday becomes likely. More generally, the Birthday Paradox says that for a discrete distribution with support of size N (N possible outcomes, roughly equally likely), a random sample of about √N items will likely contain a duplicate. What does that mean? Let me break it down.
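
The 23-person figure can be checked exactly. A minimal sketch, assuming 365 equally likely birthdays (no leap years): the chance that n people all have different birthdays is (365/365) × (364/365) × (363/365) and so on for n factors, and the chance of a shared birthday is one minus that product. The function names are illustrative.

```python
def prob_shared_birthday(n: int, days: int = 365) -> float:
    """Probability that at least two of n people share a birthday,
    assuming each of `days` birthdays is equally likely."""
    p_all_distinct = 1.0
    for i in range(n):
        # Person i+1 must avoid the i birthdays already taken.
        p_all_distinct *= (days - i) / days
    return 1.0 - p_all_distinct


def smallest_group_for_even_odds(days: int = 365) -> int:
    """Smallest group size whose shared-birthday probability exceeds 50%."""
    n = 1
    while prob_shared_birthday(n, days) <= 0.5:
        n += 1
    return n


print(round(prob_shared_birthday(22), 3))  # 0.476
print(round(prob_shared_birthday(23), 3))  # 0.507
print(smallest_group_for_even_odds())      # 23
```

So √365 ≈ 19 gives the right order of magnitude, while the exact even-odds threshold is 23 people.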

If there are 365 days in a year, then you need on the order of √365 people, about 19, to have a good chance of a duplicate birthday. But this also works in reverse. If you do not know the number of days in a year, you can gather groups of a fixed number of people and check whether two of them share a birthday. From the group size at which a shared birthday becomes likely, you can infer the number of days. If 22 people are enough, then the year has roughly 22² = 484 days: the right order of magnitude, though an overestimate of the true 365.
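
The square-root rule can be made a little more precise with a standard approximation, again assuming N equally likely outcomes and using 1 − i/N ≈ e^(−i/N). It also shows how to invert the rule:

```latex
P(\text{no duplicate among } n) = \prod_{i=0}^{n-1}\Bigl(1 - \frac{i}{N}\Bigr)
  \approx \exp\!\Bigl(-\frac{n(n-1)}{2N}\Bigr)

P = \tfrac{1}{2} \;\Longrightarrow\; n(n-1) \approx 2N\ln 2
  \;\Longrightarrow\; n \approx \sqrt{2\ln 2}\,\sqrt{N} \approx 1.18\sqrt{N}

N = 365:\quad n(n-1) \approx 506 \;\Longrightarrow\; n \approx 23

N \approx \frac{n^2}{2\ln 2} \approx 0.72\,n^2, \qquad n = 22:\; N \approx 349
```

So √N and n² are correct up to a constant factor: squaring the group size overestimates N by a factor of about 2 ln 2 ≈ 1.39, which is acceptable when all you need is an order-of-magnitude estimate.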

The same test can estimate the support size of a GAN's output distribution, that is, roughly how many distinct images the GAN can generate. If a sample of K generated images contains a duplicate with reasonable probability, then you can suspect that the support size is about K². So, if a duplicate is very likely to show up among 20 generated images, the GAN produces only on the order of 400 distinct images.
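
Here is a sketch of that procedure in Python. It is an illustration under loud assumptions, not the researchers' code: `fake_generator` is a hypothetical stand-in that returns one of a fixed number of image IDs at random, and duplicates are detected by exact equality. With real GAN output you would need some check for near-duplicate images instead, which this sketch does not attempt. Following the rule of thumb above, the estimate is K², where K is the smallest batch size whose duplicate rate reaches 50%.

```python
import random
from typing import Callable


def has_duplicate(batch: list) -> bool:
    """True if any two items in the batch are identical."""
    return len(set(batch)) < len(batch)


def estimate_support(sample: Callable[[random.Random], int],
                     trials: int = 200, max_k: int = 10_000, seed: int = 0) -> int:
    """Estimate how many distinct outputs `sample` can produce.

    Grows the batch size K until at least half of `trials` batches contain
    a duplicate, then returns K**2 (the rule of thumb from the text).
    """
    rng = random.Random(seed)
    for k in range(2, max_k + 1):
        hits = sum(has_duplicate([sample(rng) for _ in range(k)])
                   for _ in range(trials))
        if hits / trials >= 0.5:
            return k * k
    raise ValueError("no duplicates found; support may exceed max_k**2")


def fake_generator(rng: random.Random) -> int:
    """Hypothetical 'GAN' that can only ever produce 400 distinct images."""
    return rng.randrange(400)


print(estimate_support(fake_generator))
```

For a generator with 400 equally likely outputs, the even-odds batch size is about 24, so the estimate lands in the mid-hundreds: the right order of magnitude, with K² overshooting in the way the square-root rule predicts.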

With this test in hand, researchers have shown that images generated by well-known GANs do not generalize beyond what the training data provides. What remains open is whether GANs can generalize beyond their training data at all, or whether other methods for improving the dataset can get them there.

Humans also receive data: sounds, images through vision, and much more. A question some researchers ask is whether humans would pass the Birthday Paradox Test, that is, “Can human brains generalize beyond the data that is presented to them?”

Bad Intent

There are concerns that people can use this technique with ill intent. With so much attention on fake media, we could face an even broader range of attacks with fake data. “The concern is that these methods will rise to the point where it becomes very difficult to discern truth from falsity,” said Tim Hwang, who previously oversaw A.I. policy at Google and is now director of the Ethics and Governance of Artificial Intelligence Fund, an effort to fund ethical A.I. research. “You might believe that accelerates problems we already have.”

Even though this technique currently works best for still images, researchers believe that the same technology could produce videos, games, and virtual reality. Work on generating videos has already begun. Researchers are also using a wide range of other machine learning methods to generate faux data. In August of 2017, a group of researchers at the University of Washington made headlines when they built a system that could put words in Barack Obama’s mouth in a video. An app using this technique is already on the App Store. The results were not completely convincing, but the rapid progress of GANs and other techniques points to a future where it becomes hard for people to tell real videos from generated ones. Some researchers argue that GANs are just another tool that can be used for good or evil, and that new technology will emerge to determine whether newly created videos and images are real.

Not only that, but researchers have uncovered ways of using GANs to generate audio that sounds like one thing to humans but that machines interpret differently. For example, you can create audio that sounds to humans like “Hi, how are you?” but to machines like “Alexa, buy me a drink.” Or audio that sounds like a piece by Bach to people, while the machine hears “Alexa, go to this website.” The future has unlimited potential.

All my blog posts can be found here: https://giacaglia.github.io/blog/

Notes:

- Both images were generated by GANs

Dueling Neural Networks was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.