
McCulloch Pitts Neuron — Deep Learning Building Block

The fundamental building block of deep learning is the artificial neuron: it takes a weighted aggregate of its inputs, applies a function, and produces an output. The first step towards the artificial neuron was taken in 1943 by Warren McCulloch and Walter Pitts, who, inspired by neurobiology, created the model now known as the McCulloch-Pitts Neuron.

Disclaimer: The content and the structure of this article are based on the deep learning lectures from One-Fourth Labs (Padhai).

Motivation — Biological Neuron

The inspiration for the creation of an artificial neuron comes from the biological neuron.

Fig — 1 Biological Neuron — Padhai Deep Learning


In a simplified view, neurons receive signals and produce a response. The general structure of a neuron is shown in Fig-1. Dendrites are the transmission channels that bring in inputs from another neuron or another organ. The synapse governs the strength of the interaction between neurons; think of it as the weights we use in neural networks. The soma is the processing unit of the neuron.

At a high level, a neuron takes a signal in through the dendrites, processes it in the soma, and passes the output along the axon (the brown cable-like structure in Fig-1).

McCulloch-Pitts Neuron Model

The MP Neuron Model was introduced by Warren McCulloch and Walter Pitts in 1943. It is also known as the linear threshold gate model.

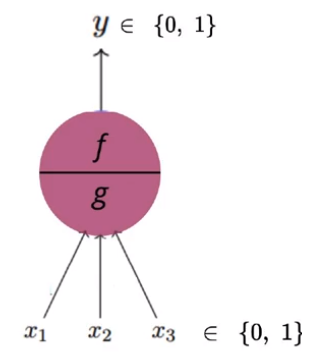

Mathematical Model

Fig — 2 Simple representation of MP Neuron Model

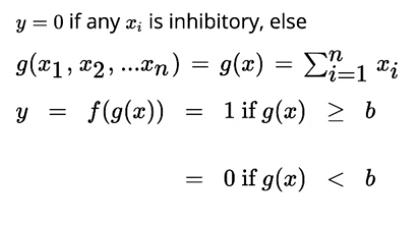

The function (the soma) is actually split into two parts. The function g aggregates the inputs into a single numeric value, and the function f takes that single value as its argument and produces the output of the neuron. The function f outputs 1 if the aggregate computed by g reaches some threshold (that is, it is greater than or equal to the threshold), and 0 otherwise.

The inputs x1, x2, ..., xn of the MP Neuron can only take boolean values, and each input is either excitatory or inhibitory. An inhibitory input has the maximum possible effect on the decision: when it is on, it determines the final outcome of the model on its own, regardless of the other inputs.
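Written out, the two-part function can be sketched as follows. The symbol b for the threshold is borrowed from the later sections of this article, and the "greater than or equal to" comparison is an assumption taken from the learning algorithm described further down.

```latex
g(x_1, \dots, x_n) = \sum_{i=1}^{n} x_i,
\qquad
y = f\bigl(g(x_1, \dots, x_n)\bigr) =
\begin{cases}
1 & \text{if } g(x_1, \dots, x_n) \ge b \\
0 & \text{otherwise}
\end{cases}
```

Here every x_i is 0 or 1, so g simply counts how many inputs are on. If any inhibitory input is 1, the output y is 0 no matter what the sum is.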

Fig — 3 Mathematical representation


For example, I can use an MP Neuron Model to predict whether I will watch a movie at a nearby IMAX theater tomorrow. All the inputs to the model are boolean, i.e., 0 or 1, and from Fig-2 we can see that the output of the model is also boolean (0: not going to the movie, 1: going to the movie).

Inputs for the above problem could be

- x1: IsRainingTomorrow (whether it is going to rain tomorrow)

- x2: IsScifiMovie (whether it is a science fiction movie, which I like)

- x3: IsSickTomorrow (whether I am going to be sick tomorrow, judged from symptoms such as a fever)

- x4: IsDirectedByNolan (whether the movie is directed by Christopher Nolan)

and so on.

In this scenario, if x3 (IsSickTomorrow) is equal to 1, the output will always be 0. If I am not feeling well on the day of the movie, then no matter who acts in or directs it, I won't be going.
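The movie decision can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `mp_neuron`, the threshold of 1, and the choice to treat only the sci-fi and Nolan inputs as excitatory are all hypothetical (the rain input is left out because the article does not say how it should count).

```python
def mp_neuron(excitatory, inhibitory, threshold):
    """Return 1 if the neuron fires, else 0. All inputs must be 0 or 1."""
    # Any active inhibitory input vetoes the decision outright.
    if any(inhibitory):
        return 0
    # g: sum the excitatory inputs; f: compare the sum with the threshold.
    return 1 if sum(excitatory) >= threshold else 0


# Hypothetical inputs: a sci-fi movie directed by Nolan.
is_scifi, is_nolan = 1, 1

# Assumed threshold of 1: one good reason is enough to go.
healthy = mp_neuron([is_scifi, is_nolan], inhibitory=[0], threshold=1)
sick = mp_neuron([is_scifi, is_nolan], inhibitory=[1], threshold=1)

print(healthy, sick)  # 1 0
```

Being sick flips the output to 0 even though both excitatory inputs are on, which is the veto behaviour described above.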

Loss Function

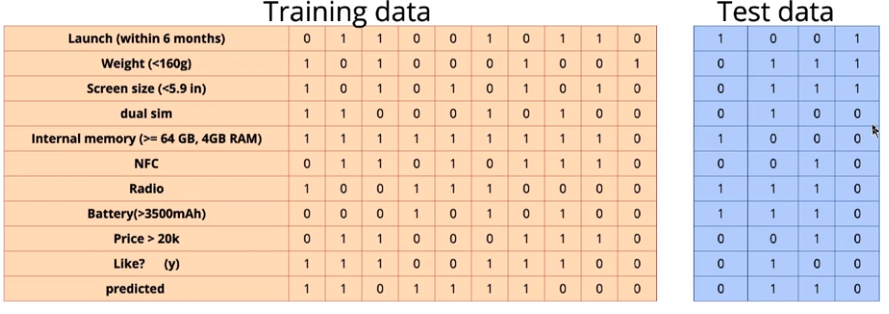

Let's take the example of deciding whether to buy a phone based on some of its features, encoded in binary format (y = 0: not buying the phone, y = 1: buying the phone).

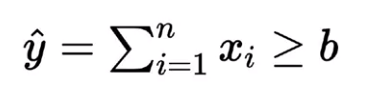

For each phone (observation) and a given threshold value b, the MP Neuron Model predicts the outcome with a simple condition: if the sum of the inputs is greater than or equal to b, the predicted value is 1; otherwise it is 0. The loss for a particular observation is the squared difference between Yactual and Ypredicted.

Fig — 5: MP Neuron Model for Buying a phone


Similarly, for all the observations, calculate the summation of the squared difference between the Yactual and Ypredicted to get the total loss of the model for a particular threshold value b.
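In symbols, the total loss for a threshold b can be written as below. The index i, the number of observations m, and the hat notation for the prediction are notation introduced here for illustration, and the comparison with b follows the "greater than or equal to" convention of the learning algorithm.

```latex
L(b) = \sum_{i=1}^{m} \bigl( y_i - \hat{y}_i \bigr)^2,
\qquad
\hat{y}_i =
\begin{cases}
1 & \text{if } \sum_{j=1}^{n} x_{ij} \ge b \\
0 & \text{otherwise}
\end{cases}
```

Because both the actual and the predicted values are 0 or 1, each squared difference is itself 0 or 1, so the total loss is simply the number of observations the model gets wrong.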

Learning Algorithm

The purpose of the learning algorithm is to find out the best value for the parameter b so that the loss of the model will be minimum. In the ideal scenario, the loss of the model for the best value of b would be zero.

For n features in the data, the summation we compute in Fig-5 can only take integer values between 0 and n, because all of our inputs are binary (0 or 1). A sum of 0 means that all the features described in Fig-4 are off, and a sum of n means that all of them are on. The threshold b therefore only needs to range from 0 to n as well. As we have a single parameter with possible values 0 to n, we can use a brute-force approach to find the best value of b.

- Initialize b with a random integer in [0, n].

- For each observation:

  - Find the predicted outcome using the formula in Fig-5: calculate the sum of the inputs and check whether it is greater than or equal to b. If it is, the predicted outcome is 1; otherwise it is 0.

  - After finding the predicted outcome, compute the loss for that observation.

- Finally, compute the total loss of the model by summing up all the individual losses.

- Similarly, iterate over all the possible values of b and find the total loss of the model for each. Then choose the value of b for which the loss is minimum.
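The steps above can be sketched in plain Python as follows. The function names and the toy data are made up for illustration. The sketch also skips the random initialization, since an exhaustive search visits every value of b anyway.

```python
def predict(row, b):
    """MP neuron prediction for one observation of 0/1 features."""
    return 1 if sum(row) >= b else 0


def total_loss(X, y, b):
    """Sum of squared differences between actual and predicted outcomes."""
    return sum((actual - predict(row, b)) ** 2 for row, actual in zip(X, y))


def best_threshold(X, y):
    """Try every b from 0 to n and return (b, loss) with the lowest loss."""
    n = len(X[0])
    losses = {b: total_loss(X, y, b) for b in range(n + 1)}
    b_best = min(losses, key=losses.get)  # first b with the minimum loss
    return b_best, losses[b_best]


# Made-up toy data: 4 observations with 3 binary features each.
X = [[1, 1, 1], [1, 1, 0], [1, 0, 0], [0, 0, 0]]
y = [1, 1, 0, 0]

print(best_threshold(X, y))  # (2, 0)
```

On this toy data the thresholds 0, 1, 2, and 3 give losses of 2, 1, 0, and 1, so b = 2 separates the observations perfectly.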

Model Evaluation

After finding the best threshold value b from the learning algorithm, we can evaluate the model on the test data by comparing the predicted outcome and the actual outcome.

Fig — 7: Predictions on the test data for b = 5


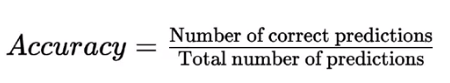

For evaluation, we will calculate the accuracy score of the model.

For the above-shown test data, the accuracy of the MP neuron model = 75%.
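Accuracy here means the fraction of observations whose predicted outcome matches the actual outcome. A minimal sketch, using made-up labels that are not the test data from the figure:

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the actual outcomes."""
    correct = sum(1 for actual, predicted in zip(y_true, y_pred) if actual == predicted)
    return correct / len(y_true)


# Hypothetical outcomes for five observations (4 of the 5 predictions match).
y_true = [1, 0, 1, 1, 0]
y_pred = [1, 0, 0, 1, 0]

print(accuracy(y_true, y_pred))  # 0.8
```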

Python Implementation of MP Neuron Model

In this section, we will see how to implement the MP neuron model using Python. The data set we will use is the breast cancer data set from sklearn. Before building the MP Neuron Model, we will load the data and separate the features from the target variable.

Once the data is loaded, we can use sklearn's train_test_split function to split it into training and testing sets in the ratio 80:20.
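A minimal sketch of the loading and splitting steps is shown below. The `stratify` and `random_state` arguments are assumptions added here to keep the class balance and make the split reproducible; the article does not say which settings were used.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split

# Load the data set as pandas objects.
data = load_breast_cancer(as_frame=True)
X = data.data    # DataFrame of 30 continuous features
y = data.target  # Series holding the binary target

# 80:20 split; stratify and random_state are illustrative choices.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=1
)

print(X_train.shape, X_test.shape)
```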

Remember from our previous discussion that the MP Neuron takes only binary values as input, so we need to convert the continuous features into binary format. To achieve this, we will use the pandas.cut function to turn all the features into 0 or 1 in a single shot. Once the inputs are ready, we need to build the model, train it on the training data, and evaluate its performance on the test data.
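One way the binarization could look is sketched below on a small made-up frame instead of the real data set. The helper name `binarize`, the column names, and the label order `[0, 1]` (low half of the range becomes 0, high half becomes 1) are assumptions; the opposite label order may suit features where large values point to the 0 class.

```python
import pandas as pd

# Made-up continuous features standing in for the real data set.
X = pd.DataFrame({
    "mean_radius": [6.0, 10.0, 20.0, 28.0],
    "mean_area": [150.0, 400.0, 2000.0, 2500.0],
})


def binarize(frame):
    """Split each column's range into two equal-width bins labelled 0 and 1."""
    return frame.apply(
        lambda col: pd.cut(col, bins=2, labels=[0, 1]).astype(int)
    )


X_binary = binarize(X)
print(X_binary)
```

Each column is cut at the midpoint of its own range, so the first two rows come out as 0 and the last two as 1 in both columns.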

To create an MP Neuron Model, we will create a class with three functions:

- model function: calculates the summation of the binarized inputs.

- predict function: predicts the outcome for every observation in the data.

- fit function: iterates over all the possible values of the threshold b and finds the value of b for which the loss is minimum.

After building the model, test the model performance on the testing data and check the training data accuracy as well as the testing data accuracy.
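A minimal sketch of such a class is given below, assuming NumPy arrays of 0/1 values as input. It is not the original implementation: here the `model` function both sums the inputs and compares the sum with b, and the toy arrays are made up for illustration.

```python
import numpy as np


class MPNeuron:
    def __init__(self):
        self.b = None  # threshold, set by fit()

    def model(self, x):
        """Sum the binarized inputs of one observation and apply the threshold."""
        return int(np.sum(x) >= self.b)

    def predict(self, X):
        """Predict the outcome for every observation (row) in X."""
        return np.array([self.model(x) for x in X])

    def fit(self, X, y):
        """Try every b from 0 to n and keep the one with the lowest loss."""
        losses = {}
        for b in range(X.shape[1] + 1):
            self.b = b
            losses[b] = int(np.sum((y - self.predict(X)) ** 2))
        self.b = min(losses, key=losses.get)
        return self


# Made-up binarized data, only to show the calls.
X_train = np.array([[1, 1, 1], [1, 1, 0], [1, 0, 0], [0, 0, 0]])
y_train = np.array([1, 1, 0, 0])
X_test = np.array([[1, 1, 0], [0, 1, 0]])
y_test = np.array([1, 0])

neuron = MPNeuron().fit(X_train, y_train)
train_accuracy = float(np.mean(neuron.predict(X_train) == y_train))
test_accuracy = float(np.mean(neuron.predict(X_test) == y_test))
print(neuron.b, train_accuracy, test_accuracy)  # 2 1.0 1.0
```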

Problems with MP Neuron Model

- Boolean Inputs.

- Boolean Outputs.

- Threshold b can take only a few possible values.

- All the inputs to the model have equal weights.

Summary

In this article, we looked at how the MP Neuron Model works and at its analogy to the biological neuron. At the end, we also saw an implementation of the MP Neuron Model using Python and a real-world breast cancer data set.

Connect With Me

GitHub: https://github.com/Niranjankumar-c

LinkedIn: https://www.linkedin.com/in/niranjankumar-c/

This article was originally published in Hacker Noon on Medium.
