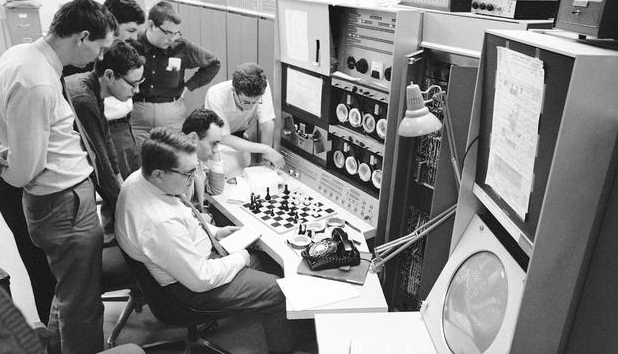

The breakthrough “MAC Hack VI” chess program in 1965.

Many believed an algorithm would transcend humanity with cognitive awareness. Machines would discern and learn tasks without human intervention and replace workers in droves. They quite literally would be able to “think”. Many people even raised the question whether we could have robots for spouses.

But I am not talking about today. What if I told you this idea was widely marketed in the 1960s, and that AI pioneers Jerome Wiesner, Oliver Selfridge, and Claude Shannon insisted this could happen in their near future? If you find this surprising, watch this video and be amazed how familiar these sentiments are.

Fast forward to 1973, and the hype and exaggeration of AI backfired. The U.K. Parliament sent Sir James Lighthill to get a status report of AI research in the U.K. The report criticized the failure of artificial intelligence research to live up to its sensational claims. Interestingly, Lighthill also pointed out that specialized programs (or people) performed better than their “AI” counterparts, which had no prospects in real-world environments. Consequently, the British government cancelled AI research funding.

Across the pond, the United States Department of Defense was invested heavily in AI research, but then cancelled nearly all funding over the same frustrations: exaggeration of AI ability, high costs with no return, and dubious value in the real world.

In the 1980s, Japan took a bold stab at “AI” with the Fifth Generation Project (EDIT: Toby Walsh himself corrected me in the comments. UK research did pick up again in the 1980s with the Alvey Project in response to Japan). However, the Fifth Generation Project ended up being a costly $850 million failure as well.

The First AI Winter

The end of the 1980s brought forth an AI Winter, a dark period in computer science where “artificial intelligence” research burned organizations and governments with delivery failures and sunk costs. Such failures would terminate AI research for decades.

By the time the 1990s rolled around, “AI” had become a dirty word, and it remained one in the 2000s. It was widely accepted that “AI just didn’t work”. Software companies that wrote seemingly intelligent programs would use terms like “search algorithms”, “business rule engines”, “constraint solvers”, and “operations research”. It is worth mentioning that these invaluable tools indeed came from AI research, but they were rebranded since they failed to live up to their grander purposes.

But around 2010, something started to change. There was rapidly growing interest in AI again and competitions in categorizing images caught the media’s eye. Silicon Valley was sitting on huge amounts of data, and for the first time there was enough to possibly make neural networks useful.

By 2015, “AI” research commanded huge budgets of many Fortune 500 companies. Oftentimes, these companies were driven by FOMO rather than practical use cases, worried that they would be left behind by their automated competitors. After all, having a neural network identify objects in images is nothing short of impressive! To the layperson, SkyNet capabilities must surely be next.

But is this really a step towards true AI? Or is history repeating itself, but this time emboldened by a handful of successful use cases?

What is AI Anyway?

I have never liked the term “artificial intelligence”. It is vague and far-reaching, and defined more by marketing folks than scientists. Of course, marketing and buzzwords are arguably necessary to spur positive change. However, buzzword campaigns inevitably lead to confusion. My new ASUS smartphone has an “AI Ringtone” feature that dynamically adjusts the ring volume to be just loud enough over ambient noise. I guess something that literally could be programmed with a series of `if` conditions, or a simple linear function, is called “AI”. Alrighty then.
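
To make the “simple linear function” point concrete, here is a minimal sketch of what such a ringtone feature could look like. This is not ASUS's implementation; the function name `ring_volume`, the decibel range, and the volume limits are all assumptions made up for illustration.

```python
def ring_volume(ambient_db: float,
                min_volume: float = 0.2,
                max_volume: float = 1.0) -> float:
    """Map ambient noise (in decibels) to a ring volume.

    Hypothetical illustration: the 30-90 dB range and the volume
    limits are assumed values, not taken from any real phone.
    """
    quiet_db, loud_db = 30.0, 90.0  # assumed: quiet room to busy street

    # Linear interpolation between the two endpoints...
    fraction = (ambient_db - quiet_db) / (loud_db - quiet_db)
    # ...clamped so the result never leaves [min_volume, max_volume].
    fraction = max(0.0, min(1.0, fraction))
    return min_volume + fraction * (max_volume - min_volume)
```

Calling `ring_volume(60)` lands halfway between the minimum and maximum volume. There is no learning and no uncertainty involved, just arithmetic and a clamp.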

In light of that, it is probably no surprise the definition of “AI” is widely disputed. I like Geoffrey De Smet’s definition, which states AI solutions are for problems with a nondeterministic answer and/or an inevitable margin of error. This would include a wide array of tools from machine learning to probability and search algorithms.

It can also be said that the definition of AI evolves and only includes ground-breaking developments, while yesterday’s successes (like optical character recognition or language translators) are no longer considered “AI”. So “artificial intelligence” can be a relative term and hardly absolute.

In recent years, “AI” has often been associated with “neural networks”, which is what this article will focus on. There are other “AI” solutions out there, from other machine learning models (Naive Bayes, Support Vector Machines, XGBoost) to search algorithms. However, neural networks are arguably the hottest and most hyped technology at the moment. If you want to learn more about neural networks, I posted my video below.

If you want a more thorough explanation, check out Grant Sanderson’s amazing video series on neural networks here:

An AI Renaissance?

The resurgence of AI hype after 2010 is simply due to a new class of tasks being mastered: categorization. More specifically, scientists have developed effective ways to categorize most types of data, including images and natural language, thanks to neural networks. Even self-driving cars are categorization tasks, where each image of the surrounding road translates into a set of discrete actions (gas, brake, turn left, turn right, etc.). To get a simplified idea of how this works, watch this tutorial showing how to make a video game AI.

In my opinion, natural language processing is more impressive than pure categorization though. It is easy to believe these algorithms are sentient, but if you study them carefully you can tell they are relying on language patterns rather than consciously constructed thoughts. This can lead to some entertaining results, like these bots that will troll scammers for you:

Probably the most impressive feat of natural language processing is Google Duplex, which allows your Android phone to make phone calls on your behalf, specifically for appointments. However, you have to consider that Google trained, structured, and perhaps even hardcoded the “AI” just for that task. And sure, the fake caller sounds natural with pauses, “ahhs”, and “uhms”… but again, this was done through operations on speech patterns, not actual reasoning and thoughts.

This is all very impressive, and definitely has some useful applications. But we really need to temper our expectations and stop hyping “deep learning” capabilities. If we don’t, we may find ourselves in another AI Winter.

History Repeats Itself

Gary Marcus at NYU wrote an interesting article on the limitations of deep learning, and raises several sobering points (he also wrote an equally interesting follow-up after the article went viral). Rodney Brooks is putting timelines together and keeping track of his AI hype cycle predictions, and predicts we will see “The Era of Deep Learning is Over” headlines in 2020.

The skeptics generally share a few key points. Neural networks are data-hungry and even today, data is finite. This is also why “game” AI examples you see on YouTube (like this one as well as this one) often require days of constant losing gameplay until the neural network finds a pattern that allows it to win.

Neural networks are “deep” in that they technically have several layers of nodes, not because they develop a deep understanding of the problem. These layers also make neural networks difficult to understand, even for their developers. Most importantly, neural networks experience diminishing returns when they venture out into other problem spaces, such as the Traveling Salesman Problem. And this makes sense. Why in the world would I solve the Traveling Salesman Problem with a neural network when a search algorithm will be much more straightforward, effective, scalable, and economical (as shown in the video below)?
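
For a sense of how little machinery a search approach needs, here is a rough sketch of one common heuristic combination: a nearest-neighbor starting tour followed by 2-opt local search. I picked these two techniques for illustration; they are not necessarily what the video uses, they do not guarantee an optimal tour, and the function names are my own.

```python
import math


def tour_length(tour, points):
    """Total length of the closed tour, returning to the starting city."""
    return sum(
        math.dist(points[tour[i]], points[tour[(i + 1) % len(tour)]])
        for i in range(len(tour))
    )


def nearest_neighbor(points):
    """Greedy start: from city 0, always visit the closest unvisited city."""
    unvisited = set(range(1, len(points)))
    tour = [0]
    while unvisited:
        last = points[tour[-1]]
        nxt = min(unvisited, key=lambda j: math.dist(last, points[j]))
        unvisited.remove(nxt)
        tour.append(nxt)
    return tour


def two_opt(tour, points):
    """Local search: reverse segments of the tour while that shortens it."""
    improved = True
    while improved:
        improved = False
        for i in range(1, len(tour) - 1):
            for j in range(i + 1, len(tour)):
                # Reversing tour[i..j] swaps two edges and can undo a crossing.
                candidate = tour[:i] + tour[i:j + 1][::-1] + tour[j + 1:]
                # Small tolerance guards against floating-point ping-pong.
                if tour_length(candidate, points) < tour_length(tour, points) - 1e-12:
                    tour, improved = candidate, True
    return tour
```

A typical call would be `two_opt(nearest_neighbor(points), points)` with `points` as a list of `(x, y)` tuples. No training data, no GPUs, and every step can be read and explained.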

Nor would I use deep learning to solve other everyday “AI” problems, like solving Sudokus or packing events into a schedule, which I discuss how to do in a separate article:

Of course, there are folks looking to generalize more problem spaces into neural networks, and while that is interesting it rarely seems to outperform any specialized algorithms.

Luke Hewitt at MIT puts it best in this article:

It is a bad idea to intuit how broadly intelligent a machine must be, or have the capacity to be, based solely on a single task. The checkers-playing machines of the 1950s amazed researchers and many considered these a huge leap towards human-level reasoning, yet we now appreciate that achieving human or superhuman performance in this game is far easier than achieving human-level general intelligence. In fact, even the best humans can easily be defeated by a search algorithm with simple heuristics. Human or superhuman performance in one task is not necessarily a stepping-stone towards near-human performance across most tasks.

— Luke Hewitt

I think it is also worth pointing out that neural networks require vast amounts of hardware and energy to train. To me, that just does not feel sustainable. Of course, a neural network will predict much more efficiently than it trains. However, I do think the ambitions people have for neural networks will demand constant training and therefore exponentially growing energy and costs. And sure, computers keep getting faster, but can chip manufacturers struggle past the failure of Moore’s Law?

A final point to consider is the P versus NP problem. To describe this in the simplest terms possible, proving P = NP would mean we could calculate solutions to very difficult problems (like machine learning, cryptography, and optimization) just as quickly as we can verify them. Such a breakthrough would expand the capabilities of AI algorithms drastically and maybe transform our world beyond recognition (Fun fact: there’s a 2012 intellectual thriller movie called The Travelling Salesman which explores this idea).
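
The gap between verifying and solving is easy to see with a toy NP-complete problem such as subset sum: given some numbers, is there a subset that adds up to a target? The sketch below is my own illustration (the function names `verify` and `solve` are made up). Checking a proposed answer takes a handful of operations, while the naive solver may have to try every subset.

```python
from itertools import combinations


def verify(numbers, target, candidate):
    """Check a proposed answer. Runs in polynomial time."""
    pool = list(numbers)
    for x in candidate:
        if x not in pool:  # candidate may only use the given numbers
            return False
        pool.remove(x)     # each given number can be used once
    return sum(candidate) == target


def solve(numbers, target):
    """Brute force: may examine all 2**len(numbers) subsets."""
    for size in range(len(numbers) + 1):
        for subset in combinations(numbers, size):
            if sum(subset) == target:
                return list(subset)
    return None  # no subset adds up to the target
```

Adding one more number to the input adds a few steps to `verify` but doubles the worst-case work for this `solve`. Smarter solvers exist, but no known algorithm avoids exponential growth in the worst case, and a proof that P = NP would mean one must exist.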

Here is a great video that explains the P versus NP problem, and it is worth the 10 minutes to watch:

Sadly, nearly 50 years after the problem was formalized, more computer scientists are coming to believe that P does not equal NP. In my opinion, this is an enormous barrier to AI research that we may never overcome, as this means complexity will always limit what we can do.

It is for these reasons I think another AI Winter is coming. In 2018, a growing number of experts, articles, forum posts, and bloggers came forward calling out these limitations. I think this skepticism trend is going to intensify in 2019 and will go mainstream as soon as 2020. Companies are still sparing little expense in getting the best “deep learning” and “AI” talent, but I think it is a matter of time before many companies realize deep learning is not what they need. Even worse, if your company does not have Google’s research budget, the PhD talent, or massive data store it collected from users, you can quickly find your practical “deep learning” prospects very limited. This was best captured in this scene from the HBO show Silicon Valley (WARNING: language):

Each AI Winter is preceded by scientists exaggerating and hyping the potential of their creations. It is not enough to say their algorithm can do one task well. They want it to ambitiously adapt to any task, or at least give the impression it can. For instance, AlphaZero makes a better chess-playing algorithm. The media’s reaction is “Oh my gosh, general AI is here. Everybody run for cover! The robots are coming!” Then the scientists do not bother correcting them and actually encourage it using clever choices of words. Tempering expectations does not help VC funding after all. But there could be other reasons why AI researchers anthropomorphize algorithms despite their robotic limitations, and it is more philosophical than scientific. I will save that for the end of the article.

So What’s Next?

Of course, not every company using “machine learning” or “AI” is actually using “deep learning.” A good data scientist may have been hired to build a neural network, but when she actually studies the problem she more appropriately builds a Naive Bayes classifier instead. For the companies that are successfully using image recognition and language processing, they will continue to do so happily. But I do think neural networks are not going to progress far from those problem spaces.

The AI Winters of the past were devastating to efforts to push the boundaries of computer science. Still, it is worth pointing out that useful things came out of such research, like search algorithms that can effectively win at chess or minimize costs in transportation problems. Simply put, innovative algorithms emerged that often excelled at one particular task.

The point I am making is that there are many proven solutions out there for many types of problems. To avoid getting put out in the cold by an AI Winter, the best thing you can do is be specific about the problem you are trying to solve and understand its nature. After that, find approaches that provide an intuitive path to a solution for that particular problem. If you want to categorize text messages, you probably want to use Naive Bayes. If you are trying to optimize your transportation network, you likely should use Discrete Optimization. No matter the peer pressure, you are allowed to approach convoluted models with a healthy amount of skepticism, and question whether they are the right approach.
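
To show how approachable the text message case is, here is a bare-bones multinomial Naive Bayes classifier using only the standard library. It is a sketch under simplifying assumptions (whitespace tokenization, add-one smoothing, hypothetical `train` and `classify` helpers). In practice you would likely reach for an existing library.

```python
import math
from collections import Counter, defaultdict


def train(messages):
    """messages: iterable of (text, label) pairs."""
    word_counts = defaultdict(Counter)  # label -> word -> count
    label_counts = Counter()            # label -> number of messages
    for text, label in messages:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    return word_counts, label_counts


def classify(text, word_counts, label_counts):
    """Return the label with the highest log-probability for the text."""
    vocab = {w for counts in word_counts.values() for w in counts}
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, n in label_counts.items():
        score = math.log(n / total)  # prior: how common is this label?
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in text.lower().split():
            # Add-one smoothing keeps unseen words from zeroing the score.
            score += math.log((word_counts[label][word] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label
```

Every number in this model is a word count you can inspect, so when it misclassifies a message you can see exactly which words pushed it there.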

Hopefully this article made it abundantly clear that deep learning is not the right approach for most problems. There is no free lunch. Do not fall into the trap of seeking a generalized AI solution for all your problems, because you are not going to find one.

Are Our Thoughts Really Dot Products? Philosophy vs Science

I want to throw one last point into this article, and it is more philosophical than scientific. Is every thought and feeling we have simply a bunch of numbers being multiplied and added in linear algebra fashion? Are our brains, in fact, simply a neural network doing dot products all day? That sounds almost like a Pythagorean philosophy that reduces our consciousness to a matrix of numbers. Perhaps this is why so many scientists believe general artificial intelligence is possible, as being human is no different than being a computer. (I’m just pointing this out, not commenting on whether this worldview is right or wrong.)
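
If “dot products all day” sounds like an exaggeration, here is roughly what a small fully connected network computes when it makes a prediction. This is a simplified sketch with made-up helper names and a sigmoid activation chosen for illustration. Real frameworks do the same arithmetic on much larger matrices.

```python
import math


def dot(a, b):
    """Multiply matching entries and add them up."""
    return sum(x * y for x, y in zip(a, b))


def sigmoid(z):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def layer(inputs, weights, biases):
    """One dense layer: each node is a dot product plus a bias, then squashed."""
    return [sigmoid(dot(inputs, w) + b) for w, b in zip(weights, biases)]


def forward(inputs, layers):
    """Feed the inputs through each (weights, biases) layer in turn."""
    for weights, biases in layers:
        inputs = layer(inputs, weights, biases)
    return inputs
```

The “thinking” here is the `dot` function called over and over, with weights that training has nudged into place.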

If you do not buy into this Pythagorean philosophy, then the best you can strive for is to have AI “simulate” actions that give the illusion it has sentiments and thoughts. A translation program does not understand Chinese. It “simulates” the illusion of understanding Chinese by finding probabilistic patterns. When your smartphone “recognizes” a picture of a dog, does it really recognize a dog? Or does it see a grid of numbers it saw before?

This article was originally published on Towards Data Science.

Is Another AI Winter Coming? was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
