NPM Package Verification — Ep. 2

Minimum Viable Package Verification as a Service (MVPVaaS)

TL;DR

I built a super-extra-pre-alpha version of PVaaS (Package Verification as a Service) that is running in the cloud. There is a JSON route that surfaces verification data for a package, and a badge route that, well, gives you a badge.

Does a package verify? https://api.verifynpm.com/v0/packages/tbv

A previous version? https://api.verifynpm.com/v0/packages/tbv@0.3.0

How ‘bout a badge: https://api.verifynpm.com/v0/packages/tbv/badge

I cannot emphasize enough how experimental all of this is! I will not make any breaking changes to the version zero API, however, I can’t make any promises that the API is, ya know, permanent. Feel free to tinker with it. All of the source code is available on GitHub: github.com/verifynpm.
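If you want to tinker from code, here is a minimal sketch of building the three URLs shown above. The helper names are made up for illustration, and the JSON response shape is not assumed here at all; this only covers the URL patterns from the examples.

```typescript
// Base URL taken from the examples above.
const API_BASE = "https://api.verifynpm.com/v0";

// Hypothetical helper: URL for the JSON verification route.
// With no version, this matches the "does a package verify?" example.
function packageUrl(name: string, version?: string): string {
  const spec = version ? `${name}@${version}` : name;
  return `${API_BASE}/packages/${spec}`;
}

// Hypothetical helper: URL for the badge route of a package.
function verifyBadgeUrl(name: string): string {
  return `${packageUrl(name)}/badge`;
}

console.log(packageUrl("tbv"));
console.log(packageUrl("tbv", "0.3.0"));
console.log(verifyBadgeUrl("tbv"));
```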

Photo by Linh Nguyen on Unsplash

To scroll back a bit, my first hare-brained project of the year was a proof-of-concept for validating NPM packages. I built a globally installable NPM package called TBV (Trust But Verify) that lets you check whether the contents of a package can be consistently reproduced from the source code on GitHub.

Neat.

I received a pleasant amount of positive feedback (yay community!) and overwhelmingly the responses favored making verification results available online in the form of everything from repo badges to actual visibility on npmjs.com. So to pursue “verification as a service,” I decided that the first step was to build an API that would run TBV in the cloud.

Scratch that. The first step was buying yet another domain name. Obviously.

Buying a domain name is buying the wonderful feeling that you'll actually finish the side project you have planned for it.

So, armed with a shiny new domain name, to pursue “verification as a service,” I decided that the second step was to build an API that would run TBV in the cloud.

Before I got going I wanted to set some ground rules for myself so that I could get something running in the cloud within a single weekend. I didn’t quite hit that goal (OK, fine, failed miserably), but here are the rules I set for myself:

CI/CD:

My personal philosophy is that the first thing you should do with any application is deploy it. Building it comes later. This seems counterintuitive, but the mindset saves time in two ways. First, configuring a build pipeline for the “null application” is really easy. I tend to use TravisCI, and a basic config that builds a “hello world” Node app and runs tests is like 5 lines of YAML. As the application grows, your build config grows with it. This is way more efficient than trying to shoehorn in a build process after the application has hit rebellious adolescence. Secondly, automation saves time, which is kinda the point. I don’t have a lot of free time, so I need all the help I can get. Automation from the get-go means all I have to do to get changes to the cloud is push to master.

Serverless

For this project, Kubernetes was out. I really do think that containerization is the future of PaaS, but I also have enough hands-on experience to know that k8s can take a hot minute to achieve positive ROI. I flirted with the idea of a smallish VM on Digital Ocean, but plumbing a CI system for deployments as well as configuring a database and message queue of some sort seemed like a weekend project all to itself. Any out-of-the-box infrastructure that I could leverage would be a boon. I took a look at TravisCI and saw that they have a deployment config for AWS Lambda. AWS DynamoDB is a thing and has a built-in stream for plumbing changes to yet more Lambda functions. Sold.

Spec-first API

There is huge value in designing network-based systems before they are built. Swagger (aka OpenAPI Spec 2.0) is a fantastic DSL for defining APIs. My API functions would be considered “done” when they fulfill the spec.

No Yak Shaving

As much as is possible, I want to ignore nifty distractions and work on the important things. This means focusing on making the verification route work as soon as possible. I knew that there were going to be challenges getting the TBV library working in the cloud, but I had no idea where the challenges lay. In the words of the Lean Startup, I wanted to start validating assumptions as soon as I could. Don’t shave that yak.

If you haven’t messed with it yet, AWS’s Serverless Application Model (SAM) is pretty slick. Lambda was pretty intuitive and I was able to very quickly (think tens of minutes) get a function built in Typescript up and running in the cloud. I am fronting the whole thing with AWS API Gateway configured with Swagger. The API is configured to use the Lambda function as its implementation.

I started by laying out a single route with Swagger that defined the basic verification response, then designed standard errors, content types, and other basic API boilerplate stuff.

Next, I implemented the single API route with a “do nothing” Lambda function. From the get-go, I set up TravisCI to deploy the function whenever I push to master. The first thing I pushed was a simple hello world function. It took less than 30 minutes to figure out the AWS IAM permission stuff, take a peek at the TravisCI docs, and then get the build working. The speed that automation gives is astounding. I cannot repeat this enough: deploy first, then build.
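For a sense of scale, a “do nothing” function can be as small as the sketch below. This is not the actual function from the project. It assumes API Gateway's Lambda proxy integration (where the function returns statusCode, headers, and a string body), and the "name" path parameter is a hypothetical placeholder.

```typescript
// Minimal local types so the sketch stands alone (no AWS typings needed).
interface ProxyEvent {
  pathParameters?: { [key: string]: string } | null;
}

interface ProxyResult {
  statusCode: number;
  headers: { [key: string]: string };
  body: string;
}

// Build a JSON response in the shape the proxy integration expects.
function buildResponse(statusCode: number, payload: unknown): ProxyResult {
  return {
    statusCode,
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(payload),
  };
}

// The handler itself; in a real deployment this would be exported.
// "name" is an assumed path parameter, not taken from the real spec.
async function handler(event: ProxyEvent): Promise<ProxyResult> {
  const name = event.pathParameters ? event.pathParameters["name"] : undefined;
  return buildResponse(200, { message: "hello world", package: name || null });
}
```

Keeping the response builder separate from the handler makes it easy to unit test without invoking anything on AWS.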

By lunch on Saturday, I had defined my API, configured API Gateway, set up a CI/CD pipeline for deploying Lambda functions, built a “hello world” function, and wired up API Gateway to my shiny new domain name. I could hit https://api.verifynpm.com/v0/package/tbv and get an actual response.

Neat.

In the afternoon I learnt myself some DynamoDB. TBV can take a minute or so to run, especially if there is a prepare or prepack script. That is way too long to wait for an API response. To allow for higher API SLAs, I wanted to use one main request-handling Lambda function to drop verifications on a queue which would be read by another Lambda function that actually ran TBV.

Once a package has been verified, all consumers can see the result, which will be fetched directly from the database. With the queue, even the first call will return sub-second response times. Subsequent calls will reveal how verification is progressing.

As it turns out DynamoDB has a built-in stream to watch for changes. All I had to do was write an item to the database with the package name/version and an “unknown” status, and another Lambda function would be pushed that change event.

The goal was then to have one function that responded to API traffic and ensured that the DDB item was written for the incoming request, and another function for running TBV when new packages came in from the DDB stream.
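Here is a rough sketch of that two-function flow. It uses an in-memory stand-in for the table and its stream so it can run anywhere; the real thing talks to DynamoDB asynchronously. Only the “unknown” status comes from the description above. The other status values, the key format, and all of the names are assumptions for illustration.

```typescript
// Only "unknown" is from the post; the other statuses are hypothetical.
type Status = "unknown" | "verified" | "unverified";

interface VerificationItem {
  name: string;
  version: string;
  status: Status;
}

// In-memory stand-in for a DynamoDB table plus its change stream.
class FakeTable {
  private items = new Map<string, VerificationItem>();
  private stream: VerificationItem[] = [];

  // Insert only when the key is new, and record a change event.
  putIfAbsent(key: string, item: VerificationItem): VerificationItem {
    const existing = this.items.get(key);
    if (existing) return existing;
    this.items.set(key, item);
    this.stream.push(item);
    return item;
  }

  setStatus(key: string, status: Status): void {
    const item = this.items.get(key);
    if (item) {
      this.items.set(key, { name: item.name, version: item.version, status });
    }
  }

  // Deliver pending change events to a consumer, as the stream would.
  drain(consumer: (item: VerificationItem) => void): void {
    while (this.stream.length > 0) consumer(this.stream.shift()!);
  }
}

const keyOf = (name: string, version: string): string => `${name}@${version}`;

// Function 1: answers API traffic without waiting for verification.
function handleRequest(table: FakeTable, name: string, version: string): VerificationItem {
  return table.putIfAbsent(keyOf(name, version), { name, version, status: "unknown" });
}

// Function 2: reacts to a new item and runs the slow verification step,
// which is injected here so the sketch does not pretend to be TBV.
function handleStreamEvent(
  table: FakeTable,
  item: VerificationItem,
  verify: (item: VerificationItem) => boolean
): void {
  const ok = verify(item);
  table.setStatus(keyOf(item.name, item.version), ok ? "verified" : "unverified");
}
```

The first request for a package returns immediately with the “unknown” status, and later requests read whatever the second function has written back.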

And this was the end of Day One. I had a plan for orchestrating everything and I was confident that I would have a working API and a blog post before work on Monday morning.

I.

Was.

Wrong.

Day Two was where the “fun” began. As it turns out, AWS Lambda is really good at running basic Node functions out of the box. But TBV has to exec both git and npm in order to work. git is used to fetch the package source from source control, and npm is used for installing dependencies and generating a package to compare to the published version.

My assumption was that running npm would be trivial since the Lambda Node 8.10 runtime includes it already and that running git would be difficult if not impossible. One of the beauties of racing to (in)validate assumptions is that I was totally wrong.

It turns out that at the end of last year, AWS launched Lambda Layers which allow developers to “package and deploy libraries, custom runtimes, and other dependencies separately from your function code.” Ya know, other dependencies like git. And in the 50 or so days that the feature had been live, someone had already created just what I needed. Thanks, internet!

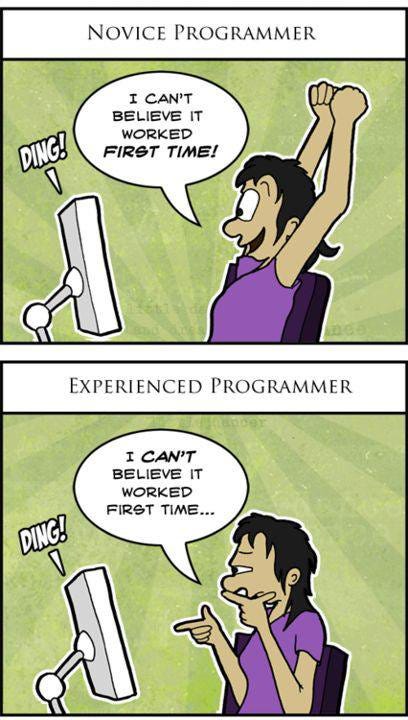

At this point, I thought I had really dodged a bullet. I started running live data through the system for packages like express that are pretty popular and that I knew verify. And it worked. I’ve been doing this long enough to know that success this early is suspicious.

So then I started trying other libraries, like, oh, TBV.

It failed.

This is where I started going down the first path of yak shaving. The output of TBV was originally optimized for human readability. However, this meant that viewing the log output in AWS was very unhelpful. I ran the same version of TBV on my own machine as on Lambda and it Worked On My Machine™.

I added verbose logging to TBV so that I could see the raw output from the commands that were being run.

And so began a long series of commits that I am not proud of.

Once I had better logging visibility, I noticed that npm ci was failing because the command was not supported. Yep, Node 8.10 on AWS Lambda runs npm@5.6.x and I need at least npm@5.7.0 to run npm ci. I was literally one minor version short of a working distributed system.
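In hindsight, a cheap guard would have surfaced this much sooner. The sketch below is not part of TBV; it is a hypothetical check that compares an npm version string against the 5.7.0 minimum mentioned above.

```typescript
// Parse "major.minor.patch" (an optional leading "v" is tolerated).
function parseVersion(version: string): [number, number, number] {
  const match = /^v?(\d+)\.(\d+)\.(\d+)/.exec(version.trim());
  if (!match) throw new Error(`Unrecognized version: ${version}`);
  return [Number(match[1]), Number(match[2]), Number(match[3])];
}

// True when `version` is greater than or equal to `minimum`.
function isAtLeast(version: string, minimum: string): boolean {
  const a = parseVersion(version);
  const b = parseVersion(minimum);
  for (let i = 0; i < 3; i++) {
    if (a[i] !== b[i]) return a[i] > b[i];
  }
  return true;
}

// npm ci needs at least npm@5.7.0, per the paragraph above.
function supportsNpmCi(npmVersion: string): boolean {
  return isAtLeast(npmVersion, "5.7.0");
}

console.log(supportsNpmCi("5.6.0")); // false
console.log(supportsNpmCi("6.7.0")); // true
```

The version string would typically come from running npm --version in the target environment.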

First I tried running npm install --global npm@latest on Lambda, but that failed because Lambda functions have read-only access to everything on the filesystem except /tmp. I expected this, but hey, no harm in trying.

Next, I tried installing npm as a production dependency of my function. Getting $PATH to include Node, MY version of NPM, but NOT the normally installed version of NPM proved difficult. Installing npm as a production dependency just seems wrong anyway.

Next, I started digging into the source code for NPM to see how the ci command works. If it was trivial to implement, then maybe I could clone just that code. Open source, blah blah, MIT license, blah blah. I assumed it would be super convoluted and thus an exercise in futility. Nope! It just used another library called cipm. (As part of this process, I learned that NPM often introduces new functionality by incorporating external libraries.)

Partial success?

Next, I tried installing cipm as a production dependency. Honestly, I forget why this attempt didn’t work. I also felt dirty using a different method for installing and building than what would be used in the wild. I was bummed by the irony that I was getting beat by the library that I was trying to secure.

Next, I tried watching Netflix. But this didn’t work because watching TV isn’t a good way to write software that works.

Sunday had come and gone. I was frustrated. I was getting shoddy results from TBV anyway. I had gained some validated learning but hadn’t shipped the product I had wanted. Sigh.

Since I had already missed my (self-imposed) deadline, I decided to retreat and lick my wounds. How about doing something therapeutic. Like creating another NPM package.

I took some of the functions and runtimes I had cobbled together so far and built the galaxy’s OKest Typescript AWS Lambda generator for yeoman.

This project became the scaffold for most of the Lambda functions that I have built for this project. It was a bit of a deviation from “no yak shaving,” but I think that it has saved me some time.

The next few evenings were spent rebuilding how TBV handles package comparison. I learned a bunch of stuff about tarballs and GZip and related streams that I will expound in another post. The big takeaway is that I ended up NOT looking at the package shasum like I talked about in my last post. Instead, I computed the sha256 of each file in the package and then compared those.

The end result was that I was able to get a better view of why a package doesn’t verify because I could now see what files were added, modified, and removed when comparing packages.
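To make the idea concrete, here is a minimal sketch of a per-file comparison. It is not TBV's actual code. It assumes both packages have already been unpacked into maps of path to file contents, and it treats the package rebuilt from source as the baseline, so “added” means a file that only exists in the published package.

```typescript
import { createHash } from "crypto";

type FileMap = Map<string, Buffer>;

interface PackageDiff {
  added: string[];
  modified: string[];
  removed: string[];
}

// Hex-encoded sha256 of a single file's contents.
function sha256(content: Buffer): string {
  return createHash("sha256").update(content).digest("hex");
}

// Compare the published package against one rebuilt from source.
function diffPackages(rebuilt: FileMap, published: FileMap): PackageDiff {
  const diff: PackageDiff = { added: [], modified: [], removed: [] };

  published.forEach((content, path) => {
    const baseline = rebuilt.get(path);
    if (baseline === undefined) {
      diff.added.push(path);
    } else if (sha256(baseline) !== sha256(content)) {
      diff.modified.push(path);
    }
  });

  rebuilt.forEach((_content, path) => {
    if (!published.has(path)) diff.removed.push(path);
  });

  diff.added.sort();
  diff.modified.sort();
  diff.removed.sort();
  return diff;
}

// A package verifies when nothing differs.
function verifies(diff: PackageDiff): boolean {
  return diff.added.length + diff.modified.length + diff.removed.length === 0;
}
```

Compared with a single shasum over the whole tarball, this tells you which files are responsible when a package fails to verify.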

I also started toying with a new workflow/pipeline model for running the verification sub-tasks. It ended up being super promising, but I am now struggling to get it to run npm ci reliably, so that hasn’t rolled out yet.

Lastly, I ensured that TBV now removes any temp directories it creates. As it turns out, you can run out of disk space in a Lambda function, even between calls. Maybe I’ll talk about this in the future.
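One way to guarantee that cleanup is to wrap the work in a helper that always deletes its directory, even when the work throws. This is a sketch of the pattern rather than what TBV does internally. The "tbv-" prefix is made up, and the synchronous fs calls are only there to keep the example short.

```typescript
import { existsSync, mkdtempSync, rmSync, writeFileSync } from "fs";
import { tmpdir } from "os";
import { join } from "path";

// Run `work` inside a fresh temp directory and always remove it afterwards.
function withTempDir<T>(work: (dir: string) => T): T {
  const dir = mkdtempSync(join(tmpdir(), "tbv-"));
  try {
    return work(dir);
  } finally {
    // recursive + force: delete the directory and anything left inside it.
    rmSync(dir, { recursive: true, force: true });
  }
}

// Usage: write a placeholder file, then confirm the directory is gone.
const usedDir = withTempDir((dir) => {
  writeFileSync(join(dir, "package.tgz"), "placeholder");
  return dir;
});
console.log(existsSync(usedDir)); // false
```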

I also realized that Lambda layers could be used to create custom runtimes as well. I would like to go into detail on how this works and why you might want to try it. For now, though, here is the repo I came up with for building a custom AWS Lambda runtime with Node 10.15 and npm@6.7.0:

I had found another similar custom runtime from rrainn. However, I couldn’t get npm to run on theirs out of the box. I leveraged their javascript bits to make mine work.

rrainn/aws-lambda-custom-node-runtime

This was a fantastic exercise in understanding how the guts of Lambda functions work. It was also one of the first times that I have really used docker to implement a build process.

Another weekend had come and gone, but I was finally able to run TBV in Lambda. The last step was to build one final function for serving badges. At this point, I am just returning custom badges from https://shields.io.
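For the curious, shields.io static badges are addressed by a URL of the form /badge/label-message-color, where dashes and underscores inside the text are doubled and spaces become underscores. The sketch below builds such a URL. The label, message, and color values are hypothetical, and it does not show how the badge function actually serves the result.

```typescript
// Escape text for a shields.io static badge path segment:
// "-" becomes "--", "_" becomes "__", and a space becomes "_".
function escapeBadgeText(text: string): string {
  const escaped = text
    .replace(/-/g, "--")
    .replace(/_/g, "__")
    .replace(/ /g, "_");
  return encodeURIComponent(escaped);
}

// Build a static badge URL from a label, a message, and a color.
function shieldsBadgeUrl(label: string, message: string, color: string): string {
  const path = `${escapeBadgeText(label)}-${escapeBadgeText(message)}-${color}`;
  return `https://img.shields.io/badge/${path}`;
}

// Hypothetical values, chosen only for illustration.
console.log(shieldsBadgeUrl("npm", "verified", "brightgreen"));
console.log(shieldsBadgeUrl("npm", "not-verified", "red"));
```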

Honestly, after spending so much time learning how the sausage is made, I found it very refreshing to just build a regular run-of-the-mill function that runs on a standard runtime without anything exotic behind the scenes.

I took a brief look at building a basic website using Gatsby. That proved to be a bridge too far. I will eventually get that done. For now, I want to focus on the API.

I need your help!

I think that the next steps are to get the community to start hammering on both TBV as a library and as an API. I’m interested to see what breaks. (Oh, yeah, it’s gonna break!)

If something doesn’t look right, please open an issue on the TBV repo:

When opening issues, please run TBV locally with the --verbose option and include the output in the issue. Note that you can also run TBV in a docker container. See the repo README for instructions on that.

Have thoughts or comments? Feel free to open an issue.

Also, if you want to roll up your sleeves and help with the code, I would be honored! Really! To contribute, fork the repo and submit a Pull Request.

But wait there’s more!

I’m so not done with this project! I might take a few weeks off, but I have new functionality and blog posts in the works. If you don’t want to miss an update, go ahead and click “Follow.”

Give this post a few 👏 if you think I earned it, and don’t forget to head on over to verifynpm on GitHub and ⭐ star ⭐ every ⭐ single ⭐ repo ⭐

NPM Package Verification — Ep. 2 was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
