
Naive Bayes is a handy, popular and important Machine Learning algorithm, especially for Text Analytics and general classification. It comes in several variants, namely:

- Gaussian Naive Bayes

- Multinomial Naive Bayes

- Complement Naive Bayes

- Bernoulli Naive Bayes

- Out-of-core Naive Bayes

In this article, I am going to discuss Gaussian Naive Bayes: the algorithm, its implementation, and its application to a miniature dataset taken from Wikipedia.

The Algorithm:

Gaussian Naive Bayes is a probabilistic algorithm. It involves calculating the prior probability of each class in the dataset and the posterior probability of the test data given a class (the probability of the data given a class is more commonly called the likelihood).

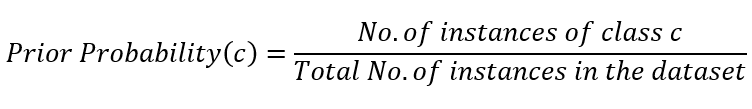

Mathematical Formula for Prior Probability ….. eq-1)


Prior probabilities of all the classes are calculated using the same formula.

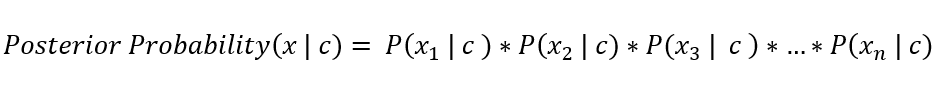

Mathematical formula for the posterior probability of test data x given class c, which is the product of the conditional probabilities of all the features of the test data given class c (this is the "naive" assumption: the features are treated as conditionally independent given the class) ….. eq-2)

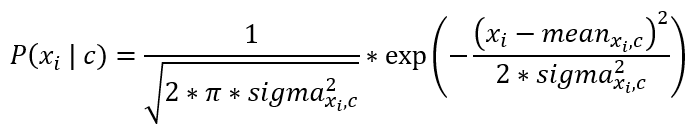

But, how to obtain the conditional probabilities of the test data features given a class?

This is given by the probability obtained from Gaussian (Normal) Distribution.

Mathematical Expression for obtaining the conditional probabilities of a test feature given a class and x_i is a test data feature, c is a class and sigma² is the associated Sample Variance ….. eq-3)

Mathematical Expression for obtaining the conditional probabilities of a test feature given a class and x_i is a test data feature, c is a class and sigma² is the associated Sample Variance ….. eq-3)
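As a small illustration of eq-3), the density of a single feature value under one class can be computed directly from that class's mean and sample variance. The helper name `gaussian_likelihood` is hypothetical, and `mean` and `var` are assumed to be the per-class mean and sample variance of the feature; this is a sketch, not code from the article.

```python
import math

def gaussian_likelihood(x_i, mean, var):
    """Gaussian (Normal) density of feature value x_i for one class, as in eq-3).

    Hypothetical helper: `mean` and `var` are assumed to be the feature's
    mean and sample variance within the class.
    """
    coefficient = 1.0 / math.sqrt(2.0 * math.pi * var)
    exponent = -((x_i - mean) ** 2) / (2.0 * var)
    return coefficient * math.exp(exponent)

# At the mean of a unit-variance feature the density is 1/sqrt(2*pi)
print(round(gaussian_likelihood(0.0, 0.0, 1.0), 4))  # 0.3989
```

Note that this is a density, not a probability, so it can exceed 1 when the variance is small; Naive Bayes only uses these values to compare classes, so that is not a problem.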

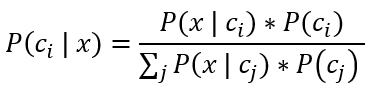

Finally, the conditional probability of each class given an instance (test instance) is calculated using Bayes Theorem.

Mathematical Expression of Conditional Probability of class c_i given test data x ….. eq-4)

Mathematical Expression of Conditional Probability of class c_i given test data x ….. eq-4)

Eq-4) is evaluated for every class, and the class with the highest probability is declared the predicted result.
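For readers without the equation images, eq-1) to eq-4) and the final decision rule can be written out as below. The notation is an assumption chosen to match the captions: N_c is the number of training instances of class c, N the total number of instances, n the number of features, and mu_{c,i} and sigma²_{c,i} the mean and sample variance (dividing by N_c - 1) of feature i within class c. The class index is written as k in eq-4) to keep it distinct from the feature index i.

```latex
\begin{aligned}
P(c) &= \frac{N_c}{N} && \text{(eq-1)}\\
P(x \mid c) &= \prod_{i=1}^{n} P(x_i \mid c) && \text{(eq-2)}\\
P(x_i \mid c) &= \frac{1}{\sqrt{2\pi\sigma_{c,i}^{2}}}
  \exp\!\left(-\frac{(x_i-\mu_{c,i})^{2}}{2\sigma_{c,i}^{2}}\right) && \text{(eq-3)}\\
P(c_k \mid x) &= \frac{P(x \mid c_k)\,P(c_k)}{\sum_{j} P(x \mid c_j)\,P(c_j)} && \text{(eq-4)}\\
\hat{c} &= \arg\max_{k}\; P(c_k \mid x)
\end{aligned}
```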

Implementation in Python from scratch:

As the title says, the implementation is from scratch: no library other than NumPy (which gives Python a MATLAB-like environment) and the standard-library collections module has been used to code the algorithm. The Gaussian Naive Bayes is implemented as 4 functions for binary classification, each performing a different operation.

=> pre_prob(): It takes the label set y as input and returns the prior probabilities of the 2 classes as per eq-1). The implementation of pre_prob() is given below:

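```python
# Importing necessary libraries...
import collections
import numpy as np

def pre_prob(y):
    # count how many times each label (0 or 1) occurs in y
    y_dict = collections.Counter(y)
    pre_probab = np.ones(2)
    for i in range(0, 2):
        # eq-1): instances of class i divided by the total number of instances
        pre_probab[i] = y_dict[i] / y.shape[0]
    return pre_probab
```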
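pre_prob() is written for exactly two classes labelled 0 and 1. As a sketch of how eq-1) generalizes, the hypothetical helper `pre_prob_any` below (not part of the original article) computes the prior of every label that appears in y, whatever the number of classes, using NumPy's `np.unique` with `return_counts=True`.

```python
import numpy as np

def pre_prob_any(y):
    """Prior probability of every class label found in y, as per eq-1).

    Hypothetical generalization of pre_prob() to any number of classes.
    Returns (labels, priors), with the labels in sorted order.
    """
    labels, counts = np.unique(y, return_counts=True)
    priors = counts / counts.sum()
    return labels, priors

labels, priors = pre_prob_any(np.array([0, 0, 1, 2]))
print(labels.tolist(), priors.tolist())  # [0, 1, 2] [0.5, 0.25, 0.25]
```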

=> mean_var(): It takes the feature set X and the label set y as input and returns the mean and sample variance of every feature for each of the 2 class labels (binary classification). The implementation of mean_var() is given below:

```python
def mean_var(X, y):
    n_features = X.shape[1]
    m = np.ones((2, n_features))  # row 0: class 0, row 1: class 1
    v = np.ones((2, n_features))
    # number of instances of each class
    n_0 = int(np.sum(y == 0))
    n_1 = X.shape[0] - n_0
    # split the rows of X by class label
    x0 = np.ones((n_0, n_features))
    x1 = np.ones((n_1, n_features))
    k = 0
    for i in range(0, X.shape[0]):
        if y[i] == 0:
            x0[k] = X[i]
            k = k + 1
    k = 0
    for i in range(0, X.shape[0]):
        if y[i] == 1:
            x1[k] = X[i]
            k = k + 1
    for j in range(0, n_features):
        m[0][j] = np.mean(x0.T[j])
        # np.var is the population variance; rescale by n/(n-1) for the sample variance
        v[0][j] = np.var(x0.T[j]) * (n_0 / (n_0 - 1))
        m[1][j] = np.mean(x1.T[j])
        v[1][j] = np.var(x1.T[j]) * (n_1 / (n_1 - 1))
    return m, v  # mean and variance
```
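The two copying loops in mean_var() can be replaced by boolean masks, and the n/(n-1) rescaling by NumPy's `ddof=1` argument, which divides by n - 1 directly. The sketch below (hypothetical name `mean_var_vectorized`, still assuming labels 0 and 1) should return the same m and v as mean_var().

```python
import numpy as np

def mean_var_vectorized(X, y):
    """Per-class feature means and sample variances for labels 0 and 1.

    Row k of each returned array holds the statistics of class k.
    Hypothetical vectorized counterpart of mean_var().
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    m = np.vstack([X[y == k].mean(axis=0) for k in (0, 1)])
    # ddof=1 divides by (n_k - 1): the sample variance used in eq-3)
    v = np.vstack([X[y == k].var(axis=0, ddof=1) for k in (0, 1)])
    return m, v

X = np.array([[1.0, 10.0], [3.0, 14.0], [2.0, 20.0], [4.0, 30.0]])
y = np.array([0, 0, 1, 1])
m, v = mean_var_vectorized(X, y)
print(m.tolist())  # [[2.0, 12.0], [3.0, 25.0]]
print(v.tolist())  # [[2.0, 8.0], [2.0, 50.0]]
```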

=> prob_feature_class(): It takes the mean m, variance v and test data x as input and returns the posterior probabilities of the test data x given each class c (eq-2), using the Gaussian density of eq-3) for each feature. The implementation of prob_feature_class() is given below:

```python
def prob_feature_class(m, v, x):
    n_features = m.shape[1]
    pfc = np.ones(2)
    for i in range(0, 2):
        product = 1
        for j in range(0, n_features):
            # eq-3): Gaussian density of feature j under class i,
            # multiplied over all features as per eq-2)
            product = product * (1 / np.sqrt(2 * np.pi * v[i][j])) \
                * np.exp(-0.5 * (x[j] - m[i][j]) ** 2 / v[i][j])
        pfc[i] = product
    return pfc
```
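The double loop in prob_feature_class() evaluates eq-3) for every (class, feature) pair and multiplies along the features, as per eq-2). With NumPy broadcasting the same computation fits in two lines. The sketch below (hypothetical name `prob_feature_class_vectorized`) assumes m and v have shape (n_classes, n_features), as returned by mean_var(), so it also works for more than 2 classes.

```python
import numpy as np

def prob_feature_class_vectorized(m, v, x):
    """P(x | c) for each class c, i.e. eq-2) built from eq-3).

    m, v: arrays of shape (n_classes, n_features); x: shape (n_features,).
    Hypothetical vectorized counterpart of prob_feature_class().
    """
    m, v, x = (np.asarray(a, dtype=float) for a in (m, v, x))
    # x broadcasts against every row of m and v: one density per (class, feature)
    densities = np.exp(-0.5 * (x - m) ** 2 / v) / np.sqrt(2 * np.pi * v)
    # eq-2): product over the features (axis 1) for each class
    return densities.prod(axis=1)

m = np.array([[0.0, 0.0], [1.0, 1.0]])
v = np.array([[1.0, 1.0], [1.0, 1.0]])
p = prob_feature_class_vectorized(m, v, np.array([0.0, 0.0]))
print(p)
```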

=> GNB(): It combines the 3 other functions, using the quantities they return to calculate the conditional probability of each of the 2 classes given the test instance x (eq-4). It takes the feature set X, label set y and test data x as input and returns:

- Mean of the 2 classes for all the features

- Variance of the 2 classes for all the features

- Prior Probabilities of the 2 classes in the dataset

- Posterior Probabilities of the test data given each of the 2 classes

- Conditional Probability of each of the 2 classes given the test data

- Final Prediction given by the Gaussian Naive Bayes Algorithm

The implementation of GNB() is given below:

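```python
def GNB(X, y, x):
    m, v = mean_var(X, y)
    pfc = prob_feature_class(m, v, x)
    pre_probab = pre_prob(y)
    pcf = np.ones(2)
    # denominator of eq-4): total probability of x over both classes
    total_prob = 0
    for i in range(0, 2):
        total_prob = total_prob + (pfc[i] * pre_probab[i])
    # eq-4): Bayes' theorem for each class
    for i in range(0, 2):
        pcf[i] = (pfc[i] * pre_probab[i]) / total_prob
    # the class with the highest conditional probability is the prediction
    prediction = int(pcf.argmax())
    return m, v, pre_probab, pfc, pcf, prediction
```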
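With many features, the product in eq-2) can underflow to 0 for every class, which makes total_prob zero and the division in eq-4) undefined. A common remedy, not used in the original code, is to work with log-probabilities and normalize with the log-sum-exp trick. The sketch below (hypothetical name `gnb_posterior_log`) takes the same means, variances, priors and test instance that GNB() works with internally.

```python
import numpy as np

def gnb_posterior_log(m, v, priors, x):
    """Class posteriors P(c | x) of eq-4), computed in log space.

    m, v: (n_classes, n_features) means and sample variances;
    priors: (n_classes,) prior probabilities; x: (n_features,) test instance.
    Hypothetical helper; returns (posteriors, predicted class index).
    """
    m, v, priors, x = (np.asarray(a, dtype=float) for a in (m, v, priors, x))
    # log of eq-3) for every (class, feature), summed over features: log of eq-2)
    log_lik = (-0.5 * np.log(2 * np.pi * v) - 0.5 * (x - m) ** 2 / v).sum(axis=1)
    log_joint = log_lik + np.log(priors)
    # log-sum-exp: subtract the maximum before exponentiating to avoid underflow
    shift = log_joint.max()
    log_evidence = shift + np.log(np.exp(log_joint - shift).sum())
    posteriors = np.exp(log_joint - log_evidence)
    return posteriors, int(posteriors.argmax())

# 2000 features: the direct product of densities underflows, the log form does not
n = 2000
m = np.vstack([np.zeros(n), np.full(n, 0.1)])
v = np.ones((2, n))
x = np.zeros(n)
direct = np.prod(np.exp(-0.5 * (x - m) ** 2 / v) / np.sqrt(2 * np.pi * v), axis=1)
post, pred = gnb_posterior_log(m, v, [0.5, 0.5], x)
print(direct)  # both direct products underflow to 0.0
print(post, pred)
```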

Application of Gaussian Naive Bayes on a Miniature Dataset

The sample gender dataset given in the Wikipedia article on the naive Bayes classifier has been used to apply the implemented Gaussian Naive Bayes.

Naive Bayes classifier - Wikipedia

Problem Statement: “Given the height (in feet), weight (in lbs) and foot size (in inches), predict whether the person is a male or female”

=> The data is read using Pandas, as the dataset contains textual column headings. Apart from converting the data to NumPy arrays, Pandas is not used in any of the operations or data manipulations that follow.

import pandas as pdimport numpy as np

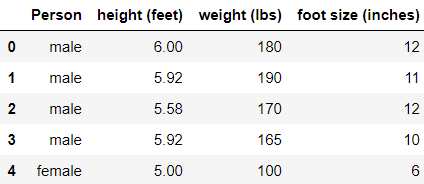

data = pd.read_csv('gender.csv', delimiter = ',')data.head() Snap of the dataset being used

Snap of the dataset being used

=> Executing the 4-function Gaussian Naive Bayes on the test instance used in the Wikipedia article:


```python
# converting from pandas to numpy ...
X_train = np.array(data.iloc[:, [1, 2, 3]])
y_train = np.array(data['Person'])

# encoding the labels: Female -> 0, Male -> 1
# (np.where returns an integer array instead of the object array of strings)
y_train = np.where(y_train == "Female", 0, 1)

x = np.array([6, 130, 8])  # test instance used in Wikipedia
```

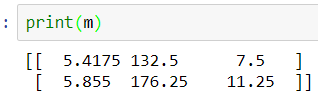

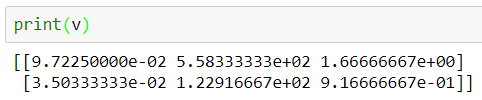

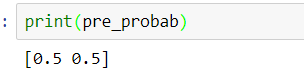

```python
# executing the Gaussian Naive Bayes for the test instance...
m, v, pre_probab, pfc, pcf, prediction = GNB(X_train, y_train, x)
print(m)           # output given below: mean of the 2 classes for all features
print(v)           # output given below: variance of the 2 classes for all features
print(pre_probab)  # output given below: prior probabilities
print(pfc)         # output given below: posterior probabilities
print(pcf)         # output given below: conditional probabilities of the classes given the test data
print(prediction)  # output given below: final prediction
```

Mean of the 2 classes (rows) of all features (columns)

Mean of the 2 classes (rows) of all features (columns) Sample Variance of the 2 classes (rows) of all features (columns)

Sample Variance of the 2 classes (rows) of all features (columns) Prior Probabilities of the classes, Female and Male

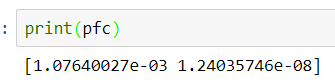

Prior Probabilities of the classes, Female and Male Posterior probabilities of the test-data given each of the 2 classes

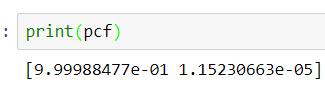

Posterior probabilities of the test-data given each of the 2 classes Final Conditional Probabilities of the 2 classes given the test-data

Final Conditional Probabilities of the 2 classes given the test-data Final Prediction of the Test Instance which refers to Female

Final Prediction of the Test Instance which refers to Female

Finally, the calculations and the predicted result agree with those shown in Wikipedia for the same dataset.
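To check this agreement without the gender.csv file, the sketch below rebuilds the eight training rows of the Wikipedia example directly in NumPy (assuming gender.csv holds the same rows) and recomputes the class statistics and the prediction for the test instance [6, 130, 8]. Labels follow the encoding used above (Female as 0, Male as 1). The compact vectorized code is an illustrative re-implementation of eq-1) to eq-4), not the article's four functions.

```python
import numpy as np

# Training rows from the Wikipedia example: height (ft), weight (lbs), foot size (in).
# Assumed to match the contents of gender.csv.
X = np.array([
    [6.00, 180, 12], [5.92, 190, 11], [5.58, 170, 12], [5.92, 165, 10],  # male
    [5.00, 100, 6], [5.50, 150, 8], [5.42, 130, 7], [5.75, 150, 9],      # female
], dtype=float)
y = np.array([1, 1, 1, 1, 0, 0, 0, 0])  # Female -> 0, Male -> 1
x = np.array([6, 130, 8], dtype=float)  # test instance used in Wikipedia

classes = (0, 1)
priors = np.array([np.mean(y == k) for k in classes])               # eq-1)
m = np.vstack([X[y == k].mean(axis=0) for k in classes])           # class means
v = np.vstack([X[y == k].var(axis=0, ddof=1) for k in classes])    # sample variances
dens = np.exp(-0.5 * (x - m) ** 2 / v) / np.sqrt(2 * np.pi * v)   # eq-3)
pfc = dens.prod(axis=1)                                             # eq-2)
pcf = pfc * priors / np.sum(pfc * priors)                           # eq-4)
prediction = int(pcf.argmax())

print(m.round(4))
print(v.round(4))
print(prediction)  # 0, i.e. Female
```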

This implementation of Gaussian Naive Bayes can also be used for multi-class classification by running it once for each class in a One-vs-Rest fashion.
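One way to carry out the One-vs-Rest idea: for each class k, relabel the data as 1 for class k and 0 for everything else, run the binary classifier, and keep the probability it assigns to label 1; the class with the largest score wins. The sketch below follows that recipe with a compact binary scorer (hypothetical names `binary_gnb_score` and `ovr_predict`), and the three-class toy data is made up purely for illustration. Gaussian Naive Bayes can also handle several classes natively by evaluating eq-1) to eq-4) over all classes at once, which is a different approach from One-vs-Rest.

```python
import numpy as np

def binary_gnb_score(X, y01, x):
    """P(label 1 | x) from a two-class Gaussian Naive Bayes (eq-1 to eq-4).

    Hypothetical compact stand-in for GNB(); y01 must contain labels 0 and 1,
    each with at least two rows so the sample variance is defined.
    """
    log_joint = np.empty(2)
    for k in (0, 1):
        Xk = X[y01 == k]
        mean, var = Xk.mean(axis=0), Xk.var(axis=0, ddof=1)
        # log of eq-2) built from eq-3), plus the log prior of eq-1)
        log_lik = np.sum(-0.5 * np.log(2 * np.pi * var) - 0.5 * (x - mean) ** 2 / var)
        log_joint[k] = log_lik + np.log(len(Xk) / len(X))
    # eq-4) normalization, shifted by the maximum for numerical safety
    weights = np.exp(log_joint - log_joint.max())
    return weights[1] / weights.sum()

def ovr_predict(X, y, x):
    """One-vs-Rest: score each class against all the others, pick the highest."""
    classes = np.unique(y)
    scores = [binary_gnb_score(X, (y == c).astype(int), x) for c in classes]
    return classes[int(np.argmax(scores))], scores

# Made-up toy data with three well-separated classes, for illustration only
X = np.array([[0.0, 0.0], [0.5, 0.2], [0.2, 0.4],
              [5.0, 5.0], [5.3, 4.8], [4.9, 5.4],
              [10.0, 0.0], [10.4, 0.3], [9.8, -0.2]])
y = np.array([0, 0, 0, 1, 1, 1, 2, 2, 2])
label, scores = ovr_predict(X, y, np.array([5.1, 5.0]))
print(label)  # 1
```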

That’s all about Gaussian Naive Bayes!!!

For personal contact regarding the article, or for discussions on Machine Learning, Data Mining or any other area of Data Science, feel free to reach out to me on LinkedIn

Navoneel Chakrabarty - Contributing Writer - Hacker Noon | LinkedIn

Implementation of Gaussian Naive Bayes in Python from scratch was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.

Disclaimer

The views and opinions expressed in this article are solely those of the authors and do not reflect the views of Bitcoin Insider. Every investment and trading move involves risk - this is especially true for cryptocurrencies given their volatility. We strongly advise our readers to conduct their own research when making a decision.