Photo by Lukas Blazek on Unsplash

So you’ve decided to build an application for Alexa or Google? Congratulations! A lot of people start by poring over the technical documentation, tutorials, and sample code. I know I did when I wrote my first application for Alexa three years ago. It’s a tempting approach, especially for a technology enthusiast. But I want you to stop and take 30 minutes to improve the customer engagement of your voice application before you write a line of code. How? Test your language model with other people.

When building a voice application, much of the heavy work of deriving meaning from speech is done by the platform providers. Alexa and Google provide the sophisticated algorithms that do this speech conversion. But you still need to provide their platforms with training phrases (utterances) and a blueprint of how those utterances map to different JSON requests (intents) for your code. Take the time to test these training phrases with human beings to make sure you’re meeting customer expectations.

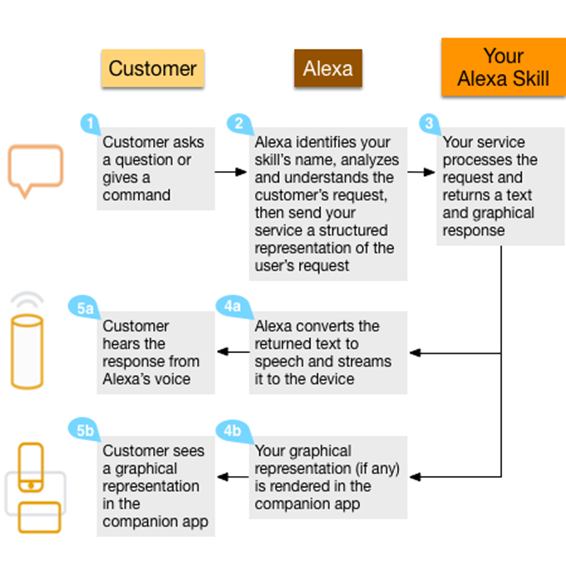

Customer interaction with your Alexa skill

Let’s consider building a skill that lets a customer order a pizza. We’ll want to support a few different scenarios:

- The customer should be able to tell us which pizza they want, including both the size and the type of pizza (“a large pepperoni pizza”).

- They will need to indicate whether they are picking up the pizza or need delivery.

- They will need to confirm the order after hearing the total.

As the designer of this skill, my initial approach is a linear flow through these steps, with a language model somewhat like this:

OrderIntent (in response to the question "What would you like to order?")

- "I'd like a {Size} {Type} pizza"

- "Can I get a {Size} {Type} pizza?"

- "Let's go with a {Size} {Type} pizza"

- "a {Size} {Type} pizza"

Note that {Size} would be small, medium, or large; {Type} would be pepperoni, sausage, Hawaiian, etc. If either of these is not provided, we'll prompt the user to fill the slot ("What size pizza would you like?").

DeliveryIntent (in response to the question "Would you like pick-up or delivery?")

- "Pick up"

- "I'll pick it up"

- "Delivery"

- "I'd like it delivered"

- "Let's deliver it"

Yes/No (built-in intents in response to the question "Your total is $x. Would you like to place this order?")
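Before testing with people, it can help to write the model down as plain data so the sample phrases are easy to read back. The Python sketch below is only an illustration under stated assumptions: it is not the model format of either platform, the names `LANGUAGE_MODEL`, `SLOT_VALUES`, and `expand` are hypothetical, and the slot values are just the examples mentioned above.

```python
from itertools import product

# Assumed slot values; the article lists these only as examples.
SLOT_VALUES = {
    "Size": ["small", "medium", "large"],
    "Type": ["pepperoni", "sausage", "Hawaiian"],
}

# Intent name -> sample utterances, copied from the model above.
LANGUAGE_MODEL = {
    "OrderIntent": [
        "I'd like a {Size} {Type} pizza",
        "Can I get a {Size} {Type} pizza?",
        "Let's go with a {Size} {Type} pizza",
        "a {Size} {Type} pizza",
    ],
    "DeliveryIntent": [
        "Pick up",
        "I'll pick it up",
        "Delivery",
        "I'd like it delivered",
        "Let's deliver it",
    ],
}


def expand(template):
    """Return every concrete phrase a sample produces from SLOT_VALUES."""
    # Find which slots this sample uses, in a stable order.
    slots = [name for name in SLOT_VALUES if "{" + name + "}" in template]
    phrases = []
    # A sample with no slots yields exactly one phrase: itself.
    for combo in product(*(SLOT_VALUES[name] for name in slots)):
        phrases.append(template.format(**dict(zip(slots, combo))))
    return phrases


if __name__ == "__main__":
    for intent, samples in LANGUAGE_MODEL.items():
        for sample in samples:
            for phrase in expand(sample):
                print(intent, "->", phrase)
```

Reading the expanded phrases aloud is a quick first check on whether the samples sound like something a person would actually say.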

Seems straightforward. Voice platforms make it easy to seed these utterances, and a matching utterance sends your code a JSON request for the specified intent. But voice is unique. Voice means free-form, natural conversations. And a good third-party application should allow people to complete their tasks in a natural, conversational tone. You don’t need fancy tools or layouts to test your model; just observe how people talk with your skill. Try it out with your team, or with your customers. Get in a room with no machines, no preconceptions, no designs, and ask people to complete a task.

I did this for the pizza skill: I asked five of my friends and coworkers to order a pizza with my skill. Not a rigorous round of market testing, to be sure, but with only 30 minutes of effort I discovered several scenarios that weren’t covered by my language model:

- “One large one topping with pepperoni”

- “I’d like a large pepperoni pizza with thin crust.”

- “Can I order a large pepperoni pizza with garlic bread stick?”

- “I would like two large pepperoni pizzas and a large coke and some chicken wings”

- “I would like a Hawaiian pizza but only half, the other half pineapple only”

- “What size pizzas do you have?”
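A rough way to record what a session like this turns up is to compare each collected utterance against the samples. The Python sketch below is an assumption-heavy illustration: it uses strict whole-phrase matching, which is not how the platforms work (they generalize beyond exact sample matches), so it overstates the gaps. The names `ORDER_SAMPLES`, `OBSERVED`, `is_covered`, and `uncovered` are hypothetical, and the slot values are the examples from the model.

```python
import re

# Sample utterances for OrderIntent, copied from the language model.
ORDER_SAMPLES = [
    "I'd like a {Size} {Type} pizza",
    "Can I get a {Size} {Type} pizza?",
    "Let's go with a {Size} {Type} pizza",
    "a {Size} {Type} pizza",
]

# Assumed slot values; the article names these only as examples.
SLOT_VALUES = {
    "Size": ["small", "medium", "large"],
    "Type": ["pepperoni", "sausage", "hawaiian"],
}

# Utterances collected from the five testers.
OBSERVED = [
    "One large one topping with pepperoni",
    "I'd like a large pepperoni pizza with thin crust.",
    "Can I order a large pepperoni pizza with garlic bread stick?",
    "I would like two large pepperoni pizzas and a large coke and some chicken wings",
    "I would like a Hawaiian pizza but only half, the other half pineapple only",
    "What size pizzas do you have?",
]


def clean(text):
    """Lowercase, straighten apostrophes, and drop other punctuation."""
    text = text.lower().replace("\u2019", "'")
    return re.sub(r"[^a-z0-9' ]", "", text)


def to_regex(sample):
    """Compile one sample into a strict, whole-phrase pattern."""
    # Splitting on a capture group alternates literal text and slot names.
    parts = re.split(r"\{(\w+)\}", sample)
    pattern = ""
    for index, part in enumerate(parts):
        if index % 2 == 0:
            pattern += re.escape(clean(part))
        else:
            values = "|".join(re.escape(v) for v in SLOT_VALUES[part])
            pattern += f"(?:{values})"
    return re.compile(pattern)


PATTERNS = [to_regex(sample) for sample in ORDER_SAMPLES]


def is_covered(utterance):
    """True if the utterance exactly fits one of the sample patterns."""
    phrase = clean(utterance).strip()
    return any(p.fullmatch(phrase) for p in PATTERNS)


def uncovered(utterances):
    """Return the utterances that no sample pattern matches."""
    return [u for u in utterances if not is_covered(u)]


if __name__ == "__main__":
    for utterance in uncovered(OBSERVED):
        print("Not covered:", utterance)
```

Treat the output as a to-do list for discussion, not a verdict: some gaps call for new samples or slots, others for a help message or a clearer prompt.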

Responses to whether the customer wanted to pick up the pizza or have it delivered were consistent with my model. This is probably because the question named the two available options, which makes a creative response unnatural. It is worth noting that one person asked for the order to be repeated at this step, which suggests it may be useful to add a repeat intent that responds with the in-process order details.
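A repeat request like that one could be handled by reading back whatever has been collected so far and then asking the open question again. The Python sketch below assumes the order is kept in a simple dictionary with hypothetical keys `size`, `type`, and `method`; the function names are also made up for illustration and do not come from either platform's SDK.

```python
def summarize_order(order):
    """Describe the order details collected so far."""
    if not (order.get("size") and order.get("type")):
        return "You haven't chosen a pizza yet."
    summary = f"So far you have a {order['size']} {order['type']} pizza"
    # Only mention pick-up or delivery once the customer has chosen.
    if order.get("method"):
        summary += f" for {order['method']}"
    return summary + "."


def handle_repeat(order, pending_question):
    """Reply to a repeat request, then re-ask the question still open."""
    return f"{summarize_order(order)} {pending_question}"


if __name__ == "__main__":
    in_progress = {"size": "large", "type": "pepperoni"}
    print(handle_repeat(in_progress, "Would you like pick-up or delivery?"))
```

Ending on the pending question keeps the conversation moving instead of leaving the customer to guess what to say next.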

Now, you may not want to support all of these interactions in an initial release. But they do highlight what customers expect when interacting with your skill. Address them in your language model, in your dialog flow, in a welcome message that sets expectations (“I can place a pizza order for you now. If you want to customize your order, please call our store directly.”), or in a help message. One of my customer conversations turned into a loop as the customer kept asking what sizes of pizza were available (to which I repeatedly said “Sorry, I didn’t understand you. Can you say that again?”). It’s good practice to break out of this loop by proactively offering help if a customer repeatedly says something your code can’t process.
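One simple way to break out of that loop is to count consecutive misses and switch from the generic reprompt to a help message once a threshold is reached. The Python sketch below is a minimal illustration, assuming session state is a plain dictionary; the threshold `MAX_MISSES`, the help wording, and the function names are all hypothetical.

```python
MAX_MISSES = 2  # Assumed threshold: offer help on the second miss in a row.

REPROMPT = "Sorry, I didn't understand you. Can you say that again?"
HELP = (
    "I can order a small, medium, or large pizza for pick-up or delivery. "
    "For example, say: a large pepperoni pizza."
)


def respond_to_unrecognized(session):
    """Return the reply for an unrecognized utterance and track misses."""
    session["misses"] = session.get("misses", 0) + 1
    if session["misses"] >= MAX_MISSES:
        # Reset so the customer gets a fresh start after hearing help.
        session["misses"] = 0
        return HELP
    return REPROMPT


def note_recognized(session):
    """Clear the miss count whenever an utterance is understood."""
    session["misses"] = 0


if __name__ == "__main__":
    session = {}
    print(respond_to_unrecognized(session))  # generic reprompt
    print(respond_to_unrecognized(session))  # help message
```

In the looping conversation described above, the second "what sizes do you have?" would have produced the help message, which happens to answer the question.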

So the next time you set out to write an engaging application for humans to converse with machines, take the time up front to focus on the human side of the equation.

Improve your voice application in 30 minutes! was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
