ID verification pipeline with Deep Learning

By Grigoriy Ivanov, Computer Vision Researcher and Engineer at Poteha Labs

Whether you are a bank that wants to attract more clients without forcing them to visit a branch, or a large government organization that needs to streamline access to secure areas, you might want an identity verification service, also known as KYC (Know Your Customer), as part of your system.

Besides being a cutting-edge feature that makes you a ‘high-tech’ company, automatic ID verification is useful in many ways. First, it automates registration and thus reduces staff costs. Second, its speed and convenience can increase the flow of incoming users.

Furthermore, it can be installed at checkpoints to open the gates for verified people only. Finally, such a system would almost eliminate the impact of human factors such as inattentiveness or a shortage of security guards.

Thanks to deep learning, face verification is one of the simplest verification techniques today. Such a service may, for example, take an ID document and a selfie as input and return an ‘equality score’ for the two faces in these photos. One such system is the Chinese DocFace [1]: it does essentially everything we are aiming for and is successfully used for automatic passport control at checkpoints. In the rest of this article we describe how to implement a similar system 1) using open-source projects and 2) with little training data available.

Problem statement and caveats

To start, let’s fix and discuss the problem statement.

- Given: two photos, a photo of an ID document (or any other document used to prove identity) and a selfie. For simplicity’s sake, we consider only images that contain a single face.

- To find: whether the faces in the given photos belong to the same person.

There are plenty of pitfalls in this seemingly simple problem. What if the training dataset consists of low-quality photos? What if some of the images are flipped or rotated? What if there are lamp or flash reflections right on the face?

Another example is when the photo of a document contains more than one face: the main one, which should be used for identification, and another one as a watermark.

One more important caveat is that the distribution of faces in passport photos differs from that of selfies. The same applies to photos of celebrities, whose faces are typically used to train modern open-source neural networks.

Some of the problems listed above can be solved without gathering your own dataset. Others (the last two) are quite hard to address within our constraints. Since we restrict ourselves to open-source projects, we will skip them for now; they can be addressed by gathering additional data and training separate models for selfies and passports with some shared weights, as described in [2].

Typical deep learning pipeline for person verification

Now let’s turn to the development steps. The simplest pipeline for face ID verification is as follows:

- Detect faces in images.

- Compute face descriptors (embeddings).

- Compare descriptors.

We will tackle and describe each block in turn.
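The three steps can be wired together as in the minimal sketch below. It is not the authors’ code: `detect_and_align`, `embed` and `similarity` are hypothetical callables standing in for a detector with alignment (such as MTCNN), an embedding model (such as SphereFace) and a comparison function (such as cosine similarity), and `threshold` has to be chosen on validation data. Following the problem statement, the sketch insists on exactly one face per photo.

```python
from typing import Any, Callable, List, Sequence, Tuple

Embedding = Sequence[float]


def verify(
    id_photo: Any,
    selfie: Any,
    detect_and_align: Callable[[Any], List[Any]],          # hypothetical: photo -> aligned face crops
    embed: Callable[[Any], Embedding],                      # hypothetical: face crop -> embedding
    similarity: Callable[[Embedding, Embedding], float],   # e.g. cosine similarity
    threshold: float,
) -> Tuple[bool, float]:
    """Return (same_person, score) for an ID photo and a selfie."""
    crops = []
    for photo in (id_photo, selfie):
        faces = detect_and_align(photo)                     # step 1: detect and align
        if len(faces) != 1:
            # The problem statement assumes exactly one face per photo.
            raise ValueError(f"expected exactly one face, found {len(faces)}")
        crops.append(faces[0])
    emb_id, emb_selfie = embed(crops[0]), embed(crops[1])   # step 2: embed
    score = similarity(emb_id, emb_selfie)                  # step 3: compare
    return score >= threshold, score


# Toy demo: each "photo" is already one face, the "embedding" is the face itself
# (a unit vector), and similarity is the plain dot product.
same, score = verify(
    [1.0, 0.0], [0.6, 0.8],
    detect_and_align=lambda photo: [photo],
    embed=lambda face: face,
    similarity=lambda a, b: sum(x * y for x, y in zip(a, b)),
    threshold=0.5,
)
print(same, score)
```

Keeping each block behind a plain callable makes it easy to swap the detector or the embedding model without touching the rest of the pipeline.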

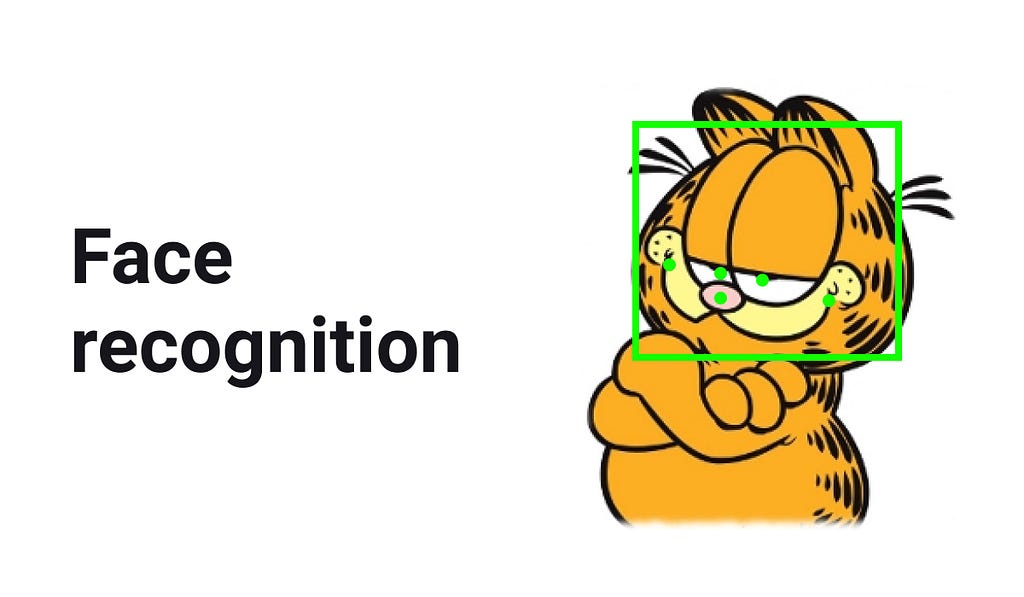

Face detection

There are plenty of face detection algorithms: Haar cascades (with a popular implementation in OpenCV), HOG-based detectors (for example, the one in dlib) and neural networks.

On the one hand, classical approaches are usually fast but not as accurate as techniques based on DNNs (deep neural networks). On the other hand, DNNs were computationally expensive and hard to use until recently, but these drawbacks are now actively being eliminated.

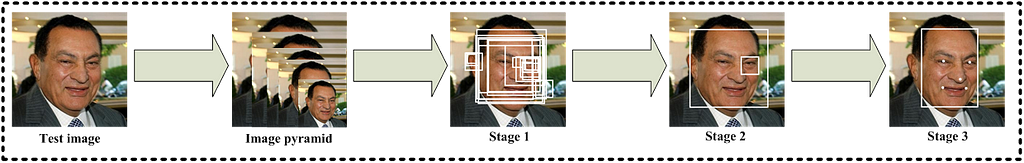

One of our favorite deep learning algorithms is MTCNN [3]. Among DNN approaches it is fast, simple and accurate. It also detects five facial landmarks: the eyes, the nose tip and the two mouth corners. These points are then used to rotate and scale the faces so that all faces we compare have the same orientation.

Basically, MTCNN is a cascade of three small neural networks (called P-Net, R-Net and O-Net in [3]). The first generates a lot of proposals: regions that might contain faces. The second filters most of them out. Finally, the third refines the predictions and regresses the facial landmarks. To detect faces at multiple scales, the algorithm is run on a pyramid of resized versions of the original photo.
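Two ingredients of this cascade are easy to show in isolation: the list of scales for the image pyramid and the non-maximum suppression (NMS) used to merge overlapping proposals. The sketch below is our own illustration, not code from [3] or [4]. The defaults (a 12-pixel window for the first network, a minimum face size of 20 pixels, a scale factor of 0.709 and an IoU threshold of 0.5) are assumptions based on values commonly found in MTCNN implementations.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def pyramid_scales(height: int, width: int, min_face: int = 20,
                   factor: float = 0.709, window: int = 12) -> List[float]:
    """Resize factors for the image pyramid.

    At scale s, the first network's window of `window` pixels covers a face of
    about window / s pixels in the original photo. Scales run from "smallest
    face fills the window" down until the resized photo is smaller than the window.
    """
    m = window / min_face
    min_side = min(height, width) * m
    scales = []
    count = 0
    while min_side >= window:
        scales.append(m * factor ** count)
        min_side *= factor
        count += 1
    return scales


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: visit boxes by descending score and drop any box that
    overlaps an already kept box with IoU >= iou_threshold."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) < iou_threshold for j in keep):
            keep.append(i)
    return keep


print(pyramid_scales(480, 640))
print(nms([(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)], [0.9, 0.8, 0.7]))
```

The first scale maps a 20-pixel face onto the 12-pixel window; each subsequent scale shrinks the photo further so that larger faces also fit the window.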

To bring the faces into the same orientation, one can take predefined landmark positions for a ‘normal’ face and estimate a similarity transform from the detected landmarks to these positions (using, for example, skimage.transform.estimate_transform).

We chose a great TensorFlow implementation [4], which is as easy to use as any Python package.
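Here is a dependency-free sketch of that alignment step. It fits the least-squares similarity transform (rotation, uniform scale and translation) mapping the five detected landmarks onto a reference template, using the fact that such a transform is simply z -> a*z + b when points are written as complex numbers. In practice you would more likely call `skimage.transform.estimate_transform('similarity', src, dst)`. The 96x112 template below is one commonly used with SphereFace-style models; treat the exact values and the landmark order as assumptions to check against your detector and embedding model.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Matrix = List[List[float]]

# Assumed five-point template for a 96x112 (width x height) crop, in the order:
# left eye, right eye, nose tip, left mouth corner, right mouth corner.
REFERENCE_96x112: List[Point] = [
    (30.2946, 51.6963), (65.5318, 51.5014), (48.0252, 71.7366),
    (33.5493, 92.3655), (62.7299, 92.2041),
]


def estimate_similarity(src: List[Point], dst: List[Point]) -> Matrix:
    """Least-squares similarity transform mapping src points onto dst points.

    Points are treated as complex numbers, so the model is w = a*z + b with
    a = scale * exp(i * angle). Returns a 2x3 affine matrix.
    """
    zs = [complex(x, y) for x, y in src]
    ws = [complex(x, y) for x, y in dst]
    n = len(zs)
    z_mean = sum(zs) / n
    w_mean = sum(ws) / n
    # Closed-form solution on centered points: a = sum(w_c * conj(z_c)) / sum(|z_c|^2).
    num = sum((w - w_mean) * (z - z_mean).conjugate() for z, w in zip(zs, ws))
    den = sum(abs(z - z_mean) ** 2 for z in zs)
    a = num / den
    b = w_mean - a * z_mean
    return [[a.real, -a.imag, b.real],
            [a.imag, a.real, b.imag]]


def apply_transform(matrix: Matrix, point: Point) -> Point:
    """Apply a 2x3 affine matrix to a single (x, y) point."""
    x, y = point
    return (matrix[0][0] * x + matrix[0][1] * y + matrix[0][2],
            matrix[1][0] * x + matrix[1][1] * y + matrix[1][2])


# Made-up detector output, for illustration only.
detected = [(210.0, 240.0), (290.0, 236.0), (252.0, 285.0), (220.0, 330.0), (285.0, 327.0)]
print(estimate_similarity(detected, REFERENCE_96x112))
```

The 2x3 matrix maps photo coordinates to template coordinates, which is the layout OpenCV’s `cv2.warpAffine` expects; converted to a NumPy array, it would produce the aligned crop with `dsize=(96, 112)`.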

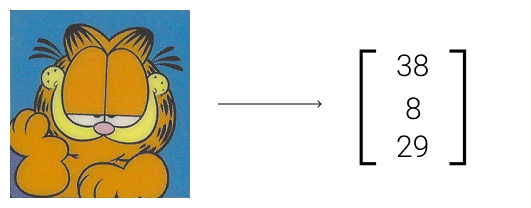

Face embeddings

This is the most important stage of the pipeline. Here we need to ‘digitize’ each face, that is, describe it by a numeric vector (a descriptor, or embedding) so that faces of the same person have similar embeddings (in some sense), while descriptors of different people are far away from each other. There are plenty of ways to obtain embeddings, and the most effective today are, again, based on neural networks.

Garfield’s got a rather simple face embedding

One popular approach is to compare embeddings on a hypersphere using cosine similarity (see [5] for details). Simply googling ‘sphereface pytorch’ and choosing the first link leads to a nice PyTorch implementation of one such model [6]. We only need its sphere20a class and the model’s weights.
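Comparing embeddings on a sphere amounts to L2-normalizing both vectors and taking their dot product, which is exactly the cosine similarity. A minimal pure-Python sketch (a real system would do the same with NumPy arrays or PyTorch tensors):

```python
import math
from typing import List, Sequence


def l2_normalize(v: Sequence[float]) -> List[float]:
    """Project a vector onto the unit hypersphere."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Dot product of the two embeddings after L2 normalization, in [-1, 1]."""
    ua, ub = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(ua, ub))


print(cosine_similarity([1.0, 2.0, 3.0], [2.0, 4.0, 6.0]))  # same direction
print(cosine_similarity([1.0, 0.0], [0.0, 1.0]))            # orthogonal
```

Because both vectors are normalized first, the score ignores the embeddings’ lengths, which leaves the norm free to serve as a separate signal.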

Here come several non-obvious tricks that we use to tackle the problems described in the Problem statement section above. First of all, how do we detect ‘bad faces’? A face can be covered, shot in poor lighting or located so far from the camera that its resolution is low. It turns out that computing the norm of the embedding is enough to separate low-quality images from good ones.

Here is the intuition behind this. The model is trained on high-quality photos of celebrities’ faces, so each embedding can be seen as describing the ‘presence’ of features in the image, where by a feature we mean a vector component. These components may represent eye color, skin tone, etc. The more a feature is present in the image, the higher the value of the corresponding component. But what if there is no face, or it is shot in bad conditions? Then no features are present, every component gets a low value, and the norm of the descriptor is therefore low.
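A minimal sketch of this quality check, assuming the norm is taken on the raw embedding before any L2 normalization (after normalization every norm equals 1 and the signal is gone). `min_norm` is a hypothetical, model-specific value; the article gives no number, so it has to be tuned on your own good and bad photos.

```python
import math
from typing import Sequence


def embedding_norm(embedding: Sequence[float]) -> float:
    """L2 norm of a raw (not yet normalized) embedding."""
    return math.sqrt(sum(x * x for x in embedding))


def is_good_quality(embedding: Sequence[float], min_norm: float) -> bool:
    """Accept a face only if its raw embedding is long enough.

    min_norm is a hypothetical threshold, to be tuned on your own examples
    of clear faces versus covered, dark or tiny ones.
    """
    return embedding_norm(embedding) >= min_norm


# Made-up vectors: a "strong" embedding and a weak one pointing the same way.
print(is_good_quality([3.0, 4.0], min_norm=2.0))
print(is_good_quality([0.3, 0.4], min_norm=2.0))
```

Both made-up vectors point in the same direction, so cosine similarity alone cannot tell them apart; the norm can.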

One more trick we recommend is to sum two descriptors for every face: one for the original image and one for its horizontally flipped version. This improves the accuracy of the system and solves the problem of flipped images.
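The flip trick could look like the sketch below. `toy_embed` is a hypothetical stand-in for your embedding model (for example, a wrapper around sphere20a), and the list-of-rows image type is only for illustration; with NumPy arrays you would use `np.fliplr` instead of `hflip`.

```python
from typing import Callable, List, Sequence

Image = List[List[float]]  # rows of pixel values; a stand-in for a real image array


def hflip(image: Image) -> Image:
    """Mirror an image left to right."""
    return [list(reversed(row)) for row in image]


def flip_augmented_embedding(image: Image,
                             embed: Callable[[Image], Sequence[float]]) -> List[float]:
    """Sum of the embeddings of a face crop and of its mirror image."""
    original = embed(image)
    mirrored = embed(hflip(image))
    return [a + b for a, b in zip(original, mirrored)]


# Hypothetical toy "model" that is not flip-invariant on its own.
def toy_embed(image: Image) -> List[float]:
    return [image[0][0], 2.0 * image[0][-1]]


crop = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
print(flip_augmented_embedding(crop, toy_embed))
print(flip_augmented_embedding(hflip(crop), toy_embed))  # same descriptor
```

This shows why the trick handles mirrored selfies: for a mirrored photo the same two embeddings are added in the opposite order, so the summed descriptor is identical.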

Comparing descriptors

As described earlier, we use cosine similarity to compare two face vectors. This function maps a pair of vectors to the interval [-1, 1], so our last problem is to choose the comparison threshold. The best way to do this is to ask your friends and colleagues to take selfies and photos of their documents (not very easy to do, actually) and use them as a validation set. Here comes a tradeoff between the false acceptance rate (FAR) and the false rejection rate (FRR): the higher the threshold, the lower the FAR but the higher the FRR, and vice versa. The only thing we would like to emphasize here is that the right threshold usually depends on the customer’s needs.

Suppose we have somehow learned that it is acceptable to falsely accept one ‘ID-selfie’ pair out of 1M pairs of different people. Then our task is to minimize FRR@FAR=1e-6, that is, to minimize the FRR at the threshold for which FAR=1e-6.
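Given similarity scores for ‘genuine’ pairs (the same person) and ‘impostor’ pairs (different people) from such a validation set, FAR and FRR at a threshold are simple counts. A minimal sketch; the scores in it are made up purely to show the tradeoff:

```python
from typing import Sequence, Tuple


def far_frr(genuine: Sequence[float], impostor: Sequence[float],
            threshold: float) -> Tuple[float, float]:
    """FAR and FRR at a given similarity threshold.

    genuine:  scores of pairs showing the same person
    impostor: scores of pairs showing different people
    A pair is accepted when its score is >= threshold.
    """
    far = sum(s >= threshold for s in impostor) / len(impostor)  # impostors wrongly accepted
    frr = sum(s < threshold for s in genuine) / len(genuine)     # genuine pairs wrongly rejected
    return far, frr


# Made-up scores: raising the threshold lowers FAR and raises FRR.
genuine = [0.9, 0.8, 0.6, 0.4]
impostor = [0.1, 0.3, 0.5, 0.7]
for t in (0.35, 0.55, 0.75):
    print(t, far_frr(genuine, impostor, t))
```

Sweeping the threshold over a fine grid and plotting both rates makes the tradeoff visible for your own data.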
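The threshold for a target FAR can be read off the sorted impostor scores. This sketch uses the convention that a pair is accepted only if its score is strictly greater than the threshold, and it picks the lowest threshold whose FAR does not exceed the target. Note the sample-size consequence: with n impostor pairs, at most floor(1e-6 * n) of them may be accepted, so estimating FRR@FAR=1e-6 meaningfully requires on the order of a million impostor pairs; with fewer, the threshold simply becomes the highest impostor score.

```python
import math
from typing import Sequence, Tuple


def threshold_at_far(genuine: Sequence[float], impostor: Sequence[float],
                     target_far: float) -> Tuple[float, float]:
    """Return (threshold, FRR) for the lowest threshold whose FAR <= target_far.

    Convention: a pair is accepted only if its score is strictly greater
    than the threshold.
    """
    ranked = sorted(impostor, reverse=True)
    allowed = math.floor(target_far * len(ranked))  # impostor pairs we may accept
    if allowed >= len(ranked):
        threshold = -math.inf                       # every impostor may be accepted
    else:
        # Reject the (allowed + 1)-th highest impostor score and everything below it.
        threshold = ranked[allowed]
    frr = sum(s <= threshold for s in genuine) / len(genuine)
    return threshold, frr


# Made-up scores for illustration only.
genuine = [0.9, 0.8, 0.6, 0.4]
impostor = [0.1, 0.3, 0.5, 0.7]
print(threshold_at_far(genuine, impostor, 0.25))
print(threshold_at_far(genuine, impostor, 1e-6))  # too few impostors: threshold = max impostor score
```

Pairing each ID photo with every other person’s selfie yields n(n-1) impostor pairs from n people, which helps reach the required number of pairs.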

Results

We have briefly discussed the following topics:

- Typical problems in designing a face verification system and how to tackle them.

- Face detection and alignment.

- Face embeddings and their comparison.

- Metrics for assessing ID verification.

Our real dataset was too small to draw any precise conclusions about the system we have built. But this kind of data is not easy (and sometimes not even legal) to collect. So, if you want to use the system just for fun, you can get by with its current performance. For further quality improvement, you may try contacting government agencies to obtain additional face ID datasets.

Conclusion

Summing up, we have described an easy-to-implement face ID verification pipeline built solely from open-source components. What can we do next? The next logical step would be to recognize the text on the ID document so that we could automate registration processes further. There are, obviously, many text recognition methods, and we will go through one of them in the next article.

References

- [1] DocFace

- [2] DocFace+: ID Document to Selfie Matching

- [3] MTCNN

- [4] TensorFlow implementation of MTCNN

- [5] NormFace

- [6] PyTorch implementation of SphereFace

Thank you for reading! Please ask us questions, leave your comments and stay tuned! Find us at https://potehalabs.com

ID verification pipeline with deep learning was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
