
Well, the question has not been fully answered by the AI community yet, but bots can certainly attempt to tell us a story. Let's see how!

As humans, we understand the languages that our parents taught us or that we learned out of our own interest. Computers are no different. If we wish to talk to our computers, we would like them to respond in a language that we can understand. Natural language understanding (NLU) is the task of making a computer understand our language, whereas natural language generation (NLG) is the task of a computer making us understand what it wants to say. More formally, NLG is the natural language processing task of generating natural language from a machine representation system such as a knowledge base or a logical form.

In this blog post, we are going to develop an NLG system from scratch that will be able to recite a story to us after it has seen ample data. If you are short on time and want to get things done faster, check out markovify, a Python module.

This notebook contains all of the code from this experiment.

https://github.com/prakhar21/Text-Generation-Markov-Chains/blob/master/StoryTeller.ipynb

We will be using Markov chains to build this system. This is one of the classical techniques that people have long used for tasks that involve temporal (sequential) information. Today, with the advancements in AI, we have better and much more efficient options, such as recurrent neural networks, for accomplishing such tasks.

What are Markov Chains?

A Markov chain is “a stochastic model describing a sequence of possible events in which the probability of each event depends only on the state attained in the previous event”.

To simplify for our use case, consider each word from all the books as a node in a graph, with a directed edge from one word to another whenever the two occur consecutively. Next, we add a probabilistic weight to each edge. We compute this probability as the number of times a given (word, next word) pair occurs, divided by the total number of pairs that start with the same first word.

Let’s take an example and understand it better.

Sentence 1: I am a boy

Sentence 2: I am a girl

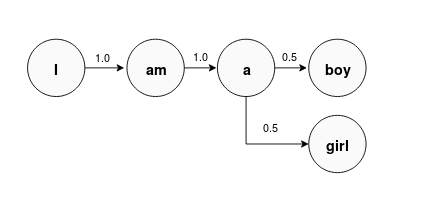

State diagram for example sentences

Since our corpus has just two sentences, its state diagram can be modeled as shown above, where I have treated each word in a sentence as one state. Please note that this is just one way of representing the states. The numbers on the edges are known as transition probabilities, i.e. the probability of transitioning from state A to state B.

For example, we are 100% sure that if we are at the word I, then the next word has to be am. Now look at the word a, which has two outgoing connections: if you are at the word a at any point in your sentence, you move to either girl or boy with equal probability (50% each).
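The transition probabilities for the two example sentences can be computed with a few lines of Python. This is only a minimal sketch, not the code from the notebook: the function name `transition_probabilities` is made up for illustration, and words are split on whitespace without any further cleaning.

```python
from collections import Counter, defaultdict

def transition_probabilities(sentences):
    """Map each word to {next_word: probability}, estimated from pair counts."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        # Count every pair of consecutive words (current -> next).
        for current, nxt in zip(words, words[1:]):
            counts[current][nxt] += 1

    probs = {}
    for word, followers in counts.items():
        total = sum(followers.values())  # all pairs that start with `word`
        probs[word] = {nxt: n / total for nxt, n in followers.items()}
    return probs

corpus = ["I am a boy", "I am a girl"]
probs = transition_probabilities(corpus)
print(probs["I"])  # {'am': 1.0}
print(probs["a"])  # {'boy': 0.5, 'girl': 0.5}
```

The words boy and girl do not appear as keys because nothing follows them in this tiny corpus.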

By now you will have worked out that more data will likely add more outgoing connections from a given word, resulting in different transition probabilities and increasing the exploration capacity of our model, i.e. it can generate more unseen sentences. That is what Markov chains are.
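To see how a chain can produce a sentence it has never seen, here is a rough sketch of generation as a random walk over the graph. The names `build_chain` and `generate` are hypothetical, and the third sentence in the corpus ("You are a girl") is an assumption added only to give the walk more than one path; it is not part of the example above.

```python
import random
from collections import defaultdict

def build_chain(sentences):
    """Map each word to the list of words that follow it (with repeats)."""
    chain = defaultdict(list)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            chain[current].append(nxt)
    return chain

def generate(chain, start, max_words=10, rng=None):
    """Walk the chain from `start` until a dead end or `max_words` is reached."""
    rng = rng or random.Random()
    words = [start]
    while len(words) < max_words and words[-1] in chain:
        # Picking uniformly from a list with repeats samples each follower
        # in proportion to how often it was observed.
        words.append(rng.choice(chain[words[-1]]))
    return " ".join(words)

# Assumed toy corpus: the two example sentences plus one extra sentence.
corpus = ["I am a boy", "I am a girl", "You are a girl"]
chain = build_chain(corpus)
story = generate(chain, "You", rng=random.Random(0))
print(story)
```

Starting from You, the walk can end in either boy or girl, so it may produce "You are a boy", a sentence that is not in the corpus.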

A similar implementation can be seen at Automatic Donald Trump.

Markov Chains Representation

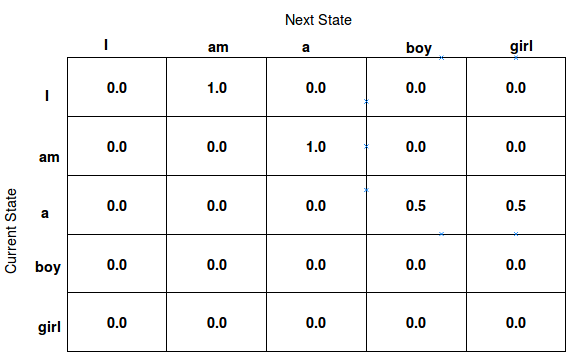

We usually code Markov chains by representing them as a transition table, which is nothing but a 2-D matrix with one row and one column per state. For our example, we would have a matrix with 5 rows and 5 columns.

Transition Table

The number of rows equals the number of columns because we allow for a transition from any state to any other. Every new word that we add to our vocabulary increases both the rows and the columns by 1.
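As a sketch of what such a table could look like in code, the snippet below builds the 5 x 5 matrix for the two example sentences using plain Python lists. The function name `transition_matrix` and the choice to order states by first appearance are assumptions made for illustration; the notebook may organize this differently.

```python
def transition_matrix(sentences):
    """Return (vocab, matrix) where matrix[i][j] = P(vocab[j] follows vocab[i])."""
    vocab = []
    for sentence in sentences:
        for word in sentence.split():
            if word not in vocab:
                vocab.append(word)  # states ordered by first appearance
    index = {word: i for i, word in enumerate(vocab)}

    n = len(vocab)
    counts = [[0] * n for _ in range(n)]
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            counts[index[current]][index[nxt]] += 1

    matrix = []
    for row in counts:
        total = sum(row)
        # Words that never have a successor keep an all-zero row.
        matrix.append([c / total if total else 0.0 for c in row])
    return vocab, matrix

vocab, matrix = transition_matrix(["I am a boy", "I am a girl"])
print(vocab)                     # ['I', 'am', 'a', 'boy', 'girl']
print(matrix[vocab.index("a")])  # [0.0, 0.0, 0.0, 0.5, 0.5]
```

Most entries are zero, which is why larger vocabularies are often stored as nested dictionaries or sparse structures instead of a dense matrix.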

Other Practical Use-cases of Markov Chains:

- Doing weather prediction for the next day.

- Smart Suggestions while typing text.

- Building a ranking algorithm like PageRank.

Feel free to share your thoughts in the comments. Thanks!

Originally published at prakhartechviz.blogspot.com on January 15, 2019.

How Bots Could Become Better Storytellers was originally published in Hacker Noon on Medium.
