
Serverless and AWS Lambda how-to

This is the third part of our three-part story about analytical systems. Part 1 answers questions about client analytics, and Part 2 covers a classical server-side analytics implementation.

In this part, we continue with server-side analytics, focusing on an implementation based entirely on cloud services. In the previous part we listed the main steps of the implementation:

- Data query

- Stream processing

- Database

- Aggregation service

- Front-end

The same applies here, with the only difference that each step is moved to cloud services to the greatest extent possible. We will follow the steps listed above in their original order, describing the available cloud options and the differences between them.

1. Data Query

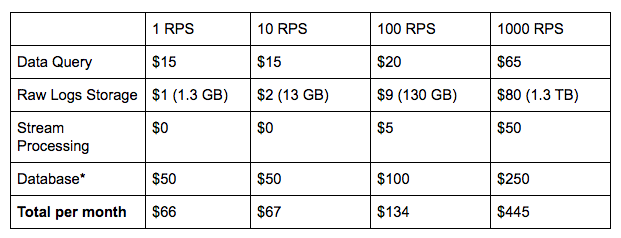

In our work, we generally prefer to use Kinesis. It’s a managed streaming platform developed and operated by Amazon. This solution is much easier to run than Kafka (both its self-hosted and managed options). It supports real-time and batch processing and makes it easy to send data from clients to streams. The biggest benefit of using Kinesis over Kafka is that you don’t have to operate and manage any underlying infrastructure (servers and clusters). Kinesis can be scaled both programmatically and via the web interface. The cost of running a one-shard stream with an ingestion rate of 100 events per second (0.5 KB each) is about $20 a month. That converts to roughly 260M events per month (approx. 130 GB of data).

Possible alternatives: managed Kafka (which is much more difficult to run), RabbitMQ, or any other similar pub/sub queue.
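The monthly volume quoted above can be checked with a few lines of arithmetic. This is only a sketch: it assumes a 30-day month and decimal units (1 GB = 1,000,000 KB), and the function name is ours.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def monthly_volume(events_per_second, event_size_kb, days=30):
    """Return (events per month, gigabytes per month) using decimal units."""
    events = events_per_second * SECONDS_PER_DAY * days
    gigabytes = events * event_size_kb / 1_000_000  # 1 GB = 1,000,000 KB
    return events, gigabytes


events, gigabytes = monthly_volume(100, 0.5)
print(f"{events:,} events, {gigabytes:.1f} GB per month")
```

With 100 events per second this gives about 259 million events and about 130 GB, which matches the rounded figures in the text.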


Raw logs storage

For raw logs storage, the option we prefer is a combination of Amazon Kinesis Firehose and S3.

Firehose is a part of Kinesis that receives events from producers, buffers them for a specified time period (say, 5 minutes) and then sends them to the destination in a batch. S3, in turn, is a highly durable and scalable cloud storage service. It’s worth remembering that the main purpose of storing raw logs is to have a reliable copy in case something goes wrong anywhere in the log transfer process.

We recommend uploading objects to S3 in batches in order to reduce the number of Put operations, because you pay for each of them. This service is fully managed and doesn’t require any administration. The cost for 100 events per second (0.5 KB each) is $9 per month: $5 for Firehose and another $4 for storing 130 GB of data in S3. If you are not going to delete the previous months’ raw logs, the S3 bill will grow by $4 every month. Athena is an additional service that allows running SQL queries against the data stored in S3 buckets.
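To illustrate the buffering idea, here is a minimal sketch of a buffer that releases a batch once it holds enough events or once the oldest event is old enough. It is not the Firehose API; the class name, thresholds and injectable clock are assumptions made for the example.

```python
import time


class BatchBuffer:
    """Collects events and releases them as one batch, by count or by age."""

    def __init__(self, max_events=500, max_age_seconds=300, clock=time.monotonic):
        self.max_events = max_events
        self.max_age_seconds = max_age_seconds
        self.clock = clock  # injectable so the behaviour can be tested
        self.events = []
        self.opened_at = None

    def add(self, event):
        """Add an event; return the batch if a flush was triggered, else None."""
        if not self.events:
            self.opened_at = self.clock()  # the batch window starts here
        self.events.append(event)
        too_many = len(self.events) >= self.max_events
        too_old = self.clock() - self.opened_at >= self.max_age_seconds
        return self.flush() if too_many or too_old else None

    def flush(self):
        """Return everything buffered so far and start a new, empty batch."""
        batch, self.events = self.events, []
        self.opened_at = None
        return batch
```

In this simplified version the age check only runs when a new event arrives; a managed service flushes on a timer as well.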
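The effect of keeping old logs can be sketched with the $5 and $4 figures quoted above. The function name and the `keep_old_logs` flag are ours, and the model ignores request and transfer charges.

```python
FIREHOSE_PER_MONTH = 5    # USD, Firehose estimate from the text
S3_PER_MONTH_OF_LOGS = 4  # USD per retained month of raw logs


def monthly_bill(month_number, keep_old_logs=True):
    """Estimated bill in a given month (1-based) for Firehose plus S3 storage."""
    retained_months = month_number if keep_old_logs else 1
    return FIREHOSE_PER_MONTH + S3_PER_MONTH_OF_LOGS * retained_months


for month in (1, 6, 12):
    print(month, monthly_bill(month))
```

Under these assumptions the bill starts at $9 in the first month and reaches $53 in the twelfth if nothing is deleted.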

2. Stream Processing

To process continuously generated events we need a platform that lets us run custom logic. Kinesis, for example, integrates well with AWS Lambda.

Lambda is a cloud service that runs business logic written in Node.js, Python, Java, C#, or Go without you having to manage servers. In a nutshell, a Lambda is a stateless function that is called in response to a trigger (an HTTP request, a timer or a Kinesis event). Lambda scales up and down automatically based on the number of simultaneously incoming events. You pay only for the time the function is executing: specifically, for the calls and for the running time of the function (for every 100 ms the code is executed). The estimated cost of processing around 100 events per second is $5 a month.

Another possible option is developing your own container-based application using Docker. Docker is a container platform that allows you to deploy code with a predefined environment on any server. It reduces deployment time considerably compared to bare servers and lets you configure auto-scaling using popular orchestrators (Kubernetes, Docker Swarm, Rancher, etc.). Unlike Lambda, containers don’t impose any limits on your system (on languages or external libraries used, duration, memory, etc.). However, containers require much more operation and management effort than Lambda does.
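A Python function triggered by Kinesis might look like the sketch below. Kinesis delivers each record payload base64-encoded under `record["kinesis"]["data"]`; the assumption that producers send JSON and the `process` helper are ours.

```python
import base64
import json


def process(payload):
    """Placeholder business logic (hypothetical): mark the event as seen."""
    return {**payload, "seen": True}


def handler(event, context):
    """Entry point invoked by Lambda with a batch of Kinesis records."""
    processed = []
    for record in event.get("Records", []):
        # Each Kinesis payload arrives base64-encoded; we assume it is JSON.
        raw = base64.b64decode(record["kinesis"]["data"])
        processed.append(process(json.loads(raw)))
    return {"processed": len(processed)}
```

The function keeps no state between calls, so any number of copies can run in parallel on different batches.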

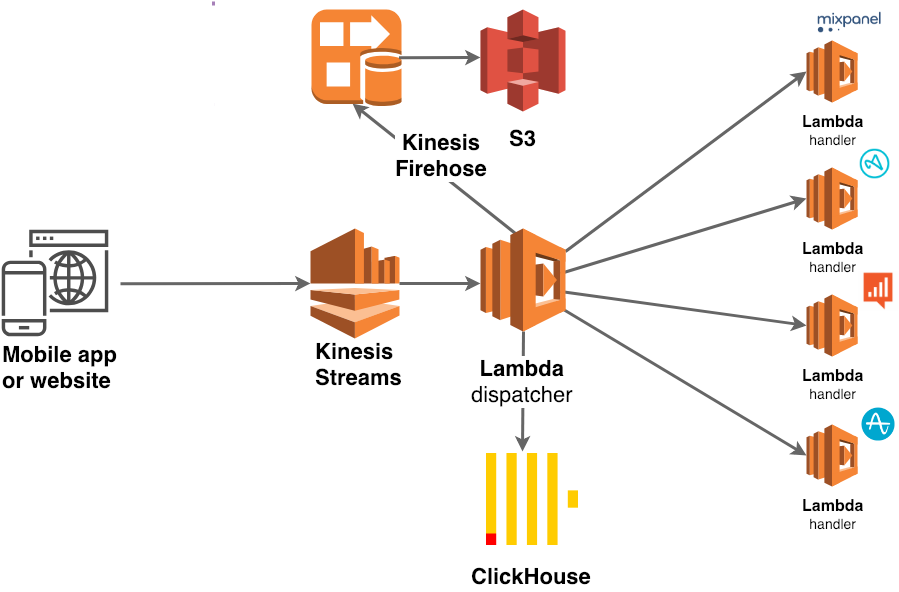

We often use serverless functions as follows:

- We create one central entry point and a central handler surrounded by other microservices and plugins. This gives us a scheme for event configuration and routing.

- For instance, you can create a configuration file that sends a whole set of events to the processing module and metrics calculation, some of them to the aggregations, others to different analytics systems, and so on.

- As a result, each of these workflow parts can be replaced by an AWS Lambda function. Thus, instead of one large Spark Streaming job, you get a set of functions working in parallel. Some of them call others, so the whole system behaves as a directed graph of related functions.
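The configuration-driven routing described in the list above can be sketched as follows. The event names, target names and handler functions are all hypothetical; in a real deployment each handler could be a separate Lambda function rather than a local one.

```python
# Hypothetical routing table: event name -> processing targets.
ROUTES = {
    "purchase": ["metrics", "aggregations", "external_analytics"],
    "screen_view": ["metrics"],
}
DEFAULT_TARGETS = ["metrics"]  # used for events missing from the table


def send_to_metrics(event):
    return f"metrics:{event['name']}"


def send_to_aggregations(event):
    return f"aggregations:{event['name']}"


def send_to_external_analytics(event):
    return f"external_analytics:{event['name']}"


HANDLERS = {
    "metrics": send_to_metrics,
    "aggregations": send_to_aggregations,
    "external_analytics": send_to_external_analytics,
}


def route(event):
    """Central handler: look up the targets for an event and call each one."""
    targets = ROUTES.get(event["name"], DEFAULT_TARGETS)
    return [HANDLERS[target](event) for target in targets]
```

Adding a new destination then means adding one handler and one entry in the routing table, without touching the central handler.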

How does one Lambda function call another? There are two ways: making a direct call or going through an API. To make direct calls we use boto3 (which is bundled with the Lambda Python runtime). The calls can be synchronous or asynchronous, and this way we pay only for the function calls. With an API, on the other hand, a RESTful interface is available. However, the API is a paid option.

An important advantage of serverless is the variety of ways to use it. It can be used to build:
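A direct call with boto3 could look like this sketch. It uses the `invoke` method of the boto3 Lambda client, where `InvocationType="Event"` is asynchronous and `"RequestResponse"` is synchronous. The wrapper name, the function name in the usage note and the optional `client` argument are our assumptions.

```python
import json


def invoke_lambda(function_name, payload, wait_for_result=False, client=None):
    """Call another Lambda function directly, synchronously or asynchronously."""
    if client is None:
        import boto3  # imported lazily so the sketch can be exercised without AWS

        client = boto3.client("lambda")
    response = client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse" if wait_for_result else "Event",
        Payload=json.dumps(payload).encode("utf-8"),
    )
    if wait_for_result:
        # Synchronous call: the response payload is a stream holding the result.
        return json.loads(response["Payload"].read())
    # Asynchronous call: only an acceptance status code is returned.
    return response["StatusCode"]
```

For example, `invoke_lambda("metrics-calculator", {"name": "purchase"})` would queue the event for a hypothetical `metrics-calculator` function and return immediately.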

- WSGI apps (which take only several hours to develop)

- websites

- chatbots

- MapReduce architectures

- on-demand calculations

There are also some limitations you should consider:

- Limited CPU and no GPU access for compute-intensive tasks. This means highly complex computational workloads are a poor fit for Lambda.

- A RAM quota of only 3 GB, which can be exceeded easily.

- Cold starts. If no one has used the app for a long time, the next request triggers a container start, and the request processing time may increase by as much as 10 times. To mitigate this, try the warm-up method: send a test event to the Lambda function every hour so that it stays ‘warm’. However, the cold start issue hasn’t been fully solved yet. We believe one of the cloud providers will solve it in the near future.
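The warm-up method from the last item can be sketched as a handler that returns early when it receives the scheduled test event. The `{"warmup": true}` marker is a convention we made up for the example, and `handle_real_event` is a placeholder.

```python
def handle_real_event(event):
    """Placeholder for the real processing: count the incoming records."""
    return len(event.get("Records", []))


def handler(event, context):
    """Return early on the scheduled warm-up ping, otherwise do the real work."""
    # Hypothetical convention: the scheduled test event carries {"warmup": true}.
    if isinstance(event, dict) and event.get("warmup"):
        return {"warmed": True}
    return {"warmed": False, "result": handle_real_event(event)}
```

Returning early keeps the warm-up calls short, so they add very little to the bill.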

3. Database

For storing processed data we suggest considering the following well-known solutions: Amazon RDS for Postgres and ClickHouse.

AWS RDS is a service that takes over common database administration tasks such as backups, security updates and performance optimization. It also helps with provisioning replicas. It’s not a fully managed database like DynamoDB, but it’s still handier than running Postgres yourself. Postgres is an object-relational database with many great features, but it’s not the best choice for storing and especially querying analytics events.

Luckily, there are columnar (column-oriented) databases that are developed specifically for building analytical systems in production. They are designed to be accessible both to software developers and devops engineers and to data scientists and analysts. One of the most popular columnar databases is Apache HBase. It is optimized specifically for high loads; however, it is hard to deploy and scale and has a tricky API.

Another columnar database is ClickHouse. It was developed by Yandex specifically for analytics services. It’s designed for extremely fast real-time queries with an SQL-like syntax, and it has a variety of useful built-in analytics features. We’ve tested ClickHouse on a dataset of 2 billion entries, and most aggregation queries took under 1 second to execute.

ClickHouse works well on a single machine but can also be deployed to a cluster relatively easily. Running a ClickHouse instance on EC2 for the specified workload (100 events per second) costs from $50 to $120 per month depending on the amount of allocated memory. The price of the instance can be reduced significantly by using a reserved instance, that is, committing to the same instance for a one-year or three-year term. SSD storage is $0.11 per GB per month. You can also run ClickHouse on your own hardware.
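To see why a column-oriented layout suits analytics, compare the same tiny dataset stored by rows and by columns. This is a toy illustration in plain Python with made-up data, not how ClickHouse or HBase store data internally.

```python
# Row-oriented layout: one record per event.
rows = [
    {"user_id": 1, "event": "purchase", "amount": 30},
    {"user_id": 2, "event": "view", "amount": 0},
    {"user_id": 1, "event": "purchase", "amount": 12},
]

# Column-oriented layout of the same data: one list per column.
columns = {key: [row[key] for row in rows] for key in rows[0]}


def total_row_oriented(rows):
    """Every whole record is visited just to read one field."""
    return sum(row["amount"] for row in rows)


def total_column_oriented(columns):
    """Only the 'amount' column is read; the other columns are never touched."""
    return sum(columns["amount"])
```

Both functions return the same total, but the columnar version reads a single contiguous column, which is the access pattern typical aggregation queries rely on.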

4. Aggregation service

Big data analysis

Since at Step 2 we created a common configuration file for routing all of the app events, any analytics system can now be connected easily. For example, a new app release may bring in new events. Suppose analysts would like to look at them through the interface of their analytics system, say, Mixpanel.

To achieve this we add a new component written in one of the languages supported by Lambda: Node.js (JavaScript), Python, Java (compatible with Java 8), C# (.NET Core) or Go. This can be done quickly and simply. Note that all modules should be compiled for Amazon Linux and deployed together with the app, otherwise they may not work as expected.

Below is a scheme of a possible final architecture built from the Amazon services described above, along with several cost estimates.
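Such a component can be very small. The sketch below maps an internal event to an event/properties payload and hands it to a `send` callable. The internal event fields, the token value and the payload layout are assumptions for illustration; check the analytics vendor's documentation for the exact format before relying on it.

```python
import time


def to_mixpanel_payload(event, token):
    """Map an internal event to an event/properties payload (layout assumed)."""
    return {
        "event": event["name"],
        "properties": {
            "token": token,
            "distinct_id": event["user_id"],
            "time": event.get("timestamp", int(time.time())),
            **event.get("params", {}),
        },
    }


def mixpanel_component(event, send, token="HYPOTHETICAL_PROJECT_TOKEN"):
    """New pipeline component: convert the event and pass it to `send`."""
    payload = to_mixpanel_payload(event, token)
    send(payload)  # `send` would wrap the actual HTTP call in production
    return payload
```

Injecting `send` keeps the component independent of any HTTP library and makes it easy to test with a plain list.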

Architecture: scheme showing how the final architecture may look

Costs

*Cost can be reduced 30–50% when using reserved instances (12 or 36 months commitment)
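As a rough cross-check, the per-service estimates quoted in the text can be summed. This is our own arithmetic, not the original cost table; it assumes first-month S3 storage, excludes SSD storage, and applies the reserved-instance discount to the EC2 line only.

```python
# Monthly estimates (USD) quoted in the text for ~100 events per second.
FIXED_COSTS = {"kinesis": 20, "firehose_and_s3": 9, "lambda": 5}
EC2_RANGE = (50, 120)  # ClickHouse instance, depending on allocated memory


def total_range(ec2_discount_percent=0):
    """Return (low, high) monthly totals with a discount applied to EC2 only."""
    fixed = sum(FIXED_COSTS.values())
    low, high = (price * (100 - ec2_discount_percent) / 100 for price in EC2_RANGE)
    return fixed + low, fixed + high


print(total_range())    # on-demand pricing
print(total_range(30))  # with a 30% reserved-instance discount
print(total_range(50))  # with a 50% reserved-instance discount
```

Under these assumptions the monthly total is roughly $84 to $154 on demand, and $59 to $94 with a 50% discount on the instance.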

Conclusion

Analytics solutions for enterprises can be implemented in a variety of ways. The solution we’ve described above is a low-cost and easy-to-deploy variant. This is achieved with a set of Amazon and serverless technologies that allow you to:

- Fine-tune events using lambda functions;

- Significantly decrease and manage costs;

- Scale smoothly;

- Cut the time spent on devops. The team lead can handle administration and deployment personally;

- Release new versions quickly.

Thank you for reading! Please, ask us questions, leave your comments and stay tuned! Find us at https://potehalabs.com

Analytics services. Serverless analytics was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
