
Learn logistic regression with TensorFlow and Keras in this article by Armando Fandango, an inventor of AI-empowered products who leverages expertise in deep learning, machine learning, distributed computing, and computational methods. He has also held thought leadership roles as Chief Data Scientist and Director at startups and large enterprises.

This article will show you how to implement a classification algorithm known as multinomial logistic regression to classify images from the handwritten digits dataset. You'll use both TensorFlow core and Keras to implement this logistic regression algorithm.

Logistic regression with TensorFlow

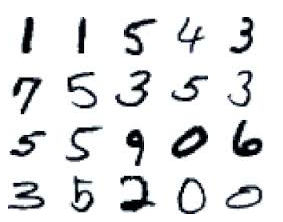

One of the most popular examples regarding multiclass classification is to label the images of handwritten digits. The classes, or labels, in this example are {0,1,2,3,4,5,6,7,8,9}. The dataset that you’ll use is popularly known as MNIST and is available from the following link: http://yann.lecun.com/exdb/mnist/. The MNIST dataset has 60,000 images for training and 10,000 images for testing. The images in the dataset appear as follows:

1. First, import datasetslib, a library from https://github.com/PacktPublishing/TensorFlow-Machine-Learning-Projects:

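DSLIB_HOME = '../datasetslib'
import sys
if DSLIB_HOME not in sys.path:
    sys.path.append(DSLIB_HOME)
# IPython/Jupyter magics: reload edited modules automatically (notebook only)
%reload_ext autoreload
%autoreload 2
# The TensorFlow core code below uses the TensorFlow 1.x API (placeholders, sessions)
import tensorflow as tf
import datasetslib as dslib
from datasetslib.utils import imutil
from datasetslib.utils import nputil
from datasetslib.mnist import MNIST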

2. Set the path to the datasets folder in your home directory, which is where you want all of the datasets to be stored:

import os
datasets_root = os.path.join(os.path.expanduser('~'), 'datasets')

3. Get the MNIST data using datasetslib and print the shapes to ensure that the data is loaded properly:

mnist = MNIST()
x_train, y_train, x_test, y_test = mnist.load_data()
mnist.y_onehot = True
mnist.x_layout = imutil.LAYOUT_NP
x_test = mnist.load_images(x_test)
# Convert the integer test labels to one-hot vectors
y_test = nputil.onehot(y_test)
print('Loaded x and y')
print('Train: x:{}, y:{}'.format(len(x_train), y_train.shape))
print('Test: x:{}, y:{}'.format(x_test.shape, y_test.shape))

4. Define the hyperparameters for training the model:

learning_rate = 0.001
n_epochs = 5
mnist.batch_size = 100
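The training loop in step 10 relies on two datasetslib calls, mnist.reset_index() and mnist.next_batch(), whose internals this article does not show. As a rough sketch only, assuming they walk through the training set in consecutive slices of batch_size examples per epoch, the hypothetical MiniBatcher class below mimics that interface in NumPy; it is an illustration, not the library's code. With 60,000 training images and a batch size of 100, one epoch is 600 batches.

```python
import numpy as np


class MiniBatcher:
    """Hypothetical stand-in for mnist.reset_index() / mnist.next_batch().

    Assumption: each epoch walks a shuffled index array in consecutive
    slices of batch_size examples. The real datasetslib may differ.
    """

    def __init__(self, x, y, batch_size, seed=0):
        self.x, self.y = x, y
        self.batch_size = batch_size
        self.rng = np.random.default_rng(seed)
        self.reset_index()

    def reset_index(self):
        # Start a new epoch: shuffle the example order and rewind the cursor.
        self.order = self.rng.permutation(len(self.x))
        self.pos = 0

    def next_batch(self):
        # Return the next batch_size examples and advance the cursor.
        idx = self.order[self.pos:self.pos + self.batch_size]
        self.pos += self.batch_size
        return self.x[idx], self.y[idx]


# Toy data with the same number of examples as the MNIST training set.
x = np.arange(60000, dtype=np.float32).reshape(-1, 1)
y = np.arange(60000) % 10

batcher = MiniBatcher(x, y, batch_size=100)
n_batches = len(x) // batcher.batch_size  # 60000 // 100 = 600

seen = []
for _ in range(n_batches):
    x_batch, y_batch = batcher.next_batch()
    seen.append(x_batch[:, 0])
seen = np.concatenate(seen)
print(n_batches, len(seen))
```

Shuffling at every reset is one common design choice; it keeps consecutive epochs from presenting the examples in the same order.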

5. Define the placeholders and parameters for your simple model:

# define input images
x = tf.placeholder(dtype=tf.float32, shape=[None, mnist.n_features])
# define output labels
y = tf.placeholder(dtype=tf.float32, shape=[None, mnist.n_classes])
# model parameters
w = tf.Variable(tf.zeros([mnist.n_features, mnist.n_classes]))
b = tf.Variable(tf.zeros([mnist.n_classes]))

6. Define the model with logits and y_hat:

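# logits = xw + b: one score per class for each image
logits = tf.add(tf.matmul(x, w), b)
# softmax turns the logits into class probabilities
y_hat = tf.nn.softmax(logits)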
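Step 6 computes logits = xw + b and passes them through softmax. The NumPy sketch below mirrors that computation for a tiny batch so you can check the shapes and see that every row of y_hat sums to 1. The sizes (4 features, 3 classes) are arbitrary stand-ins for mnist.n_features and mnist.n_classes, and subtracting the row maximum is a standard overflow guard that does not change the result.

```python
import numpy as np


def softmax(logits):
    # Subtract the per-row max for numerical stability; softmax is
    # unchanged when the same constant is added to every logit in a row.
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


n_features, n_classes = 4, 3            # stand-ins for mnist.n_features / n_classes
rng = np.random.default_rng(0)
x = rng.normal(size=(2, n_features))    # a batch of 2 examples
w = np.zeros((n_features, n_classes))   # zero initialization, as in step 5
b = np.zeros(n_classes)

logits = x @ w + b                      # same as tf.add(tf.matmul(x, w), b)
y_hat = softmax(logits)
print(y_hat)  # with all-zero weights, every class gets probability 1/3
```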

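7. Define the loss function, the mean cross-entropy between the one-hot labels and the predicted probabilities:

epsilon = tf.keras.backend.epsilon()
# clip probabilities away from 0 and 1 so that tf.log never returns -inf
y_hat_clipped = tf.clip_by_value(y_hat, epsilon, 1 - epsilon)
y_hat_log = tf.log(y_hat_clipped)
cross_entropy = -tf.reduce_sum(y * y_hat_log, axis=1)
loss_f = tf.reduce_mean(cross_entropy)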
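The loss in step 7 clips the predicted probabilities into [epsilon, 1 - epsilon] so that log(0) never occurs, then averages the per-example cross-entropy -sum(y * log(y_hat)). tf.keras.backend.epsilon() returns 1e-7 by default; the NumPy sketch below hard-codes that value as an assumption and shows that a confidently wrong prediction produces a large but finite loss.

```python
import numpy as np

EPSILON = 1e-7  # assumed to match the default of tf.keras.backend.epsilon()


def mean_cross_entropy(y, y_hat, eps=EPSILON):
    # Clip so that a predicted probability of exactly 0 does not give log(0).
    y_hat_clipped = np.clip(y_hat, eps, 1 - eps)
    # Per-example loss: -sum_k y_k * log(y_hat_k); only the true class contributes.
    cross_entropy = -np.sum(y * np.log(y_hat_clipped), axis=1)
    return cross_entropy.mean()


y = np.array([[1.0, 0.0, 0.0],
              [0.0, 1.0, 0.0]])      # one-hot labels
y_hat = np.array([[0.7, 0.2, 0.1],
                  [0.0, 0.0, 1.0]])  # the second prediction is confidently wrong

loss = mean_cross_entropy(y, y_hat)
print(loss)
```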

8. Define the optimizer function:

optimizer = tf.train.GradientDescentOptimizer
optimizer_f = optimizer(learning_rate=learning_rate).minimize(loss_f)
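GradientDescentOptimizer derives the gradients automatically through minimize(), but for softmax regression with the mean cross-entropy loss they have a simple closed form. For a batch of m examples X (one row per image), one-hot labels Y, predictions Y_hat = softmax(XW + b), and learning rate eta (0.001 here), each update step is as follows, ignoring the clipping from step 7, which only matters when a probability is actually clipped:

```latex
\begin{aligned}
\frac{\partial L}{\partial W} &= \frac{1}{m} X^{\top}\left(\hat{Y} - Y\right), &
\frac{\partial L}{\partial b} &= \frac{1}{m} \sum_{i=1}^{m} \left(\hat{y}_i - y_i\right), \\
W &\leftarrow W - \eta \, \frac{\partial L}{\partial W}, &
b &\leftarrow b - \eta \, \frac{\partial L}{\partial b}.
\end{aligned}
```

Here \hat{y}_i and y_i are the i-th rows of \hat{Y} and Y, so each gradient is the prediction error weighted by the inputs, averaged over the batch.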

9. Define the function to check the accuracy of the trained model:

predictions_check = tf.equal(tf.argmax(y_hat, 1), tf.argmax(y, 1))
accuracy_f = tf.reduce_mean(tf.cast(predictions_check, tf.float32))
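Step 9 counts a prediction as correct when the index of the largest predicted probability matches the index of the 1 in the one-hot label, and then averages those booleans. The same logic in NumPy, on a made-up batch of three examples:

```python
import numpy as np

y = np.array([[0, 1, 0],
              [1, 0, 0],
              [0, 0, 1]], dtype=np.float32)     # one-hot labels
y_hat = np.array([[0.1, 0.8, 0.1],
                  [0.3, 0.6, 0.1],
                  [0.2, 0.2, 0.6]], dtype=np.float32)

# Equivalent of tf.equal(tf.argmax(y_hat, 1), tf.argmax(y, 1))
predictions_check = np.argmax(y_hat, axis=1) == np.argmax(y, axis=1)
# Equivalent of tf.reduce_mean(tf.cast(predictions_check, tf.float32))
accuracy = predictions_check.astype(np.float32).mean()
print(predictions_check, accuracy)  # 2 of 3 predictions are correct
```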

10. Run the training loop for each epoch in a TensorFlow session:

n_batches = int(60000 / mnist.batch_size)
with tf.Session() as tfs:
    tf.global_variables_initializer().run()
    for epoch in range(n_epochs):
        mnist.reset_index()
        for batch in range(n_batches):
            x_batch, y_batch = mnist.next_batch()
            feed_dict = {x: x_batch, y: y_batch}
            batch_loss, _ = tfs.run([loss_f, optimizer_f], feed_dict=feed_dict)
            # print('Batch loss:{}'.format(batch_loss))

11. At the end of each epoch, still inside the epoch loop of the same TensorFlow session, evaluate the model on the test data:

        feed_dict = {x: x_test, y: y_test}
        accuracy_score = tfs.run(accuracy_f, feed_dict=feed_dict)
        print('epoch {0:04d} accuracy={1:.8f}'.format(epoch, accuracy_score))

You’ll get the following output:

epoch 0000 accuracy=0.73280001
epoch 0001 accuracy=0.72869998
epoch 0002 accuracy=0.74550003
epoch 0003 accuracy=0.75260001
epoch 0004 accuracy=0.74299997

There you go. You just trained your very first logistic regression model using TensorFlow for classifying handwritten digit images and got 74.3% accuracy. Now, see how writing the same model in Keras makes this process even easier.
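To see steps 5 through 11 end to end without TensorFlow or the MNIST download, the following sketch trains the same softmax regression model with plain NumPy mini-batch gradient descent, using the closed-form gradients of the mean cross-entropy loss. It runs on a small synthetic dataset of three well-separated Gaussian clusters instead of MNIST, so its accuracy says nothing about MNIST; the helper make_blobs, the learning rate, the epoch count, and the batch size are all arbitrary choices for this toy problem.

```python
import numpy as np


def softmax(logits):
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def make_blobs(n_per_class, centers, rng):
    # Hypothetical synthetic stand-in for MNIST: one Gaussian cluster per class.
    xs, ys = [], []
    for label, center in enumerate(centers):
        xs.append(rng.normal(loc=center, scale=0.5, size=(n_per_class, len(center))))
        ys.append(np.full(n_per_class, label))
    return np.vstack(xs), np.concatenate(ys)


rng = np.random.default_rng(0)
centers = [(0.0, 4.0), (4.0, 0.0), (-4.0, -4.0)]
x_train, labels_train = make_blobs(200, centers, rng)
x_test, labels_test = make_blobs(50, centers, rng)

n_features, n_classes = x_train.shape[1], len(centers)
y_train = np.eye(n_classes)[labels_train]   # one-hot labels

learning_rate, n_epochs, batch_size = 0.1, 20, 32
w = np.zeros((n_features, n_classes))       # zero initialization, as in step 5
b = np.zeros(n_classes)

for epoch in range(n_epochs):
    order = rng.permutation(len(x_train))   # reshuffle every epoch
    for start in range(0, len(x_train), batch_size):
        idx = order[start:start + batch_size]
        x_batch, y_batch = x_train[idx], y_train[idx]
        y_hat = softmax(x_batch @ w + b)
        # Gradient of the mean cross-entropy with respect to the logits.
        grad_logits = (y_hat - y_batch) / len(idx)
        w -= learning_rate * x_batch.T @ grad_logits
        b -= learning_rate * grad_logits.sum(axis=0)

    # Evaluate on held-out data at the end of every epoch, as in step 11.
    predictions = np.argmax(x_test @ w + b, axis=1)
    accuracy = np.mean(predictions == labels_test)
    print('epoch {0:04d} accuracy={1:.8f}'.format(epoch, accuracy))
```

Because the clusters are linearly separable, the accuracy on this toy data should climb close to 1 within a few epochs; on MNIST the same model is limited by how well a single linear layer can separate the ten digit classes.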

Logistic regression with Keras

Keras is a high-level library that is available as part of TensorFlow (as tf.keras). In this section, you will rebuild the model from the previous section using Keras instead of TensorFlow core:

1. Keras expects the data in a different format, so you must first reformat it using datasetslib:

x_train_im = mnist.load_images(x_train)
x_train_im, x_test_im = x_train_im / 255.0, x_test / 255.0

The preceding code loads the training images into memory and then scales both the training and test images by dividing the pixel values by 255, which maps them to the range [0, 1].

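2. Next, build the model:

model = tf.keras.models.Sequential([
    # flatten each image into a single vector of pixels
    tf.keras.layers.Flatten(),
    # one softmax output per digit class: softmax(xW + b)
    tf.keras.layers.Dense(10, activation=tf.nn.softmax)
])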
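Flatten followed by Dense(10, activation=softmax) computes the same function as the TensorFlow core model: Flatten reshapes each image into a vector, and Dense applies softmax(xW + b). The NumPy sketch below traces those shapes for a fake batch of 28 x 28 images scaled to [0, 1] as in step 1; the kernel values are random placeholders, not trained weights.

```python
import numpy as np


def softmax(logits):
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


rng = np.random.default_rng(0)
# Fake batch of 5 grayscale images with integer pixel values 0..255.
images = rng.integers(0, 256, size=(5, 28, 28)).astype(np.float32)
images /= 255.0                          # scale to [0, 1], as in step 1

# Flatten(): (batch, 28, 28) -> (batch, 784)
flat = images.reshape(len(images), -1)

# Dense(10, activation=softmax): softmax(flat @ kernel + bias)
kernel = rng.normal(scale=0.01, size=(784, 10)).astype(np.float32)  # placeholder weights
bias = np.zeros(10, dtype=np.float32)
probs = softmax(flat @ kernel + bias)

print(flat.shape, probs.shape)           # (5, 784) (5, 10)
```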

3. Compile the model with the sgd optimizer. Use sparse categorical cross-entropy as the loss function, because the training labels are integer class indices rather than one-hot vectors, and accuracy as the metric for evaluating the model:

model.compile(optimizer='sgd',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])
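The sparse and non-sparse forms of categorical cross-entropy differ only in the label format: 'sparse_categorical_crossentropy' takes integer class indices, while 'categorical_crossentropy' takes one-hot vectors, and for the same predictions they give the same loss. This is why y_train can stay as integers here, and why the one-hot y_test has to be converted back to integers with an argmax before evaluation. The NumPy sketch below checks both points on made-up numbers.

```python
import numpy as np

labels = np.array([2, 0, 1])                  # integer class labels
y_hat = np.array([[0.1, 0.2, 0.7],
                  [0.6, 0.3, 0.1],
                  [0.2, 0.5, 0.3]])           # predicted probabilities

# One-hot encode, then recover the integers with argmax.
one_hot = np.eye(3)[labels]
recovered = np.argmax(one_hot, axis=1)

# Sparse form: pick out the probability of the true class directly.
sparse_ce = -np.log(y_hat[np.arange(len(labels)), labels]).mean()
# Categorical form: sum over classes against the one-hot target.
categorical_ce = -(one_hot * np.log(y_hat)).sum(axis=1).mean()

print(recovered, sparse_ce, categorical_ce)
```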

4. Train the model for 5 epochs with the training set of images and labels:

model.fit(x_train_im, y_train, epochs=5)

Epoch 1/5
60000/60000 [==============================] - 3s 45us/step - loss: 0.7874 - acc: 0.8095
Epoch 2/5
60000/60000 [==============================] - 3s 42us/step - loss: 0.4585 - acc: 0.8792
Epoch 3/5
60000/60000 [==============================] - 2s 42us/step - loss: 0.4049 - acc: 0.8909
Epoch 4/5
60000/60000 [==============================] - 3s 42us/step - loss: 0.3780 - acc: 0.8965
Epoch 5/5
60000/60000 [==============================] - 3s 42us/step - loss: 0.3610 - acc: 0.9012

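5. Evaluate the model with the test data:

# y_test is one-hot encoded; argmax converts it back to integer labels for the sparse loss
model.evaluate(x_test_im, nputil.argmax(y_test))

You'll get the following evaluation output, where the list holds the test loss and the test accuracy:

10000/10000 [==============================] - 0s 24us/step
[0.33530342621803283, 0.9097]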

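Wow! Using Keras, you can achieve higher accuracy: here, the model reaches approximately 91% on the test set, compared with about 74% for the TensorFlow core version. Since the model itself is the same in both cases, the gain most likely comes from differences in the training setup: the Keras version explicitly scales the pixel values to [0, 1], its 'sgd' optimizer uses a default learning rate of 0.01 (ten times the 0.001 used earlier), and model.fit uses a default batch size of 32, so it performs more parameter updates per epoch than the batch size of 100 used earlier. Keras supplies such sensible defaults so that you can quickly start building models.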
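A quick back-of-the-envelope comparison of how many gradient steps each version takes, assuming the tf.keras defaults (model.fit batch size of 32, 'sgd' learning rate of 0.01) and the hyperparameters from step 4 of the TensorFlow core version:

```python
import math

n_train, n_epochs = 60000, 5

# TensorFlow core version: batch_size = 100, learning_rate = 0.001
core_updates = n_epochs * math.ceil(n_train / 100)
# Keras version: model.fit default batch_size = 32, 'sgd' default learning rate = 0.01
keras_updates = n_epochs * math.ceil(n_train / 32)

print(core_updates, keras_updates)    # 3000 9375
print(keras_updates / core_updates)   # 3.125
```

Over the same five epochs, the Keras run takes about three times as many steps, each ten times larger, which is consistent with it converging further in the same number of epochs.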

If you found this article interesting, you can explore TensorFlow Machine Learning Projects to implement TensorFlow’s offerings such as TensorBoard, TensorFlow.js, TensorFlow Probability, and TensorFlow Lite to build smart automation projects. With the help of TensorFlow Machine Learning Projects, you’ll not only learn how to build advanced projects using different datasets but also be able to tackle common challenges using a range of libraries from the TensorFlow ecosystem.

Logistic Regression with TensorFlow and Keras was originally published in Hacker Noon on Medium.
