Data strategy is about building better cars, not accumulating more oil. Images: Zbynek Burival, Chris Barbalis

When was the last time you bought crude oil?

“Data is the new oil” is by now a common phrase asserting the importance of data as a key resource. But Clive Humby actually coined it in the context of a crucial observation that is often overlooked: most people don’t go around shopping for crude oil. In his words,

Data is just like crude. It’s valuable, but if unrefined it cannot really be used. It has to be changed into gas, plastic, chemicals, etc. to create a valuable entity that drives profitable activity.

Raw data, like a clickstream, a sequence of financial transactions, or a dump of medical records, is very hard to use. Like oil, it needs to be refined and transformed into more readily usable forms, such as clean, organized data tables.

Now, you don’t buy gasoline for its decorative value. You buy it to power the engine in your car, so you can get to where you need to go — and that is what generates value.

Machine Learning and AI are the new combustion engine

Data works in the same manner. What actually generates value is a product. In this post we focus on data products: products that use data to generate value.

Data products need an engine that consumes refined data and powers value creation. This engine can be as straightforward as a simple way to display important aspects of the data, so humans can make more informed decisions. We call this “analytics”. The engine can also be more sophisticated: predictions made by a machine learning model, or a neural network that identifies objects in an image.

Machine learning and AI are the new combustion engine, and data products are the new cars.

Taken together, these components form the data product’s value chain:

Value chain for a data product. Icons by Ale Estrada, Ayub Irawan, BomSymbols, Hadi Davodpour for the Noun Project

Sometimes, parts of this chain can be outsourced. For example, many companies successfully sell ‘analytics’ or ‘insights’. These are essentially data refineries: their product is refined data, or sometimes even the engine. Other products then use them to generate value in the market. The business model and strategy of a data refinery are very different from those of data products, which are my focus here.

Product/Data Fit: Data strategy for data products

This post focuses on data strategy for data products and how to find product/data fit. It’s all about figuring out how the pieces in this chain fit together to optimize value creation.

This process is anchored by understanding how the product uses data to create business value. This guides you as you go up and down the chain to answer questions like:

- What are the most efficient engines to optimize value creation?

- How much and what type of refined data do the engines need?

- How do you generate (or acquire) and then refine the raw data?

One way to think about these questions is by understanding the return on investment (ROI) on the engine. For simplicity, I’ll focus on the case where the engine is a machine learning model.

The investment in the model includes the cost, in time and dollars, of acquiring and storing the data. It also includes the time and cost of refining the data and training the model.

The return on the model depends on two components:

- The accuracy of the model

- The business value generated from a correct prediction (in dollars, clicks, or another quantifiable metric), and the business cost of a wrong or inaccurate prediction
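The ROI framing above can be made concrete with a few lines of arithmetic. This is a minimal sketch, not a method from the original post: it assumes every prediction is simply right or wrong, that `value_correct` and `cost_wrong` are measured in the same unit (for example dollars), and that the investment is a single up-front number. The function name and all figures are hypothetical.

```python
def model_roi(accuracy: float, value_correct: float, cost_wrong: float,
              n_predictions: int, investment: float) -> float:
    """ROI of a model: (expected return - investment) / investment.

    Assumes each prediction is correct with probability `accuracy`, earning
    `value_correct`, and wrong otherwise, costing `cost_wrong`.
    """
    if investment <= 0:
        raise ValueError("investment must be positive")
    expected_per_prediction = accuracy * value_correct - (1 - accuracy) * cost_wrong
    expected_return = n_predictions * expected_per_prediction
    return (expected_return - investment) / investment


# Hypothetical numbers: a more accurate model answering a less valuable question...
roi_a = model_roi(accuracy=0.9, value_correct=1.0, cost_wrong=0.5,
                  n_predictions=10_000, investment=5_000)
# ...versus a less accurate model whose correct predictions are worth twice as much.
roi_b = model_roi(accuracy=0.8, value_correct=2.0, cost_wrong=0.5,
                  n_predictions=10_000, investment=5_000)
print(f"{roi_a:.2f} {roi_b:.2f}")
```

With these made-up inputs the second model's ROI (about 2.0) beats the first (about 0.7), which illustrates how a more valuable question can make up for a less accurate model.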

Data strategy is about building better cars

The key to data strategy is: focus on increasing the return, not increasing the investment. This sounds obvious, but is often lost in the hype around data and AI.

Some people focus exclusively on the amount of data. They are the ones who always complain that “We need more data!” or brag about how “We are generating so much data!”.

But these phrases are often markers of a poor data strategy. They emphasize the investment instead of the return. The real goal is to build better cars with more efficient engines, not to accumulate more crude oil.

Another common distraction is to focus too much on the engine. You wouldn’t use a jet engine to power a scooter. Likewise, for most early-stage data products, sophisticated machine learning and AI are overkill. 99% of the time, it’s better to invest in figuring out how your product generates value in the market than in tinkering with the inner workings of a neural net.

Match the engine to your data

How do you increase the returns from your model? One way is to improve model accuracy. But that will also increase the investment: you will need more data or more efficient methods. So the key here is to keep the ROI positive by matching the engine with the amount of data that you have.

One example is the evolution of a recommender system:

- Start by recommending the most popular items to all users. This doesn’t require user-level data, and the recommendation is based on simple summary statistics, so the investment is very small.

- As you collect more granular data you can make suggestions like “users who bought X also bought Y”. This requires enough data for each user, but the methods are still very simple.

- A mature recommendation engine will take into account the full shopping history of a user in addition to other features of users and items, often using a method called collaborative filtering.

As the amount of data increases, the engine graduates from simple summary statistics to full-blown machine learning. The model gets more and more accurate, but you never invest more than is appropriate for the amount of data that you have at each stage.
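The first two stages of this evolution need nothing more than counting. The sketch below is a hypothetical illustration, not a production recommender: each user's purchase history is assumed to be a set of item names, and "also bought" is plain co-occurrence counting with no adjustment for item popularity. Items with equal counts come back in no guaranteed order. The third stage, collaborative filtering, is not sketched here.

```python
from collections import Counter


def most_popular(histories: list[set[str]], k: int = 3) -> list[str]:
    """Stage 1: recommend the same k best-selling items to every user."""
    counts = Counter(item for history in histories for item in history)
    return [item for item, _ in counts.most_common(k)]


def also_bought(histories: list[set[str]], item: str, k: int = 3) -> list[str]:
    """Stage 2: 'users who bought X also bought Y', by co-occurrence counts."""
    co_counts = Counter()
    for history in histories:
        if item in history:
            co_counts.update(other for other in history if other != item)
    return [other for other, _ in co_counts.most_common(k)]


# Hypothetical purchase histories, one set per user.
histories = [
    {"tent", "stove", "lamp"},
    {"tent", "stove"},
    {"tent", "boots"},
    {"stove", "lamp"},
    {"tent"},
]
print(most_popular(histories))         # ['tent', 'stove', 'lamp']
print(also_bought(histories, "tent"))  # 'stove' first; 'lamp' and 'boots' tie
```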

Data is subject to the law of diminishing returns…

Eventually, it gets hard to scale the ROI of a model on accuracy alone. The reason is that data is subject to diminishing returns.

Suppose you want to predict the results of an election in a state with 1,000,000 voters who are choosing between two candidates, Daisy and Minnie. You survey 200 random voters and 53% of them say they are voting for Daisy. It turns out that you can be about 80% sure that Daisy will in fact win. But if you want to be 90% sure (assuming the share for Daisy in your sample stays at 53%), you’ll need more than double that, about 450 voters. To get to 95% you’ll need about 750, and for 99%, another 750 on top of that, about 1,500 in total.
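These poll numbers can be reproduced with a normal approximation to the sample proportion. This sketch is an assumption about how such figures are derived, not the author's own calculation: it treats the question as one-sided (is Daisy's true share above 50%?), assumes the sample share stays at 53% as the sample grows, and ignores the finite-population correction, which is negligible for 1,000,000 voters. The function names are hypothetical; only Python's standard `statistics.NormalDist` is used.

```python
from math import ceil, sqrt
from statistics import NormalDist


def confidence_leader_wins(share: float, n: int) -> float:
    """P(true share > 50%) given a sample share from n random voters (normal approx.)."""
    std_err = sqrt(share * (1 - share) / n)
    return NormalDist().cdf((share - 0.5) / std_err)


def sample_size_needed(share: float, confidence: float) -> int:
    """Smallest n reaching `confidence`, assuming the sample share stays at `share`."""
    z = NormalDist().inv_cdf(confidence)
    return ceil((z * sqrt(share * (1 - share)) / (share - 0.5)) ** 2)


print(round(confidence_leader_wins(0.53, 200), 2))
for target in (0.90, 0.95, 0.99):
    print(target, sample_size_needed(0.53, target))
```

Under these assumptions the first line prints 0.8, and the required sample sizes come out at roughly 455, 750 and 1,500, in line with the rounded figures in the text. Because the required sample grows with the square of the z-score, each extra step of certainty costs more voters than the last.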

Real election polls are obviously much more complex, and so is any realistic data problem you are likely to run into. But the principle remains the same: as you try to make your predictions more and more certain, each additional gain costs more data than the last. Going from 80% to 90% certainty takes about 250 extra voters, while going from 95% to 99% takes another 750.

… as are machine learning and AI

Can you solve this problem by using a more powerful engine, like deep learning? Not so much. Sophisticated methods typically require much larger amounts of data, and they are also subject to diminishing returns.

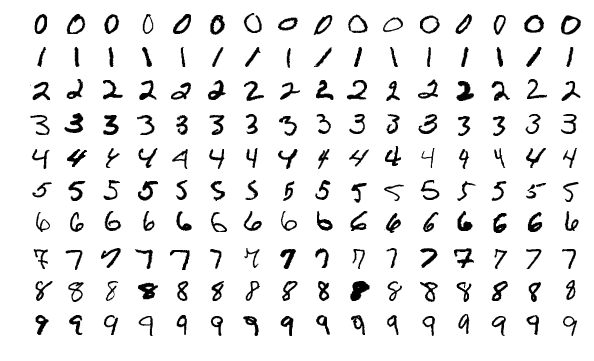

Sample images from MNIST dataset. Source: https://en.wikipedia.org/wiki/MNIST_database

MNIST is a dataset consisting of images of handwritten digits. It is widely used as a toy dataset in image recognition, where the goal is to correctly identify the digit in each image.

One of the simplest algorithms you could use for this purpose is multinomial logistic regression. Despite its simplicity, it correctly identifies about 92.5% of the digits. A simple neural net is a reasonable next step, and it can quickly get you to about 99.3% accuracy. That is very impressive, but note that it is only about 7 percentage points better than a much simpler model. Further improvements are even harder to achieve: a state-of-the-art deep learning model, using methods fresh out of research, can improve accuracy by only about another 0.5 percentage points.

MNIST is a toy example. Any realistic problem is going to be much more difficult and you should expect lower accuracy from the models. Sometimes, improving performance by 0.1% makes a big difference, and it makes sense to use the really sophisticated stuff. Regardless, both data and methods are subject to very strong diminishing returns.

Ask more valuable questions

Because of the diminishing returns on data, eventually it gets difficult to increase the ROI of a machine learning model just by improving its accuracy. How else can you do that?

Accuracy is just one part of the return on data. The other is the business value of a prediction. One way of thinking about it is to imagine that your model is 100% accurate. What would be the impact on your business? This is entirely about the question that the model is addressing, not the quality of the solution. So the way to increase the ROI is to ask more valuable questions.

Here is an example. Daisy is running for office, and she is sending volunteers to knock on doors and increase turnout. However, the number of volunteers is limited, so she wants to build a model to target only the voters who are likely to vote for her and not for her opponent Minnie. This is called “response modeling”.

There is a more valuable model that Daisy could build: predict which voters will vote for her if visited by a volunteer, but would stay home otherwise. Voters who are predicted to vote even if they are not visited won’t be targeted, so the volunteers only visit those voters where they make a difference. This is called “uplift modeling”.

Accurate uplift modeling requires much more data than traditional response modeling. So if Daisy doesn’t have enough data, she should start by building response models and improve them as data accumulates. But eventually she should shift to uplift models, even if they are less accurate than the response models, because overall they are more valuable.

Achieving product/data fit

Let’s summarize how you can improve the ROI on your model:

- By increasing the accuracy of the model, while ensuring that the investment in the engine matches the amount of data

- By increasing the business value of a prediction, especially if it can make up for a less accurate model

This is how you find product/data fit: iterate to simultaneously increase the value of your data, your models, and the questions they are tackling.

Let’s see how it plays out in a more realistic situation. Many healthcare startups experiment with clinical decision support (CDS) systems, products that are intended to assist clinicians in making complex decisions in a data-driven manner.

Some CDS products focus on providing treatment suggestions, but they often encounter challenges with market adoption. One reason is that a single wrong suggestion can critically undermine the trust in the system. In terms of the ROI on the model, the cost of a wrong suggestion is exceedingly high. This means that the models making the suggestions must be extremely accurate, which in turn requires very high investment. It is probably better to defer the building of a suggestion engine until the company has secured access to enough data, as well as the trust of the users.
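One way to see why CDS suggestions demand such accuracy is to ask at what accuracy a suggestion merely breaks even. Under the simplifying assumption used in the ROI discussion (each suggestion is either right, worth `value_correct`, or wrong, costing `cost_wrong`), the expected value per suggestion is zero when accuracy equals cost_wrong / (value_correct + cost_wrong). The function name and the example costs below are hypothetical.

```python
def break_even_accuracy(value_correct: float, cost_wrong: float) -> float:
    """Accuracy at which the expected value per prediction is zero.

    Solves accuracy * value_correct - (1 - accuracy) * cost_wrong = 0.
    """
    if value_correct <= 0 or cost_wrong < 0:
        raise ValueError("value_correct must be positive and cost_wrong non-negative")
    return cost_wrong / (value_correct + cost_wrong)


# Hypothetical: a wrong suggestion costs as much as a right one earns...
print(break_even_accuracy(value_correct=1.0, cost_wrong=1.0))   # 0.5
# ...versus one where a single wrong suggestion costs 99 times as much.
print(break_even_accuracy(value_correct=1.0, cost_wrong=99.0))  # 0.99
```

Counting lost user trust as part of `cost_wrong` only pushes the threshold higher, which is why such engines call for very large investments in data.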

A successful strategy for building a CDS will focus first on the areas where accuracy is less critical. One way of doing that is by showing the data in a way that makes intuitive sense and provides useful insights to the clinician. This is a very common theme in data product development, and I will expand on it in a future post.

Bottom Line

- Data strategy is about building better products, not accumulating more data or using more sophisticated methods.

- To do that, you must understand how your product uses data to generate value, and focus on increasing the ROI on your models.

- One way to do that is by improving the accuracy of the models, but you will quickly run into diminishing returns.

- Another way is by finding more valuable questions that your data can answer, which can result in better ROI even with less accurate models.

- This is how you find product/data fit: iterate to simultaneously increase the value of your data, your models, and the questions they are tackling.

The Product/Data Fit Strategy was originally published in Hacker Noon on Medium.
