
Learn how to generate lyrics using a deep (multi-layer) LSTM in this article by Matthew Lamons, founder and CEO of Skejul, the AI platform that helps people manage their activities, and Rahul Kumar, an AI scientist, deep learning practitioner, and independent researcher.

This article shows you how to create a deep LSTM model suited to the task of generating music lyrics. Your goal is to build and train a model that outputs entirely new and original lyrics in the style of an arbitrary number of artists. You can refer to the code at Lyrics-ai (https://github.com/PacktPublishing/Python-Deep-Learning-Projects/tree/master/Chapter06/Lyrics-ai) for this exercise.

Data pre-processing

To build a model that can generate lyrics, you’ll need a large amount of lyric data, which can be extracted from various sources. You can find the code files at https://github.com/PacktPublishing/Python-Deep-Learning-Projects/tree/master/Chapter06/Lyrics-ai. They include a text file called lyrics_data.txt, which contains lyrics from around 10,000 songs.

Now that you have your data, convert the raw text into its one-hot encoded form:

import sys
import codecs
import numpy as np

# Class to perform all preprocessing operations
class Preprocessing:
    vocabulary = {}
    binary_vocabulary = {}
    char_lookup = {}
    size = 0
    separator = '->'

    # Take the data file, build the character vocabulary, and create the one-hot encodings
    def generate(self, input_file_path):
        input_file = codecs.open(input_file_path, 'r', 'utf_8')
        index = 0
        for line in input_file:
            for char in line:
                if char not in self.vocabulary:
                    self.vocabulary[char] = index
                    self.char_lookup[index] = char
                    index += 1
        input_file.close()
        self.set_vocabulary_size()
        self.create_binary_representation()

    # Load a previously dumped vocab file into memory
    def retrieve(self, input_file_path):
        input_file = codecs.open(input_file_path, 'r', 'utf_8')
        buffer = ""
        for line in input_file:
            try:
                separator_position = len(buffer) + line.index(self.separator)
                buffer += line
                key = buffer[:separator_position]
                value = buffer[separator_position + len(self.separator):]
                value = np.fromstring(value, sep=',')
                self.binary_vocabulary[key] = value
                self.vocabulary[key] = np.where(value == 1)[0][0]
                self.char_lookup[np.where(value == 1)[0][0]] = key
                buffer = ""
            except ValueError:
                # No separator on this line: the entry continues on the next line
                buffer += line
        input_file.close()
        self.set_vocabulary_size()

    # Below are some helper functions to perform pre-processing.
    def create_binary_representation(self):
        for key, value in self.vocabulary.items():
            binary = np.zeros(self.size)
            binary[value] = 1
            self.binary_vocabulary[key] = binary

    def set_vocabulary_size(self):
        self.size = len(self.vocabulary)
        print("Vocabulary size: {}".format(self.size))

    def get_serialized_binary_representation(self):
        string = ""
        # Print full arrays instead of abbreviating long ones with "..."
        np.set_printoptions(threshold=sys.maxsize)
        for key, value in self.binary_vocabulary.items():
            array_as_string = np.array2string(value, separator=',', max_line_width=self.size * self.size)
            # Strip the surrounding brackets before writing the entry
            string += "{}{}{}\n".format(key, self.separator, array_as_string[1:-1])
        return string

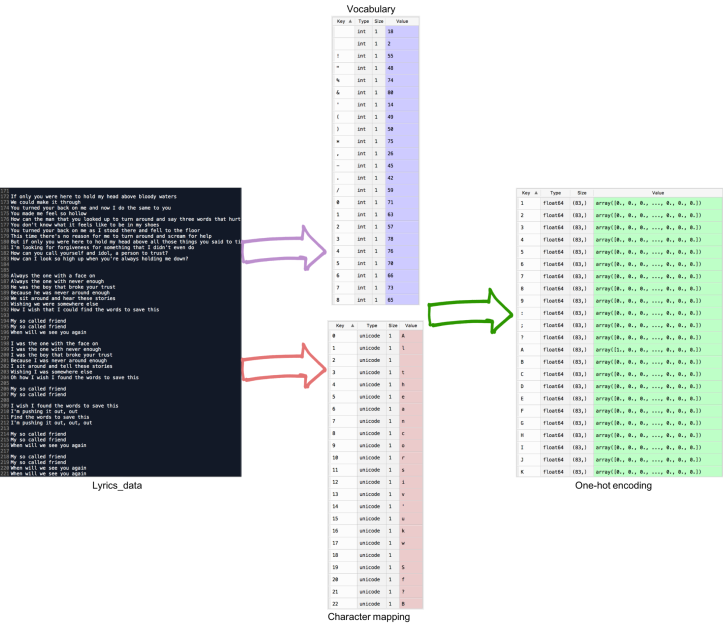

The overall objective of the pre-processing module is to convert the raw text data into one-hot encoding, as shown in the following diagram:

This figure represents the data preprocessing part. The raw lyrics data is used to build the vocabulary mapping which is further been transformed into the on-hot encoding. After the successful execution of the pre-processing module, a binary file will be dumped as {dataset_filename}.vocab. This vocab file is one of the mandatory files that need to be fed into the model during the training process, along with the dataset.
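The vocabulary mapping and one-hot steps described above can be tried in isolation on a tiny string. The following is a minimal sketch, not the article's Preprocessing class: the helper names (build_vocabulary, one_hot, serialize) are hypothetical, and the serialized format is a simplified version of the `char->values` layout used in the vocab file.

```python
import numpy as np

def build_vocabulary(text):
    """Map each distinct character to an index, in order of first appearance."""
    vocabulary = {}
    for char in text:
        if char not in vocabulary:
            vocabulary[char] = len(vocabulary)
    return vocabulary

def one_hot(index, size):
    """Return a vector of zeros with a single 1 at the given index."""
    vector = np.zeros(size)
    vector[index] = 1
    return vector

def serialize(vocabulary, separator="->"):
    """Produce one 'char->v0,v1,...' entry per character (simplified format)."""
    lines = []
    for char, index in vocabulary.items():
        values = ",".join(str(int(v)) for v in one_hot(index, len(vocabulary)))
        lines.append("{}{}{}".format(char, separator, values))
    return "\n".join(lines)

vocabulary = build_vocabulary("la la")
print(vocabulary)  # {'l': 0, 'a': 1, ' ': 2}
print(serialize(vocabulary))
```

Each character ends up with a vector whose length equals the vocabulary size, which is why the vocabulary size also fixes the input and output width of the model.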

Defining the model

Instead of using a high-level Keras model, this article uses TensorFlow to write each layer from scratch. TensorFlow gives you finer-grained control over your model’s architecture. For this model, use the code in the following block to create two placeholders that will store the input and output values:

import tensorflow as tf
import pickle
from tensorflow.contrib import rnn

def build(self, input_number, sequence_length, layers_number, units_number, output_number):
    self.x = tf.placeholder("float", [None, sequence_length, input_number])
    self.y = tf.placeholder("float", [None, output_number])
    self.sequence_length = sequence_length

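Next, create the variables that store the output layer’s weights and biases, and rearrange the input into a list of per-time-step tensors:

    self.weights = {
        'out': tf.Variable(tf.random_normal([units_number, output_number]))
    }
    self.biases = {
        'out': tf.Variable(tf.random_normal([output_number]))
    }

    # Turn [batch, sequence_length, input_number] into a list of
    # sequence_length tensors, each of shape [batch, input_number]
    x = tf.transpose(self.x, [1, 0, 2])
    x = tf.reshape(x, [-1, input_number])
    x = tf.split(x, sequence_length, 0)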
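The transpose, reshape, and split sequence is easier to follow when you watch the shapes change. The sketch below reproduces the three steps with the equivalent NumPy calls on a small dummy batch; the sizes are arbitrary values chosen for illustration.

```python
import numpy as np

batch_size, sequence_length, input_number = 2, 3, 4

# A dummy batch shaped [batch_size, sequence_length, input_number]
x = np.arange(batch_size * sequence_length * input_number, dtype=np.float32)
x = x.reshape(batch_size, sequence_length, input_number)

# Step 1: put the time axis first -> [sequence_length, batch_size, input_number]
steps = np.transpose(x, [1, 0, 2])
# Step 2: flatten time and batch -> [sequence_length * batch_size, input_number]
steps = np.reshape(steps, [-1, input_number])
# Step 3: cut into sequence_length pieces, each [batch_size, input_number]
steps = np.split(steps, sequence_length, 0)

print(len(steps), steps[0].shape)  # 3 (2, 4)
```

Each element of the resulting list holds one time step for every sample in the batch, which is the input format a statically unrolled RNN expects.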

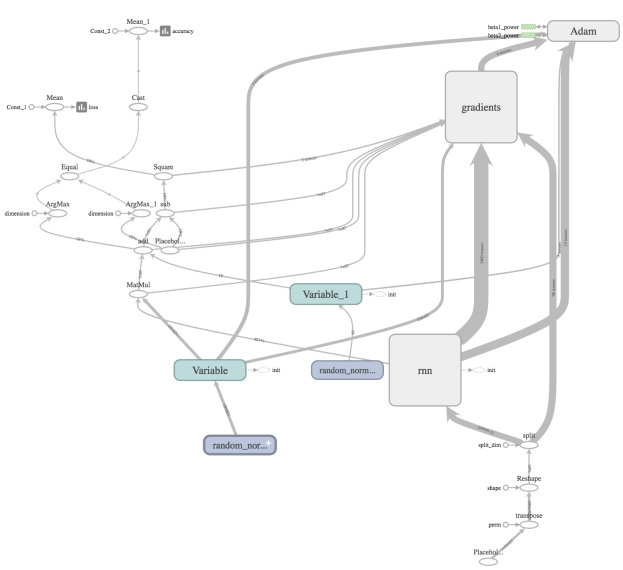

You can build this model using multiple LSTM layers, with the basic LSTM cells assigning each layer with the specified number of cells, as shown in the following diagram:

Tensorboard visualization of the LSTM architecture

Tensorboard visualization of the LSTM architecture

The following is the code for this:

    lstm_layers = []
    for i in range(0, layers_number):
        lstm_layer = rnn.BasicLSTMCell(units_number)
        lstm_layers.append(lstm_layer)

    deep_lstm = rnn.MultiRNNCell(lstm_layers)
    self.outputs, states = rnn.static_rnn(deep_lstm, x, dtype=tf.float32)

    print("Build model with input_number: {}, sequence_length: {}, layers_number: {}, "
          "units_number: {}, output_number: {}".format(input_number, sequence_length, layers_number,
                                                       units_number, output_number))

    # Dump the model configuration
    self.save(input_number, sequence_length, layers_number, units_number, output_number)
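To make the stacking concrete, here is a rough NumPy sketch of what a multi-layer LSTM computes at each time step. It follows the standard LSTM equations; the function names (lstm_step, deep_lstm_forward), the gate ordering, and the forget bias of 1.0 are assumptions made for illustration, and this is not TensorFlow's implementation.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h, c, W, b, forget_bias=1.0):
    """One step of a basic LSTM cell for a batch of inputs.

    W has shape [input_size + units, 4 * units]; b has shape [4 * units].
    """
    gates = np.concatenate([x, h], axis=1) @ W + b
    # Input gate, candidate values, forget gate, output gate
    i, j, f, o = np.split(gates, 4, axis=1)
    new_c = c * sigmoid(f + forget_bias) + sigmoid(i) * np.tanh(j)
    new_h = np.tanh(new_c) * sigmoid(o)
    return new_h, new_c

def deep_lstm_forward(steps, layers, units):
    """Run per-step inputs through stacked LSTM layers; return the last output."""
    batch_size = steps[0].shape[0]
    states = [(np.zeros((batch_size, units)), np.zeros((batch_size, units)))
              for _ in layers]
    output = None
    for x in steps:
        layer_input = x
        for n, (W, b) in enumerate(layers):
            h, c = lstm_step(layer_input, states[n][0], states[n][1], W, b)
            states[n] = (h, c)
            layer_input = h  # the output of this layer feeds the next one
        output = layer_input
    return output

rng = np.random.RandomState(0)
input_number, units_number, layers_number = 5, 8, 2
sequence_length, batch_size = 3, 2

layers = []
for n in range(layers_number):
    in_size = input_number if n == 0 else units_number
    layers.append((rng.normal(size=(in_size + units_number, 4 * units_number)),
                   np.zeros(4 * units_number)))

steps = [rng.normal(size=(batch_size, input_number)) for _ in range(sequence_length)]
last_output = deep_lstm_forward(steps, layers, units_number)
print(last_output.shape)  # (2, 8)
```

Only the first layer sees the one-hot input; every later layer takes the hidden output of the layer below it, so its weight matrix is sized for units_number inputs rather than input_number.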

Training the deep TensorFlow-based LSTM model

Now that you have the mandatory inputs (the dataset file path, the vocab file path, and the model name), you can start the training process. Define all the hyperparameters for the model:

import os
import argparse
from modules.Model import *
from modules.Batch import *

def main():
    parser = argparse.ArgumentParser()
    parser.add_argument('--training_file', type=str, required=True)
    parser.add_argument('--vocabulary_file', type=str, required=True)
    parser.add_argument('--model_name', type=str, required=True)
    parser.add_argument('--epoch', type=int, default=200)
    parser.add_argument('--batch_size', type=int, default=50)
    parser.add_argument('--sequence_length', type=int, default=50)
    parser.add_argument('--log_frequency', type=int, default=100)
    # The learning rate is fractional, so it must be parsed as a float
    parser.add_argument('--learning_rate', type=float, default=0.002)
    parser.add_argument('--units_number', type=int, default=128)
    parser.add_argument('--layers_number', type=int, default=2)
    args = parser.parse_args()

Since the model is trained in batches, divide the dataset into batches of a defined batch_size using the Batch module:

    batch = Batch(training_file, vocabulary_file, batch_size, sequence_length)

Each batch will return two arrays. One will be the input vector of the input sequence, which will have a shape of [batch_size, sequence_length, vocab_size], and the other array will hold the label vector, which will have a shape of [batch_size, vocab_size].
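The two shapes can be checked with a small stand-in for the batching step. The article does not show how the Batch module builds its batches, so the make_batch helper below and its sliding-window scheme (each window of sequence_length characters is labeled with the character that follows it) are assumptions used only to illustrate the shapes.

```python
import numpy as np

def make_batch(text, vocabulary, batch_size, sequence_length):
    """Build one batch of (input windows, next-character labels) from text."""
    vocab_size = len(vocabulary)
    batch_x = np.zeros((batch_size, sequence_length, vocab_size))
    batch_y = np.zeros((batch_size, vocab_size))
    for n in range(batch_size):
        window = text[n:n + sequence_length]
        target = text[n + sequence_length]
        for t, char in enumerate(window):
            batch_x[n, t, vocabulary[char]] = 1  # one-hot input character
        batch_y[n, vocabulary[target]] = 1       # one-hot label character
    return batch_x, batch_y

text = "oh oh oh yeah"
vocabulary = {char: index for index, char in enumerate(sorted(set(text)))}
batch_x, batch_y = make_batch(text, vocabulary, batch_size=4, sequence_length=5)
print(batch_x.shape, batch_y.shape)  # (4, 5, 6) (4, 6)
```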

Now, initialize your model and create the optimizer function. This model uses the Adam optimizer. Then, train your model and perform the optimization over each batch:

    # Building model instance and classifier
    model = Model(model_name)
    model.build(input_number, sequence_length, layers_number, units_number, classes_number)
    classifier = model.get_classifier()

    # Building cost functions
    cost = tf.reduce_mean(tf.square(classifier - model.y))
    optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate).minimize(cost)

    # Computing the accuracy metrics
    expected_prediction = tf.equal(tf.argmax(classifier, 1), tf.argmax(model.y, 1))
    accuracy = tf.reduce_mean(tf.cast(expected_prediction, tf.float32))

    # Preparing logs for Tensorboard
    loss_summary = tf.summary.scalar("loss", cost)
    acc_summary = tf.summary.scalar("accuracy", accuracy)
    train_summary_op = tf.summary.merge_all()
    out_dir = "{}/{}".format(model_name, model_name)
    train_summary_dir = os.path.join(out_dir, "summaries")

    # Initializing the session and executing the training
    init = tf.global_variables_initializer()
    with tf.Session() as sess:
        sess.run(init)
        iteration = 0
        while batch.dataset_full_passes < epoch:
            iteration += 1
            batch_x, batch_y = batch.get_next_batch()
            batch_x = batch_x.reshape((batch_size, sequence_length, input_number))
            sess.run(optimizer, feed_dict={model.x: batch_x, model.y: batch_y})
            if iteration % log_frequency == 0:
                acc = sess.run(accuracy, feed_dict={model.x: batch_x, model.y: batch_y})
                loss = sess.run(cost, feed_dict={model.x: batch_x, model.y: batch_y})
                print("Iteration {}, batch loss: {:.6f}, training accuracy: {:.5f}".format(
                    iteration * batch_size, loss, acc))
    batch.clean()
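The cost and accuracy expressions used in the training code are a mean squared error and an argmax comparison. The following sketch evaluates the same two formulas with NumPy on a few made-up predictions so you can verify the arithmetic by hand; the numbers are hypothetical and do not come from the trained model.

```python
import numpy as np

# Hypothetical classifier outputs for 3 samples over a 4-character vocabulary
predictions = np.array([[0.1, 0.7, 0.1, 0.1],
                        [0.6, 0.2, 0.1, 0.1],
                        [0.2, 0.2, 0.5, 0.1]])
# One-hot labels for the true next characters
labels = np.array([[0, 1, 0, 0],
                   [0, 0, 1, 0],
                   [0, 0, 1, 0]], dtype=float)

# Mean of the squared differences over every element
cost = np.mean(np.square(predictions - labels))
# A prediction is correct when its largest output matches the label's index
correct = np.argmax(predictions, axis=1) == np.argmax(labels, axis=1)
accuracy = np.mean(correct.astype(np.float32))

print(round(float(cost), 4), round(float(accuracy), 4))  # 0.14 0.6667
```

The second sample puts its highest score on the wrong character, so it lowers the accuracy to two out of three and contributes most of the squared error.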

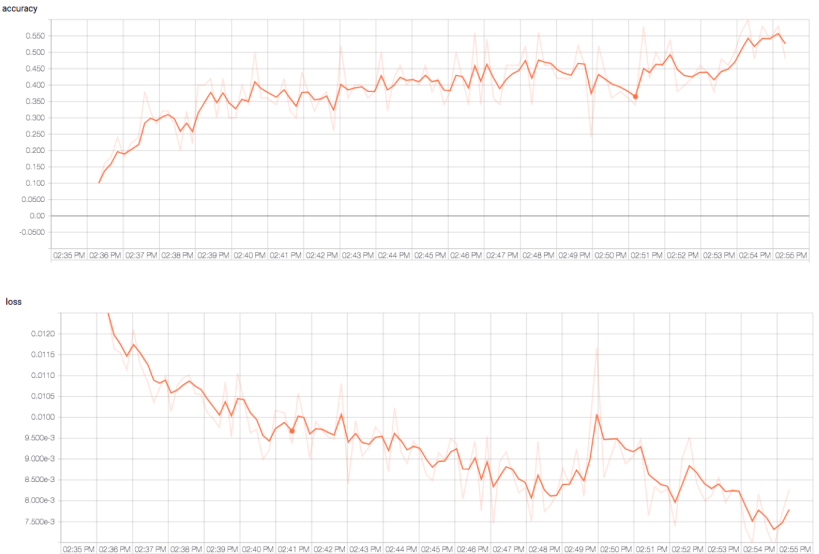

Once the model completes its training, the checkpoints are stored. You can use them later for inferencing. The following is a graph of the accuracy and the loss that occurred during the training process:

The accuracy (top) and the loss (bottom) plot with respect to the time

The accuracy (top) and the loss (bottom) plot with respect to the time

You can see that accuracy getting increased and loss getting reduced over the period of time.

Inference

Now that the model is ready, you can use it to make predictions. Start by defining all the parameters. To run inference, you need to provide some seed text, along with the path of the vocab file and the output file in which the generated lyrics will be stored. Also provide the length of the text that you want to generate:

import argparse
import codecs
from modules.Model import *
from modules.Preprocessing import *
from collections import deque

def main():
    parser = argparse.ArgumentParser()
    parser.add_argument('--model_name', type=str, required=True)
    parser.add_argument('--vocabulary_file', type=str, required=True)
    parser.add_argument('--output_file', type=str, required=True)
    parser.add_argument('--seed', type=str, default="Yeah, oho ")
    parser.add_argument('--sample_length', type=int, default=1500)
    parser.add_argument('--log_frequency', type=int, default=100)

Next, load the model by providing the name of the model that you used in the training step, and restore the vocabulary from the file:

    model = Model(model_name)
    model.restore()
    classifier = model.get_classifier()

    vocabulary = Preprocessing()
    vocabulary.retrieve(vocabulary_file)

Use a stack (a deque) to hold the most recent characters: append each generated character to it, drop the oldest one, and feed the same stack back into the model in an iterative fashion:

    # Preparing the raw input data
    for char in seed:
        if char not in vocabulary.vocabulary:
            print(char, "is not in vocabulary file")
            char = u' '
        stack.append(char)
        sample_file.write(char)

    # Restoring the models and making inferences
    with tf.Session() as sess:
        tf.global_variables_initializer().run()
        saver = tf.train.Saver(tf.global_variables())
        ckpt = tf.train.get_checkpoint_state(model_name)
        if ckpt and ckpt.model_checkpoint_path:
            saver.restore(sess, ckpt.model_checkpoint_path)
            for i in range(0, sample_length):
                # Encode the current window of characters as one-hot vectors
                vector = []
                for char in stack:
                    vector.append(vocabulary.binary_vocabulary[char])
                vector = np.array([vector])
                prediction = sess.run(classifier, feed_dict={model.x: vector})
                predicted_char = vocabulary.char_lookup[np.argmax(prediction)]
                # Slide the window: drop the oldest character, add the new one
                stack.popleft()
                stack.append(predicted_char)
                sample_file.write(predicted_char)
                if i % log_frequency == 0:
                    print("Progress: {}%".format((i * 100) // sample_length))
            sample_file.close()
            print("Sample saved in {}".format(output_file))

Output

After successful execution, you’ll get your own freshly brewed, AI-generated lyrics reviewed and published. The following is one sample of such lyrics. Some spellings have been modified so that the sentences make sense:

Yeah, oho once upon a time, on ir intasd

I got monk that wear your good

So heard me down in my clipp

Cure me out brick

Coway got baby, I wanna sheart in faic

I could sink awlrook and heart your all feeling in the firing of to the still hild, gavelly mind, have before you, their lead

Oh, oh shor,s sheld be you und make

Oh, fseh where sufl gone for the runtome

Weaaabe the ligavus I feed themust of hear

Here, you can see that the model has learned to generate paragraphs and sentences with appropriate spacing. It is still far from perfect, and the output doesn’t yet make sense.

Seeing signs of success: the first task is to create a model that can learn, and the second is to improve on that model. You can improve it by training with a larger dataset and for a longer duration.

If you found this article interesting, you can explore Python Deep Learning Projects to master deep learning and neural network architectures using Python and Keras. Python Deep Learning Projects imparts all the knowledge needed to implement complex deep learning projects in the field of computational linguistics and computer vision.

Generating Lyrics Using Deep (Multi-Layer) LSTM was originally published in Hacker Noon on Medium.
