
In 2014, the publication of a study from a Google-led AI research team opened up a new field of hacking known as the adversarial attack. The techniques the paper demonstrated not only changed our understanding of how machine learning operates but also showed in practical terms how one of the most commercially promising and highly anticipated aspects of the AI revolution could potentially be undermined.

This new attack surface proved so intrinsic to the basic structure of deep neural networks that, to this day, the best minds in AI research are struggling to devise effective defenses against it.

This was no Y2K-style fixable programming oversight, but rather a systemic architectural vulnerability, which, barring new breakthroughs, threatens to carry over from the current period of academic and theoretical development into the business, military, and civic AI systems of the future.

If machine learning has a fundamental weakness equivalent to the 51% attack scenario in cryptocurrency schemes, then its susceptibility to adversarial attacks would seem to be it.

Image is everything

The core benefit of the new generation of neural networks is a capacity to deal with the visual world. AI-based image analysis software can drive vehicles, analyze medical imaging, recognize faces, carry out safety inspections, empower robots, categorize image databases, create spaces in augmented reality, analyze and interpret video footage for events and language, and even assist in surgical procedures.

To accomplish this, an image-based machine learning system needs two resources. The first is a training set of still images or video, which allows it to gain a suitably accurate understanding of objects and events that it might later need to recognize. The second is the live or recorded imagery it must analyze in operation, such as CCTV footage.

From an economic standpoint, such a system must be designed to work with available material. Even if the image input has issues such as compression artifacts or low resolution, the AI must factor these limitations into its process.

This high level of fault tolerance, as it transpires, enables attackers to poison the input data and affect — or even command — the outcome of the AI’s analysis.

A malicious ghost in the machine

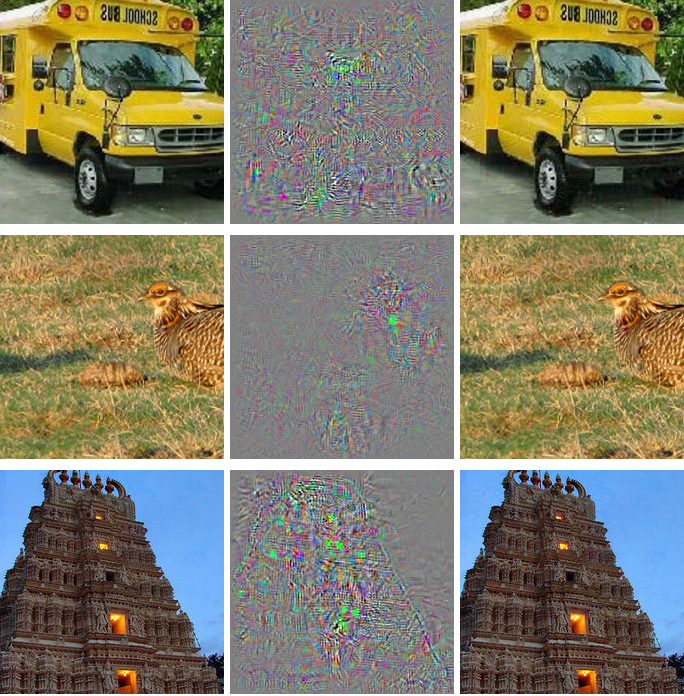

The adversarial attack was first described in a 2014 paper led by Google AI researcher Christian Szegedy, which called the manipulated inputs “adversarial examples.” By subtly altering wildly differing test images from the ImageNet database, the researchers caused an instance of the popular and respected AlexNet convolutional neural network to misclassify them all as “ostrich.”

The left column shows the original ImageNet source images. The middle column shows the subtle matrix of differences applied by the researchers. The right column shows the final processed images, which were all classified as “ostrich.”

Not only was the procedure easily replicable, but, amazingly, it proved to be broadly transferable across different neural network models and configurations.

The attack misuses a fundamental feature of image-based machine learning systems: the way they score every candidate label for an image and settle on the highest-scoring one.

For a given image, an attacker can compute a minute pattern of pixel changes that raises the score of a chosen label (for instance, “ostrich”) and lowers the others, then apply it imperceptibly so that a non-ostrich image is classified as “ostrich.” Because the network’s output is a differentiable function of its input pixels, the same math that drives the classification tells the attacker which way to nudge each pixel, systematically pushing the poisoned image over the decision boundary into the target classification.

The level of visual “perturbation” in such adversarial images is so low that the human eye dismisses it as noise, and a neural network trained to tolerate noisy, compressed imagery might be expected to do the same. But the network cannot, because this noise is shaped as a distilled “hash” of an established (though incorrect) classification. The machine therefore returns the wrong result, often with high confidence, and the reductionist mathematics of the neural network is turned back on itself in the form of an attack.
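To make the “push over the boundary” idea concrete, here is a minimal sketch on a hypothetical three-label linear classifier over four “pixels” (the labels, weights, and budget are invented for illustration). It swaps in an iterative sign-of-gradient attack, a simpler relative of the box-constrained L-BFGS optimization used in the 2014 paper, and projects every pixel back to within `eps` of its original value so the total change stays bounded.

```python
import math

# Hypothetical toy classifier: three labels, four "pixels", fixed linear weights.
LABELS = ["cat", "dog", "ostrich"]
W = [
    [1.0, -0.5, 0.2, 0.0],   # cat
    [-0.3, 0.8, -0.1, 0.4],  # dog
    [0.1, -0.2, 0.9, -0.6],  # ostrich
]

def probs(x):
    """Softmax over the linear scores w_k . x."""
    logits = [sum(wi * xi for wi, xi in zip(w, x)) for w in W]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def predict(x):
    p = probs(x)
    return LABELS[p.index(max(p))]

def sign(v):
    return (v > 0) - (v < 0)

def targeted_attack(x0, target, eps=0.5, step=0.05, iters=50):
    """Iterative sign-gradient attack pushing x0 toward `target`,
    keeping every pixel within +/- eps of its original value."""
    t = LABELS.index(target)
    x = list(x0)
    for _ in range(iters):
        p = probs(x)
        # Gradient of -log p_target for a linear softmax model: sum_k p_k * w_k - w_target
        grad = [sum(p[k] * W[k][i] for k in range(len(W))) - W[t][i]
                for i in range(len(x))]
        # Step downhill on that loss, then project back into the eps-box around x0
        x = [min(max(xi - step * sign(g), xi0 - eps), xi0 + eps)
             for xi, g, xi0 in zip(x, grad, x0)]
        if predict(x) == target:
            break
    return x
```

Starting from `[1.0, 0.0, 0.0, 0.0]`, which the toy model labels “cat,” `targeted_attack(x0, "ostrich")` returns an input labeled “ostrich” with no pixel moved by more than 0.5. In a real network the same loop runs over hundreds of thousands of pixels, which is why a far smaller per-pixel budget suffices there.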

Shock to the system

Szegedy’s discovery was doubly astounding because, as a follow-up paper he co-authored with Ian Goodfellow and Jonathon Shlens later argued, it exposed how linearly deep networks behave in their high-dimensional input space. Until this point, neural networks were assumed to be highly non-linear at this level of operation, a property that should have protected them from systematized attacks like this, and one that contributes to the controversial “black box” reputation of artificial intelligence systems.

Instead, the attack was not only trivial to reproduce but quickly proved to have “real world” applications.
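The linearity point can be illustrated with simple arithmetic. For a linear unit with weights w, moving every input by at most ε in the direction sign(w) shifts the activation by ε·‖w‖₁, a quantity that grows with the number of inputs even though no individual input changes by more than ε. The sketch below assumes random weights in [-1, 1] purely for illustration.

```python
import random

def random_weights(n, seed=0):
    """Hypothetical weights drawn uniformly from [-1, 1], standing in for a trained layer."""
    rng = random.Random(seed)
    return [rng.uniform(-1.0, 1.0) for _ in range(n)]

def linear_shift(w, eps):
    """Change in w . x when every input moves by eps in the direction of sign(w).

    Each term contributes eps * |w_i|, so the total equals eps * sum(|w_i|).
    """
    eta = [eps if wi > 0 else -eps if wi < 0 else 0.0 for wi in w]
    return sum(wi * ei for wi, ei in zip(w, eta))

if __name__ == "__main__":
    eps = 0.01  # no input moves by more than 1% of its range
    for n in (100, 10_000, 100_000):
        print(f"{n:>7} inputs -> activation shift {linear_shift(random_weights(n), eps):.2f}")
```

The printed shift grows roughly in proportion to the number of inputs, which is why an image with hundreds of thousands of pixels leaves so much room for a perturbation that is invisible pixel by pixel.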

In 2016, research out of Carnegie Mellon built on the Szegedy findings to create a method capable of fooling a state-of-the-art facial recognition system (FRS) by wearing eyeglass frames printed with a specially crafted pattern.

First column: two researchers evade FRS detection. Second column: a researcher is successfully identified as the target, actress Milla Jovovich. Third column: a researcher is successfully identified as a colleague. Fourth column: a researcher successfully impersonates TV host Carson Daly.

By this stage, a plethora of new research papers had seized on the theory of adversarial attacks and begun to distinguish between misclassification (evading correct identification) and targeted classification (grafting a specific and incorrect target identity onto an image).

In this case, the researchers were able to convince a facial and object recognition system that one of the male researchers was Hollywood actress and model Milla Jovovich and that another was TV presenter Carson Daly. Additionally, they were able to swap identities among themselves, or otherwise cause the FRS to fail to identify them.
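The two goals described above, misclassification and targeted classification, can be written compactly. The notation here is ours, not the article’s: f is the classifier, x an input with true label y, t the attacker’s chosen target label, δ the perturbation, and ε a budget on its largest per-pixel change (one common size constraint among several). The last two lines show the one-step fast gradient sign method proposed by Goodfellow and colleagues in late 2014 and its commonly used targeted variant, where J is the model’s training loss with parameters θ.

```latex
\begin{aligned}
&\text{Untargeted: find } \delta,\ \|\delta\|_\infty \le \epsilon, \text{ such that } f(x+\delta) \neq y \\
&\text{Targeted: find } \delta,\ \|\delta\|_\infty \le \epsilon, \text{ such that } f(x+\delta) = t \\
&x'_{\text{untargeted}} = x + \epsilon\,\operatorname{sign}\!\big(\nabla_x J(\theta, x, y)\big) \\
&x'_{\text{targeted}} = x - \epsilon\,\operatorname{sign}\!\big(\nabla_x J(\theta, x, t)\big)
\end{aligned}
```

The untargeted step climbs the loss for the true label; the targeted step descends the loss for the chosen label.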

In all cases, the key to the subterfuge was the encoded texture printed on their outsize glasses, which was enough to make the machine learning system “hash” the wearer to the target identity.
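The Carnegie Mellon attack confined its changes to the pixels covered by the glasses frames. A minimal sketch of that constraint follows; it assumes a hypothetical linear “impersonation score” in place of a real face-recognition network, applies gradient steps only where a mask is set, and clips values to a printable [0, 1] range.

```python
def impersonation_score(x, w):
    """Hypothetical linear stand-in for a face model's score for the target identity."""
    return sum(xi * wi for xi, wi in zip(x, w))

def masked_ascent(x, w, mask, step=0.1, iters=10):
    """Raise the target score by editing only pixels where mask is 1 (the glasses frames),
    keeping every value in the printable range [0, 1]."""
    x = list(x)
    for _ in range(iters):
        # For a linear score the gradient with respect to x is simply w.
        x = [min(max(xi + step * wi, 0.0), 1.0) if m else xi
             for xi, wi, m in zip(x, w, mask)]
    return x
```

Every pixel outside the mask is returned unchanged, so whatever the attack achieves has to come from the frame region alone.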

Any doubt that such targeted textures could hold up in the physical world was dispelled in 2017, when MIT researchers 3D-printed a turtle model with a specially crafted texture that convinced Google’s InceptionV3 image classifier it was a rifle.

The encoded texture on MIT’s turtle object consistently reads as “rifle” in Google’s object recognition algorithm.
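The MIT team’s method, which they called Expectation Over Transformation (EOT), optimizes a texture for its average effect across a distribution of transformations (such as viewpoint, lighting, and camera noise) rather than for a single view. The toy below is a sketch under heavy assumptions: a hypothetical linear “rifle” score, and two cyclic shifts standing in for viewpoints. With only two transformations the expectation is computed exactly instead of being sampled.

```python
# Hypothetical linear "rifle" score and a tiny set of transformations.
W = [1.0, -1.0, 0.0, 0.0]
SHIFTS = [0, 1]  # cyclic shifts standing in for two viewpoints

def score(x, s):
    """Rifle score of texture x after cyclically shifting it by s positions."""
    n = len(x)
    return sum(W[i] * x[(i + s) % n] for i in range(n))

def score_grad(n, s):
    """d score(x, s) / d x_j = W[(j - s) % n]; independent of x because the score is linear."""
    return [W[(j - s) % n] for j in range(n)]

def optimize(x, shifts, step=0.1, iters=20):
    """Gradient ascent on the average score over `shifts`, clipped to [0, 1].
    (A nonlinear model would need its gradients recomputed at every step.)"""
    x = list(x)
    n = len(x)
    grads = [score_grad(n, s) for s in shifts]
    avg = [sum(g[j] for g in grads) / len(grads) for j in range(n)]
    for _ in range(iters):
        x = [min(max(xj + step * gj, 0.0), 1.0) for xj, gj in zip(x, avg)]
    return x

if __name__ == "__main__":
    x0 = [0.5] * 4
    for name, shifts in (("single view", [0]), ("EOT", SHIFTS)):
        x = optimize(x0, shifts)
        print(name, [round(score(x, s), 2) for s in SHIFTS])
```

Optimizing for one view yields a texture that scores well from that view but negatively from the other; the EOT texture scores positively from both, trading peak effect for robustness.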

Defensive efforts

A wide range of countermeasures has been considered since the existence of the adversarial attack was revealed. Research out of Harvard in 2017 proposed regularizing the network’s input gradients during training, in order to level the playing field and make crafted attack images easier to identify. However, the technique roughly doubles the already-strained resources needed to train a viable machine learning system. Even where variations on this “Gradient Masking” technique can be made practicable, counter-attacks are possible.
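Input-gradient regularization penalizes how sharply the loss reacts to changes in the input. A minimal sketch, assuming a logistic-regression stand-in for a deep network and an arbitrary penalty weight `lam`: for this model the input gradient of the cross-entropy has the closed form (p - y)·w, so the regularized loss is the usual cross-entropy plus `lam` times the squared norm of that gradient.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def cross_entropy(w, b, x, y):
    """Binary cross-entropy of a logistic-regression model; y is 0 or 1."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def input_gradient(w, b, x, y):
    """dCE/dx for logistic regression, which has the closed form (p - y) * w."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return [(p - y) * wi for wi in w]

def regularized_loss(w, b, x, y, lam=0.1):
    """Cross-entropy plus lam * ||dCE/dx||^2 (lam is a hypothetical setting)."""
    g = input_gradient(w, b, x, y)
    return cross_entropy(w, b, x, y) + lam * sum(gi * gi for gi in g)
```

Training on this objective means differentiating through the input gradient itself (often called double backpropagation), which is where the extra computational cost comes from.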

A 2017 Stanford research paper proposed “biologically inspired” deep network protection, which artificially creates the non-linearity that researchers had, prior to the 2014 paper, presumed existed. However, peer criticism suggests that the apparent protection stems from numerical instability in the gradient computation and disappears once that computation is stabilized.

A recent report from the Institute of Electrical and Electronics Engineers (IEEE) evaluates four years of defense-oriented research following the disclosure of the adversarial attack technique and finds no proposed approach that can definitively and economically overcome the systemic nature of the vulnerability. The report concludes that adversarial attacks pose “a real threat to deep learning in practice, especially in safety and security-critical applications.”

Research out of Italy in 2018 likewise concludes that no current countermeasure can neutralize the adversarial attack scenario.

Widespread applicability of adversarial attacks

The most alarming aspect of adversarial attacks is their transferability, not only across different machine learning systems and training sets but also into areas of machine learning beyond computer vision.

Adversarial attacks in audio

Research from the University of California, Berkeley, in 2018 demonstrated that it is possible to add an adversarial perturbation to a soundwave (such as a speech recording) that changes its speech-to-text transcription into a phrase of the attacker’s choosing, or even to conceal speech information in other types of audio, such as music.

It’s an attack scenario that threatens the integrity of AI assistants, among other possibilities. The commercial exploitation of constant-listening devices in the home has already come under fierce criticism, and the spoofing of spoken user input has clear implications for invasive marketing campaigns.
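Audio attacks need a measure of how quiet a perturbation is relative to the recording that carries it. One such measure used in the audio adversarial literature compares peak levels in decibels, with dB(x) = max_i 20·log10|x_i| and the perturbation’s level reported as dB(δ) - dB(x). The sketch below computes that measure and scales a perturbation down to a hypothetical budget; it does not attack any speech-to-text model.

```python
import math

def db(signal):
    """Peak level in decibels: max_i 20 * log10(|x_i|)."""
    peak = max(abs(s) for s in signal)
    if peak == 0:
        raise ValueError("silent signal has no finite dB level")
    return 20.0 * math.log10(peak)

def relative_db(delta, x):
    """Loudness of perturbation delta relative to signal x (more negative = quieter)."""
    return db(delta) - db(x)

def scale_to_budget(delta, x, max_rel_db):
    """Scale delta down (never up) so its level is at most max_rel_db relative to x."""
    excess = relative_db(delta, x) - max_rel_db
    if excess <= 0:
        return list(delta)
    factor = 10 ** (-excess / 20.0)  # multiplying by this lowers the level by `excess` dB
    return [d * factor for d in delta]
```

For example, a perturbation peaking at 0.01 against speech peaking at 1.0 sits at -40 dB relative to the speech.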

Adversarial attacks in text

A 2017 paper from IBM’s India Research Lab outlines an adversarial attack scenario based purely on text input and observes that such a technique could be used to manipulate and deceive sentiment analysis systems, which rely on natural language processing (NLP). At scale, such a technique could potentially enable an analytics-led misinformation campaign of the “fake news” kind.
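The toy below is not the IBM paper’s method; it is a generic greedy word-substitution attack against a hypothetical lexicon-based sentiment scorer, with an invented lexicon and substitution table. At each step it makes the single swap that lowers the score most, stopping once the text no longer reads as positive.

```python
# Hypothetical word-level sentiment lexicon; unknown words score 0.
LEXICON = {"great": 2.0, "good": 1.0, "fine": 0.5, "bad": -1.0, "awful": -2.0}
# Hypothetical substitution candidates: a weaker synonym and a typo that falls out of the lexicon.
SUBSTITUTES = {"great": ["fine", "grate"], "good": ["fine", "goood"]}

def sentiment(words):
    return sum(LEXICON.get(w, 0.0) for w in words)

def greedy_flip(sentence, max_edits=3):
    """Greedily swap one word at a time until the score is no longer positive."""
    words = sentence.lower().split()
    for _ in range(max_edits):
        if sentiment(words) <= 0:
            break
        best = None
        for i, w in enumerate(words):
            for cand in SUBSTITUTES.get(w, []):
                trial = words[:i] + [cand] + words[i + 1:]
                if best is None or sentiment(trial) < sentiment(best):
                    best = trial
        if best is None or sentiment(best) >= sentiment(words):
            break  # no available substitution lowers the score
        words = best
    return " ".join(words)
```

Here “A great movie with good acting” becomes “a grate movie with goood acting”: still legible to a human, but neutral to the scorer.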

Implications of the adversarial attack vulnerability for machine learning

Ian Goodfellow, the creator of Generative Adversarial Networks and a key contributor to the debate about adversarial attacks, has commented that machine learning systems should not repeat the mistake of early operating systems, where defense was not initially built into the design.

Yet the IEEE report, backed up by other research overviews, suggests that deep learning networks had this weakness baked into them from conception. All attempts to counter the vulnerability on its own terms can be defeated, because they are iterations (and counter-iterations) of the same math.

What’s left is the possibility of a “firewall” or sentinel approach to data cleansing, where satellite technologies evolve around a continuously vulnerable machine learning framework, at the cost of extra network and machine resources and a diminished integrity of the process as a whole.
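One published example of such a sentinel is feature squeezing (Xu, Evans, and Qi), which flags an input when the model’s prediction changes sharply after the input is “squeezed,” for instance by reducing its color bit depth. The sketch below swaps in a hypothetical two-pixel logistic model, a 1-bit squeezer, and an arbitrary threshold of 0.3; real detectors tune these per model, and adaptive attackers have been shown to evade detectors of this kind.

```python
import math

# Hypothetical toy model: logistic score over two pixel values in [0, 1].
W = [5.0, -5.0]
B = 0.0

def predict_proba(x):
    s = sum(wi * xi for wi, xi in zip(W, x)) + B
    p = 1.0 / (1.0 + math.exp(-s))
    return [1.0 - p, p]

def reduce_bit_depth(x, bits=1):
    """Quantize each value to 2**bits evenly spaced levels in [0, 1]."""
    levels = 2 ** bits - 1
    return [round(xi * levels) / levels for xi in x]

def squeeze_score(x, bits=1):
    """L1 distance between predictions on the original and the squeezed input."""
    a = predict_proba(x)
    b = predict_proba(reduce_bit_depth(x, bits))
    return sum(abs(ai - bi) for ai, bi in zip(a, b))

def is_suspicious(x, threshold=0.3, bits=1):
    return squeeze_score(x, bits) > threshold
```

The clean inputs `[0.9, 0.1]` and `[0.3, 0.7]` pass, while `[0.55, 0.45]`, which sits just across the decision boundary where a small perturbation would place it, is flagged.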

Commercial concerns seem to be taking precedence. To date, the only real-world example of an adversarial attack to have brought home the potential gravity of the problem to the media was when a team of researchers caused a machine learning system to misinterpret traffic signs simply by printing subtly altered versions of them.

Nonetheless, the IEEE report notes that the academic literature largely rejects this dismissive attitude toward the threat: “Whereas few works suggest that adversarial attacks on deep learning may not be a serious concern, a large body of the related literature indicates otherwise.”

The current applicability or scope of adversarial attacks is irrelevant if the fundamental vulnerability survives into later production systems. At that point of commercialization and widespread diffusion, such systems are likely to present a much more attractive target, deserving of greater resources from those who would exploit some of the most sensitive and critical new AI-driven applications of the future.

Adversarial attacks: How to trick computer vision was originally published in Hacker Noon on Medium.

Disclaimer

The views and opinions expressed in this article are solely those of the authors and do not reflect the views of Bitcoin Insider. Every investment and trading move involves risk - this is especially true for cryptocurrencies given their volatility. We strongly advise our readers to conduct their own research when making a decision.