
How to make SnapChat Lenses using Computer Vision?

We all love SnapChat lenses/filters, but have you ever wondered how you can make your own? This article explains how you can use Python and computer vision libraries like OpenCV and dlib to create your own “glasses and mustache lens” with as few as 80 lines of code.

What is dlib?

Dlib is a modern C++ toolkit containing machine learning algorithms and tools for creating complex software in C++ to solve real world problems. It is used in both industry and academia in a wide range of domains including robotics, embedded devices, mobile phones, and large high performance computing environments. Dlib’s open source licensing allows you to use it in any application, free of charge. [source]

What is OpenCV?

OpenCV (Open Source Computer Vision) is a library of programming functions mainly aimed at real-time computer vision. The library is cross-platform and free for use under the open-source BSD license. OpenCV supports the deep learning frameworks TensorFlow, Torch/PyTorch and Caffe. [source]

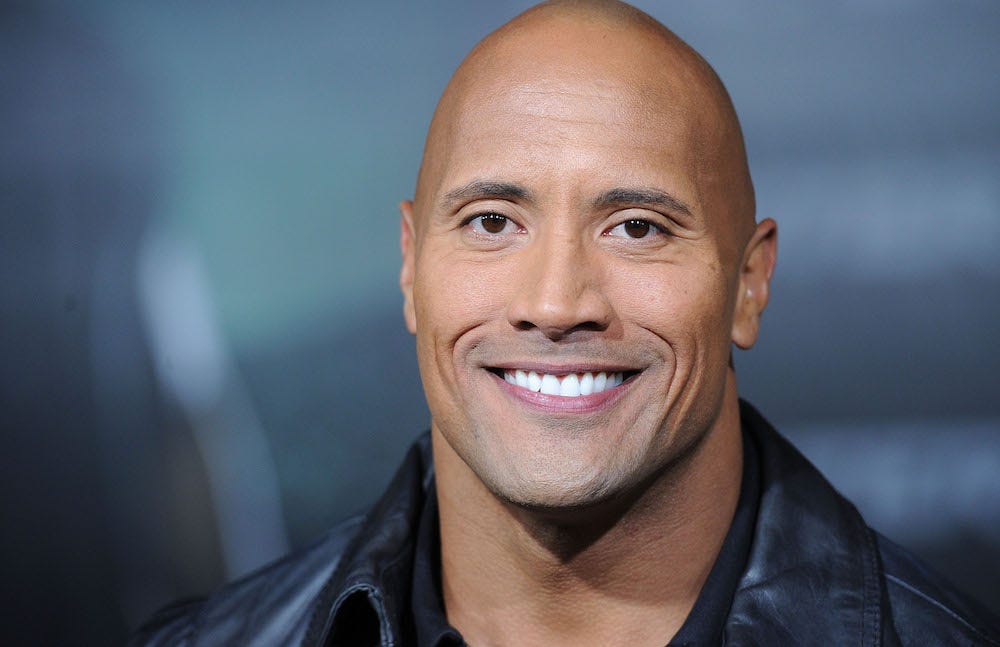

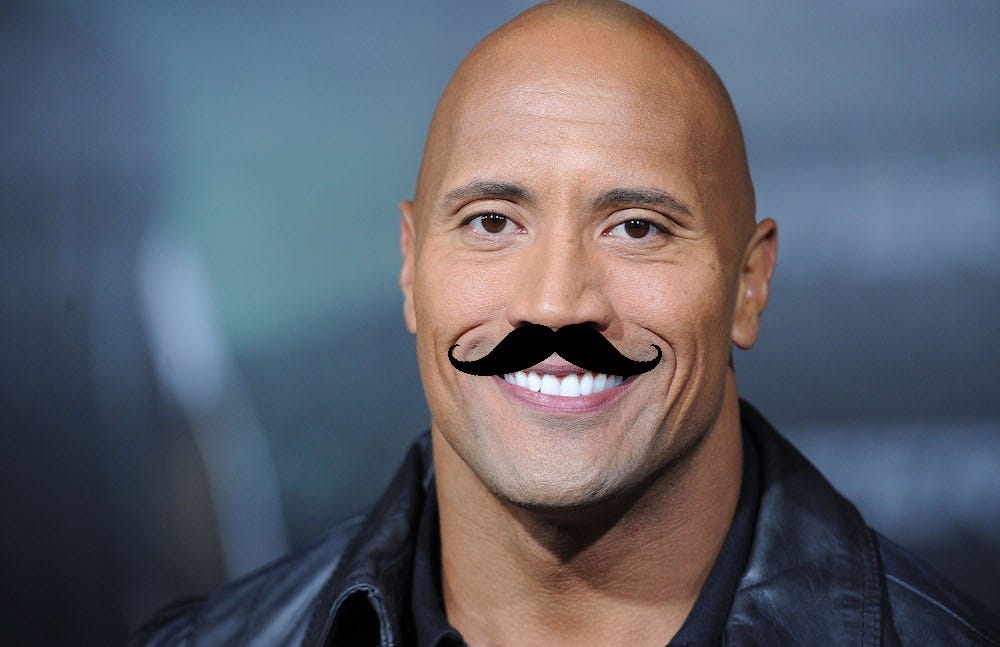

Let us try to implement a SnapChat filter on the below picture of Dwayne Johnson. We will add a pair of glasses and a mustache for the purpose of this tutorial.

First, import all the required libraries:

    import cv2
    import numpy as np
    import glob
    import os
    import dlib

Read all the image files that we want to overlay on the face of Dwayne Johnson. We will use the glasses and mustache shown below.

In the code snippet below, we load the mustache and glasses images and create our image masks. The image masks are used to select the sections of an image that we want to display. When we overlay the image of a mustache on a background image, we need to identify which pixels from the mustache image should be displayed and which pixels from the background image should be displayed. The masks tell us which pixels to take from each image.

We load the mustache/glasses with -1 (negative one) as the second parameter to load all the layers in the image. The image is made up of 4 layers (or channels): Blue, Green, Red, and an Alpha transparency layer (known as BGRA). The alpha channel tells us which pixels in the image should be transparent and which should be non-transparent (made up of a combination of the other 3 layers). We then take just the alpha layer and create a new single-layer image that we will use for masking, and we also take the inverse of that mask. The initial mask defines the area covered by the mustache/glasses, and the inverse mask defines the region around the mustache/glasses. Next, we convert the mustache/glasses image to a 3-channel BGR image. Finally, we save the original mustache/glasses image sizes, which we will use later when resizing the mustache/glasses image.
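The masking idea can be tried on a tiny synthetic image before touching any real files. The sketch below is only an illustration: the 2x2 `overlay` and `background` arrays are made up, and it uses plain NumPy in place of the OpenCV calls (for `uint8` data, `255 - mask` gives the same result as `cv2.bitwise_not`). It also assumes a binary alpha channel (every pixel either 0 or 255).

```python
import numpy as np

# Hypothetical 2x2 BGRA overlay: left column opaque white, right column transparent.
overlay = np.zeros((2, 2, 4), dtype=np.uint8)
overlay[:, 0] = (255, 255, 255, 255)

# Hypothetical 2x2 background, mid-gray everywhere.
background = np.full((2, 2, 3), 100, dtype=np.uint8)

mask = overlay[:, :, 3]          # alpha channel: 255 where the overlay is visible
mask_inv = 255 - mask            # 255 where the background should show through
overlay_bgr = overlay[:, :, :3]  # drop the alpha channel

# Keep overlay pixels where the mask is set and background pixels elsewhere.
foreground = np.where(mask[:, :, None] > 0, overlay_bgr, 0)
backdrop = np.where(mask_inv[:, :, None] > 0, background, 0)
composite = (foreground + backdrop).astype(np.uint8)

print(composite[:, :, 0])  # left column 255 (overlay), right column 100 (background)
```

Because exactly one of the two masks is set at every pixel, the sum never overflows. With a partly transparent alpha channel this simple scheme would no longer hold, and proper alpha blending would be needed instead.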

    # -1 loads the image unchanged, including the alpha channel
    imgMustache = cv2.imread("mustache.png", -1)
    orig_mask = imgMustache[:,:,3]
    orig_mask_inv = cv2.bitwise_not(orig_mask)
    imgMustache = imgMustache[:,:,0:3]
    origMustacheHeight, origMustacheWidth = imgMustache.shape[:2]

    imgGlass = cv2.imread("glasses.png", -1)
    orig_mask_g = imgGlass[:,:,3]
    orig_mask_inv_g = cv2.bitwise_not(orig_mask_g)
    imgGlass = imgGlass[:,:,0:3]
    origGlassHeight, origGlassWidth = imgGlass.shape[:2]

Once the images are loaded, we can move on to face detection. There are several ways to do that:

- dlib face detection

- OpenCV face detection

- TensorFlow SSD face detection

I am going to use dlib face detection rather than the other two, for two reasons:

- To use TensorFlow, we need to train our own face detection model, as there is no pre-trained model available.

- dlib seems to give better accuracy compared to OpenCV.

    # 68-point landmark predictor
    predictor_path = "shape_predictor_68_face_landmarks.dat"
    # face recognition model (path only; not used in the snippets below)
    face_rec_model_path = "dlib_face_recognition_resnet_model_v1.dat"

    # CNN-based face detector
    cnn_face_detector = dlib.cnn_face_detection_model_v1("mmod_human_face_detector.dat")
    # HOG-based face detector
    detector = dlib.get_frontal_face_detector()
    predictor = dlib.shape_predictor(predictor_path)

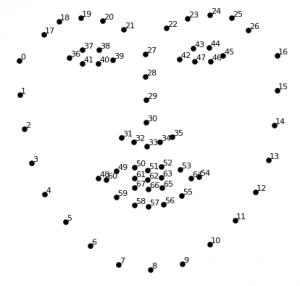

The dlib 68-point predictor locates the face points shown in the figure above.

Next, we capture frames from the webcam or from a video file, detect the faces in each frame, and locate the 68 points on each face so that we can position the filters.

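    # 0 selects the default webcam; pass a file path instead to read a video file
    video_capture = cv2.VideoCapture(0)

    ret, frame = video_capture.read()
    dets = cnn_face_detector(frame, 1)
    for k, d in enumerate(dets):
        shape = predictor(frame, d.rect)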
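Note the `d.rect` in the loop above: detections from dlib's CNN detector wrap the bounding rectangle in a `.rect` attribute, whereas the HOG detector from `dlib.get_frontal_face_detector()` returns rectangles directly. The sketch below shows one way to handle both and to turn the landmark result into plain `(x, y)` tuples. The helper names `to_rect` and `landmark_points` are made up for this example, and `FakeShape` is only a stand-in so the snippet runs without dlib or the model files.

```python
from types import SimpleNamespace


def to_rect(detection):
    """Return the bounding rectangle for a CNN-style or HOG-style detection."""
    # CNN detections carry the rectangle in .rect; HOG detections are the rectangle.
    return getattr(detection, "rect", detection)


def landmark_points(shape):
    """Convert a dlib-style landmark object into a list of (x, y) tuples."""
    return [(shape.part(i).x, shape.part(i).y) for i in range(shape.num_parts)]


class FakeShape:
    """Hypothetical stand-in for a dlib landmark result, for illustration only."""

    def __init__(self, points):
        self._points = points
        self.num_parts = len(points)

    def part(self, index):
        x, y = self._points[index]
        return SimpleNamespace(x=x, y=y)


hog_style = SimpleNamespace(name="rectangle")                # no .rect attribute
cnn_style = SimpleNamespace(rect=hog_style, confidence=0.9)  # wraps the rectangle

same_rect = to_rect(cnn_style) is to_rect(hog_style)
points = landmark_points(FakeShape([(10, 20), (30, 40)]))
print(same_rect, points)
```

With real dlib objects, `predictor(frame, to_rect(d))` would then work with either detector.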

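Points 31 and 35 (zero-indexed, as used by shape.part) sit at the left and right edges of the base of the nose, so the distance between them is the width of the nose. We make the mustache 3 times as wide as the nose and scale its height to match the proportions of the original mustache image. We then resize the mustache and its masks and blend them into the original frame.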

    mustacheWidth = abs(3 * (shape.part(31).x - shape.part(35).x))
    mustacheHeight = int(mustacheWidth * origMustacheHeight / origMustacheWidth) - 10
    mustache = cv2.resize(imgMustache, (mustacheWidth,mustacheHeight), interpolation = cv2.INTER_AREA)
    mask = cv2.resize(orig_mask, (mustacheWidth,mustacheHeight), interpolation = cv2.INTER_AREA)
    mask_inv = cv2.resize(orig_mask_inv, (mustacheWidth,mustacheHeight), interpolation = cv2.INTER_AREA)
    y1 = int(shape.part(33).y - (mustacheHeight/2)) + 10
    y2 = int(y1 + mustacheHeight)
    x1 = int(shape.part(51).x - (mustacheWidth/2))
    x2 = int(x1 + mustacheWidth)
    roi = frame[y1:y2, x1:x2]
    roi_bg = cv2.bitwise_and(roi,roi,mask = mask_inv)
    roi_fg = cv2.bitwise_and(mustache,mustache,mask = mask)
    frame[y1:y2, x1:x2] = cv2.add(roi_bg, roi_fg)
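The placement arithmetic above can be checked in isolation with plain numbers. The sketch below repeats the same formulas in a hypothetical helper, `mustache_box`, and adds a small `fits_in_frame` check. The check is an addition of this example, not part of the original code: when a face is close to the edge of the frame, the computed box can fall partly outside it, and the slicing and masking steps above would then most likely fail because the region of interest no longer matches the mask size. All landmark values below are made up.

```python
def mustache_box(nose_left_x, nose_right_x, nose_base_y, lip_top_x,
                 orig_width, orig_height):
    """Return (x1, y1, x2, y2) for the mustache, using the formulas above."""
    width = abs(3 * (nose_left_x - nose_right_x))      # 3x the nose width
    height = int(width * orig_height / orig_width) - 10
    y1 = int(nose_base_y - height / 2) + 10            # centred just below the nose
    x1 = int(lip_top_x - width / 2)                    # centred on the upper lip
    return x1, y1, x1 + width, y1 + height


def fits_in_frame(box, frame_width, frame_height):
    """True if the box is non-empty and lies entirely inside the frame."""
    x1, y1, x2, y2 = box
    return 0 <= x1 < x2 <= frame_width and 0 <= y1 < y2 <= frame_height


# Made-up landmark coordinates: points 31/35 (x), 33 (y) and 51 (x),
# with a hypothetical 300x100 mustache image.
box = mustache_box(100, 140, 200, 120, orig_width=300, orig_height=100)
print(box)                            # (60, 195, 180, 225)
print(fits_in_frame(box, 640, 480))   # True

# The same face shifted to the left edge of the frame no longer fits.
edge_box = mustache_box(0, 40, 200, 20, orig_width=300, orig_height=100)
print(fits_in_frame(edge_box, 640, 480))  # False
```

Skipping the overlay for a frame when `fits_in_frame` returns `False` is one simple way to keep the loop running.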

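Now, let’s put on the glasses. The horizontal distance between points 1 and 16, on either side of the face, sets the width of the glasses, and the height is scaled to match the proportions of the original glasses image.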

    glassWidth = abs(shape.part(16).x - shape.part(1).x)
    glassHeight = int(glassWidth * origGlassHeight / origGlassWidth)
    glass = cv2.resize(imgGlass, (glassWidth,glassHeight), interpolation = cv2.INTER_AREA)
    mask = cv2.resize(orig_mask_g, (glassWidth,glassHeight), interpolation = cv2.INTER_AREA)
    mask_inv = cv2.resize(orig_mask_inv_g, (glassWidth,glassHeight), interpolation = cv2.INTER_AREA)
    y1 = int(shape.part(24).y)
    y2 = int(y1 + glassHeight)
    x1 = int(shape.part(27).x - (glassWidth/2))
    x2 = int(x1 + glassWidth)
    roi1 = frame[y1:y2, x1:x2]
    roi_bg = cv2.bitwise_and(roi1,roi1,mask = mask_inv)
    roi_fg = cv2.bitwise_and(glass,glass,mask = mask)
    frame[y1:y2, x1:x2] = cv2.add(roi_bg, roi_fg)
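The mustache and glasses steps share the same paste-with-a-mask pattern, so they could be folded into one helper. The sketch below is one possible version, not the author's code: `paste_with_mask` is a hypothetical name, it uses NumPy indexing instead of `cv2.bitwise_and`/`cv2.add`, and it clips the overlay to the frame so that a partly off-screen position does not raise an error. It assumes the overlay and mask have already been resized to the same height and width.

```python
import numpy as np


def paste_with_mask(frame, overlay_bgr, mask, x1, y1):
    """Paste overlay_bgr onto frame at (x1, y1) wherever mask is non-zero.

    The overlay is clipped to the frame. The frame is modified in place
    and also returned.
    """
    h, w = mask.shape[:2]
    frame_h, frame_w = frame.shape[:2]

    # Clip the target rectangle to the frame.
    fx1, fy1 = max(x1, 0), max(y1, 0)
    fx2, fy2 = min(x1 + w, frame_w), min(y1 + h, frame_h)
    if fx1 >= fx2 or fy1 >= fy2:
        return frame  # overlay lies completely outside the frame

    # Matching region inside the overlay and mask.
    ox1, oy1 = fx1 - x1, fy1 - y1
    ox2, oy2 = ox1 + (fx2 - fx1), oy1 + (fy2 - fy1)

    visible = mask[oy1:oy2, ox1:ox2] > 0
    roi = frame[fy1:fy2, fx1:fx2]          # a view, so writes reach the frame
    roi[visible] = overlay_bgr[oy1:oy2, ox1:ox2][visible]
    return frame


# Tiny made-up example: a 4x4 black frame and a 2x2 overlay with one transparent pixel.
frame = np.zeros((4, 4, 3), dtype=np.uint8)
overlay_bgr = np.full((2, 2, 3), 9, dtype=np.uint8)
mask = np.array([[255, 0], [255, 255]], dtype=np.uint8)

paste_with_mask(frame, overlay_bgr, mask, 1, 1)    # fully inside the frame
paste_with_mask(frame, overlay_bgr, mask, 3, 3)    # clipped at the bottom-right corner
paste_with_mask(frame, overlay_bgr, mask, 10, 10)  # completely outside: no change
print(frame[:, :, 0])
```

In the tutorial's terms, a call such as `paste_with_mask(frame, glass, mask, x1, y1)` would stand in for the last four lines of each overlay snippet.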

Conclusion

This will not work as well as SnapChat lenses, but it is a strong base for building something similar. It is also a lot of fun to create your own custom lens rather than using those provided by SnapChat. You can find the full code below.

smitshilu/SnapChatFilterExample

If you like this article, please don’t forget to follow me on Medium or GitHub, or subscribe to my YouTube channel.

How to make SnapChat Lenses? was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
