
Ready to learn how to train your AI in less than a day without writing a single line of code? If you are sitting on a pile of data that you want to make useful, but AI seems too daunting a task to start on, then this article is for you. And if you have already spent time in the trenches of AI and want to make model training a whole lot simpler and faster, you will also find this article eye-opening.

Off-the-shelf algorithms have gotten so good that they have ushered in a new paradigm of accessibility in machine learning. The conversation in machine learning has evolved from how to build the technical architecture of a prediction model to how we can most effectively train an off-the-shelf state-of-the-art model on a specific business application. To show you the simplicity and effectiveness of training an off-the-shelf model and to celebrate the fall season, Labelbox will teach you how to train a deep convolutional object detection model called SSD MobileNet V1 to identify pumpkins.

What’s a Pumpkin?

If you can answer this question then you can train a model that can, too.

Instead of labeling images from scratch, we leveraged an off-the-shelf model that has already been trained to accomplish a similar task. SSD_mobilenet_V1 is an object detection model, which we fine-tune for customer-specific object detection applications. Being able to visualize and correct a model’s erroneous initial predictions in Labelbox not only saves time and money but also teaches the model trainer to define their objectives more precisely and to control data quality more tightly.

Visually inspecting the model’s initial predictions of our pumpkin data forced us to think more deeply about what a pumpkin is and which pumpkins we wanted our model to recognize.

In the United States, “pumpkin” usually refers to the round orange squash that is carved into jack-o’-lanterns for Halloween or baked into pies on Thanksgiving. As it turns out, pumpkin qualities such as shape, size, texture, and color vary widely, making pumpkin identification much more complicated than we originally thought. We began questioning whether we really knew what a pumpkin was and how to tell it apart from a squash or a gourd.

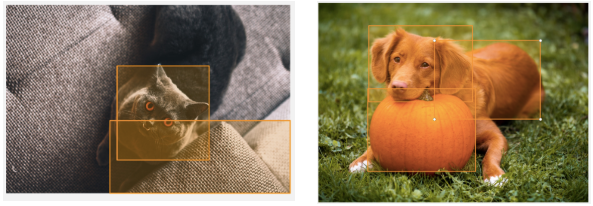

In the side-by-side images above, the SSD MobileNet V1 model incorrectly identified the cat’s orange eyes and the dog’s orange fur as pumpkins. This bias toward orange suggests that the pumpkins in our dataset are disproportionately orange. In the image below, the model correctly identified the carved pumpkins but failed to identify the smaller uncarved pumpkins, suggesting that the pumpkins in our dataset are disproportionately jack-o’-lanterns.

It wasn’t just our own conception of a pumpkin that we had to think more deeply about, but also the extent to which we wanted our model to identify pumpkins. For example, the initial prediction model was able to identify a cartoon jack-o’-lantern, but not a black-and-white sketch of a pumpkin. Was the sketch a false negative, or did the model correctly interpret it as not a pumpkin? The two images are similar in size, shape, and ribbing, but differ in color and carving. Without the coloring, it is ambiguous whether the sketch shows a pumpkin or a gourd.

In addition to potential biases in our dataset, we noticed biases in our annotation process. If an object is left unlabeled, any prediction the model makes in that area is penalized as a false positive, which biases the model away from detecting similar objects. For example, we labeled pumpkins in the foreground and ignored those in the background, we labeled pumpkins in focus and ignored blurry pumpkins, and we labeled well-lit pumpkins over those in dark shadows. There is no good or bad choice when deciding whether to label one, the other, or both; it depends on your desired outcome. The key is to be consistent in your labeling choices over time and across labelers.
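To make the false-positive effect concrete, here is a minimal sketch of how predicted boxes are commonly scored against labels using intersection over union (IoU). The function names, the greedy matching, and the 0.5 threshold are illustrative assumptions, not a description of how Labelbox or SSD MobileNet V1 computes its metrics.

```python
from typing import List, Tuple

# A box is (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def count_matches(predictions: List[Box], labels: List[Box],
                  threshold: float = 0.5) -> Tuple[int, int, int]:
    """Greedily match predictions to labels.

    Returns (true_positives, false_positives, false_negatives).
    The threshold of 0.5 is an assumed, commonly used value.
    """
    unmatched = list(labels)
    true_pos = 0
    false_pos = 0
    for pred in predictions:
        best = max(unmatched, key=lambda lab: iou(pred, lab), default=None)
        if best is not None and iou(pred, best) >= threshold:
            unmatched.remove(best)
            true_pos += 1
        else:
            # No label overlaps this prediction, so it counts as an error
            # even if the model really did find a pumpkin.
            false_pos += 1
    return true_pos, false_pos, len(unmatched)


# The model finds a foreground pumpkin and a background pumpkin.
predictions = [(10, 10, 110, 110), (200, 20, 240, 60)]
foreground_only = [(12, 8, 108, 112)]
both_labeled = [(12, 8, 108, 112), (200, 20, 240, 60)]

print(count_matches(predictions, foreground_only))
print(count_matches(predictions, both_labeled))
```

With only the foreground pumpkin labeled, the correct background detection is scored as a false positive; labeling both removes the penalty. This is why inconsistent labeling choices quietly change what the model learns to detect.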

Build your own AI in 3 steps

With the following steps, you can build your own AI in under 24 hours.

Step 1. Import data

Step 2. Configure interface

Step 3. Iterate: label-train-evaluate

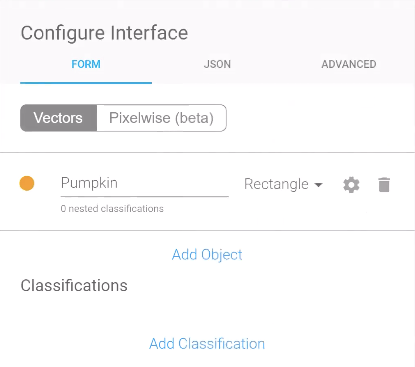

The setup (step 1 and step 2) takes just a few minutes. We started by creating a new project in Labelbox and uploading 500 pumpkin images. Next, we configured the labeling interface by typing in the segmentation title “Pumpkin” and choosing “Rectangle” as the bounding geometry type. We need to use rectangles rather than another bounding option like polygons because SSD_mobilenet_V1 is trained on rectangular bounding boxes.
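As a small illustration of why the geometry type matters: a detector trained on rectangles can only consume axis-aligned boxes, so any polygon annotation would have to be reduced to its enclosing rectangle first. The sketch below shows that reduction; the function name and the coordinate format are hypothetical and are not part of the Labelbox interface.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def polygon_to_rectangle(points: List[Point]) -> Tuple[float, float, float, float]:
    """Return the smallest axis-aligned box (x_min, y_min, x_max, y_max)
    that encloses every vertex of the polygon."""
    if not points:
        raise ValueError("polygon needs at least one point")
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (min(xs), min(ys), max(xs), max(ys))


# A rough four-point outline of a pumpkin, in pixel coordinates.
outline = [(30, 10), (60, 25), (55, 70), (20, 60)]
print(polygon_to_rectangle(outline))  # (20, 10, 60, 70)
```

The enclosing rectangle discards the outline’s shape, which is why labeling with rectangles from the start avoids doing extra work the model cannot use.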

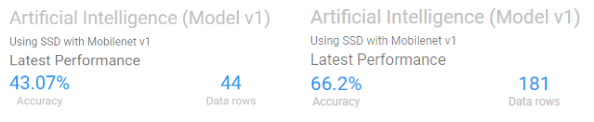

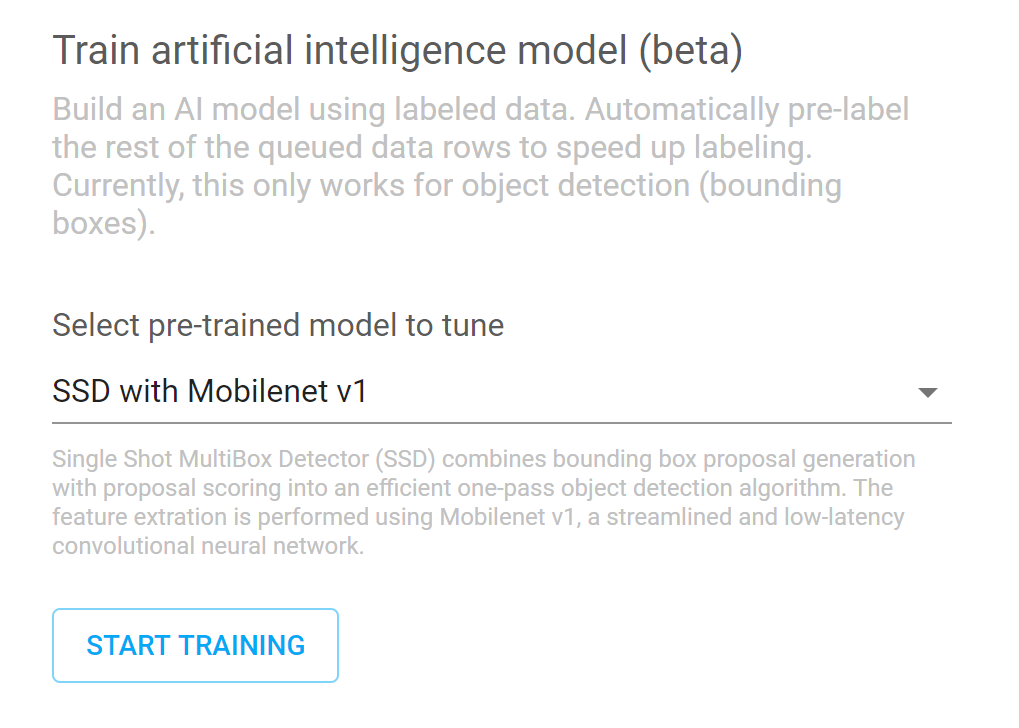

The fine-tuning stage (step 3) is the iterative process of labeling, training, and reviewing. With just a click of a button, we trained the model on 44 images, which resulted in an initial prediction accuracy of 43%. We then corrected the model’s initial labeling errors and retrained it on 181 images of pumpkins, which increased the accuracy to 66%. This process can be repeated to further increase model accuracy.

First and second iteration of the Automatic Model Builder


Automatic Model Builder

One-Click Model Training with the Automatic Model Builder


Labelbox is soon releasing an Intelligent Labeling Assistance (ILA) feature, which functions behind the scenes and requires no technical background to get started. There are two key components to ILA: the Automatic Model Builder (AMB) and the predictions API. Essentially, AMB performs transfer learning to fine-tune a deep neural network on the data and ontology specific to your particular project. In other words, ILA automatically customizes pre-trained state-of-the-art computer vision models to fit the particular needs of your business application. ILA is split into two components so that Labelbox can support the same prediction features on your own model as it does on an off-the-shelf one.

AMB accelerates your training process by giving you a starting point way ahead of the game. When you import your data, the Automatic Model Builder tool will generate a prediction model that pre-labels your data immediately. Not having to train your model from scratch on basic feature extraction layers, such as differentiating the foreground from the background or understanding object edges, reduces the total amount of time that you have to spend in the iterative labeling and training process so that you can get your model up and running faster.

By having a model make an initial prediction, you can quantify and visualize the initial accuracy of your model and spend your time and budget more effectively by skipping the work of initial training and jumping right into fine-tuning. Your model will correctly process the obvious cases in your data set, relieving your domain experts of rudimentary labeling and freeing up their time for the more complicated or ambiguous cases. Being able to visualize how your model is interpreting your data during training will help you make the corrections you need to get the outcome you want. For more information on how to ensure high-quality training data, check out our article “It All Boils Down to Training Data.”
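One way to picture this division of labor is to split the model’s initial predictions by confidence score: high-scoring boxes become pre-labels, and the rest are routed to a domain expert. The sketch below is an assumption-laden illustration; the `Prediction` class, the `triage` function, and the 0.8 cutoff are hypothetical and do not describe the AMB or the predictions API.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Prediction:
    image: str                               # hypothetical image identifier
    score: float                             # model confidence in [0, 1]
    box: Tuple[float, float, float, float]   # (x_min, y_min, x_max, y_max)


def triage(predictions: List[Prediction],
           confident_at: float = 0.8) -> Tuple[List[Prediction], List[Prediction]]:
    """Split predictions into pre-labels and cases for expert review.

    The 0.8 cutoff is an assumed value; in practice you would tune it
    against how often reviewers have to correct the pre-labels.
    """
    prelabels = [p for p in predictions if p.score >= confident_at]
    needs_expert = [p for p in predictions if p.score < confident_at]
    return prelabels, needs_expert


predictions = [
    Prediction("porch.jpg", 0.95, (10, 10, 110, 110)),   # obvious jack-o'-lantern
    Prediction("sketch.jpg", 0.40, (5, 5, 80, 90)),      # ambiguous sketch
    Prediction("field.jpg", 0.80, (200, 20, 240, 60)),   # exactly at the cutoff
]

prelabels, needs_expert = triage(predictions)
print([p.image for p in prelabels])
print([p.image for p in needs_expert])
```

Pre-labels still deserve a quick visual check, since a confident model can be confidently wrong, as the orange cat eyes earlier in this article showed.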

We are releasing AMB with “Single Shot Multibox Detector (SSD) with MobileNet” for visual object detection. This is just the beginning. Stay tuned as we extend our AMB feature to incorporate more pre-trained cutting-edge models from the TensorFlow detection model zoo.

Business Applications

In conclusion, you can apply the Automatic Model Builder to your business in under a day and without writing any code. It took us only 2–3 hours to get our AI to identify pumpkins at 66% accuracy. With a bit more time and a whole lot more data, you can keep improving that accuracy through further label-train-evaluate iterations. Whether you are trying to identify tumors or have your self-driving car recognize barriers, Labelbox’s AMB feature will save you time and money by automatically transferring learning from pre-trained state-of-the-art models to your business application.

Visit www.labelbox.com to explore Labelbox for free or speak to one of our team members about an enterprise solution for your business.

Originally published at medium.com on November 20, 2018.

AI is More Accessible Than You Know was originally published in Hacker Noon on Medium.
