Latest news about Bitcoin and all cryptocurrencies. Your daily crypto news habit.

Training and Deploying A Deep Learning Model in Keras MobileNet V2 and Heroku: A Step-by-Step Tutorial Part 1

Why train and deploy deep learning models on Keras + Heroku?

Why train and deploy deep learning models on Keras + Heroku?

This tutorial will guide you step-by-step on how to train and deploy a deep learning model. Having scoured the internet far and wide, I found it difficult to find tutorials that take you from the beginning to the end of building and deploying deep learning models. While there are some excellent articles on various stages of this process (that I used in my own journey to deep learning), I wrote this tutorial to close what I view is an important gap.

This tutorial walks you through the entire process of training a model in TensorFlow and deploying it to Heroku — code available in the GitHub repo here.

The full list of the technology we are going to use:

- Keras 2.2 is a high-level neural networks API, written in Python and capable of running on top of TensorFlow.

- TensorFlow 1.11 (TF) is an open-source machine learning library for research and production. TensorFlow is Google’s attempt to put the power of Deep Learning into the hands of developers around the world.[1]

- Scikit-image 0.14 is a collection of algorithms for image processing.

- Scikit-learn 0.19 Machine Learning in Python.

- Pillow 4.1 is the Python Imaging Library

- Python 3.6

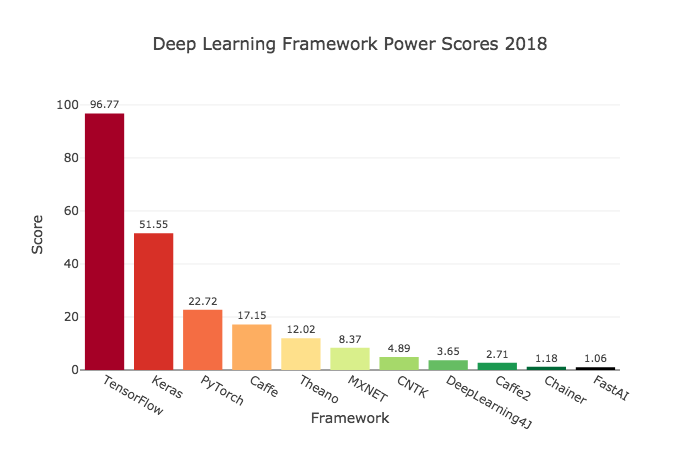

TensorFlow, Keras (and of course python) have been increasingly adopted across industries and research communities:

Deep learning frameworks ranking computed by Jeff Hale, based on 11 data sources across 7 categories

Deep learning frameworks ranking computed by Jeff Hale, based on 11 data sources across 7 categories

TensorFlow has gradually increased its power score due to ease of use — it offers APIs for beginners and experts alike to quickly get into developing for desktop, mobile, web or cloud. For an easy introduction to TensorFlow see: (easy-tensorflow)

The Keras website explains why it’s user adoption rate has been soaring in 2018:

- Keras is an API designed for human beings, not machines. This makes Keras easy to learn and easy to use; however, this ease of use does not come at the cost of reduced flexibility.

- Keras models can be easily deployed across a greater range of platforms.

- Keras supports multiple backend engines such as TensorFlow, CNTK, and Theano.

- Keras has built-in support for multi-GPU data parallelism.

Since training and deployment are complicated and we want to keep it simple, I have divided this tutorial into 2 parts:

Part 1:

- Prepare your data for training.

- Train a deep learning model.

- Serve your model with TensorFlow Serving.

- Deploy to Heroku.

In order to benefits from this blog:

- You should be familiar with python.

- You should already have some understanding of what deep learning and neural network are.

Prepare data for training

One of the hardest parts of solving a deep leaning problem is having a well-prepared dataset. There are three general steps to preparing data:

- Identify Bias — Since models are trained on a predefined dataset, it is important to make sure you do not introduce bias.

In statistics, sampling bias is a bias in which a sample is collected in such a way that some members of the intended population are less likely to be included than others. It results in a biased sample, a non-random sample of a population (or non-human factors) in which all individuals, or instances, were not equally likely to have been selected. If this is not accounted for, results can be erroneously attributed to the phenomenon under study rather than to the method of sampling.

(Khan Academy provides great examples on how to identify bias in samples and surveys.)

2. Remove outliers from data.

Outliers are extreme values that deviate from other observations on data , they may indicate a variability in a measurement, experimental errors or a novelty. In other words, an outlier is an observation that diverges from an overall pattern on a sample.

3. Transform our dataset into a language a machine can understand — numbers. I will only focus in this tutorial, on transforming datasets as the other two points require a blog to cover them in full details.

To prepare a dataset you must of course first have a dataset. We are going to use the Fashion-MNIST dataset because it is already optimized and labeled for a classification problem.

(Read more about the Fashion-MNIST dataset here.)

The Fashion-MNIST dataset has 70,000 grayscale, (28x28px) images separated into the following categories:

+-----------+---------------------+ | Label | Description | +-----------+---------------------+ | 0 | T-shirt/top | | 1 | Trouser | | 2 | Pullover | | 3 | Dress | | 4 | Coat | | 5 | Sandal | | 6 | Shirt | | 7 | Sneaker | | 8 | Bag | | 9 | Ankle boot | +-----------+---------------------+

Fortunately, the majority of deep learning (DL) frameworks support Fashion-MNIST dataset out of the box, including Keras. To download the dataset yourself and see other examples you can link to the github repo — here.

from keras.datasets.fashion_mnist import load_data

# Load the fashion-mnist train data and test data(x_train, y_train), (x_test, y_test) = load_data()

# outputx_train shape: (60000, 28, 28) y_train shape: (60000,)x_test shape: (10000, 28, 28) y_test shape: (10000,)

By default load_data()function returns training and testing dataset.

It is essential to split your data into training and testing sets.

Training data: is used to train the Neural Network (NN)

Testing data : is used to validate and optimize the results of the Neural Network during the training phase, by tuning and re-adjust the hyperparameters.

Hyperparameter are parameters whose value is set before the learning process begins.

After training a Neural Network, we run the trained model against our validation dataset to make sure that the model is generalized and is not overfitting.

What is overfitting? :)

Overfitting means a model predicts the right result when it tests against training data, but otherwise fails to predict accurately. However, if a model predicts the incorrect result for the training data, this is called underfitting. For further explanation of overfitting and underfitting.

Thus, we use the validation dataset to detect overfitting or underfitting. But, most of the time we train the model multiple times in order to have a higher score in the training and validation datasets. Since we retrain the model based on the validation dataset result, we can end up overfitting not only in training dataset but also in the validation set. In order to avoid this, we use a third dataset that is never used during training, which is the testing dataset.

Here are some of the samples of the data

norm_x_train = x_train.astype('float32') / 255norm_x_test = x_test.astype('float32') / 255Normalize the data dimensions so that they are of approximately the same scale. In general, normalization makes very deep NN easier to train, especially in Convolutional and Recurrent neural network. Here are a nice explanation video and an article

Convert labels (y_train and y_test) to one hot encoding

from keras.utils import to_categorical

encoded_y_train = to_categorical(y_train, num_classes=10, dtype='float32')

encoded_y_test = to_categorical(y_test, num_classes=10, dtype='float32')

One hot encoding is a representation of categorical variables as binary vectors. Here is the full explanation if you would like to have a deep understanding, and do not hesitate to ask if you have a question

Resize images & convert to 3 channel (RGB)

MobileNet V2 model accepts one of the following formats: (96, 96), (128, 128), (160, 160),(192, 192), or (224, 224). In addition, the image has to be 3 channel (RGB) format. Therefore, We need to resize & convert our images. from (28 X 28) to (96 X 96 X 3).

Running the previous code in all our data, may eat up a lot of memory resources; therefore, we are going to use a generator. Python Generator is a function that returns an object (iterator) which we can iterate over (one value at a time).

Train a Deep Learning model

After we have split, normalized and converted the dataset, now we are going to train a model.

There are many techniques for training the model, we will only cover one of them, though I believe it is one of the most important methods or strategies, — Transfer Learning.

Transfer Learning

Transfer learning in deep learning means to transfer a knowledge from one domain to a similar one. In our example, I have chosen the MobileNet V2 model because it’s faster to train and small in size. And most important, MobileNet is pre-trained with ImageNet dataset.

ImageNet is an image dataset organized according to the WordNet hierarchy. Each meaningful concept in WordNet, possibly described by multiple words or word phrases, is called a “synonym set” or “synset”. There are more than 100,000 synsets in WordNet, majority of them are nouns (80,000+). In ImageNet, we aim to provide on average 1000 images to illustrate each synset. Images of each concept are quality-controlled and human-annotated.[2]

Since our dataset is kind of subset of the ImageNet dataset, then we are going to transfer the knowledge of this model onto our datasets. A nice article that explains this in more detail: A Gentle Introduction to Transfer Learning for Deep Learning

The below code is important to understand when working using Transfer Learning as a technique:

for layer in base_model.layers:

# trainable has to be false in order to freeze the layers layer.trainable = False # or True

As using a pre-trained model (e.g. MobileNetV2 in our case), you need to pay close a attention to a concept call Fine Tuning.

‘Fine Tuning’, generally, is when we freeze the weights of all the layers of the pre-trained neural networks (on dataset A [e.g. ImageNet]) except the penultimate layer and train the neural network on dataset B [e.g. Fashion-MNIST], just to learn the representations on the penultimate layer. We usually replace the last (softmax) layer with another one of our choice (depending on the number of outputs we need for the new problem.[3]

In our case, we have 10 classes, so we have the following

output_tensor = Dense(10, activation='softmax')(op)

When do we use need Fine Tuning?

- When you have small datasets (e.g. few 1000s)

- When the dataset used to train the pre-trained model is very similar to or the same as the new dataset.

Build & Train

from keras.optimizers import Adam

model = build_model()model.compile(optimizer=Adam(), loss='categorical_crossentropy', metrics=['categorical_accuracy'])

Now we build and compile the model. Some compilation definitions:

The Adam optimization algorithm is an extension to stochastic gradient descent that has recently seen broader adoption for deep learning applications in computer vision and natural language processing.[4]

A loss function (categorical_crossentropy) is a measure of how good a prediction model does in terms of being able to predict the expected outcome. [5]

categorical_accuracy is a metric function that is used to judge the performance of your model.[6]

train_generator = load_data_generator(norm_x_train, encoded_y_train, batch_size=64)

model.fit_generator( generator=train_generator, steps_per_epoch=900, verbose=1, epochs=5)

It is essential to the understand the following when training any deep learning model.

Epoch is when an entire dataset is passed forward and backward through the neural network only once. [7]

Batch Size is the total number of training examples present in a single batch [7]. And it goes a long with python generate mention previously

Iterations (steps_per_epoch) is the number of batches needed to complete one epoch [7].

For a more detailed understanding read — Epoch vs Batch Size vs Iterations.

94% accuracy after 5 epochs of training, but how do would we do in the test dataset:

test_generator = load_data_generator(norm_x_test, encoded_y_test, batch_size=64)model.evaluate_generator(generator=test_generator, steps=900, verbose=1)

86%, seems reasonable for the amount spent training — , 1 hour of CPU time.

Things to you could try to improve the accuracy

- Choose more epochs, 10 as an example.

- Try to freeze the layers layer.trainable = False.

- Choose a bigger batch_size, 128 as an example.

- Select different optimizer, Nadam, for instance. However, changing the optimizer sometimes does show much improvement in this case.

Save the model

Make sure you save the model because we are going to use in the next part

model_name = "tf_serving_keras_mobilenetv2"model.save(f"models/{model_name}.h5")Conclusion

We have prepared the fashion dataset for the MobileNetV2 model. Further, we used the MobileNet model as our base model for Transfer Learning.

In part 2 we are going to prepare the model to be server by TensorFlow Serving. Then we will deploy the model to Heroku.

Questions/suggestions? — Leave them below in the comments.

References

- A Comprehensive guide to Fine-tuning Deep Learning Models in Keras

- What is ImageNet?

- Transfer Learning and Fine Tuning: Let’s discuss

- Gentle Introduction to the Adam Optimization Algorithm for Deep Learning

- Regression Loss Functions All Machine Learners Should Know

- Usage of metrics

- Epoch vs Batch Size vs Iterations

Training and Deploying A Deep Learning Model in Keras MobileNet V2 and Heroku: A Step-by-Step… was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.

Disclaimer

The views and opinions expressed in this article are solely those of the authors and do not reflect the views of Bitcoin Insider. Every investment and trading move involves risk - this is especially true for cryptocurrencies given their volatility. We strongly advise our readers to conduct their own research when making a decision.