I have this conversation about twice a week. Someone has decided they want to take advantage of the benefits of serverless Azure Functions for an upcoming project, but when they start to lay out the architecture, a question pops up:

“Should we be using Azure Event Hubs, Queues, or Event Grid?”

It’s honestly a great question, and one with consequences. Each of these messaging technologies comes with its own set of behaviors that can impact your solution. In previous blogs, I’ve spent some time explaining Event Grid, Event Hubs ordering guarantees, and how to keep Event Hub stream processes resilient. In this blog, I want to tackle one other important aspect: throughput for variable workloads. Here’s the scenario I was faced with last week:

We have a solution where we drop in some thousands of records to get processed. Some records only take a few milliseconds to process, and others may take a few minutes. Right now we are pushing the messages to functions via Event Hubs and noticed some significant delays in getting all messages processed.

The problem here is subtle, but simple once you understand the behavior of each trigger. If you just have a pile of tasks that need to be completed, you likely want a queue. It’s not a perfect rule, but more often than not queues have the behavior you’re looking for. In fact, this blog will show that choosing the wrong messaging pipeline can result in close to an order-of-magnitude difference in processing time. Let’s take a look at a few reasons why.

Variability in size of each task

If you look at the problem the customer posed above, one key detail told me queues could be the best fit here.

“…Some records only take a few milliseconds to process, and others may take a few minutes.”

If I have a queue of images that need to be resized, a 100 KB image will resize a lot quicker than a 100 MB panoramic image. The big question is: “How does my processing pipeline respond to a long task?” It’s a bit like driving on a freeway. An incident has a much bigger impact on a one-lane road than on a five-lane road, and the same is true for your serverless pipelines.

Event Hubs behavior with variability in task time

Azure Event Hubs cares about two things, and it does a great job with both: ordering and throughput. It can receive and send a huge number of messages very quickly and will preserve order for those messages. Our team has posted previously on how Azure Event Hubs allowed us to process 100k messages a second in Azure Functions. The fact that I can consume a batch of up to 200 messages in every single function execution is a big reason for that. But those two core concerns, ordering and throughput, can also get in each other’s way.

> If you’re not already familiar with how Event Hubs preserves order, it may be worth reading this blog on ordering first and coming back.

Imagine I have a series of events that all land in the same Event Hub partition. Let’s say my Azure Function pulls a batch of 50 events from that partition. Events 1–9 process in a few milliseconds, but event 10 takes a few minutes. What happens to events 11–50? They are stuck. Event Hubs cares about ordering, so event 10 needs to complete before event 11 starts. If the events you are receiving are stock market events, you very much do care that a “buy” happened before a “sell”, so you’d be glad it’s holding the line.

But what if your scenario doesn’t care about ordering? What if events 11–50, or even 51–1,000, could be running while we wait for event 10 to complete? In that case, Event Hubs’ behavior is going to slow down your processing dramatically. Event Hubs will not process the next batch in a partition until the current batch, and every event in that batch, has completed. So one slow event can hold up the entire partition.
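To make the blocking concrete, here is a minimal sketch (in Python for brevity; the functions in this post were JavaScript). It assumes strictly in-order, one-at-a-time processing within a single partition and ignores checkpointing and retries. The 5 ms and 180 s durations stand in for "a few milliseconds" and "a few minutes", and `in_order_completion` is a hypothetical helper, not part of any Event Hubs SDK.

```python
from itertools import accumulate

def in_order_completion(durations):
    """Completion time (s) of each event in one partition when events must
    finish strictly in order: each event waits for every event ahead of it."""
    return list(accumulate(durations))

# Hypothetical batch of 50 events: event 10 takes 3 minutes (180 s),
# the other 49 take 5 ms each.
batch = [0.005] * 9 + [180.0] + [0.005] * 40
done = in_order_completion(batch)
print(f"event 9 done at  {done[8]:.3f} s")
print(f"event 11 done at {done[10]:.3f} s")
```

Event 11 needs only 5 ms of work, yet in this model it cannot finish until just after the 180 s mark, and every later event in the partition inherits the same delay.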

Queues behavior with variability in task time

What about queues? Queues don’t care as much about ordering¹. They care more about making sure that every message gets processed, and processed completely. Head into any government building and you will usually witness distributed queue processing in action. You have a single line of people who need something done, and a few desks of employees to help (hopefully more than one 😄). While some employees may have requests that take several minutes, the other employees keep grabbing the next person in line as soon as they are available. One long request isn’t going to stop all other work. Queue triggers behave the same way: whichever instance of your app is available to take on more work grabs the next task in line. No task depends on the completion of another task in that queue².
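The "desks" model can be sketched as competing consumers. This is a minimal sketch under stated assumptions: two workers, each taking the next message the moment it is free, with no batching, retries, or visibility timeouts. The 50-message batch reuses the hypothetical 5 ms / 180 s durations for the Event Hubs example, and `queue_completion` is a hypothetical helper.

```python
import heapq

def queue_completion(durations, workers):
    """Completion time (s) of each message when `workers` competing consumers
    each take the next message in line as soon as they become free."""
    free_at = [0.0] * workers  # equal values already form a valid min-heap
    finished = []
    for d in durations:                    # messages leave the queue in order...
        start = heapq.heappop(free_at)     # ...to whichever worker frees up first
        finished.append(start + d)
        heapq.heappush(free_at, start + d)
    return finished

# Hypothetical batch: message 10 takes 180 s, the other 49 take 5 ms each.
batch = [0.005] * 9 + [180.0] + [0.005] * 40
done = queue_completion(batch, workers=2)
print(f"message 11 done at {done[10]:.3f} s")
print(f"all fast messages done by {max(done[:9] + done[10:]):.3f} s")
```

With `workers=1` this reduces to strict in-order processing. With just a second desk, the slow message ties up one worker while the other clears every fast message in well under a second.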

Service Bus queues and Storage queues

As if deciding between Event Hubs, Event Grid, and queues weren’t hard enough, there’s a sub-decision on Storage queues vs. Service Bus queues. I’m not going to go very deep into this here; there’s a detailed doc that lays out the big differences. In short, Service Bus is an enterprise-grade message broker with some powerful features like sessions, managed dead-letter queues, and custom policies. Storage queues are super simple, super lightweight queues that are part of your storage account. I often stick with Storage queues because my Azure Function already has a storage account, but if you need anything more transactional and enterprise-grade (or topics), Service Bus is absolutely what you want.

Azure Functions and variable workloads

To illustrate the difference in behavior for variable workloads I ran a few tests. Here’s the experiment.

I put the same 1,000 messages into an Azure Event Hub, an Azure Service Bus queue, and an Azure Storage queue. 90% of these messages would take only one second to process, but 10% (sprinkled throughout) would take ten seconds. I then started three mostly identical JavaScript functions, one per service, to process those messages. The question is: which one would finish fastest?

Experiment results: Event Hubs

Shouldn’t be much of a surprise given the explanation above, but Event Hubs took roughly 8x longer than queues to process all 1,000 messages. So what happened? It actually processed about 90% of the messages by the time the queue function apps finished, but that last 10% had a very long tail. Turns out one instance got unlucky with its assigned partitions and had about 40 of those ten-second tasks. It was stuck waiting for long tasks to complete before moving on to the next set of events, which likely contained another ten-second task. The forced sequential processing for the final 10% was significant.

Experiment results: Storage queues and Service Bus queues

Storage queues and Service Bus queues were extremely close in overall time to process for this experiment (within a few seconds). There is one subtle difference I want to call out here, though. Behind the scenes in serverless there are instances, or worker nodes (you could even call them… servers), processing your work. While we handle the scale-out for you, Azure Functions gives you the ability to process multiple messages on a single node at one time. This is super helpful in a number of cases, as you get much better connection pooling, cache sharing, and resource utilization. For both Service Bus queues and Storage queues, the Functions runtime pulls down a batch of messages and processes them in parallel on each running instance of your app. The default concurrency for both kinds of queue triggers is 16 messages per instance. In my case the functions scaled out to many instances during the short test, so total concurrency was higher than 16 messages, but each instance was processing sets of 16.

This matters because Storage queues and Service Bus queues handle the batch slightly differently. The key distinction is: “How many messages have to be processed before the next message, or batch, is retrieved?”

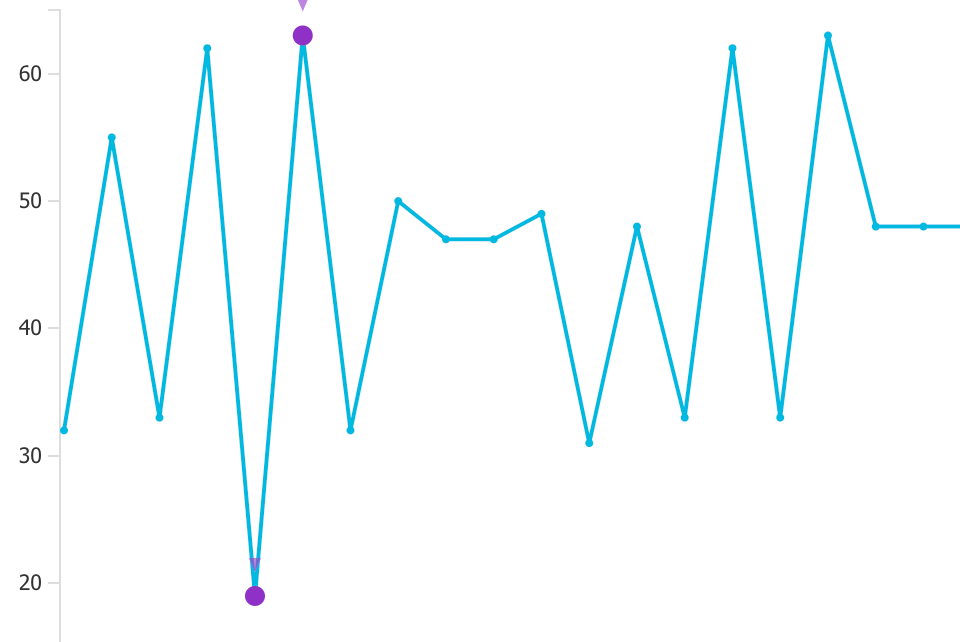

For Azure Storage queues there is a “new batch threshold” that has to be crossed before the next batch is retrieved. By default, this is half of the batch size, or 8. That means the host grabs 16 messages and starts processing them all concurrently. Once there are <= 8 messages in that batch left, the host grabs the next set of 16 messages and starts processing them (until the to-be-completed count gets to <= 8 messages again). In my case, since only 10% of messages were slow, this threshold could generally be trusted to keep instance concurrency high. But you can still see the little bursts around the batch threshold in Application Insights analytics: the sharp jumps correlate with the processing batch size and with when new batches are retrieved.
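The fetch rule can be sketched as a small discrete-event model of one instance. This is an assumption-laden toy: fetches are treated as instantaneous, and polling back-off, visibility timeouts, and scale-out are ignored. `storage_queue_host` is a hypothetical name, not a Functions API; its `batch_size` and `new_batch_threshold` parameters only mirror the Storage queue trigger's `batchSize` and `newBatchThreshold` host.json settings.

```python
import heapq

def storage_queue_host(durations, batch_size=16, new_batch_threshold=None):
    """Toy model of one instance draining a Storage queue.

    Rule modeled: fetch `batch_size` messages, then fetch another batch
    whenever the number still in flight drops to `new_batch_threshold`
    or below. Returns (time the last message finishes, peak concurrency)."""
    if new_batch_threshold is None:
        new_batch_threshold = batch_size // 2  # default: half the batch size
    pending = list(reversed(durations))  # pop() now takes from the queue's front
    in_flight = []                       # min-heap of finish times
    clock, peak = 0.0, 0
    while pending or in_flight:
        if pending and len(in_flight) <= new_batch_threshold:
            for _ in range(min(batch_size, len(pending))):
                heapq.heappush(in_flight, clock + pending.pop())
            peak = max(peak, len(in_flight))
        else:
            clock = heapq.heappop(in_flight)  # advance to the next completion
    return clock, peak

# 48 one-second messages: batches overlap, so up to 16 + 8 = 24 run at once.
print(storage_queue_host([1.0] * 48))  # (3.0, 24)
```

In this model concurrency jumps by a whole batch at each fetch and then drains, which produces the sawtooth pattern. Slow messages only hold back the next fetch when more than `new_batch_threshold` of them are in flight at once. For example, with nine 10 s messages in the first batch, the next fetch waits until t = 10 s.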

Messages processed on a single instance grouped by 5 seconds


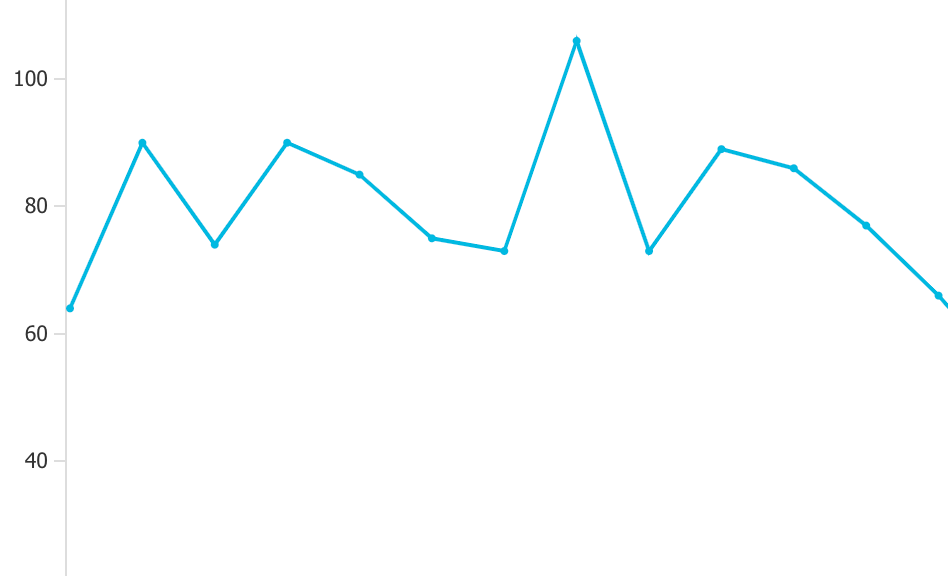

Azure Service Bus queues rely on the MessageHandler provided by the Service Bus SDK to pull new messages. The message handler has an option for max concurrency (set to 16 by default for Functions) and can automatically fetch more messages as needed. In my experiment you can see a much smoother rate of messages being processed concurrently. If one slow message was holding up one of the 16 concurrent executions, the handler could still keep cycling through messages on the other 15.
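The smoother curve can be illustrated with a minimal asyncio sketch of a message pump where every concurrency slot refills independently. This is a stand-in for the behavior described above, not the Service Bus SDK. `pump` is hypothetical, message handling is simulated with `asyncio.sleep` on scaled-down durations, and `max_concurrent_calls` only borrows its name from the handler's `MaxConcurrentCalls` option.

```python
import asyncio

async def pump(durations, max_concurrent_calls=16):
    """Toy message pump: `max_concurrent_calls` slots each take the next
    message as soon as their previous one finishes. Handling is simulated
    with asyncio.sleep(duration). Returns (peak concurrency, elapsed s)."""
    queue = asyncio.Queue()
    for d in durations:
        queue.put_nowait(d)
    running = peak = 0

    async def slot():
        nonlocal running, peak
        while True:
            try:
                d = queue.get_nowait()  # next message in line, if any
            except asyncio.QueueEmpty:
                return                  # nothing left: this slot stops
            running += 1
            peak = max(peak, running)
            await asyncio.sleep(d)      # stand-in for the real message handler
            running -= 1

    loop = asyncio.get_running_loop()
    start = loop.time()
    await asyncio.gather(*(slot() for _ in range(max_concurrent_calls)))
    return peak, loop.time() - start

# Scaled down: one 0.5 s message among 47 messages of 0.01 s each.
peak, elapsed = asyncio.run(pump([0.5] + [0.01] * 47))
print(peak, f"{elapsed:.2f} s")
```

The slow message ties up one slot for the whole run, while the other 15 slots work through the 47 fast messages. The run therefore takes roughly as long as the slow message, not the 0.97 s sum of all durations. Because there are no batch boundaries, concurrency stays at the limit until the queue runs dry instead of jumping in steps.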

Same chart as above, but much smoother rate of messages being processed


So, as expected, queues of both flavors performed much better when the problem set is “I have a bunch of variable tasks that need to be executed in any order.”

What about Event Grid?

Why wasn’t Event Grid included in this? Event Grid’s throughput behavior is very different from these other options: Event Grid is push-based (via HTTPS), while these are pull-based. If I get 1,000 Event Grid events, my functions get poked by 1,000 concurrent HTTPS requests, and they have to scale, queue, and handle them as quickly as possible. Since Event Grid doesn’t guarantee ordering, I would expect it to perform closer to queues. That said, this customer specifically had some throughput considerations for downstream systems, so they wanted the flexibility to pull these messages at a more consistent clip, which Event Grid cannot provide (it will poke the function whether the function wants more or not).

The only other note I’ll make regarding Event Grid is that it is meant for processing events, not messages. For details on that distinction, check out this doc.

Hopefully, this experiment helps clarify that messaging options are not one-size-fits-all. Each comes with its own set of priorities and strengths, and it’s important to understand each when creating serverless architectures.

Footnotes

1. Queues may care about order, but for distributed computing, this is best achieved via sessions for better throughput and distribution.

2. See footnote 1 above 😅

Azure Functions: Choosing between queues and event hubs was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
