
HOW FAR HAVE WE COME IN TIME SERIES PREDICTION, FROM RNN TO LSTM

For a long time, people have looked for ways to solve the problem of time series prediction: forecasting the future values of a sequence from its past values.

Many approaches have been tried, including classical statistical methods, because accurate forecasts open up so many possibilities. Today a wide range of algorithms are applied to such problems, for example predicting stock prices or recognizing speech.

With the rise of deep learning, people naturally asked: why not apply machine learning algorithms and neural networks to this problem as well?

ENTER RECURRENT NEURAL NETWORKS!

Recurrent neural networks were developed in the 1980s. A recurrent neural network (RNN) is a class of artificial neural network in which connections between nodes form a directed graph along a temporal sequence. This allows it to exhibit temporal dynamic behavior. Unlike feedforward neural networks, RNNs can use their internal state (memory) to process sequences of inputs, which makes them a natural fit for time series prediction. RNNs come in many architectures, such as the fully recurrent architecture described below.
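To make "processing sequences of inputs" concrete for time series prediction, one common framing (an assumption here; the article does not prescribe it) cuts a series into fixed-length windows of past values, each paired with the value that comes right after it. The helper name `make_windows` and the toy price list are hypothetical, chosen only for illustration.

```python
def make_windows(series, window):
    """Split a 1-D series into (input window, next value) training pairs.

    Hypothetical helper: each input is `window` consecutive past values,
    and the target is the value that immediately follows them.
    """
    inputs, targets = [], []
    for start in range(len(series) - window):
        inputs.append(series[start:start + window])  # past values fed to the RNN in order
        targets.append(series[start + window])       # the value the model should predict
    return inputs, targets


# Made-up toy series, not real market data.
prices = [10.0, 10.5, 10.2, 10.8, 11.1, 11.0]
X, y = make_windows(prices, window=3)
for xs, target in zip(X, y):
    print(xs, "->", target)
```

Each input window would be fed to the RNN one value per time step, and the network is trained to output the paired target.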

Fully Recurrent Architecture

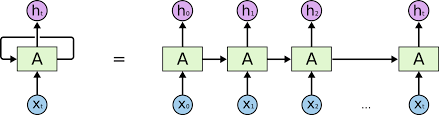

basic recurrent neural network

Basic RNNs are networks of neuron-like nodes organized into successive “layers”. Each node in a given layer has a one-way connection to every node in the next layer (that is, the layers are fully connected). Each node (neuron) has a time-varying real-valued activation, and each connection (synapse) has a modifiable weight that is learned during training. Nodes are either input nodes (receiving data from outside the network), output nodes (yielding results), or hidden nodes (which transform the data on its way from input to output).

At each time step, the hidden layer receives not only the current input but also its own hidden state from the previous time step. Because the previous hidden state is one of its inputs, the network carries information about what happened earlier in the sequence.
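The recurrence can be sketched in a few lines of NumPy. This is a minimal Elman-style RNN under assumed choices (a `tanh` activation, weight names `W_xh`, `W_hh`, `b_h`, small random weights); it shows only the forward pass, not training.

```python
import numpy as np


def rnn_forward(xs, W_xh, W_hh, b_h):
    """Run a simple RNN over a sequence and return every hidden state.

    xs has shape (T, input_dim): one row per time step.
    """
    h = np.zeros(W_hh.shape[0])  # initial hidden state h_0 = 0
    states = []
    for x_t in xs:
        # The new state combines the current input with the previous hidden state.
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        states.append(h)
    return np.stack(states)


rng = np.random.default_rng(0)
input_dim, hidden_dim, T = 1, 4, 5
W_xh = rng.normal(scale=0.5, size=(hidden_dim, input_dim))
W_hh = rng.normal(scale=0.5, size=(hidden_dim, hidden_dim))
b_h = np.zeros(hidden_dim)

xs = np.linspace(0.0, 1.0, T).reshape(T, input_dim)  # toy input sequence
H = rnn_forward(xs, W_xh, W_hh, b_h)
print(H.shape)  # (5, 4)
```

Because `h` is reused at every step, changing an early input changes all later hidden states; that carried-over state is the network's "memory".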

a simpler representation of this architecture

The Problem

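Every design has trade-offs, and RNNs are no exception. The very feature described above, feeding the previous hidden state back in at every step, is also the source of their biggest drawback. RNNs are trained with backpropagation through time, and the gradient that reaches an early time step is a product of one factor per intervening step. When those factors are smaller than 1, the product shrinks exponentially, so after enough steps the influence of early inputs effectively vanishes and the network struggles to learn long-range dependencies. This is called the vanishing gradient problem.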
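A toy calculation makes the shrinking product visible. The sketch below assumes a scalar RNN, h_t = tanh(w·h_{t-1} + u·x_t), with hypothetical weights `w` and `u`, and computes the derivative of the final state with respect to the initial one. By the chain rule each step contributes a factor w·(1 − h_t²), so with |w| < 1 the product decays at least as fast as |w|^T. (If the factors were consistently larger than 1, the product would instead blow up, which is the related exploding gradient problem.)

```python
import math


def gradient_through_time(w, u, xs):
    """d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1} + u * x_t), h_0 = 0."""
    h, grad = 0.0, 1.0
    for x in xs:
        h = math.tanh(w * h + u * x)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - h_t^2); one factor per step.
        grad *= w * (1.0 - h * h)
    return grad


xs = [0.5] * 50
for steps in (1, 5, 10, 20, 50):
    print(steps, gradient_through_time(w=0.9, u=1.0, xs=xs[:steps]))
```

Running it prints a gradient that drops steadily as the number of steps grows, which is exactly why an early input has almost no say in the weight updates for a late prediction.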

Many other RNN architectures were proposed, but the one that gained the most traction for time series prediction was... LSTM.

LSTM

LSTM (Long Short-Term Memory) is a more advanced RNN architecture in which the simple recurrent unit is replaced by an LSTM cell: a memory cell controlled by gates that decide what to store, what to forget, and what to output. A network built from these cells is sometimes called an LSTM network; in this post we simply call it LSTM.

LSTM was designed to avoid the vanishing gradient problem described above. It is usually augmented with recurrent gates called “forget” gates. Because the cell state is updated additively instead of being squashed through a nonlinearity at every step, LSTM helps keep backpropagated errors from vanishing or exploding; errors can instead flow backwards through many time steps of the unrolled network. As a result, LSTM can in principle learn tasks that require memories of events that happened thousands or even millions of discrete time steps earlier.
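A single LSTM step can be sketched in NumPy as follows. This is a minimal version of the widely used cell with forget, input and output gates; the packed weight layout (one matrix `W` whose four row blocks hold the forget, input, candidate and output parameters) and the forget-bias value of 1.0 are assumptions of this sketch, and other implementations may order or split the parameters differently.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, b):
    """One step of an LSTM cell with forget, input and output gates.

    W has shape (4*H, input_dim + H) and b has shape (4*H,); the four row
    blocks are assumed to be forget, input, candidate, output (in that order).
    """
    H = h_prev.shape[0]
    z = W @ np.concatenate([x_t, h_prev]) + b
    f = sigmoid(z[0:H])          # forget gate: how much of c_prev to keep
    i = sigmoid(z[H:2 * H])      # input gate: how much new information to write
    g = np.tanh(z[2 * H:3 * H])  # candidate values to write into the cell
    o = sigmoid(z[3 * H:4 * H])  # output gate: how much of the cell to expose
    c = f * c_prev + i * g       # additive cell update (the long-term memory)
    h = o * np.tanh(c)           # new hidden state, also the cell's output
    return h, c


rng = np.random.default_rng(0)
input_dim, H = 1, 3
W = rng.normal(scale=0.5, size=(4 * H, input_dim + H))
b = np.zeros(4 * H)
b[0:H] = 1.0  # common heuristic: start with the forget gate mostly open

h, c = np.zeros(H), np.zeros(H)
for x in [0.1, 0.4, -0.2, 0.3]:  # toy input sequence
    h, c = lstm_step(np.array([x]), h, c, W, b)
print(h, c)
```

The line `c = f * c_prev + i * g` is the key design choice: holding the gates fixed, the derivative of `c` with respect to `c_prev` is just `f`, so when the forget gate stays near 1 the error signal can pass through many steps without being repeatedly shrunk the way it is in the plain RNN.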

The Conclusion

The conclusion is simply that we still have a long way to go in evolving this family of algorithms, so that they can handle more tasks and help make the world a better place.

HOW FAR HAVE WE GOTTEN IN TIME SERIES PREDICTION, FROM RNN TO LSTM was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.

Disclaimer

The views and opinions expressed in this article are solely those of the authors and do not reflect the views of Bitcoin Insider. Every investment and trading move involves risk - this is especially true for cryptocurrencies given their volatility. We strongly advise our readers to conduct their own research when making a decision.