
[Click here for the German text version.]


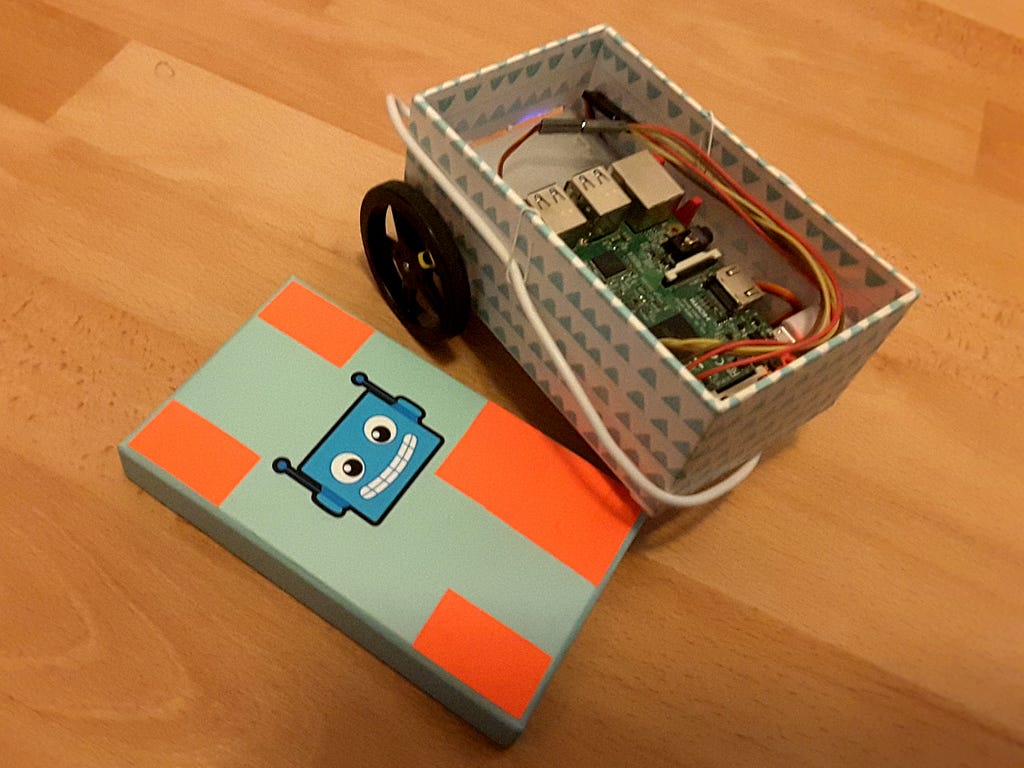

My new project is finished. Normally I build websites… This time, I wanted to create something real! :-)

Now I drive my Raspberry Pi through my flat… using two continuous rotation servo motors, wheels, wireless power, and smartphone camera control.

What did I need?

- 1x Raspberry Pi

- 2x Continuous Rotation Micro Servos

- 2x matching wheels

- 6x Jumper cables m/f — I got these from my father. ;-)

- 1x USB Power Bank from the drawer — I have this one.

- 1x nice box with cover in just the right size — Thanks to my wife. ;-)

- Colorful marker stickers to find the robot in the camera image

- 1x smashed table tennis ball as a third leg

- Decoration, scissors, tape, …

How does it work?

This is what I did…

1. Control the servo motors.

Servo motors have three inputs: 5 V power (red), ground (brown), and signal (yellow). Connect these to the GPIO pins of the Raspberry Pi using jumper cables. Click here for the pinouts. The header has two 5 V pins at the top right, and ground is available on several pins. For the signal pins I chose GPIO 17 and GPIO 27, which lie next to each other.

The signal is transferred with pulse-width modulation (PWM): it switches rapidly between 0 and 1, and the duration (the “width”) of each 1 pulse sets the velocity setpoint.

- 1500 µs: stand still

- 1500 µs + 800 µs = 2300 µs: full speed counterclockwise

- 1500 µs − 800 µs = 700 µs: full speed clockwise
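The mapping from a speed setpoint to a pulse width is simple arithmetic. Here is a minimal Java sketch, assuming a setpoint from -800 to 800 where positive values mean counterclockwise; the class ServoPulse and the method toPulseWidth are hypothetical names, not part of the project's code.

```java
/** Hypothetical helper: converts a speed setpoint into a servo pulse width. */
final class ServoPulse {
    static final int NEUTRAL_US = 1500;   // pulse width at which the servo stands still
    static final int MAX_OFFSET_US = 800; // offset for full speed in either direction

    /**
     * @param setpoint -800 (full speed clockwise) to 800 (full speed counterclockwise);
     *                 values outside this range are clamped
     * @return pulse width in microseconds, between 700 and 2300
     */
    static int toPulseWidth(int setpoint) {
        int clamped = Math.max(-MAX_OFFSET_US, Math.min(MAX_OFFSET_US, setpoint));
        return NEUTRAL_US + clamped;
    }

    public static void main(String[] args) {
        System.out.println(toPulseWidth(0));    // 1500: stand still
        System.out.println(toPulseWidth(800));  // 2300: full speed counterclockwise
        System.out.println(toPulseWidth(-800)); // 700: full speed clockwise
    }
}
```

Clamping keeps every value inside the 700 to 2300 µs band listed above, which also lies within the 500 to 2500 µs range that pigs accepts for servo pulses.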

Now I can connect to the Raspberry Pi via SSH and test the servo motors from the command line. The pigs program is a neat command-line tool for this purpose (pigs man page).

- sudo pigpiod: start the Pi GPIO daemon.

- pigs m 17 w: set GPIO 17 to write (output) mode.

- pigs servo 17 2300: full speed ahead!

- pigs servo 17 700: full speed backwards!

- pigs servo 17 0: stop!

- pigs m 17 w m 27 w servo 17 2300 servo 27 700 mils 1000 servo 17 0 servo 27 0: a useful command sequence. First set GPIOs 17 and 27 to write mode, then run both motors at full speed in opposite directions, wait 1000 milliseconds, and finally stop both motors.
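The last command sequence follows a fixed pattern, so it can be generated from a few parameters. A minimal Java sketch that reproduces that sequence for GPIO 17 and 27; the class PigsSequence and the method timedMove are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper that assembles the pigs arguments for a timed move. */
final class PigsSequence {
    /**
     * Builds: both GPIOs to write (output) mode, start both servos,
     * wait the given time (mils), then switch the servo pulses off.
     */
    static List<String> timedMove(int leftGpio, int leftPulseUs,
                                  int rightGpio, int rightPulseUs, int millis) {
        List<String> args = new ArrayList<>();
        add(args, "m", leftGpio, "w", "m", rightGpio, "w");
        add(args, "servo", leftGpio, leftPulseUs, "servo", rightGpio, rightPulseUs);
        add(args, "mils", millis);
        add(args, "servo", leftGpio, 0, "servo", rightGpio, 0);
        return args;
    }

    /** Appends each token as a separate string argument. */
    private static void add(List<String> args, Object... tokens) {
        for (Object token : tokens) {
            args.add(String.valueOf(token));
        }
    }

    public static void main(String[] args) {
        // prints: m 17 w m 27 w servo 17 2300 servo 27 700 mils 1000 servo 17 0 servo 27 0
        System.out.println(String.join(" ", timedMove(17, 2300, 27, 700, 1000)));
    }
}
```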

2. Pass HTTP commands to the servos.

[Click here for the source code.]

Next, I exposed the commands over HTTP. As a Java web developer, I was on home turf here.

I run a Tomcat 8 Java web server on the Raspberry Pi. It hosts my custom Java Web Archive (WAR). An HttpServlet handles the HTTP requests: it reads velocity setpoints from URL parameters and passes them to the pigs program via ProcessBuilder.
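A sketch of the ProcessBuilder step, assuming the pigs arguments have already been extracted from the request (the servlet part is omitted, and the class PigsRunner is hypothetical). Passing the arguments as a list rather than as one shell string means request parameters are never interpreted by a shell. pigpiod must already be running for pigs to succeed.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical wrapper that passes a list of arguments to the pigs program. */
final class PigsRunner {
    /** Prepends the program name; every argument stays a separate token (no shell involved). */
    static List<String> command(List<String> pigsArgs) {
        List<String> command = new ArrayList<>();
        command.add("pigs");
        command.addAll(pigsArgs);
        return command;
    }

    /** Starts pigs, waits for it to finish and returns its exit code. */
    static int run(List<String> pigsArgs) throws IOException, InterruptedException {
        Process process = new ProcessBuilder(command(pigsArgs))
                .inheritIO() // pigs output and errors go to the server's console
                .start();
        return process.waitFor();
    }

    // Example on the Pi: java PigsRunner servo 17 0
    public static void main(String[] args) throws IOException, InterruptedException {
        System.exit(run(Arrays.asList(args)));
    }
}
```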

The second version is a little bit more sophisticated and understands command sequences:

- left <-800 … 0 … 800> — to control the left wheel.

- right <-800 … 0 … 800> — to control the right wheel.

- wait <milliseconds> — to wait / move on for the specified time.

- stop — as a short form for left 0 right 0.

- clear — to clear all previous commands.

An example request:

http://…/wheels?x=clear+left+800+right+800+wait+1000+stop
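The post does not show how the servlet translates such a sequence. One possible translation into pigs arguments, sketched in Java under these assumptions: the left wheel is on GPIO 17, the right wheel on GPIO 27, the right servo is mounted mirrored so its setpoint is inverted, and both GPIOs have already been set to write mode. The class WheelCommands is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical translator from the wheel command language to pigs arguments. */
final class WheelCommands {
    static final int LEFT_GPIO = 17;  // assumption: left wheel servo signal
    static final int RIGHT_GPIO = 27; // assumption: right wheel servo signal

    static List<String> toPigsArgs(String sequence) {
        List<String> args = new ArrayList<>();
        String[] tokens = sequence.trim().split("\\s+");
        for (int i = 0; i < tokens.length; i++) {
            switch (tokens[i]) {
                case "clear":
                    args.clear(); // forget everything parsed so far
                    break;
                case "left":
                    addServo(args, LEFT_GPIO, 1500 + speed(tokens, ++i));
                    break;
                case "right":
                    // assumption: the right servo is mirrored, so its direction is inverted
                    addServo(args, RIGHT_GPIO, 1500 - speed(tokens, ++i));
                    break;
                case "wait":
                    args.add("mils");
                    args.add(String.valueOf(Integer.parseInt(tokens[++i])));
                    break;
                case "stop": // short form for "left 0 right 0"
                    addServo(args, LEFT_GPIO, 1500);
                    addServo(args, RIGHT_GPIO, 1500);
                    break;
                default:
                    throw new IllegalArgumentException("Unknown command: " + tokens[i]);
            }
        }
        return args;
    }

    /** Reads the value after a wheel command and checks the -800..800 range. */
    private static int speed(String[] tokens, int index) {
        int value = Integer.parseInt(tokens[index]);
        if (value < -800 || value > 800) {
            throw new IllegalArgumentException("Speed out of range: " + value);
        }
        return value;
    }

    private static void addServo(List<String> args, int gpio, int pulseUs) {
        args.add("servo");
        args.add(String.valueOf(gpio));
        args.add(String.valueOf(pulseUs));
    }

    public static void main(String[] args) {
        // prints: servo 17 2300 servo 27 700 mils 1000 servo 17 1500 servo 27 1500
        System.out.println(String.join(" ", toPigsArgs("clear left 800 right 800 wait 1000 stop")));
    }
}
```

In a servlet, request.getParameter("x") already decodes the + signs of the query string into spaces, so the resulting list could be handed to pigs in a single call.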

3. Build a simple remote control.

[Click here for the source code.]

The simple remote control directly sets the speeds of the left and right wheels depending on where I click or touch. When the click is released, the robot stops. This way, the Pi can be navigated… Yay!
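The post does not say exactly how a touch position becomes two wheel speeds. One common mapping for a differential drive, shown here purely as an assumption, uses the vertical offset from the image center as forward speed and the horizontal offset as turn rate, then mixes both per wheel. TouchMixer and its parameters are hypothetical.

```java
import java.util.Arrays;

/** Hypothetical touch-to-wheel mapping for a differential drive. */
final class TouchMixer {
    static final int MAX_SPEED = 800; // setpoint range of the HTTP interface

    /**
     * @param x horizontal offset from the image center, -1 (left edge) to 1 (right edge)
     * @param y vertical offset from the image center, -1 (bottom) to 1 (top)
     * @return {left, right} wheel setpoints, each clamped to -800..800
     */
    static int[] wheelSpeeds(double x, double y) {
        double forward = y * MAX_SPEED;
        double turn = x * MAX_SPEED; // right of center: left wheel faster, robot turns right
        return new int[] {clamp(forward + turn), clamp(forward - turn)};
    }

    private static int clamp(double speed) {
        return (int) Math.round(Math.max(-MAX_SPEED, Math.min(MAX_SPEED, speed)));
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(wheelSpeeds(0.5, 1.0))); // [800, 400]
    }
}
```

When the touch ends, the page would simply send stop.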

4. Capture camera, find colorful markers, calculate robot coordinate system from four known points.

[Click here for the source code.]

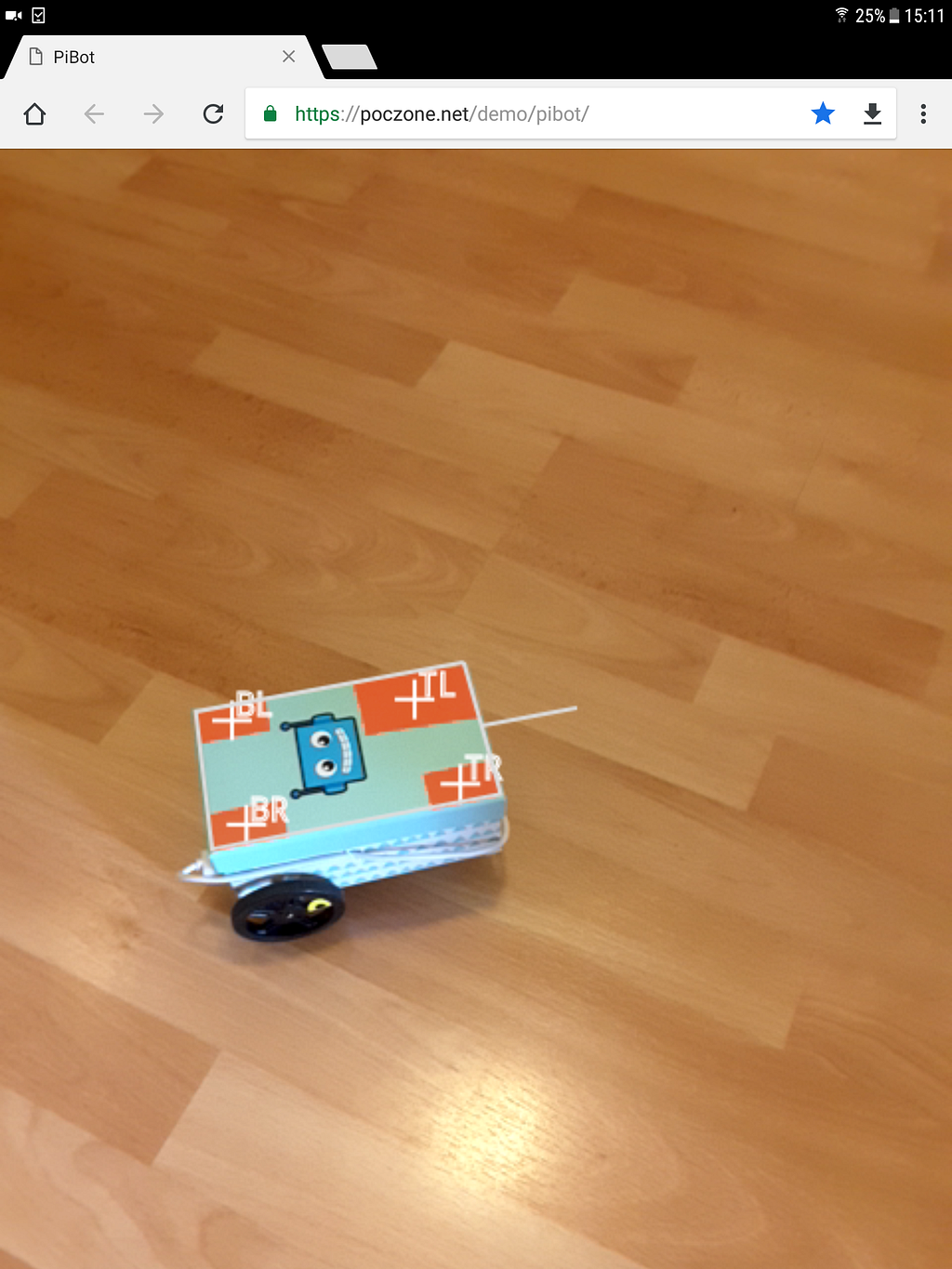

Detected robot cover coordinate system inside the web browser


But I want a cool controller!

This controller is meant to be opened in a smartphone or tablet web browser. It captures the camera video, and the user points the camera at the robot. The signal-orange marker stickers on the robot cover are found in the image, which gives the robot's position. From the positions of the stickers, the robot coordinate system can be calculated, even when the image is distorted by perspective. When I click somewhere on the image, I know: this is 210 mm ahead and 32 mm to the left.
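A standard way to get such a transform from four point correspondences is a homography with its last entry fixed to 1: that leaves eight unknowns, and each point pair contributes two linear equations, giving an 8x8 system. The following Java sketch solves it with Gauss-Jordan elimination. It illustrates that technique under this assumption and is not the author's browser code; the class Homography and the sample coordinates are hypothetical.

```java
/** Hypothetical sketch: perspective transform (homography) from four point pairs. */
final class Homography {
    private final double[] h; // h11, h12, h13, h21, h22, h23, h31, h32 (h33 fixed to 1)

    private Homography(double[] h) {
        this.h = h;
    }

    /** Maps src[i] = {x, y} onto dst[i] = {u, v} for i = 0..3. */
    static Homography fromPoints(double[][] src, double[][] dst) {
        double[][] a = new double[8][]; // augmented matrix [A | b]
        for (int i = 0; i < 4; i++) {
            double x = src[i][0], y = src[i][1], u = dst[i][0], v = dst[i][1];
            // u * (h31 x + h32 y + 1) = h11 x + h12 y + h13
            a[2 * i] = new double[] {x, y, 1, 0, 0, 0, -u * x, -u * y, u};
            // v * (h31 x + h32 y + 1) = h21 x + h22 y + h23
            a[2 * i + 1] = new double[] {0, 0, 0, x, y, 1, -v * x, -v * y, v};
        }
        return new Homography(solve(a));
    }

    /** Maps a point and divides by the projective coordinate w. */
    double[] apply(double x, double y) {
        double w = h[6] * x + h[7] * y + 1;
        return new double[] {
            (h[0] * x + h[1] * y + h[2]) / w,
            (h[3] * x + h[4] * y + h[5]) / w
        };
    }

    /** Gauss-Jordan elimination with partial pivoting on an n x (n + 1) matrix. */
    private static double[] solve(double[][] a) {
        int n = a.length;
        for (int col = 0; col < n; col++) {
            int pivot = col;
            for (int row = col + 1; row < n; row++) {
                if (Math.abs(a[row][col]) > Math.abs(a[pivot][col])) {
                    pivot = row;
                }
            }
            if (Math.abs(a[pivot][col]) < 1e-12) {
                throw new IllegalArgumentException("Degenerate points, e.g. three collinear");
            }
            double[] tmp = a[col];
            a[col] = a[pivot];
            a[pivot] = tmp;
            for (int row = 0; row < n; row++) { // clear this column in all other rows
                if (row == col) {
                    continue;
                }
                double factor = a[row][col] / a[col][col];
                for (int k = col; k <= n; k++) {
                    a[row][k] -= factor * a[col][k];
                }
            }
        }
        double[] result = new double[n];
        for (int i = 0; i < n; i++) {
            result[i] = a[i][n] / a[i][i];
        }
        return result;
    }

    public static void main(String[] args) {
        double[][] image = {{10, 10}, {200, 30}, {180, 220}, {20, 190}}; // made-up pixels
        double[][] robot = {{0, 0}, {100, 0}, {100, 100}, {0, 100}};      // made-up millimeters
        double[] p = fromPoints(image, robot).apply(100, 100);
        System.out.println(p[0] + " mm, " + p[1] + " mm");
    }
}
```

Built from image points to robot points, it maps a click to robot coordinates; a second instance built with the arguments swapped gives the reverse direction, e.g. for drawing a frame around the robot.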

In more detail, the procedure comes down to these steps:

- Capture the camera with navigator.getUserMedia. Pipe this into a <video> tag.

- Render snapshot images to a <canvas> and collect the current pixel data.

- Detect signal-orange pixels. This works best in the HSV color space with a high tolerance on value (brightness), to cope with light and shadow.

- Find connected islands of the signal color with a minimum size via a flood fill algorithm, and find their midpoints by averaging the pixel positions.

- Sort the islands by size in descending order.

- The markers are designed so that the biggest marker is at the front left. The four biggest islands can then be ordered so that they go around clockwise. Voilà! Front-left, front-right, back-right, back-left.

- Now we have four known points in the image, and we know their coordinates in the robot coordinate system. With this clever approach, we can turn this into a system of linear equations and solve it. We get the matrices that transform points from image to robot coordinates and vice versa.

- Then I draw a frame around the robot and a spike ahead as a sign that I detected the correct coordinate system — see the image above.

- When the user clicks on the image, I know how many millimeters ahead or behind and left or right the target is…

- This is passed to the robot control with HTTP…
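The detection steps can be sketched in Java as well. This is an illustration, not the author's JavaScript: pixels are assumed to be packed 0xRRGGBB ints (the RGBA bytes from a canvas would first have to be packed this way), the orange hue band and thresholds are guesses that would need tuning, and the flood fill uses a queue with four-connected neighbors to avoid deep recursion. MarkerFinder is a hypothetical name.

```java
import java.awt.Color;
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Hypothetical sketch: finds signal-orange islands in an image of packed 0xRRGGBB pixels. */
final class MarkerFinder {
    private static final int[] DX = {-1, 1, 0, 0};
    private static final int[] DY = {0, 0, -1, 1};

    /** A connected island of matching pixels and its midpoint (average pixel position). */
    static final class Island {
        final int size;
        final double centerX;
        final double centerY;

        Island(int size, double centerX, double centerY) {
            this.size = size;
            this.centerX = centerX;
            this.centerY = centerY;
        }
    }

    /** Assumed thresholds: orange hue band, clearly saturated, very tolerant on value. */
    static boolean isSignalOrange(int rgb) {
        float[] hsv = Color.RGBtoHSB((rgb >> 16) & 0xFF, (rgb >> 8) & 0xFF, rgb & 0xFF, null);
        float hueDegrees = hsv[0] * 360f;
        return hueDegrees >= 5f && hueDegrees <= 35f // orange, guessed band
                && hsv[1] >= 0.5f                    // saturated, not gray
                && hsv[2] >= 0.2f;                   // bright and shadowed pixels alike
    }

    /** Flood-fills each matching island, drops those below minSize, sorts largest first. */
    static List<Island> findIslands(int[] rgb, int width, int height, int minSize) {
        boolean[] visited = new boolean[width * height];
        List<Island> islands = new ArrayList<>();
        ArrayDeque<Integer> queue = new ArrayDeque<>();
        for (int start = 0; start < width * height; start++) {
            if (visited[start] || !isSignalOrange(rgb[start])) {
                continue;
            }
            visited[start] = true;
            queue.add(start);
            int size = 0;
            long sumX = 0;
            long sumY = 0;
            while (!queue.isEmpty()) {
                int p = queue.poll();
                int x = p % width;
                int y = p / width;
                size++;
                sumX += x;
                sumY += y;
                for (int d = 0; d < 4; d++) { // four-connected neighbors
                    int nx = x + DX[d];
                    int ny = y + DY[d];
                    if (nx < 0 || nx >= width || ny < 0 || ny >= height) {
                        continue;
                    }
                    int q = ny * width + nx;
                    if (!visited[q] && isSignalOrange(rgb[q])) {
                        visited[q] = true;
                        queue.add(q);
                    }
                }
            }
            if (size >= minSize) {
                islands.add(new Island(size, (double) sumX / size, (double) sumY / size));
            }
        }
        islands.sort(Comparator.comparingInt((Island island) -> island.size).reversed());
        return islands;
    }
}
```

The four largest islands returned would then be ordered clockwise, starting from the biggest (front-left) marker.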

5. Command: Turn 32 degrees, drive 240mm ahead.

The remaining part is to execute commands like this: Turn 32 degrees clockwise, drive 240mm ahead.
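Before such a command can be sent, the clicked target (millimeters ahead and to the left) has to become a turn angle and a distance. A minimal Java sketch of that conversion, assuming positive angles mean counterclockwise (a turn to the left); TargetCommand is a hypothetical name.

```java
/** Hypothetical conversion of a clicked target into a "turn, then drive" command. */
final class TargetCommand {
    final double turnDegrees; // positive: counterclockwise (left), negative: clockwise (right)
    final double distanceMm;

    private TargetCommand(double turnDegrees, double distanceMm) {
        this.turnDegrees = turnDegrees;
        this.distanceMm = distanceMm;
    }

    /**
     * @param aheadMm target distance ahead (negative: behind the robot)
     * @param leftMm  target distance to the left (negative: to the right)
     */
    static TargetCommand toward(double aheadMm, double leftMm) {
        return new TargetCommand(
                Math.toDegrees(Math.atan2(leftMm, aheadMm)), // direction of the target
                Math.hypot(aheadMm, leftMm));                // straight-line distance
    }

    public static void main(String[] args) {
        TargetCommand c = toward(210, 32); // the click example from the text
        System.out.println("turn " + c.turnDegrees + " degrees, drive " + c.distanceMm + " mm");
    }
}
```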

Driving ahead is simple: just set the same speed on both wheels (one wheel has to be inverted due to the setup). I tried to read the wheel speeds from the datasheet. However, the sheet only listed maximum values, and the speeds I calculated from it turned out to be inaccurate. So I put the Pi on a book and, at the speed I wanted, measured the milliseconds for one and for 10 wheel revolutions. I know the wheel circumference, so I can easily calculate how long to drive for a given distance. Ahead: check.
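The drive-time calculation from such a calibration is a simple proportion. A Java sketch, assuming the measured time for ten revolutions is divided by ten to average out timing error; DriveTiming, its parameters, and the sample numbers are hypothetical, and real values come from your own measurement.

```java
/** Hypothetical drive-time calculation from a calibration measurement. */
final class DriveTiming {
    /**
     * @param distanceMm           distance to drive straight ahead
     * @param wheelCircumferenceMm distance covered by one wheel revolution
     * @param msForTenRevolutions  measured at the chosen speed, e.g. with the robot on a book
     * @return how long both servos should run, in milliseconds
     */
    static long driveMillis(double distanceMm, double wheelCircumferenceMm,
                            double msForTenRevolutions) {
        double msPerRevolution = msForTenRevolutions / 10.0; // averaged over ten turns
        double revolutions = distanceMm / wheelCircumferenceMm;
        return Math.round(revolutions * msPerRevolution);
    }

    public static void main(String[] args) {
        double circumference = Math.PI * 40.0; // made-up 40 mm wheel diameter
        System.out.println(driveMillis(240, circumference, 9000)); // made-up calibration value
    }
}
```

The circumference is π times the wheel diameter; the result could serve as the wait value of a command sequence.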

Turning around the center works like this: drive one wheel forward and the other wheel backwards at the same speed. Again, the calculation approach did not work very well, mainly due to friction. So I measured the time needed for a full 360-degree turn. Depending on the desired angle, I rotate for the corresponding fraction of that time. The friction is a bit different every time, so the rotation might be slightly off, but the error is small and acceptable for my purposes. Rotation: check.
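The rotation time is the same kind of proportion, based on the measured time for a full turn. A Java sketch, assuming positive angles mean counterclockwise and that speeds follow the -800 to 800 setpoint convention of the HTTP interface, with positive values driving a wheel forward; TurnTiming and the 4000 ms calibration value are hypothetical.

```java
import java.util.Arrays;

/** Hypothetical turn-on-the-spot timing from a measured full rotation. */
final class TurnTiming {
    /**
     * @param degrees       desired rotation; only its magnitude matters for the time
     * @param msForFullTurn measured time for a 360 degree turn at the chosen speed
     */
    static long turnMillis(double degrees, double msForFullTurn) {
        return Math.round(Math.abs(degrees) / 360.0 * msForFullTurn);
    }

    /**
     * Wheel setpoints {left, right} for turning on the spot: same speed, opposite directions.
     * Positive degrees turn counterclockwise, so the left wheel runs backwards.
     */
    static int[] turnSpeeds(double degrees, int speed) {
        return degrees >= 0 ? new int[] {-speed, speed} : new int[] {speed, -speed};
    }

    public static void main(String[] args) {
        // 32 degrees clockwise, with a made-up 4000 ms calibration for a full turn
        System.out.println(turnMillis(-32, 4000) + " ms at "
                + Arrays.toString(turnSpeeds(-32, 400)));
    }
}
```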

Voilà!

And here it is, my Raspberry Pi on wheels with camera control:

This was a very nice project! I was glad to apply my knowledge of differential-drive robots from my robotics studies to my own Raspberry Pi. It was a lot of fun!

Controlling the servo motors was easier than expected.

Because the Pi has Wi-Fi and can run a web server, I was quickly on familiar ground. Thanks to HTML5 camera, video, and canvas support, an image-detection-based remote control can run on a smartphone or tablet nowadays, and it works really smoothly.

Also, I had a lot of fun getting lost in the Adafruit online shop and learning system! There, you can get great inspiration for hardware projects of any kind. The package was shipped from New York to Germany in three days — awesome!

Let’s see what I build next… :-)

My Raspberry Pi on 2 Wheels was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
