
I’m running @pythonetc, a Telegram channel about Python and programming in general. Here are the best posts of August 2018.

Factory method

If you create new objects inside your __init__, it may be better to pass them in as arguments and provide a factory method instead. This separates business logic from the technical details of how objects are created.

In this example, __init__ accepts a host and a port to construct a database connection:

```python
class Query:
    def __init__(self, host, port):
        self._connection = Connection(host, port)
```

A possible refactoring is:

```python
class Query:
    def __init__(self, connection):
        self._connection = connection

    @classmethod
    def create(cls, host, port):
        return cls(Connection(host, port))
```

This approach has at least these advantages:

- It makes dependency injection easy. You can do Query(FakeConnection()) in your tests.

- The class can have as many factory methods as needed; the connection may be constructed not only by host and port but also by cloning another connection, reading a config file or object, using the default, etc.

- Such factory methods can be turned into asynchronous functions; this is completely impossible for __init__.
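Here is a minimal sketch of those advantages together. Connection and FakeConnection are hypothetical stand-ins (no real database driver is involved), the config keys "host" and "port" are assumptions, and asyncio.sleep(0) merely stands in for whatever a real driver would await while connecting.

```python
import asyncio


class Connection:
    """Hypothetical stand-in for a real database connection."""

    def __init__(self, host, port):
        self.host = host
        self.port = port


class FakeConnection:
    """Hypothetical test double that never touches the network."""

    host = "fake"
    port = 0


class Query:
    def __init__(self, connection):
        # __init__ only stores what it is given: no construction logic here.
        self._connection = connection

    @classmethod
    def create(cls, host, port):
        return cls(Connection(host, port))

    @classmethod
    def from_config(cls, config):
        # Assumes a mapping with "host" and "port" keys.
        return cls(Connection(config["host"], config["port"]))

    @classmethod
    async def create_async(cls, host, port):
        # A real driver would await its connect() call here.
        await asyncio.sleep(0)
        return cls(Connection(host, port))


q1 = Query.create("localhost", 5432)
q2 = Query.from_config({"host": "db.example", "port": 5432})
q3 = asyncio.run(Query.create_async("localhost", 5432))
q4 = Query(FakeConnection())  # dependency injection in a test
```

Because __init__ only stores the connection, every creation strategy, including the asynchronous one, reduces to a plain classmethod that ends with cls(...).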

super vs next

The super() function allows referring to the base class. This can be extremely helpful in cases where a derived class wants to add something to a method's implementation instead of overriding it completely:

```python
from unittest import TestCase

class BaseTestCase(TestCase):
    def setUp(self):
        self._db = create_db()

class UserTestCase(BaseTestCase):
    def setUp(self):
        super().setUp()
        self._user = create_user()
```

The function's name doesn't mean "excellent" or "very good". The word super implies "above" in this context (as in superintendent). Despite what I said earlier, super() doesn't always refer to the base class; it can easily return a sibling. A more accurate name would be next(), since it delegates to the next class in the MRO (method resolution order).

```python
class Top:
    def foo(self):
        return 'top'

class Left(Top):
    def foo(self):
        return super().foo()

class Right(Top):
    def foo(self):
        return 'right'

class Bottom(Left, Right):
    pass

# prints 'right'
print(Bottom().foo())
```

Note that super() may produce different results depending on the object the method was originally called on, since the result depends on the MRO of that object's class:

```
>>> Bottom().foo()
'right'
>>> Left().foo()
'top'
```
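To make the "next in the MRO" behavior visible, here is a variation of the same hierarchy in which every class calls super() and records its own name; the chain() method is a hypothetical addition for illustration only.

```python
class Top:
    def chain(self):
        return ['Top']


class Left(Top):
    def chain(self):
        # super() here means "the class after Left in the MRO of type(self)".
        return ['Left'] + super().chain()


class Right(Top):
    def chain(self):
        return ['Right'] + super().chain()


class Bottom(Left, Right):
    def chain(self):
        return ['Bottom'] + super().chain()


print([cls.__name__ for cls in Bottom.__mro__])  # ['Bottom', 'Left', 'Right', 'Top', 'object']
print(Bottom().chain())  # ['Bottom', 'Left', 'Right', 'Top']
print(Left().chain())    # ['Left', 'Top']
```

The same Left.chain() body reaches Right when called on a Bottom instance and Top when called on a Left instance, because the lookup follows the MRO of the instance's class, not the class where super() is written.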

Custom namespace for class creation

The creation of a class consists of two big steps. First, the class body is evaluated, just like any function body. Second, the resulting namespace (the one returned by locals()) is used by a metaclass (type by default) to construct the actual class object.

```python
class Meta(type):
    def __new__(meta, name, bases, ns):
        print(ns)
        return super().__new__(
            meta, name, bases, ns
        )

class Foo(metaclass=Meta):
    B = 2
```

The above code prints {'__module__': '__main__', '__qualname__': 'Foo', 'B': 2} (recent Python versions may add a few more dunder keys).

Obviously, if you do something like B = 2; B = 3, then the metaclass only knows about B = 3, since only that value is in ns. This limitation stems from the fact that a metaclass runs only after the body has been evaluated.

However, you can intervene in the evaluation by providing a custom namespace. By default, a plain dictionary is used, but you can supply a custom dictionary-like object via the metaclass's __prepare__ method.
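The two steps can be imitated by hand. This is a rough sketch that simplifies what the interpreter does internally (a real class body also stores __module__ and __qualname__), but it shows why the earlier value of B is lost before the metaclass ever sees it.

```python
# Step 1, imitated: each assignment in a class body stores into the
# namespace mapping, and a plain dict keeps only the last value per key.
ns = {}
ns['B'] = 2
ns['B'] = 3

# Step 2: the metaclass (plain type here) builds the class from that mapping.
Foo = type('Foo', (), ns)

print(ns['B'])  # 3
print(Foo.B)    # 3
```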

```python
class CustomNamespace(dict):
    def __setitem__(self, key, value):
        print(f'{key} -> {value}')
        return super().__setitem__(key, value)

class Meta(type):
    def __new__(meta, name, bases, ns):
        return super().__new__(
            meta, name, bases, ns
        )

    @classmethod
    def __prepare__(metacls, name, bases):
        return CustomNamespace()

class Foo(metaclass=Meta):
    B = 2
    B = 3
```

The output is the following:

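```
__module__ -> __main__
__qualname__ -> Foo
B -> 2
B -> 3
```

This is how enum.Enum protects itself from duplicate member names: its metaclass's __prepare__ returns a special dictionary that raises TypeError when a member name is reused.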
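Here is a small sketch of the same idea outside of enum: a namespace that refuses to rebind any name in the class body. NoDuplicatesNamespace and NoDuplicatesMeta are hypothetical names, and this is not how enum is implemented internally, just the same technique reduced to a few lines.

```python
import enum


class NoDuplicatesNamespace(dict):
    """Class-body namespace that rejects a second assignment to the same name."""

    def __setitem__(self, key, value):
        if key in self:
            raise TypeError(f'{key!r} is already defined')
        super().__setitem__(key, value)


class NoDuplicatesMeta(type):
    @classmethod
    def __prepare__(metacls, name, bases, **kwargs):
        # Called before the class body runs; the body writes into this object.
        return NoDuplicatesNamespace()


class Config(metaclass=NoDuplicatesMeta):
    TIMEOUT = 10


try:
    class Broken(metaclass=NoDuplicatesMeta):
        TIMEOUT = 10
        TIMEOUT = 20
except TypeError as error:
    print(error)  # 'TIMEOUT' is already defined

# enum.Enum rejects the same mistake (the exact message varies by version).
try:
    class Color(enum.Enum):
        RED = 1
        RED = 2
except TypeError as error:
    print(error)
```

The error is raised while the class body is still executing, so the broken class is never created.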

matplotlib

matplotlib is a complex and flexible Python plotting library. It's supported by a wide range of products, including Jupyter and PyCharm.

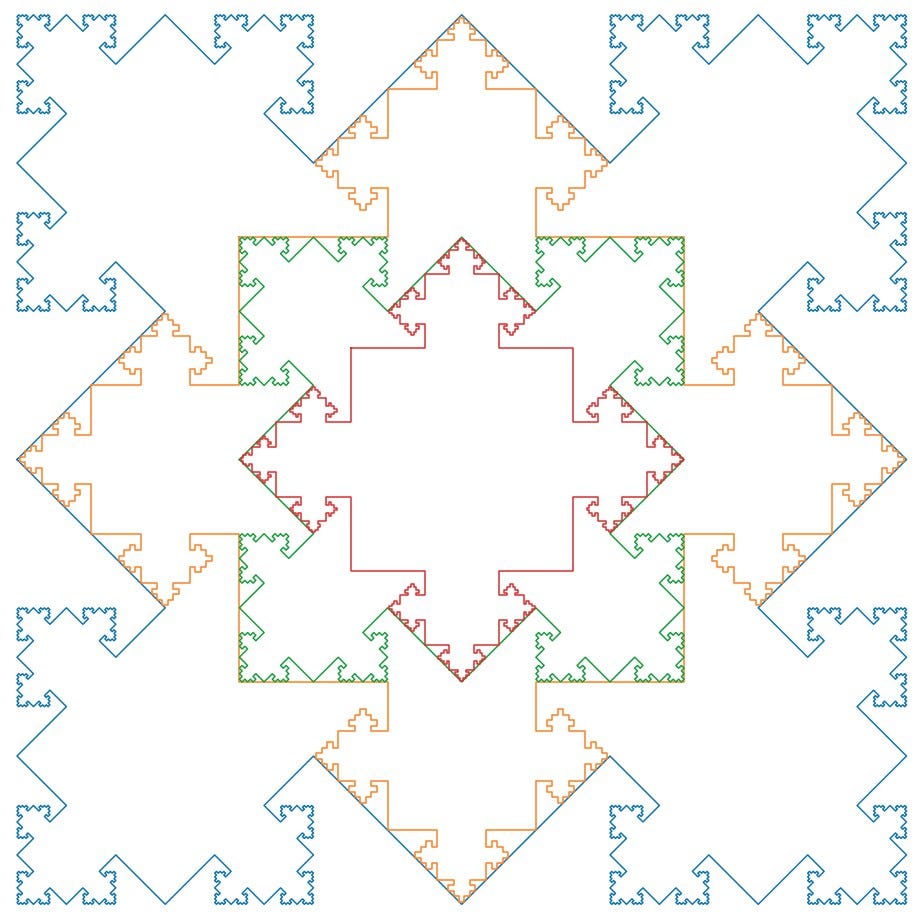

This is how you draw a simple fractal figure with matplotlib: https://repl.it/@VadimPushtaev/myplotlib (the result is shown in the image above).
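The repl.it code isn't reproduced here. As a separate illustration, not the author's figure, here is a sketch of a Koch snowflake; koch_snowflake and draw are hypothetical helper names, and only draw needs matplotlib installed.

```python
import cmath
import math


def koch_snowflake(order):
    """Return the closed outline of a Koch snowflake as complex points."""
    # Equilateral triangle listed counterclockwise: the interior is on the left.
    points = [0j, 1 + 0j, cmath.exp(1j * math.pi / 3)]
    turn = cmath.exp(-1j * math.pi / 3)  # rotate by -60 degrees, i.e. outward
    for _ in range(order):
        new_points = []
        # Pair every vertex with the next one, wrapping around to the start.
        for p, q in zip(points, points[1:] + points[:1]):
            d = (q - p) / 3
            a, b = p + d, p + 2 * d
            # Replace edge p-q with four shorter edges and a spike at a + d*turn.
            new_points.extend([p, a, a + d * turn, b])
        points = new_points
    return points + points[:1]  # repeat the first point to close the outline


def draw(points, path='koch.png'):
    # Imported here so koch_snowflake() works even without matplotlib.
    import matplotlib.pyplot as plt

    fig, ax = plt.subplots()
    ax.plot([p.real for p in points], [p.imag for p in points])
    ax.set_aspect('equal')
    ax.axis('off')
    fig.savefig(path)
    plt.close(fig)
```

For example, draw(koch_snowflake(4), 'koch.png') writes the outline to a PNG file. Each pass multiplies the number of edges by four, so orders beyond 6 or 7 add little visible detail.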

timezone support

Python provides a powerful library for working with dates and times: datetime. Interestingly, datetime objects have a special interface for timezone support (namely the tzinfo attribute), but the module itself offers only limited support for that interface, leaving the rest of the job to other modules.
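To see what "limited support" means in practice: the standard library's built-in tzinfo implementation, datetime.timezone, only models a fixed offset from UTC. A minimal sketch (the 'CET' label is just a name passed in, not a lookup in any timezone database):

```python
from datetime import datetime, timedelta, timezone

# A fixed +01:00 offset: no DST rules and no historical changes.
cet = timezone(timedelta(hours=1), 'CET')
moment = datetime(2017, 1, 1, tzinfo=cet)

print(moment)                           # 2017-01-01 00:00:00+01:00
print(moment.tzname())                  # CET
print(moment.dst())                     # None: a fixed offset knows nothing about DST
print(moment.astimezone(timezone.utc))  # 2016-12-31 23:00:00+00:00
```

A real zone such as Europe/Paris switches offsets twice a year, which requires a rules database; that is the part left to third-party modules.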

The most popular module for this job is pytz. The tricky part is that pytz doesn't fully follow the tzinfo interface. The pytz documentation states this in one of its first lines: "This library differs from the documented Python API for tzinfo implementations."

You can't simply pass pytz timezone objects as the tzinfo argument. If you try, you may get absolutely insane results:

```
In : paris = pytz.timezone('Europe/Paris')
In : str(datetime(2017, 1, 1, tzinfo=paris))
Out: '2017-01-01 00:00:00+00:09'
```

Look at that +00:09 offset. The proper way to use pytz is as follows:

```
In : str(paris.localize(datetime(2017, 1, 1)))
Out: '2017-01-01 00:00:00+01:00'
```

Also, after any arithmetic operation you should normalize your datetime object in case the offset changes (for instance, across a DST transition).

```
In : new_time = time + timedelta(days=2)
In : str(new_time)
Out: '2018-03-27 00:00:00+01:00'
In : str(paris.normalize(new_time))
Out: '2018-03-27 01:00:00+02:00'
```

Since Python 3.6, it's recommended to use dateutil.tz instead of pytz. It fully implements the tzinfo interface, can be passed directly as the tzinfo argument, and doesn't require normalize(), though it is a bit slower.

If you are interested in why pytz doesn't follow the datetime API, or you want to see more examples, consider reading a good article on the topic.
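Why does a fully compliant tzinfo not need normalize()? Because datetime calls utcoffset(dt) on every use, so the offset is recomputed from the datetime itself. The sketch below is a toy: ToyParis2018 is a hypothetical class that hard-codes only the 2018 Paris DST switch dates and ignores ambiguous times, so it illustrates the mechanism rather than replacing dateutil.tz.

```python
from datetime import datetime, timedelta, tzinfo


class ToyParis2018(tzinfo):
    """Hypothetical CET/CEST zone valid only for 2018 (illustration only)."""

    DST_START = datetime(2018, 3, 25, 2)   # local time when clocks jump forward
    DST_END = datetime(2018, 10, 28, 3)    # local time when clocks fall back

    def utcoffset(self, dt):
        # Recomputed from dt on every call: standard offset plus DST, if any.
        return timedelta(hours=1) + self.dst(dt)

    def dst(self, dt):
        naive = dt.replace(tzinfo=None)
        if self.DST_START <= naive < self.DST_END:
            return timedelta(hours=1)
        return timedelta(0)

    def tzname(self, dt):
        return 'CEST' if self.dst(dt) else 'CET'


paris = ToyParis2018()
before = datetime(2018, 3, 25, tzinfo=paris)
after = before + timedelta(days=2)

print(before)          # 2018-03-25 00:00:00+01:00
print(after)           # 2018-03-27 00:00:00+02:00
print(after.tzname())  # CEST
```

Note that the result keeps the wall-clock time (00:00) and simply picks up the new offset, whereas the pytz-normalized result above is 01:00: one approach adds two calendar days, the other adds 48 elapsed hours.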

StopIteration magic

Every call to next(x) returns the next value from the x iterator unless an exception is raised. If that exception is StopIteration, it means the iterator is exhausted and can supply no more values. When a generator reaches the end of its body, it raises StopIteration automatically:

```
>>> def one_two():
...     yield 1
...     yield 2
...
>>> i = one_two()
>>> next(i)
1
>>> next(i)
2
>>> next(i)
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
StopIteration
```

StopIteration is handled automatically by tools that call next() for you:

```
>>> list(one_two())
[1, 2]
```
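Roughly speaking, list() and a for loop treat StopIteration as the normal end-of-data signal. The sketch below spells that out in pure Python; manual_list is a hypothetical name, and the real list() is implemented in C.

```python
def one_two():
    yield 1
    yield 2


def manual_list(iterable):
    """Roughly what list(iterable) or a for loop does with StopIteration."""
    result = []
    iterator = iter(iterable)
    while True:
        try:
            value = next(iterator)
        except StopIteration:
            # The iterator is exhausted: this is the normal way to finish.
            break
        result.append(value)
    return result


print(manual_list(one_two()))  # [1, 2]
```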

The problem is that any unexpected StopIteration raised within a generator causes it to stop silently instead of propagating as an error:

```python
def one_two():
    yield 1
    yield 2

def one_two_repeat(n):
    for _ in range(n):
        i = one_two()
        yield next(i)
        yield next(i)
        yield next(i)

print(list(one_two_repeat(3)))
```

The last yield here is a mistake: StopIteration is raised and makes list(...) stop the iteration. Surprisingly, the result is [1, 2].

However, this changed in Python 3.7: such a stray StopIteration is now replaced with RuntimeError:

```
Traceback (most recent call last):
  File "test.py", line 10, in one_two_repeat
    yield next(i)
StopIteration

The above exception was the direct cause of the following exception:

Traceback (most recent call last):
  File "test.py", line 12, in <module>
    print(list(one_two_repeat(3)))
RuntimeError: generator raised StopIteration
```

Starting with Python 3.5, you can enable the same behavior with from __future__ import generator_stop.
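A sketch of both sides on Python 3.7 or later: the buggy generator from above now fails loudly, and one possible fix (one_two_repeat_fixed is a hypothetical name) delegates with yield from, which stops cleanly when the inner generator is exhausted.

```python
def one_two():
    yield 1
    yield 2


def one_two_repeat(n):
    # The buggy version: the third next(i) hits an exhausted generator.
    for _ in range(n):
        i = one_two()
        yield next(i)
        yield next(i)
        yield next(i)


def one_two_repeat_fixed(n):
    for _ in range(n):
        # yield from re-yields every value and ends quietly when one_two() is done.
        yield from one_two()


try:
    list(one_two_repeat(3))
except RuntimeError as error:
    print(error)  # generator raised StopIteration

print(list(one_two_repeat_fixed(3)))  # [1, 2, 1, 2, 1, 2]
```

If a missing value is acceptable, next(i, default) avoids the exception altogether by returning default once the iterator is exhausted.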

Python stories, August 2018 was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
