The internet content landscape today

High quality information is critical to the world.

Social media giants like Facebook, Twitter, Google, and Reddit all curate the information that billions of people consume every day. Yet as digital society expands, these corporations are running into immense problems of scale.

Rampant spam, attempts to game the system, fake news, and inappropriate content all plague the information economy today.

As companies do their best to crack down, they risk facing thorny questions about censorship, human bias, and monopolistic behavior.

But despite hiring tens of thousands of human curators and deploying sophisticated machine learning filters, bad actors still weigh heavily on the shoulders of social media giants.

For example, it’s obnoxious to see my Twitter feed invaded by scam bots. Twitter’s advanced machine learning filters, crowd reporting features, and armies of human employees seem to offer little protection against infestations of scam bots. When there’s a will, there’s a way, and any platform used by millions (or even billions) of people is in danger of exploitation by the enterprising mob.

I do wish @elonmusk's first tweet about ethereum was about the tech rather than the twitter scambots........ @jack help us please? Or someone from the ETH community make a layer 2 scam filtering solution, please? https://t.co/biVRshZmne

Now, let’s take a cognitive leap to consider a social media corporation as if it were a form of modern nation state: Billions of global citizens, united together under one platform, with each individual promoting their own competing interests.

How, then, do these corpor-nations deliver the best possible user experience for all?

By drafting a carefully considered constitution, otherwise known as the Terms of Service (ToS). Once it is drafted and approved, the next question is: how do you properly enforce its rules?

For machine-identifiable terms of service violations, algorithms can filter effectively en masse. The shortfall, however, is that even after billions of dollars of research and development, AI and machine learning still seem far from being able to judge most content on their own.

Many potential violations of a well-written terms of service are not identifiable by machines and may never be. For the countless violations that are not machine-readable, corporations need to enlist thousands of human curators. The result is tens of thousands of people watching horrible videos, reading offensive tweets, and scanning for fake news all day, every day, filtering for violations of the ToS.

Furthermore, in order to draft the most virtuous terms of service possible, certain tenets perhaps need to be reasonably objective. Even so, this kind of information may never be machine-readable. Take, for example, the unending discussion over what is hate speech and what is protected free speech. The recent banning of Alex Jones by Facebook, YouTube, and Apple, but not Twitter, has reignited this age-old discussion.

We didn't suspend Alex Jones or Infowars yesterday. We know that's hard for many but the reason is simple: he hasn't violated our rules. We'll enforce if he does. And we'll continue to promote a healthy conversational environment by ensuring tweets aren't artificially amplified.

— @jack

(*Update: Since this article was written, Alex Jones has been banned from Twitter as well.)

Objectively drawing the line between hate speech and free speech has puzzled governments for centuries. Although the problem is difficult, constitutions frame a potential solution in reasonably objective terms. A constitution must be written so that it can be arbitrated across a decentralized court system: in local, state, and federal courts, through the due process of lawyers, judges, and juries. If the legal framework is effective enough, legal rulings will be replicated with similar outcomes at all levels of the court system.

As for Alex Jones, I don’t claim to know which corporations are right in banning his speech. As with the laws of the land, rules are rules in the digital sphere as well. What’s legal is not always synonymous with what’s right. In such cases, democratic governments offer a grander due process: amending the constitution itself.

This idea reveals a critical difference between the nation state and the corpor-nation. Terms of service in the digital world don’t offer users a democratic due process to alter or enforce the law. Digital platforms connect billions of global citizens and make administrative decisions that wield disproportionate influence over global society. Yet they have few governance procedures comparable to those of democratic nations.

Perhaps Silicon Valley focus groups and manual “flagging” tools are the closest comparisons we have. These processes are meaningful, but severely limited. As the digital world matures, such mechanisms may prove insufficient.

The digital world, with all its virtues of democratizing access to information, is a highly adversarial environment. Content quality is under fire, and the corporate model may ultimately prove insufficient for maintaining the information superhighway.

New experiments, and potential solutions to the corporation’s downfalls, are being tested in the world of cryptocurrency.

New governance models for software platforms are being tested in highly adversarial environments. So adversarial, in fact, that creatures of the cryptocurrency swamp are even challenging the corporate software model (e.g., crypto scam bots overrunning Twitter).

One particularly interesting experiment against such problems is the software technique called a “Token Curated Registry” (TCR): a software model for economically incentivizing the wisdom of the crowd in order to draw out, legislate, and enforce a virtuous terms of service.

A token economy (with tokens exchangeable for real money) incentivizes a community, beyond the control of any one company, to curate the quality of information on a software platform. The term “Token Curated Registry” has been the subject of much hype and promise. For example, imagine if you could directly influence the ranked visibility of content on platforms like Spotify, OpenTable, or your Twitter feed, based on how many tokens you have.

Using tokens to rank the visibility of subjective content, such as a ranking of “Best Restaurants in Madrid” or the popular “Rap Caviar Playlist,” will likely fall victim to hype.

The problem of subjective TCRs (or ranked TCRs) has not yet been solved. Ranking the visibility of subjective content strictly by how much money you have may seem dishonest or distasteful.

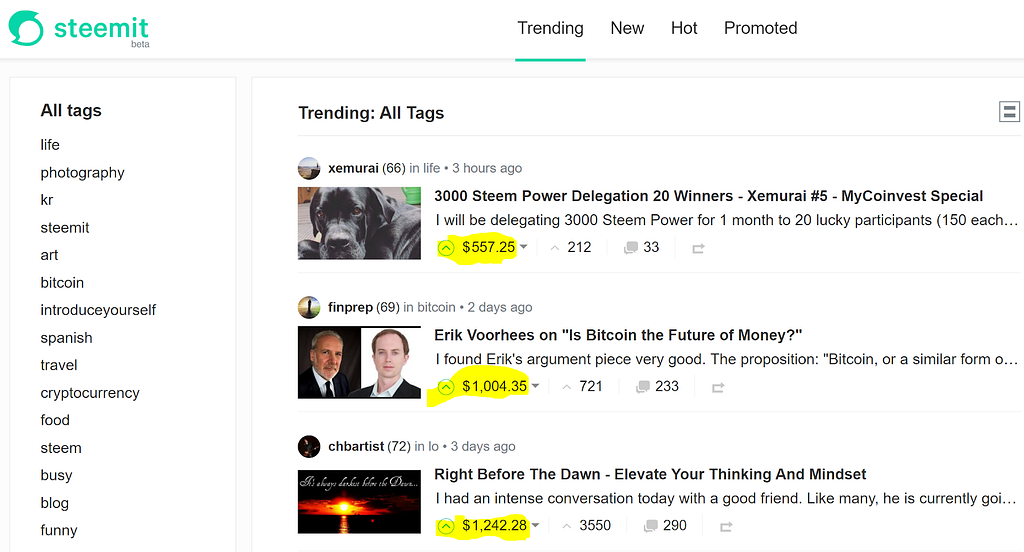

As it stands now, in the world’s popular content feeds, visibility ranking *seems* to happen according to non-monetary measurements such as Likes on Facebook, Favorites on Twitter, and Claps on Medium. The hope is that boosted or paid posts are clearly marked with #ad.

Ranking content visibility in a strictly monetary way will likely be a failure in the early theory of TCRs. If my personal content feeds are essentially up to the highest bidder (through covert efforts like Russian bots, Instagram influencing, or biased employees), I’d prefer to be blissfully unaware.

A more likely successful implementation of TCRs, however, takes a different approach: using a tokenized incentive for users to curate reasonably objective information.

For example, imagine if Apple News was governed by an objective Token Curated Registry.

The real Apple News ToS (which you probably legally agreed to but never read) likely lays out a series of complex rules that are barely human-readable. Yet human contractors (armed with machine learning filters) ensure that each publication, author, and post meets the baseline requirements of the ToS.

Such filtering normally happens behind closed doors. Unless a profile is loud enough on a variety of platforms (like Alex Jones), nobody would notice it being suddenly purged. Certainly Alex Jones is reprehensible, but his removal raises the question of how readily the corporate curation model could be abused. A more dire concern is that the limited liability corporation may be ill-equipped to deal with the existential questions of the future.

Effective enforcement of reasonably objective digital laws may require a degree of public forum, due process, and vested interest that’s logistically impossible for corporations. Curating non-machine-readable information (although some curators will likely arm themselves with ML aids) against a set of reasonably objective virtues will likely achieve more successful outcomes in the TCR experiment.

Leveraging a token economy on an open source platform may incentivize the crowd to produce information on, and help arbitrate, the terms of service in a transparent way. As an example, let’s simulate a token curated app called dApple News:

Like Apple News, but without the haze of limited liability jargon, the dApple News constitution cites virtuous tenets such as “forbidding hate speech” carefully alongside “promoting the right to free speech.” Furthermore, every publication has the right to editorial freedom so long as basic journalistic standards are met. In certain cases, whether a publication breaks the rules isn’t easily determined by human curators, let alone machines. And unlike Apple, its decentralized counterpart has a public forum for arbitrating non-machine-readable curation decisions.

With dApple’s token curated registry, a form of due process in a public forum helps each ruling become reasonably objective.

Here’s exactly how dApple News works:

To become a verified “newsroom,” an organization has to stake a fixed submission fee, let’s say $100 for this example, which is instantly exchanged for network tokens at a dynamic (and volatile) free market price.
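To make the application step concrete, here is a minimal Python sketch of a registry that accepts a fixed-fee stake. The names `Registry`, `Listing`, and `apply` are hypothetical, and the token price is simply passed in as an assumption; a real network would take it from a market. The sketch only shows how the same $100 fee becomes a different number of staked tokens as the price moves.

```python
from dataclasses import dataclass, field


@dataclass
class Listing:
    """Hypothetical registry entry: an applicant and the tokens it has staked."""
    name: str
    stake_tokens: float


@dataclass
class Registry:
    """Hypothetical registry that charges a fixed dollar fee, paid in network tokens."""
    fee_usd: float = 100.0  # the article's example submission fee
    listings: dict[str, Listing] = field(default_factory=dict)

    def apply(self, name: str, token_price_usd: float) -> Listing:
        """Convert the fixed fee into tokens at the current price and stake them."""
        if token_price_usd <= 0:
            raise ValueError("token price must be positive")
        if name in self.listings:
            raise ValueError(f"{name} is already listed")
        # The dollar fee is fixed, so a higher token price means fewer tokens staked.
        listing = Listing(name, self.fee_usd / token_price_usd)
        self.listings[name] = listing
        return listing
```

For instance, at an assumed price of $2 per token the fee stakes 50 tokens; if the price doubles to $4, the same fee stakes only 25.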

At any time while an organization’s stake is in place, any user can challenge it for a potential violation of the network constitution. Challenging, however, requires a token stake equal and opposite to the publication’s initial staking fee. The challenge is then put to a vote by the wider token holder community, which any user can join. Discussion is enabled during the challenge process, but voting is blind.
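The article doesn’t say how blind voting would be implemented. One common approach, assumed here, is commit-reveal: each voter first publishes only a hash of their vote plus a secret salt, then reveals the vote and salt after the commit phase closes, so nobody can see the running tally while votes are being cast. The sketch below uses hypothetical names (`Challenge`, `commitment`, `new_salt`) and also enforces the equal-stake rule; discussion and actual token transfers are left out.

```python
import hashlib
import math
import secrets


def new_salt() -> str:
    """Random secret each voter keeps private until the reveal phase."""
    return secrets.token_hex(16)


def commitment(vote_for_removal: bool, salt: str) -> str:
    """SHA-256 of the vote and salt; only this digest is published while voting is open."""
    return hashlib.sha256(f"{int(vote_for_removal)}:{salt}".encode()).hexdigest()


class Challenge:
    """Hypothetical challenge against one listing, with commit-reveal (blind) voting."""

    def __init__(self, listing_stake: float, challenger_stake: float) -> None:
        # The challenger must match the listing's stake (the "equal and opposite" rule).
        if not math.isclose(listing_stake, challenger_stake):
            raise ValueError("challenge stake must equal the listing's stake")
        self.listing_stake = listing_stake
        self.challenger_stake = challenger_stake
        self.commit_open = True
        self.commits: dict[str, tuple[str, float]] = {}    # voter -> (digest, tokens)
        self.revealed: dict[str, tuple[bool, float]] = {}  # voter -> (vote, tokens)

    def commit(self, voter: str, digest: str, tokens: float) -> None:
        if not self.commit_open:
            raise RuntimeError("the commit phase is over")
        self.commits[voter] = (digest, tokens)

    def end_commit_phase(self) -> None:
        self.commit_open = False

    def reveal(self, voter: str, vote_for_removal: bool, salt: str) -> None:
        if self.commit_open:
            raise RuntimeError("votes can only be revealed after the commit phase")
        digest, tokens = self.commits[voter]
        # The revealed vote must hash to exactly what was committed earlier.
        if commitment(vote_for_removal, salt) != digest:
            raise ValueError("revealed vote does not match the commitment")
        self.revealed[voter] = (vote_for_removal, tokens)
```

A voter would call `commitment(True, salt)` while voting is open, publish only the digest, and later call `reveal` with the same vote and salt; changing the vote after seeing others’ reveals fails the hash check.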

For example, if Infowars stakes to be on the dApple News platform, I could challenge it on the grounds of publishing objectively verifiable hate speech. So I stake $100 against Infowars’ original submission and state my case to the community. In my challenge materials, I present time-stamped proof of Infowars posts brazenly inciting violence against a community. Active discussion on the voting board ensues, in which any user of the platform can weigh in.

After voting closes, a majority of token votes confirm that the post objectively meets the definition of hate speech. Infowars can either stake more tokens to appeal and state its reasons, or be kicked off the platform for good. Since my challenge succeeded, I get back my challenge stake in addition to Infowars’ application stake ($200 worth of tokens in total).
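Resolving a challenge can be sketched as a token-weighted majority followed by the simple payout described above, where the winning side takes both stakes. This is a minimal illustration with a hypothetical `resolve` function: ties are assumed to go to the publication (since the challenger needs a majority), and neither the appeal step nor the voter rewards that some TCR designs include are modeled.

```python
from typing import NamedTuple


class Resolution(NamedTuple):
    removed: bool              # True if the challenger won
    challenger_payout: float   # tokens paid to the challenger
    publication_payout: float  # tokens paid to the publication


def resolve(listing_stake: float, challenger_stake: float,
            votes: list[tuple[bool, float]]) -> Resolution:
    """Hypothetical tally: each vote is (vote_for_removal, tokens behind the vote)."""
    for_removal = sum(tokens for vote, tokens in votes if vote)
    against = sum(tokens for vote, tokens in votes if not vote)
    pot = listing_stake + challenger_stake
    # The challenger needs a strict token-weighted majority; a tie keeps the listing.
    if for_removal > against:
        return Resolution(True, pot, 0.0)
    return Resolution(False, 0.0, pot)
```

With 50-token stakes on each side (about $100 each at an assumed $2 per token), a successful challenge pays the challenger 100 tokens, roughly the $200 in the example if the price hasn’t moved. A failed challenge pays the same 100 tokens to the publication instead.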

Token holders tend to want to uphold the virtuous terms of service, as these standards give their network value. Being able to participate in such a platform then affords the underlying tokens real value. Such a system encourages a new species of diligent reader and evangelistic token holder.

So long as each organization upholds the dApple terms of service, it will never forfeit its application stake. And any frivolous or failed challenges against it will make its own token holdings grow. The concept of TCRs is still in its early stages, and much work needs to be done to combat unintended consequences. In the case of dApple, will it eventually evolve into an ideologically homogeneous community? Will an alternative to dApple arise with identical terms of service, yet significantly divergent content? Will different groups use TCRs to enforce their internal orthodoxies even more harshly? I believe there will be solutions, and these subjects will be examined more deeply as we continue to explore this evolving space.

While still theoretical, I believe the objective TCR may prove an invaluable experiment at solving the downfalls of the digital world. As the digital world is overtaken by the mob, a solution may be found in the wisdom of the crowd.

Thanks to Guha Jayachandran, Yin Wu, and Joseph Urgo for conversations and ideas which contributed to this post.

Curating a Virtuous Digital World was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.

Disclaimer

The views and opinions expressed in this article are solely those of the authors and do not reflect the views of Bitcoin Insider. Every investment and trading move involves risk - this is especially true for cryptocurrencies given their volatility. We strongly advise our readers to conduct their own research when making a decision.