
The Machines That Play series has been broken into six parts. This is Part 4 of the series. It will cover the history of innovations in chess programs, leading up to and including Deep Blue. It will include some technical details (from papers by the Deep Blue team) as well as social and cultural reactions, including Kasparov’s.

Part 1: Machines That Play (Overview)

Part 2: Machines That Play (Building Chess Machines)

Part 3: Machines That Play (Chess)

Part 4: Machines That Play (Deep Blue) — this one

Part 5: Machines That Play (Checkers)

Part 6: Machines That Play (Backgammon)

Being like a human, but (still) not being human

Game: Chess

Garry Kasparov (Time Magazine)


The (AI and chess) road so far

Why would anyone want to teach a machine how to follow some arbitrary man-made rules to move a bunch of wooden pieces on a checkerboard, with the sole aim of cornering one special wooden piece? It’s so human to attack and capture pawns, knights, bishops and the queen to finally corner the king in an inescapable position. Those are our actions and our goal, and we balance strategy and tactics to pursue them.

Chess is an old game. It is believed to have originated in eastern India sometime between 280 and 550 CE. It reached Western Europe and Russia in the 9th century, and by the year 1000 it had spread throughout Europe. As it grew in popularity, writings on chess theory (how to play chess well) began to appear in the 15th century. Over the centuries, many people have said many different things about chess.

- During the Age of Enlightenment, chess was viewed as a means of self-improvement. Benjamin Franklin wrote in 1750, “The Game of Chess is not merely an idle amusement; several very valuable qualities of the mind, useful in the course of human life, are to be acquired and strengthened by it, so as to become habits ready on all occasions; for life is a kind of Chess, in which we have often points to gain, and competitors or adversaries to contend with, and in which there is a vast variety of good and ill events, that are, in some degree, the effect of prudence, or the want of it. By playing at Chess then, we may learn: I. Foresight, which looks a little into futurity, and considers the consequences that may attend an action […], II. Circumspection, which surveys the whole Chess-board, or scene of action: — the relation of the several Pieces, and their situations […], III. Caution, not to make our moves too hastily […]”

- “Chess is the touchstone of intellect.” — Johann Wolfgang von Goethe (1749–1832)

- “The public must come to see that chess is a violent sport. Chess is mental torture.” — Garry Kasparov (1990s)

- “As a chess player one has to be able to control one’s feelings, one has to be as cold as a machine.” — Levon Aronian (2008 interview)

Chess has long been regarded as the game of intellect, and that is exactly why people wanted to build a machine that could follow the rules of our game and become good enough to one day beat us at it. Programming a machine to play legal chess is not tricky, but programming it to become a strong chess player is very hard. Many people argued that a machine that could play chess well would prove that thinking can be modeled and understood, and that machines can be built to think. In his 1948 book Cybernetics, Norbert Wiener raised the question (regarding computer chess) of “whether it is possible to construct a chess-playing machine, and whether this sort of ability represents an essential difference between the potentialities of the machine and the mind.” We would eventually build a successful chess machine, but it would not necessarily help us understand our minds. Its way of playing would range from extremely human to almost alien, and it would challenge our conception of what to call intelligence.

The idea of creating a chess-playing machine dates to the 18th century. Around 1769, Wolfgang von Kempelen built the chess-playing automaton called The Turk, which became famous before it was exposed as a hoax. In 1912, Leonardo Torres y Quevedo built a machine called El Ajedrecista, which played a king-and-rook versus king endgame, but it was too limited to be useful. The creation of electronic computers began in the 1930s. ENIAC, which is considered to be the first general-purpose electronic computer, became operational in 1946. By the late 1940s, computers were used as research and military tools in the US, England, Germany, and the USSR.

Computer chess presented a fascinating and challenging engineering problem. Researchers started making progress in the 1940s, and in 1950 Claude Shannon published “A Chess-Playing Machine” (Scientific American, February 1950), which set the direction for future AI researchers. In it he made his case for why a chess-playing machine should be built: “The investigation of the chess-playing problem is intended to develop techniques that can be used for more practical applications. The chess machine is an ideal one to start with for several reasons.”

1940s — 1950s

Early pioneers focused on building machines that would play chess much as humans did, so early progress relied heavily on chess heuristics (rules of thumb) to choose the best moves. Researchers emphasized emulating the human chess thought process because they believed that teaching a machine to mimic human thought would produce the best chess machines.

- In 1950, Shannon published the seminal paper Programming a Computer for Playing Chess, covering a) why computers should play chess, b) the problems with computer chess, and c) potential solutions to those problems. He wrote, “The chess machine is an ideal one to start with, since: (1) the problem is sharply defined both in allowed operations (the moves) and in the ultimate goal (checkmate); (2) it is neither so simple as to be trivial nor too difficult for satisfactory solution; (3) chess is generally considered to require ‘thinking’ for skillful play; a solution of this problem will force us either to admit the possibility of a mechanized thinking or to further restrict our concept of ‘thinking’; (4) the discrete structure of chess fits well into the digital nature of modern computers.” He estimated that a typical game lasts about 40 moves, with roughly 10³ possibilities for each White move and Black reply, giving on the order of 10¹²⁰ possible games; chess therefore cannot be played by brute force (an approach where all possibilities are explored). Moves have to be selected intelligently, and “forward pruning” (screening out bad moves so that they need not be searched) would help with this. This influenced early programmers, who spent quite a bit of effort finding rules for identifying bad moves; later (by the 1970s), they started noticing that there were too many exceptions to these rules, and this approach fell out of favor.

- In 1951, Alan Turing published the first chess computer program, which he had developed on paper. It was capable of playing a full game of chess. Turing’s program chose moves by looking one move ahead and scoring the resulting positions.

- Search innovation (1950): Minimax search (Shannon, Turing)

- Search innovation (1956): Alpha-beta pruning (McCarthy)
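Shannon’s estimate is simple arithmetic and can be reproduced in a few lines. The sketch below assumes his round figures (about 30 legal moves per side, so roughly 10³ continuations per White move and Black reply, over a 40-move game). The 200-million-positions-per-second rate is Deep Blue’s 1997 speed, borrowed only for scale, and treating one position evaluation as one whole game wildly flatters brute force.

```python
import math

MOVES_PER_SIDE = 30   # Shannon's rough figure for legal moves in a typical position
FULL_MOVES = 40       # a typical game: 40 moves by White, 40 by Black

per_full_move = MOVES_PER_SIDE ** 2          # 900 continuations per White move + Black reply
exact = per_full_move ** FULL_MOVES          # 900**40 possible games
shannon = (10 ** 3) ** FULL_MOVES            # Shannon rounded 900 up to 10**3, giving 10**120

RATE = 200_000_000                           # positions per second (Deep Blue, 1997), for scale
SECONDS_PER_YEAR = 365 * 24 * 3600
# Generously assume one evaluation covers one whole game.
years = shannon // RATE // SECONDS_PER_YEAR

print(f"900**40     ~ 10^{math.log10(exact):.1f}")
print(f"(10**3)**40 = 10^{math.log10(shannon):.0f}")
print(f"years at 200M/s ~ 10^{math.log10(years):.0f}")
```

Even under that generous assumption, enumerating every game at Deep Blue’s speed would take on the order of 10¹⁰⁴ years, which is why practical programs search selectively or to a limited depth.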

Computing power was limited in the 1950s, so machines could only play at a very basic level. This is the period when researchers developed the fundamental techniques for evaluating chess positions and for searching possible moves (and the opponent’s counter-moves). These ideas are still in use today.
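Minimax and alpha-beta pruning can be illustrated on a toy game tree instead of a chess position. The following is a minimal sketch, assuming a tree written as nested Python lists whose integer leaves are static evaluations from the maximizing player’s point of view; the `stats` counter is an illustrative addition that shows how many leaves each method examines.

```python
import math

def minimax(node, maximizing=True):
    """Plain minimax: an int is a leaf score, a list is a node whose items are its children."""
    if isinstance(node, int):
        return node
    values = [minimax(child, not maximizing) for child in node]
    return max(values) if maximizing else min(values)

def alphabeta(node, alpha=-math.inf, beta=math.inf, maximizing=True, stats=None):
    """Minimax with alpha-beta pruning; returns the same value as minimax().

    alpha: best score Max is already guaranteed elsewhere in the tree.
    beta:  best score Min is already guaranteed elsewhere in the tree.
    stats: optional dict counting how many leaves were evaluated.
    """
    if isinstance(node, int):
        if stats is not None:
            stats["leaves"] += 1
        return node
    if maximizing:
        value = -math.inf
        for child in node:
            value = max(value, alphabeta(child, alpha, beta, False, stats))
            alpha = max(alpha, value)
            if alpha >= beta:
                break  # Min will never allow this line, so skip Max's remaining moves
        return value
    value = math.inf
    for child in node:
        value = min(value, alphabeta(child, alpha, beta, True, stats))
        beta = min(beta, value)
        if alpha >= beta:
            break  # Max already has something better, so skip Min's remaining replies
    return value

tree = [[3, 5], [6, 9], [1, 2]]   # Max to move at the root, Min at the next level
stats = {"leaves": 0}
print(minimax(tree), alphabeta(tree, stats=stats), stats["leaves"])
```

On this tree both searches return 6, but alpha-beta evaluates only 5 of the 6 leaves: once the third move’s first reply scores 1, worse than the 6 Max is already guaranteed, its remaining reply cannot change the decision. With good move ordering, the savings grow rapidly in deeper trees.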

1960s

By the end of the 1960s, computer chess programs were good enough to occasionally beat club-level or amateur players.

- In 1967, Mac Hack Six, written by Richard Greenblatt, introduced transposition tables and became the first program to defeat a person in tournament play.

- In 1968, John McCarthy and Donald Michie bet David Levy (an International Master) $1,000 that a computer would defeat him by 1978.

- Search innovation (1966): Alpha-beta pruning (Kotok, McCarthy)

- Search innovation (1967): Transposition tables (Mac Hack)
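A transposition table caches the value of a position so that, when the same position is reached again through a different move order, the search can reuse the stored result. A minimal sketch, assuming a toy take-away game (remove 1 to 3 stones; whoever takes the last stone wins) instead of chess, so that the pile size is the whole position and can serve directly as the table key. Chess programs instead key on a hash of the position (for example, Zobrist hashing) and also store search depth and bound type, which this exact-result sketch omits.

```python
def negamax(stones, table=None, stats=None):
    """Return +1 if the side to move wins with perfect play, else -1.

    table: optional dict used as a transposition table (position -> value).
    stats: optional dict counting visited nodes.
    """
    if stats is not None:
        stats["nodes"] += 1
    if stones == 0:
        return -1  # the opponent just took the last stone, so the side to move has lost
    if table is not None and stones in table:
        return table[stones]  # position already solved via another move order
    best = -1
    for take in (1, 2, 3):
        if take <= stones:
            best = max(best, -negamax(stones - take, table, stats))
    if table is not None:
        table[stones] = best
    return best

plain, cached = {"nodes": 0}, {"nodes": 0}
print(negamax(20, None, plain), plain["nodes"])   # without a table
print(negamax(20, {}, cached), cached["nodes"])   # with a table
```

For a pile of 20 (a loss for the side to move, since 20 is a multiple of 4), the plain search visits 266,079 nodes, while the version with a table visits 58.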

1970s — 1980s

In the 1970s and 1980s, the emphasis shifted to hardware speed. In the 1950s and 1960s, early pioneers had focused on chess heuristics (rules of thumb) to choose the best next moves. Although programs in the 1970s and 1980s still used heuristics, there was a much stronger focus on software improvements as well as on faster and more specialized hardware. Customized hardware and software allowed programs to conduct much deeper searches of game trees (involving millions of chess positions), something humans did not (because they could not) do.

The 1980s brought an era of low-cost chess computers. The first microprocessor-based chess programs became available. Thanks to home computers and these programs, anyone could now play chess (and improve their game) against a machine. By the mid-1980s, microprocessor-based chess software had improved so much that it began winning tournaments against both supercomputer-based chess programs and some top-ranked human players.

- In 1977, Chess 4.6 became the first chess computer to be successful at a major chess tournament.

- In 1978, no machine had yet been able to beat Levy in a match (Levy defeated Chess 4.7 by 4.5–1.5). Levy won the bet, but a machine did win its first-ever game against him.

- In 1981, Cray Blitz won the Mississippi State Championship with a perfect 5–0 score and a performance rating of 2258. In round 4 it defeated Joe Sentef (2262) to become the first computer to beat a master in tournament play and the first computer to gain a master rating.

- In 1982, Ken Thompson’s hardware chess player Belle earned a US master title and a performance rating of 2250. (Side note: Ken Thompson is a co-creator of the UNIX operating system.)

- 1983, 1986: Cray Blitz (software by Robert Hyatt) won consecutive World Computer Chess Championships.

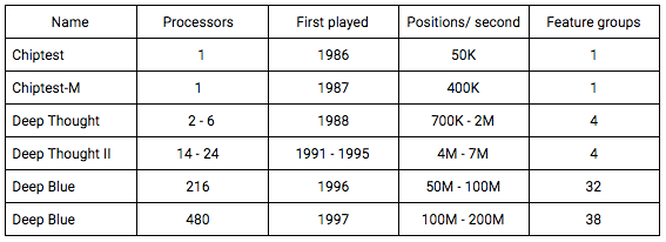

- In 1985, ChipTest was built by Feng-hsiung Hsu, Thomas Anantharaman, and Murray Campbell. It used a special VLSI move-generator chip and could execute 50,000 moves per second.

- In 1987, ChipTest-M was built after the ChipTest team removed bugs in the chip. It could now execute 500,000 moves per second.

- 1988: HiTech by Hans Berliner of CMU defeated grandmaster Arnold Denker in a match.

- In 1988, Deep Thought by Hsu and Campbell of CMU shared first place with Tony Miles in the Software Toolworks Championship, ahead of several grandmasters. It also defeated grandmaster Bent Larsen in a tournament game, making it the first computer to beat a grandmaster in a tournament. It could execute 700,000 moves per second. It obtained a performance rating of 2745, the highest rating obtained by a computer player at the time. IBM later took over the project and hired the people behind it.

- In 1989, Deep Thought lost two exhibition games to Garry Kasparov.

- Search innovation (1975): Iterative-deepening (Chess 3.0+)

- Search innovation (1978): Special hardware (Belle)

- Search innovation (1983): Parallel search (Cray Blitz)

- Search innovation (1985): Parallel evaluation (HiTech)

- Speed progress (1980s): In the 1980s a microcomputer could execute a little over 2 million instructions per second.
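Iterative deepening runs a complete depth-1 search, then depth 2, then depth 3, and so on, so that a usable move is always available when time runs out. A minimal sketch, assuming a toy take-away game (remove 1 to 3 stones; taking the last stone wins) in place of chess, a heuristic value of 0 at the search horizon, and a time check only between iterations. Real programs also abort mid-iteration and use the previous iteration’s best move to order the next search, which this sketch omits.

```python
import time

def legal_moves(stones):
    return [take for take in (1, 2, 3) if take <= stones]

def depth_limited(stones, depth):
    """Negamax to a fixed depth for the side to move.

    Returns +1 (win), -1 (loss), or 0 when the horizon is reached before the
    game ends (a hypothetical 'no verdict yet' heuristic value).
    """
    if stones == 0:
        return -1          # the opponent took the last stone
    if depth == 0:
        return 0           # horizon reached
    return max(-depth_limited(stones - t, depth - 1) for t in legal_moves(stones))

def iterative_deepening(stones, max_depth, time_budget_s=5.0):
    """Search depth 1, 2, 3, ... and keep the result of the last completed depth."""
    moves = legal_moves(stones)
    if not moves:
        return None, -1, 0     # game already over: the side to move has lost
    deadline = time.monotonic() + time_budget_s
    best_move, best_score, completed = moves[0], 0, 0
    for depth in range(1, max_depth + 1):
        if time.monotonic() > deadline:
            break              # out of time: keep the last completed iteration
        # Ties on score go to the larger take, because tuples compare element-wise.
        scored = [(-depth_limited(stones - t, depth - 1), t) for t in moves]
        best_score, best_move = max(scored)
        completed = depth
        if abs(best_score) == 1:
            break              # forced win or loss found; deeper search won't change it
    return best_move, best_score, completed

print(iterative_deepening(5, max_depth=10))
```

For a pile of 5 it returns `(1, 1, 3)`: take one stone, a forced win that first becomes visible at depth 3.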

1990s

In the 1990s, chess programs began challenging International Masters and later grandmasters. These programs relied much more on memory and brute force than on strategic insight, and they started to beat the best humans consistently. Some dramatic moments in computer chess occurred in 1989, when two widely respected grandmasters were defeated by CMU’s HiTech and Deep Thought. Researchers felt machines could finally beat a World Chess Champion. This got IBM interested, so it began working on this challenge in 1989 and built a specialized chess machine named Deep Blue. It would end up beating Garry Kasparov, the best human chess player. So, did it think? And was it intelligent?

IBM researchers were awarded the Fredkin Prize, a $100,000 award for the first program to beat a reigning world chess champion, which had gone unclaimed for 17 years. This victory (and later ones) in computer chess disappointed many AI researchers, who were interested in building machines that would succeed through “general intelligence” strategies rather than brute force. In this sense, chess had started to decouple from AI research.

- From 1991 to 1995, IBM worked on Deep Thought II, which was a stepping stone to Deep Blue. It was a 24-processor system, and the Deep Thought software was rewritten to handle parallelism.

- In 1992, the ChessMachine Gideon 3.1 (a microcomputer) by Ed Schröder won the 7th World Computer Chess Championship ahead of mainframes, supercomputers and special-purpose hardware.

- From 1992 to 1998, Chess Genius was a series of chess engines by Richard Lang, written for various processor architectures. The first version, in 1992, was released as a PC program running under 16-bit MS-DOS. Lang’s programs won the World Microcomputer Chess Championship in 1984, 1985, 1986, 1987, 1988, 1989, 1990, 1991 and 1993.

- In 1994, during the Intel Grand Prix Cycle, Chess Genius 3, operated by Ossi Weiner, won a speed chess game (25 minutes per side) against Garry Kasparov and drew the second game, knocking Kasparov out of the tournament. This was the first (speed-chess) match that Kasparov ever lost to a computer. In the next round, Chess Genius 3 beat Predrag Nikolić, but then lost to Viswanathan Anand. Unlike Deep Blue, which ran on massively parallel custom-built hardware, Chess Genius was running on an early Pentium PC.

- Speed progress (1990s): By the 1990s computers were executing over 50 million instructions per second.

This post is about these three events (Deep Blue was a highly specialized machine designed to play chess):

- In 1993, Deep Blue lost a four-game match against Bent Larsen.

- In 1996, Deep Blue lost a six-game match against Garry Kasparov (Kasparov won 4–2): a) 1.6 to 2 million positions per second per chip, b) 50 to 100 million positions per second for the system

- In 1997, Deep Blue won a six-game match against Garry Kasparov (3.5–2.5), the first classical match Kasparov ever lost: a) an enhanced chess chip searching 2 to 2.5 million positions per second per chip, b) 200 million positions per second for the system

Now:

- No human can play the best computers on equal terms. We humans have had well over 1,000 years to invent, play, and understand chess; machines have had fewer than 60 years, and they are much, much better than we are.

- Speed progress (now): Processors in our tablets and phones can execute over a billion instructions per second.

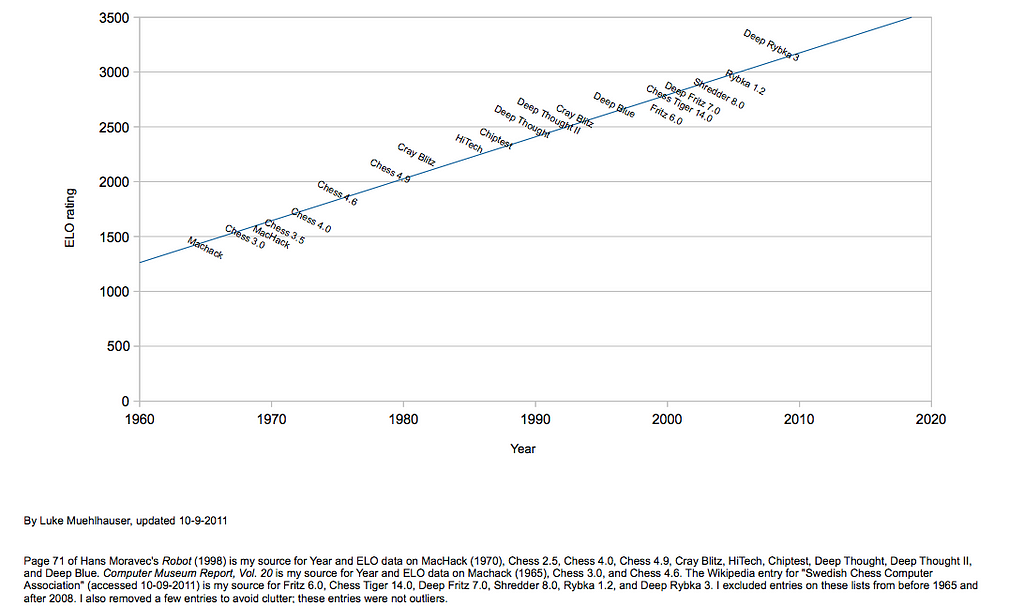

Luke Muehlhauser: Historical chess engines’ estimated Elo ratings


Some ideas and timelines in computer chess*

*Not all of these ideas are addressed in this post. I might come back to them in the future.

Now, Deep Blue

Two six-game matches were organized between world champion Garry Kasparov and IBM’s Deep Blue. The first took place in Philadelphia in February 1996; a rematch took place in New York City in May 1997.

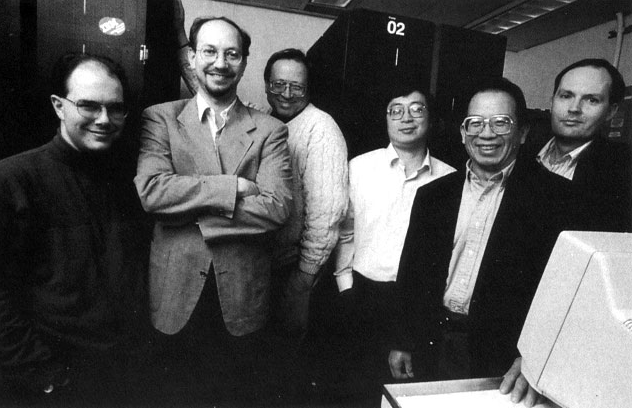

IBM’s Deep Blue Team (from left to right): Joe Hoane, Joel Benjamin, Jerry Brody, F.H. Hsu, C.J. Tan and Murray Campbell


At the time of the first match, Kasparov had a rating of 2800, the highest rating ever achieved. Deep Blue’s creators placed the machine at a similar level. Popular Science asked David Levy about Kasparov’s match against Deep Blue, and Levy stated that “…Kasparov can take the match 6 to 0 if he wants to. ‘I’m positive, I’d stake my life on it.’” On the other hand, Monty Newborn, a computer science professor at McGill University, said, “I’ll give the computer 4 1/2 to Kasparov 1 1/2 [A draw gives each player a half point.] Once a computer gets better than you, it gets clearly better than you very quickly. At worst, it will get a 4 1/2 score.”

“Once a computer gets better than you, it gets clearly better than you very quickly.”

The outcome was uncertain. Deep Thought, Deep Blue’s predecessor, had been a tournament grandmaster, not a match grandmaster, and this was not a tournament but a match. It was a different challenge from earlier ones, and one IBM had been working on since 1989. Why did match play present a different challenge from tournament play? Because in a match, the same two players play multiple games against each other. This gave a human grandmaster the opportunity to gauge the machine’s weaknesses and exploit them. According to Hsu,

“Human Grandmasters, in serious matches, learn from computers’ mistakes, exploit the weaknesses, and drive a truck thru the gaping holes.”

IBM needed to build a machine that would have very few weaknesses and those weaknesses needed to be difficult for human grandmasters to exploit.

Kasparov won the 1996 match. A rematch took place in New York City in 1997. This time, Kasparov lost: a machine had finally beaten the best human chess player. It was the first classical match Kasparov had ever lost. So what was so different in 1997? According to Campbell, Hoane, and Hsu, “In fact there are two distinct versions of Deep Blue, one which lost to Garry Kasparov in 1996 and the one which defeated him in 1997.”

In the next section(s), we’ll look into some of the factors that Campbell, Hoane, and Hsu said contributed to Deep Blue’s success in 1997:

- A single-chip chess search engine

- A massively parallel system with multiple levels of parallelism

- A strong emphasis on search extensions

- A complex evaluation function, and

- An effective use of a Grandmaster game database.
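Search extensions address the horizon effect: a fixed-depth search can stop right after a move that looks good (say, a pawn capture) but before the opponent’s crushing reply. A minimal sketch of the general idea, assuming a hand-built toy tree and a hypothetical `forcing` flag on each node: when the depth runs out at a forcing node, the search looks one more ply, up to a small budget. The Deep Blue team’s actual extension scheme was considerably more elaborate than this.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    score: int                      # static evaluation from Max's point of view (in pawns)
    forcing: bool = False           # hypothetical flag: e.g. a capture that can be answered
    children: list = field(default_factory=list)

def search(node, depth, maximizing, extensions_left):
    """Depth-limited minimax that extends the search at forcing nodes."""
    if not node.children:
        return node.score
    if depth <= 0:
        if node.forcing and extensions_left > 0:
            depth, extensions_left = 1, extensions_left - 1   # look one more ply
        else:
            return node.score   # quiet position: trust the static evaluation
    values = [search(c, depth - 1, not maximizing, extensions_left) for c in node.children]
    return max(values) if maximizing else min(values)

# Max can grab a pawn (+1), after which the opponent wins the queen (net -8),
# or Max can play a quiet move (0).
grab_pawn = Node(score=1, forcing=True, children=[Node(score=-8)])
quiet_move = Node(score=0)
root = Node(score=0, children=[grab_pawn, quiet_move])

print(search(root, depth=1, maximizing=True, extensions_left=0))  # fixed depth
print(search(root, depth=1, maximizing=True, extensions_left=2))  # with extensions
```

With no extensions the search scores the pawn grab at +1 and prefers it; with extensions it sees the reply and settles on the quiet move (0).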

In the beginning there was…

In 1985, Feng-hsiung Hsu and (a little later) Murray Campbell worked on ChipTest and then on Deep Thought, the first grandmaster-level chess machine. In 1988, Deep Thought had beaten grandmaster Bent Larsen (in tournament play) with its customized hardware: a pair of custom-built processors, each of which included a VLSI chip to generate moves. Later, an experimental six-processor version of Deep Thought played a two-game match against Kasparov and lost. In 1989, both Hsu and Campbell were hired to work at IBM Research; their new team members included computer scientists Joe Hoane, Jerry Brody and C. J. Tan. Their new project, named Deep Blue, began as an investigation into how to use parallel processing to solve complex problems. Researchers felt machines could finally beat a World Chess Champion, and so IBM took on this challenge and built a specialized chess machine.

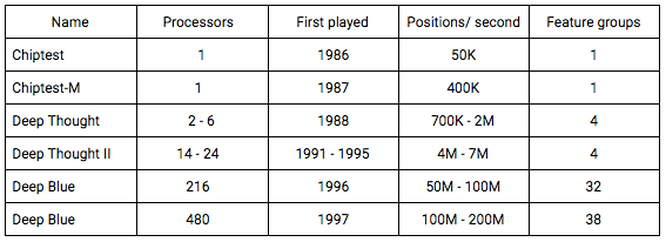

Summary of chess systems (from Campbell, Hoane, Hsu)


What’s in a name? (Deep Thought II or Deep Blue Prototype (1991–1995))

What’s in a name? Turns out quite a bit. Hsu wrote in his book Behind Deep Blue: Building the Computer that Defeated the World Chess Champion, “Jerry Present, our communications person at the time, wanted to use the name Deep Blue Prototype instead of Deep Thought II. I wanted to use the name Deep Thought II. My reasoning was as follows. Deep Blue, the new machine, would be at least 100 times faster than Deep Thought II in effective search speed, and furthermore, Deep Blue would have a far better grasp of positional concepts in chess. Comparing Deep Blue with Deep Thought II would be like comparing the sun with the moon. Well, I exaggerate a little bit, but the difference in computation power was roughly of the order of a thousand to one, taking into account the far more complicated chess evaluation computation carried out on the Deep Blue chess chips. I was proud of what had been done, and did not want anything to be linked to Deep Blue unless it did use the new chips. Deep Thought II was still using the chess chips that I designed back in 1985.” He continued,

“As far as I was concerned, Deep Thought II was a dinosaur about to become extinct. It was not going to usurp the name of our new megaton solar blaster, even if only partially.”

The International Computer Chess Association (ICCA) was holding a World Computer Chess Championship in Hong Kong in 1995. Hsu, Campbell, and their team had won the event in 1989 and had not participated in 1992. The ICCA approached IBM to sponsor the event, and IBM agreed, which meant the team would have to participate. According to Hsu, they would much rather have prepared for the 1996 face-off against Garry Kasparov, but IBM thought it was a great publicity opportunity. By 1992, the Deep Blue team had begun working on a new chip design. For Kasparov, they planned to use the newest chips they had designed, but those chips would not be ready before the Hong Kong event, so they had to compete with Deep Thought II, the old dinosaur. What should the machine participating in the World Computer Chess Championship be called: Deep Thought II or Deep Blue Prototype?

This is when the naming debate took place. Hsu wanted Deep Thought II, but Brody wanted Deep Blue Prototype so that they could say Deep Blue was the “successor to the reigning World Computer Chess Champion, Deep Blue Prototype, at the time of the match with Garry Kasparov”. Brody assumed their machine would probably win, and in the “unlikely” event that it did not win the championship, they could say it was not really Deep Blue playing anyway. The problem, according to Hsu, was that losing was far from unlikely: they placed their chance of winning at about 50–50.

We’ll refer to the machine as Deep Thought II because Hsu and team did so.

In 1995, Deep Thought II played in Hong Kong, after having been mostly offline for at least six months. Its first-round opponent was Star Socrates, a parallel chess program from MIT running on a multi-million-dollar supercomputer. According to Hsu, “its machine was about a hundred times more expensive than the workstation that Deep Thought II ran on. And its search speed was at least comparable to Deep Thought II”. Star Socrates was a big threat to Deep Thought II. Hsu wrote in his book Behind Deep Blue, “When Deep Thought II played Star Socrates the year before, I saw for the first time a program that actually ‘out-searched’ Deep Thought II…Yet Deep Thought II had outclassed it easily. Would one year make a difference? Deep Thought II had been off-line for over half a year, and the MIT folk were probably not sitting idle.” Deep Thought II beat Star Socrates.

While facing its last opponent, Fritz, Deep Thought II went into a “panic time” state at one point in the game. It was in a bad position and needed to play a move. It had found a move, but that move was losing, so it needed to find a better one. It entered a state in which it spent extra time looking for an alternative. In searching for a good alternative, Deep Thought II was exploring a much larger tree (the search was exploding). Hsu recalled that Grandmaster Robert Byrne stopped by and whispered to them which move he thought Deep Thought II should play. Hsu and team could do nothing but wait for Deep Thought II to finish its massive search, wondering whether it would find the move Byrne suggested, the move it badly needed to improve its position. When time ran out, after all that searching, Deep Thought II played its originally intended move. It had not found a better move. It was over. Deep Thought II lost. Fritz would go on to win the championship.
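The “panic time” behavior described above is a time-management rule: when the position suddenly looks worse, spend more of the clock searching. A minimal sketch of such a rule, assuming hypothetical thresholds (`panic_drop`, `panic_factor`, and a cap of half the remaining clock); this is not Deep Thought II’s actual logic, which this post does not describe.

```python
def allocate_time(base_seconds, prev_score, new_score, remaining_seconds,
                  panic_drop=50, panic_factor=3.0):
    """Return how many seconds to spend on the current move (hypothetical rule).

    Scores are in centipawns from the engine's point of view. If the best
    move's score fell by at least `panic_drop` since the previous search
    iteration, enter "panic time" and allow `panic_factor` times the normal
    budget, but never more than half of the remaining clock.
    """
    budget = base_seconds
    if prev_score - new_score >= panic_drop:
        budget = base_seconds * panic_factor   # position just got worse: think longer
    return min(budget, remaining_seconds / 2)

print(allocate_time(60, prev_score=20, new_score=10, remaining_seconds=1200))    # normal
print(allocate_time(60, prev_score=20, new_score=-100, remaining_seconds=1200))  # panic
print(allocate_time(60, prev_score=20, new_score=-100, remaining_seconds=200))   # capped
```

As the Fritz game shows, the extra time only pays off if the larger search actually finds a better move; otherwise it simply drains the clock.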

Deep Thought II was nowhere close to Deep Blue, but it was also not the same machine as Deep Thought. They had made improvements (from Campbell, Hoane, and Hsu):

- Medium-scale multiprocessing: Deep Thought II had 24 chess engines (over time that number decreased as processors failed and were not replaced), compared with Deep Thought’s 2 processors (there were a few versions with 4 and 6 processors).

- Enhanced evaluation hardware: Deep Thought II evaluation hardware used larger RAMs and was able to include a few additional features in the evaluation function.

- Improved search software: The search software was rewritten entirely for Deep Thought II, and was designed to deal better with the parallel search, as well as implement a number of new search extension ideas. [This code would later form the initial basis for the Deep Blue search software.] Hsu wrote in his book Behind Deep Blue, “Officially, the reason for building Deep Thought II was to use it as a prototype for exploring parallel search algorithms. But the main reason was really to open up a large gap between our computer competitors and us, so that the project could go on for an extended period while I struggle through the design of the new chess chip.”

- Extended book: The extended book allowed Deep Thought II to make reasonable opening moves even in the absence of an opening book. [This feature was also inherited by Deep Blue.]
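One way to let a program make reasonable opening moves without a hand-built opening book is to reward moves that were played often, and scored well, in a database of strong games. A minimal sketch, assuming a hypothetical bonus formula (popularity on a log scale times the move’s average result, capped at `max_bonus` centipawns) and made-up move statistics; the Deep Blue team’s extended book weighed more factors than this.

```python
import math

def book_bonus(games_played, score_fraction, max_bonus=50):
    """Hypothetical extended-book bonus (centipawns) for one candidate move.

    games_played:   how often the move appears in the game database
    score_fraction: the moving side's average result (1 = win, 0.5 = draw, 0 = loss)
    """
    if games_played == 0:
        return 0
    popularity = min(math.log1p(games_played) / math.log1p(10_000), 1.0)  # 0..1
    result = 2 * score_fraction - 1                                        # -1..+1
    return round(max_bonus * popularity * result)

# Made-up statistics: (search evaluation in centipawns, games played, score fraction)
candidates = {
    "e4": (20, 9_000, 0.55),
    "a4": (25, 3, 0.30),
}
totals = {move: ev + book_bonus(n, s) for move, (ev, n, s) in candidates.items()}
best = max(totals, key=totals.get)
print(totals, best)
```

Here the search alone slightly prefers the rarely played move, but the book bonus tips the choice to the well-tested one (25 versus 22 centipawns).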

On to Deep Blue. The project’s task: win a match against the human world chess champion under regular time controls.

Engineering Deep Blue (1996–1997)

According to the Deep Blue team’s design philosophy, the level of integration mattered most:

- Encapsulate every chess evaluation term from chess books

- Create evaluation terms to deal with every known computer weakness

- Add hooks to handle new weaknesses, if they appear, with external FPGA hardware

- Put everything on one chip

Regarding speed, they said search speed would come from increasing the level of integration (more powerful single-chip chess machines) and from using massive parallelism.
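The simplest form of parallel game-tree search splits the moves at the root among workers, lets each worker search one subtree, and then picks the best result. A minimal sketch, assuming a nested-list toy tree (integer leaves are scores for the maximizing player) as a stand-in for chess, and Python threads as stand-ins for processors; CPython threads will not actually speed up this CPU-bound work, so a real system would use separate processes or machines. Deep Blue’s multi-level parallelism across SP nodes and chess chips was far more elaborate.

```python
from concurrent.futures import ThreadPoolExecutor

def minimax(node, maximizing):
    """Plain minimax over a nested-list tree whose int leaves are Max's scores."""
    if isinstance(node, int):
        return node
    values = [minimax(child, not maximizing) for child in node]
    return max(values) if maximizing else min(values)

def parallel_root_search(tree, workers=4):
    """Search each root move's subtree in its own worker, then pick Max's best."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # After Max's root move it is Min's turn, hence maximizing=False.
        scores = list(pool.map(lambda child: minimax(child, False), tree))
    best_index = max(range(len(scores)), key=scores.__getitem__)
    return best_index, scores[best_index], scores

print(parallel_root_search([[3, 5], [6, 9], [1, 2]]))
```

Naive root splitting gives up the alpha-beta bounds that a sequential search passes from one subtree to the next, so workers repeat work a single searcher would prune; this is one reason effective parallel chess search is harder than it looks.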

Deep Blue (1996)

Deep Blue (1996) was based on a single-chip chess search engine, designed over a period of three years. It ran on a 36-node IBM RS/6000 SP computer and had 216 chess chips. Most of the evaluation function terms were computed directly on the chips; as Hsu put it, “to perform the same computation on a general purpose computer, as that done by [1996] Deep Blue, would need at least one trillion instructions per second.” These chess chips evaluated chess positions in much greater detail than any chess program before them. Each chess chip could search about 1.6 million chess positions per second. According to Hsu, the theoretical maximum search speed of Deep Blue (1996) was about 300 million positions per second; the observed search speed was about 100 million positions per second.

The Deep Blue team had taken over three years to design these chips. They received the first chess chips in September 1995, but there were problems with them, so they needed a revised version, which did not arrive until January 1996. The first match was scheduled for February 1996, leaving them no time to test the entire system. The full 36-node Deep Blue (1996) played its first tournament-condition games in the actual February 1996 match against Garry Kasparov. Part of the system had played some games in preparation, but that system, known as Deep Blue Jr., was a single-node version of Deep Blue with only 24 chess chips. Even the relatively limited Deep Blue Jr. beat Grandmaster Ilya Gurevich 1.5–0.5, drew Grandmaster Patrick Wolff 1–1, and lost to Grandmaster Joel Benjamin 0–2.

Deep Blue (1996) was far more powerful than Deep Blue Jr.; in fact, it was the most powerful chess machine ever built. Still, the Deep Blue team didn’t know how it would actually play because they had never seen it play a single game. Deep Blue (1996) was only a two-week-old baby, the most powerful baby ever, but a baby nonetheless. Hsu said,

“Would it be the baby Hercules that strangled the two serpents sent by the Goddess Hera? Or were we sending a helpless baby up as a tribute to placate the sea monster Cetus, but without the aid of Perseus? We were afraid it would be the latter.”

Deep Blue won the first game it played against Kasparov, in February 1996.

According to Frederic Friedel, Kasparov’s computer consultant, the night after game one Kasparov went for a late-night walk in Philadelphia’s below-freezing temperatures. During the walk he asked,

“Frederic, what if this thing is invincible?”

In this first game of the match, Kasparov wrote, “the computer nudged a pawn forward to a square where it could easily be captured. It was a wonderful and extremely human move. If I had been playing White, I might have offered this pawn sacrifice…Humans do this sort of thing all the time. But computers generally calculate each line of play so far as possible within the time allotted…computers’ primary way of evaluating chess positions is by measuring material superiority, they are notoriously materialistic. If they “understood” the game, they might act differently, but they don’t understand. So I was stunned by this pawn sacrifice. What could it mean? I had played a lot of computers but had never experienced anything like this. I could feel — I could smell — a new kind of intelligence across the table. While I played through the rest of the game as best I could, I was lost; it played beautiful, flawless chess the rest of the way and won easily.”

“I could feel — I could smell — a new kind of intelligence across the table.”

It turned out that the pawn was not a sacrifice at all. Deep Blue (1996) did calculate every possible move “all the way to the actual recovery of the pawn six moves later”. Kasparov then asked, “So the question is…

“…if the computer makes the same move that I would make for completely different reasons, has it made an “intelligent” move? Is the intelligence of an action dependent on who (or what) takes it?”

Later in his TED talk, Kasparov said, “When I first met Deep Blue in 1996 in February, I had been the world champion for more than 10 years, and I had played 182 world championship games and hundreds of games against other top players in other competitions. I knew what to expect from my opponents and what to expect from myself. I was used to measure their moves and to gauge their emotional state by watching their body language and looking into their eyes. And then I sat across the chessboard from Deep Blue. I immediately sensed something new, something unsettling. You might experience a similar feeling the first time you ride in a driverless car or the first time your new computer manager issues an order at work. But when I sat at that first game, I couldn’t be sure what is this thing capable of. Technology can advance in leaps, and IBM had invested heavily. I lost that game. And I couldn’t help wondering, might it be invincible? Was my beloved game of chess over? These were human doubts, human fears, and the only thing I knew for sure was that my opponent Deep Blue had no such worries at all.”

“And I couldn’t help wondering, might it be invincible? Was my beloved game of chess over? These were human doubts, human fears, and the only thing I knew for sure was that my opponent Deep Blue had no such worries at all.”

Kasparov won the 1996 match 4–2. The match was tied 2–2 after the first four games, and although the final score looks decisive, the match was fairly close, closer than generally believed. According to Monty Newborn’s Beyond Deep Blue: Chess in the Stratosphere, “In Game 5, Kasparov chose to avoid his favorite Sicilian Defense, having been unable to win with it in the first and third games. This implicitly showed some recognition of and respect for his opponent’s strength. Instead, he played the Four Knights Opening. Then out of nowhere, on the 23rd move, he offered a draw to this opponent? And why? Deep Blue thought it was behind by three tenths of a pawn and the team was surprised by the offer. The rules of play permitted the team to decide whether or not to accept the draw, the only decision that could be made by humans on behalf of the computer. Kasparov was a bit short on time — about 20 minutes to make the next 17 moves, about a minute a move in contrast with the rate of play that allotted three minutes per move on average. So perhaps he was playing it safe here, feeling he could defeat Deep Blue in the final game with white pieces….The Deep Blue team huddled to discuss the offer. It huddled so long that Deep Blue played its 24th move, effectively rejecting the offer.” Kasparov went on to win that game. Had the offer been accepted, the match would have been tied going into the sixth and final game.

Kasparov ended his essay in Time by explaining why he won the first match: “I could figure out its priorities and adjust my play. It couldn’t do the same to me. So although I think I did see some signs of intelligence, it’s a weird kind, an inefficient, inflexible kind that makes me think I have a few years left.”

He did not have a few years left. In May 1997, he would lose the rematch to Deep Blue.

Deep Blue (1997)

Improvements on Deep Blue (1996)

The Deep Blue team knew that there were a number of deficiencies in Deep Blue (1996) that they needed to overcome, such as gaps in chess knowledge and computation speed. So Campbell, Hoane, and Hsu made the following changes for Deep Blue (1997):

- They designed a new, significantly enhanced chess chip: 1) the new chip had a completely redesigned evaluation function, going from “around 6,400 features to over 8,000”; some of these new features were a result of problems they observed in the 1996 games, 2) the new chip added hardware repetition detection and a number of specialized move generation modes (e.g., to generate all moves that attack the opponent’s pieces), and 3) efficiency improvements raised the search speed to 2 to 2.5 million positions per second per chip.

- They more than doubled the number of chess chips in the system and used a newer generation of the SP computer to support the higher processing demands created by the additional chess chips.

- They developed a set of software tools to aid in debugging and match preparation, e.g., evaluation tuning and visualization tools.

- They didn’t change search much because the search ability of Deep Blue was already “acceptable”.

Then they designed, tested, and tuned the new evaluation function.

The match

Kasparov had defeated Deep Blue (1996). But Deep Blue (1997) had improved from the first match to the second. It used a faster computing system with improved software for the second match.

It was May 1997, and it was time for a rematch. Predictions were in Kasparov’s favor; many experts expected the champion to score at least four points out of six. Kasparov was coming off a superb performance at the Linares tournament, and his rating was at an all-time high of 2820. He won the first game of the rematch. In a 2003 movie, he recalled his early confidence: “I will beat the machine, whatever happens. Look at Game One. It’s just a machine. Machines are stupid.”

The Deep Blue team was disappointed in game 1, and Joel Benjamin, a grandmaster who had imparted his chess knowledge to the machine, was convinced Deep Blue (1997) could play much better than it had in game 1. Postmortem analysis showed that Deep Blue could have drawn that game if only it had searched a few plies deeper…

Then came the second game, unlike anything a machine had been seen to play before. Computers’ strength was tactical chess; they didn’t play strategically. In other words, computers chose material gains over positional advantages. Until then. Kasparov had set a trap in which Deep Blue would gain a pawn (a material gain) but lose position. Instead of capturing the exposed pawn, as everyone expected, Deep Blue (1997) chose another route: a positional advantage. At the time, no machine was playing with what grandmasters called strategic foresight. Deep Blue had just shown a glimpse of it.

Deep Blue (1997) played game 2 like a human and that is not how machines were supposed to play. Grandmasters who were observing the game later said that had they not known who was playing, they would have thought that Kasparov was playing one of the greatest human players, maybe even himself. Joel Benjamin said he had witnessed a beautiful game of “chess”, not “computer chess”.

This play shook Kasparov and he never won a game against Deep Blue after that. He altered his style and went on the defensive. According to a (later) Wired article, grandmaster Yasser Seirawan said, “It was an incredibly refined move of defending while ahead to cut out any hint of countermoves, and it sent Garry into a tizzy.” Malcolm Pein, chess correspondent for The Daily Telegraph of London said, “There were only three explanations:

Either we were seeing some kind of vast quantum leap in chess programming that none of us knew about, or we were seeing the machine calculate far more deeply than anyone heard it could, or a human had intervened during the game.”

Immediately after the game, Kasparov accused IBM of cheating; he alleged that a grandmaster (presumably a top rival) had been behind a certain move. The claim was repeated in the documentary Game Over: Kasparov and the Machine. He claimed a move like that was too human and there was no way a computer could have chosen such a move.

IBM hadn’t cheated. According to the rules, they were allowed, in between games, to make adjustments to their system. “We would say we ‘tweaked’ the program during the match,” said Joel Benjamin, but they didn’t make any significant changes. After Deep Blue’s failure in game one, IBM went back to the drawing board and re-assigned relative weights for different features of the game.

Kasparov did not win the next three games, but neither did Deep Blue — three draws. Leading up to the final game, the match was tied 2.5–2.5.

Later, the chess-playing community would analyze game 2 and discover something shocking: Kasparov had resigned in a drawn position. According to Jonathan Schaeffer, “The analysis is quite deep and extends slightly beyond Deep Blue’s search horizon. And, apparently, also Kasparov’s. Kasparov’s team, which included Grandmaster Yuri Dokhoian and Frederic Friedel, were faced with the delicate task of revealing the news to Kasparov. They waited until lunch the next day, after he had had a nice glass of wine to drink. After they revealed the hidden drawing resource, Kasparov sunk into deep thought (no pun intended) for five minutes before he conceded that he had missed a draw. He later claimed that this was the first time he had resigned a drawn position.”

The last game was brisk and brutal: in just 19 moves, Deep Blue stunned the world. Deep Blue had unseated Kasparov. [See rare footage of the last game.]

The final score was 3.5–2.5. Until then, Kasparov had never lost a multi-game match against an individual opponent, and he had played some of the greatest matches in chess history, including several against Anatoly Karpov. But this time was different. Devastated, Kasparov said, “I lost my fighting spirit.” After game 6 he said,

“I was not in the mood of playing at all… I’m a human being. When I see something that is well beyond my understanding, I’m afraid.”

Kasparov was frustrated and admitted at the press conference that he was embarrassed and ashamed of his performance. He later asked IBM to see the logs of the game, and IBM refused to show them at the time. IBM was criticized for this, but it is worth remembering that the logs of Deep Blue’s computations and moves were understandable to chess masters. Giving the logs to Kasparov during the match would have been equivalent to giving away Deep Blue’s strategy; as Drew McDermott said, “…it would be equivalent to bugging the hotel room where he [Kasparov] discussed strategy with his seconds.”

Shortly after the match, Kasparov expressed his admiration for Deep Blue’s play in game 2: “The decisive game of the match was Game 2, which left a scar in my memory … we saw something that went well beyond our wildest expectations of how well a computer would be able to foresee the long-term positional consequences of its decisions. The machine refused to move to a position that had a decisive short-term advantage — showing a very human sense of danger. I think this moment could mark a revolution in computer science that could earn IBM and the Deep Blue team a Nobel Prize. Even today, weeks later, no other chess-playing program in the world has been able to evaluate correctly the consequences of Deep Blue’s position.”

Deep Blue played much better than in 1996. According to Jonathan Schaeffer, some of the reasons why Deep Blue won were:

- It made fewer errors, and the errors that it did make were less serious

- Its speed had increased

- Extensive tuning by people such as Joel Benjamin helped. According to Schaeffer, besides Grandmaster Joel Benjamin, Deep Blue was tested by several other grandmasters, and other researchers at IBM, such as Gerry Tesauro (TD-Gammon), were brought in to help; Tesauro used his neural net technology to help tune Deep Blue’s evaluation function.

- Kasparov’s preparation for the match was poor. Kasparov wrote about his preparation, “Unfortunately, I based my preparation for this match … on the conventional wisdom of what would constitute good anti-computer strategy. Conventional wisdom is — or was until the end of this match — to avoid early confrontations, play a slow game, try to out-maneuver the machine, force positional mistakes, and then, when the climax comes, not lose your concentration and not make any tactical mistakes…It was my bad luck that this strategy worked perfectly in Game 1 — but never again for the rest of the match. By the middle of the match, I found myself unprepared for what turned out to be a totally new kind of intellectual challenge.”

- Kasparov made some poor choices of openings; he did not play positions that he felt most comfortable in.

Garry Kasparov asked for a rematch, but it never occurred.

Many believed Kasparov was a better player, but his emotions got in the way. Either way, one of the biggest takeaways from this match was that we had collectively underestimated both the physiological and psychological aspects of the match. Our emotions, fears, desires, and doubts had a way of getting the best of us and sometimes we cannot do much more than just stand by and let it pass. And this is a uniquely human problem, one our machine opponents do not worry about.

It’s a theme Kasparov hinted at throughout the match and continues to discuss even now [Kasparov’s TED talk].

[Side note: A video summary of Kasparov vs Deep Blue]

Next, a bit on what went into building Deep Blue (1997). Most of this information comes from the following sources:

- Deep Blue By Campbell, Hoane, Hsu

- IBM’s Deep Blue Chess Grandmaster Chips By Hsu

- Designing a Single Chip Chess Grandmaster While Knowing Nothing About Chess By Hsu

System Architecture

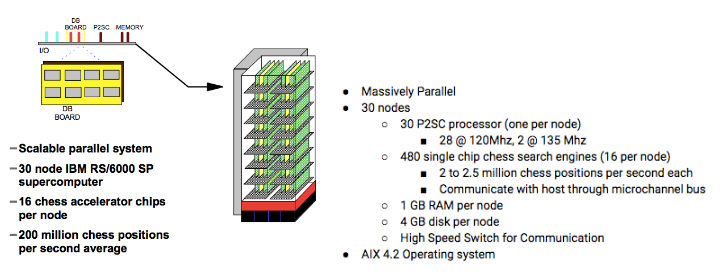

Deep Blue (1997) was a massively parallel system designed for carrying out chess game tree searches. Its distributed architecture consisted of a 30-node IBM RS/6000 SP computer (one processor per node), which did the high-level decision making, and 480 chess chips (each a single-chip chess search engine), 16 per SP processor. Each node had 1 GB of RAM and 4 GB of disk. The 480 chips ran in parallel to carry out a) deep searches, b) move generation and ordering, and c) position evaluation (over 8,000 evaluation features). Each chess chip could search 2 to 2.5 million chess positions per second, so the maximum system speed reached about 1 billion chess positions per second, or roughly 40 tera-operations per second.
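The headline figures follow from simple multiplication: 30 nodes × 16 chips gives 480 chips, and 480 chips × 2 to 2.5 million positions per second gives roughly 1 billion positions per second. The sketch below reproduces that arithmetic, reusing the rough figure of about 40,000 general-purpose instructions per position quoted in the chess chip section. These are peak numbers that assume every chip is busy all the time; the sustained match average quoted later in this article was far lower.

```python
# Back-of-the-envelope check of Deep Blue (1997) peak throughput,
# using only figures quoted in this article.
SP_NODES = 30                        # IBM RS/6000 SP nodes, one processor each
CHIPS_PER_NODE = 16                  # chess chips attached to each SP processor
CHIP_SPEED_RANGE = (2.0e6, 2.5e6)    # positions per second per chip
INSTRUCTIONS_PER_POSITION = 40_000   # rough general-purpose-CPU equivalent

def peak_positions_per_second(chip_speed: float) -> float:
    """Peak system speed if every chip searched at full speed."""
    return SP_NODES * CHIPS_PER_NODE * chip_speed

def equivalent_ops_per_second(positions_per_second: float) -> float:
    """Convert positions/s into general-purpose instruction equivalents."""
    return positions_per_second * INSTRUCTIONS_PER_POSITION

if __name__ == "__main__":
    print(f"chess chips: {SP_NODES * CHIPS_PER_NODE}")
    for speed in CHIP_SPEED_RANGE:
        pps = peak_positions_per_second(speed)
        ops = equivalent_ops_per_second(pps)
        print(f"{speed / 1e6:.1f}M pos/s per chip -> {pps / 1e9:.2f}B pos/s, "
              f"~{ops / 1e12:.0f} tera-ops/s")
```

At 2 million positions per second per chip this gives 0.96 billion positions per second (about 38 tera-operations); at 2.5 million it gives 1.2 billion (about 48), which brackets the "about 1 billion" and "40 tera" figures.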

Deep Blue Architecture and System overview

A brief history of chess machines (repeated from earlier)

Search

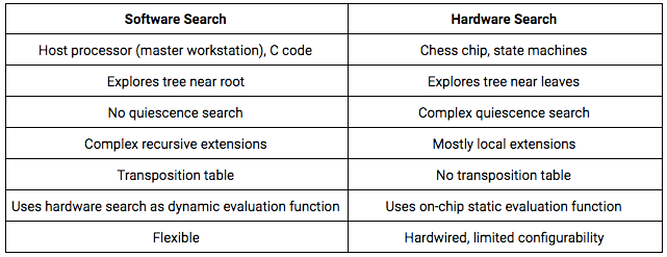

Deep Blue (1997) combined software and hardware for maximum effect and flexibility. The basic high-level strategy was as follows:

- The first 4 plies were searched on 1 workstation node

- The next 4 plies were searched in parallel over 30 workstation nodes

- The remaining plies were searched in hardware; this is where the chess chips kicked in

According to Deep Blue Team papers (Hsu and Campbell, Hoane, Hsu), Deep Blue was organized in three layers. For example, say the system needs to do a 12-ply search from a given position. Then,

- Master node: One of the workstation nodes, designated as the master node for the entire system, would search the first four plies in software, i.e., the top levels of the chess game tree. After four plies from the current game position, the number of positions has grown about a thousandfold.

- Worker nodes: At this point, all 30 workstation nodes (29 worker nodes and the master node) would search these new positions in software for four more plies; the master node would distribute the “leaf” positions to the worker nodes. These upper levels of the search took place in software and made use of transposition tables to improve search efficiency. After these four plies, the number of positions grows by roughly another thousandfold.

- Chess chips: Finally, the worker nodes would distribute their positions to the chess chips, which would search the remaining (in this case four) plies, i.e., the last few levels of the tree. This is where fixed-depth null-window alpha-beta and quiescence search took place (see search innovations).
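The three-layer split can be written down as a small function: the master takes the top plies, the workers take the next ones, and the chips get whatever depth is left. The sketch also turns the quoted "about a thousand times per four plies" into an effective branching factor of 1000^(1/4) ≈ 5.6 per ply. That constant-growth assumption is ours, made only to estimate how many positions each layer hands down; real search trees are far from uniform. The names `PlySplit`, `split_plies`, and `positions_handed_down` are hypothetical.

```python
# Sketch of how a fixed-depth search was split across Deep Blue's three layers.
# Assumption: the "about 1000x per 4 plies" growth quoted above is treated as a
# constant effective branching factor; real trees are far less uniform.
from dataclasses import dataclass

@dataclass
class PlySplit:
    master: int   # plies searched in software on the master node
    workers: int  # plies searched in software on the worker nodes
    chips: int    # remaining plies searched in hardware by the chess chips

def split_plies(depth: int, master_plies: int = 4, worker_plies: int = 4) -> PlySplit:
    """Assign the top plies to the master, the next plies to the workers,
    and whatever is left to the chess chips."""
    m = min(depth, master_plies)
    w = min(depth - m, worker_plies)
    return PlySplit(master=m, workers=w, chips=depth - m - w)

GROWTH_PER_4_PLIES = 1000                         # "about a thousand times"
EFFECTIVE_BRANCHING = GROWTH_PER_4_PLIES ** 0.25  # roughly 5.6 per ply

def positions_handed_down(split: PlySplit) -> tuple[float, float]:
    """Approximate positions passed master -> workers and workers -> chips."""
    to_workers = EFFECTIVE_BRANCHING ** split.master
    to_chips = to_workers * EFFECTIVE_BRANCHING ** split.workers
    return to_workers, to_chips

if __name__ == "__main__":
    s = split_plies(12)
    print(s)  # PlySplit(master=4, workers=4, chips=4)
    w, c = positions_handed_down(s)
    print(f"~{w:,.0f} positions to workers, ~{c:,.0f} positions to chips")
```

For the 12-ply example this reproduces the 4/4/4 split and the "thousand, then another thousand" growth (about 1,000 positions to the workers and about 1,000,000 to the chips). A shallower search, say 6 plies, would leave nothing for the chips under this simple rule.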

Comparison of hardware and software searches (*these are not covered in the blog, but I might come back and write about them.)

To summarize, the system had 16 chess chips per workstation node. The master node distributed work to the worker nodes, communicating with them over a high-speed switch using MPI (Message Passing Interface), and the worker nodes in turn handed positions to their chess chips, giving a distributed, parallel search.
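The master/worker idea can be illustrated without any chess at all: the master expands the top of the tree, farms the resulting "leaf" positions out to workers, and then backs the workers' scores up through its own plies. In this minimal sketch a Python thread pool stands in for the worker nodes and MPI messages, and the game is a hypothetical uniform tree with deterministic pseudo-random leaf scores. It deliberately omits alpha-beta pruning and work sharing, both of which a real parallel search like Deep Blue's has to handle; all function names are illustrative.

```python
# Toy master/worker split of a game-tree search.
# Assumptions (not from the Deep Blue papers): a thread pool stands in for the
# worker nodes and MPI messages, the "game" is a hypothetical uniform tree with
# deterministic pseudo-random leaf scores, and there is no alpha-beta pruning.
import hashlib
from concurrent.futures import ThreadPoolExecutor

BRANCHING = 4             # hypothetical number of moves in every position
Path = tuple[int, ...]    # a position is identified by the moves leading to it

def leaf_score(path: Path) -> int:
    """Deterministic pseudo-random score in [-100, 100], side to move's view."""
    digest = hashlib.sha256(repr(path).encode()).digest()
    return digest[0] % 201 - 100

def negamax(path: Path, depth: int) -> int:
    """Plain fixed-depth negamax below `path` (the work a worker does)."""
    if depth == 0:
        return leaf_score(path)
    return max(-negamax(path + (m,), depth - 1) for m in range(BRANCHING))

def frontier(path: Path, depth: int) -> list[Path]:
    """All positions exactly `depth` plies below `path` (the master's leaves)."""
    if depth == 0:
        return [path]
    return [leaf for m in range(BRANCHING) for leaf in frontier(path + (m,), depth - 1)]

def back_up(path: Path, depth: int, results: dict[Path, int]) -> int:
    """Master combines the workers' scores with negamax over its own plies."""
    if depth == 0:
        return results[path]
    return max(-back_up(path + (m,), depth - 1, results) for m in range(BRANCHING))

def distributed_search(total_depth: int, master_depth: int, n_workers: int = 4) -> int:
    leaves = frontier((), master_depth)
    remaining = total_depth - master_depth
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        scores = pool.map(lambda p: negamax(p, remaining), leaves)
        results = dict(zip(leaves, scores))
    return back_up((), master_depth, results)

if __name__ == "__main__":
    # The split search must agree with a single-node search of the same depth.
    print(distributed_search(5, 2), negamax((), 5))
```

Because negamax decomposes level by level, splitting the work this way cannot change the answer, only where it is computed. The hard engineering problems (sharing bounds between workers, balancing uneven subtrees) only show up once pruning is added.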

According to Campbell, Hoane, Hsu, overall system speed varied greatly, depending on the specific characteristics of the positions being searched: “For tactical positions, where long forcing move sequences exist, Deep Blue would average about 100 million positions per second. For quieter positions, speeds close to 200 million positions per second were typical. In the course of the 1997 match with Kasparov, the overall average system speed observed in searches longer than one minute was 126 million positions per second.” [Side note: at 200 million positions per second, a system can examine about 36 billion positions (200 million × 180 seconds) in the three minutes allocated, on average, for a single move in a chess game.] Deep Blue could average 12.2 moves ahead in a three-minute search. The team said that more software work could speed up the system by a factor of two to four, but they decided to focus the software work on increasing the system’s chess knowledge instead. Also, it turned out that further tuning to extend the search depth was not needed; this machine was sufficient to beat Kasparov.
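Multiplying each quoted speed by the 180 seconds of an average three-minute move gives the number of positions Deep Blue could examine per move. The sketch below does exactly that for the three speeds in the Campbell, Hoane, Hsu quote; the dictionary labels are just names for those figures.

```python
# Positions examined per move = positions per second x seconds per move.
SECONDS_PER_MOVE = 3 * 60   # three minutes per move on average

SPEEDS = {
    "tactical positions": 100e6,
    "1997 match average": 126e6,
    "quiet positions": 200e6,
}

def positions_per_move(positions_per_sec: float, seconds: float = SECONDS_PER_MOVE) -> float:
    return positions_per_sec * seconds

if __name__ == "__main__":
    for label, pps in SPEEDS.items():
        print(f"{label}: ~{positions_per_move(pps) / 1e9:.1f} billion positions per move")
```

This prints about 18.0, 22.7, and 36.0 billion positions per move respectively, so even the observed match average corresponds to over 20 billion positions behind each move.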

Chess chips

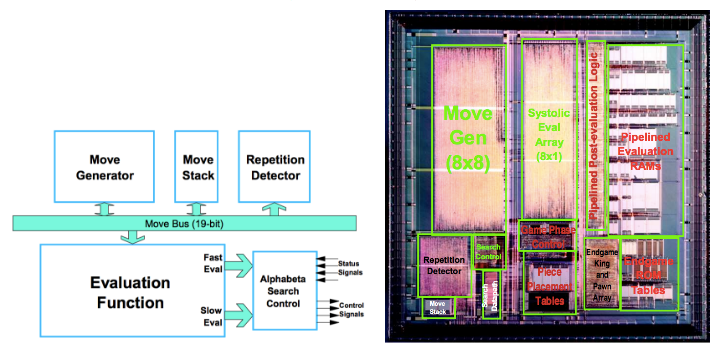

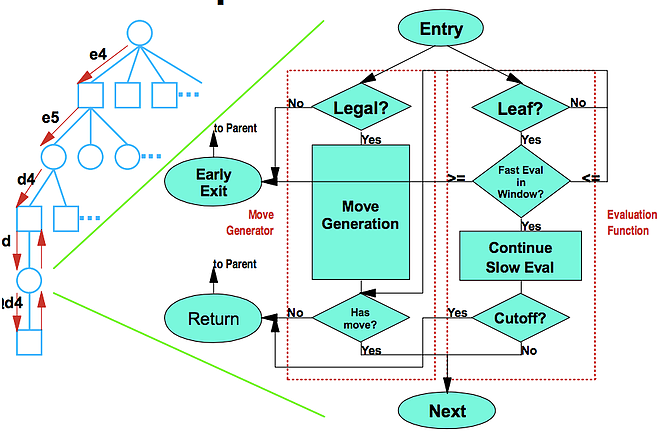

Each chess chip operated as a full chess machine. The chess chip was divided into four parts: the move generator, the smart-move stack, the evaluation function, and the search control.

- 1.5 million transistors

- The chip’s cycle time was between 40 and 50 nanoseconds

- 0.6-micron CMOS (complementary metal–oxide–semiconductor) technology

- 2 to 2.5 million chess positions per second

- Computing one chess position took the equivalent of roughly 40,000 instructions on a general-purpose processor

- Equivalent to a supercomputer executing 100 billion instructions per second

- 4 main parts:
  - Move generator
  - Evaluation function
  - Search control
  - Smart-move stack (further divided into a regular move stack and a repetition detector)

- Hsu: a single-chip system appears to play at a strong Grandmaster, and possibly Super Grandmaster, level
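The chip figures in the list above are mutually consistent, and a little arithmetic shows how. A 40 to 50 ns cycle time means a 25 to 20 MHz clock. If the fastest clock goes with the fastest search speed (an assumption of this sketch, since the list gives only ranges), that works out to about 10 clock cycles per position. Separately, 2.5 million positions per second × 40,000 instructions per position reproduces the "100 billion instructions per second" equivalence.

```python
# Figures quoted in the chip list; pairing the fastest clock with the fastest
# search speed (and slowest with slowest) is an assumption of this sketch.
CYCLE_TIME_NS = (40, 50)
POSITIONS_PER_SEC = (2.5e6, 2.0e6)
INSTRUCTIONS_PER_POSITION = 40_000

def clock_hz(cycle_ns: float) -> float:
    """Clock frequency implied by a cycle time in nanoseconds."""
    return 1e9 / cycle_ns

def cycles_per_position(cycle_ns: float, positions_per_sec: float) -> float:
    """Average clock cycles the chip spends on each position searched."""
    return clock_hz(cycle_ns) / positions_per_sec

def instruction_equivalent(positions_per_sec: float) -> float:
    """General-purpose instructions per second needed to match the chip."""
    return positions_per_sec * INSTRUCTIONS_PER_POSITION

if __name__ == "__main__":
    for ns, pps in zip(CYCLE_TIME_NS, POSITIONS_PER_SEC):
        print(f"{ns} ns -> {clock_hz(ns) / 1e6:.0f} MHz, "
              f"~{cycles_per_position(ns, pps):.0f} cycles/position, "
              f"~{instruction_equivalent(pps) / 1e9:.0f}B instructions/s equivalent")
```

The "about 10 cycles per position" figure is derived here from the quoted ranges, not stated in the sources.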

Hsu wrote, “The chess chips in 0.6-micron CMOS searched 2 to 2.5 million chess positions per second per chip. Recent design analysis showed that speedup to about 30 million positions/s is possible with a 0.35-micron process and a new design. Such a chip might make it possible to defeat the World Chess Champion with a desktop personal computer or even a laptop. With a 0.18-micron process and with, say, four chess processors per chip, we could build a chess chip with higher sustained computation speed than the 1997 Deep Blue.”

Block diagram of the chess chip

A chess chip’s basic search algorithm, search tree (left), flow chart (right)
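The chess chips searched the last few plies with fixed-depth null-window alpha-beta plus quiescence search. The sketch below is a software illustration of those ideas on a hypothetical random game tree, not the chip's circuit. Its quiescence search looks only at captures, which is a simplification, and each side may "stand pat" on the static score. A null-window call with the window (bound − 1, bound) answers a single yes/no question, "is the score at least bound?". The binary search over such tests at the end only shows that these cheap tests pin down the exact value; it is not a claim about how Deep Blue sequenced its searches. All names are illustrative.

```python
# Fixed-depth, fail-soft alpha-beta with a captures-only quiescence search,
# plus a null-window test, run on a hypothetical random game tree.
# A software illustration only; it is not the chess chip's actual circuit.
import random
from dataclasses import dataclass, field

INF = 10**9

@dataclass
class Node:
    static: int                                # static score, side to move's view
    moves: list = field(default_factory=list)  # list of (is_capture, Node) pairs

def build_tree(rng: random.Random, depth: int, branching: int = 3) -> Node:
    """Random toy tree; roughly 30% of moves are marked as captures."""
    node = Node(static=rng.randint(-50, 50))
    if depth > 0:
        for _ in range(branching):
            node.moves.append((rng.random() < 0.3, build_tree(rng, depth - 1, branching)))
    return node

def quiesce(node: Node, alpha: int, beta: int) -> int:
    """Search only captures; the side to move may stand pat on the static score."""
    best = node.static
    if best >= beta:
        return best
    alpha = max(alpha, best)
    for is_capture, child in node.moves:
        if not is_capture:
            continue
        score = -quiesce(child, -beta, -alpha)
        best = max(best, score)
        alpha = max(alpha, score)
        if alpha >= beta:
            break                              # cutoff
    return best

def alphabeta(node: Node, depth: int, alpha: int, beta: int) -> int:
    """Fixed-depth negamax alpha-beta with quiescence search at the horizon."""
    if depth == 0 or not node.moves:
        return quiesce(node, alpha, beta)
    best = -INF
    for _, child in node.moves:
        score = -alphabeta(child, depth - 1, -beta, -alpha)
        best = max(best, score)
        alpha = max(alpha, score)
        if alpha >= beta:
            break                              # cutoff
    return best

def null_window_test(node: Node, depth: int, bound: int) -> bool:
    """True iff the (integer) score is >= bound, using the window (bound-1, bound)."""
    return alphabeta(node, depth, bound - 1, bound) >= bound

def value_by_null_windows(node: Node, depth: int, lo: int = -1000, hi: int = 1000) -> int:
    """Recover the exact score by binary search over null-window tests."""
    while lo < hi:
        mid = (lo + hi + 1) // 2
        if null_window_test(node, depth, mid):
            lo = mid        # score >= mid
        else:
            hi = mid - 1    # score < mid
    return lo

if __name__ == "__main__":
    root = build_tree(random.Random(7), depth=5)
    exact = alphabeta(root, 3, -INF, INF)
    print(exact, value_by_null_windows(root, 3))
```

The tree is built two plies deeper than the 3-ply search so that quiescence search has captures to follow past the search horizon. With integer scores, a null-window search can never return a value strictly inside its window, which is why it can answer the yes/no question exactly.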

Later, Hsu wrote, “If willing to spend the money, you could build a chess machine more than a hundred times faster than the 1997 Deep Blue without resorting to faster chess chips.”

Move generator

The move generator in the Deep Blue chip was an extension of the Deep Thought move generator chip, which in turn was an extension of the move generator of the Belle machine. The move generator was based on an 8 x 8 combinatorial array, one cell per square of the chessboard, wired like a chessboard. It was effectively a silicon chessboard: the wiring corresponded to the way chess pieces move, so it could examine a position and find all legal moves at one time, in one flash.

Hsu described it as follows: “Each cell in the array has four main components: a find-victim transmitter, a find-attacker transmitter, a receiver, and a distributed arbiter. Each cell contains a four-bit piece register that keeps track of the type and color of the piece on the corresponding square of the chessboard.” The chess chip also searched moves in a particular order, as described by Campbell, Hoane, Hsu: 1) captures in which a low-valued piece captures a high-valued piece, 2) captures in which a high-valued piece captures a low-valued piece, and 3) non-captures.
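The three-group move order is easy to express as a sort key. In the sketch below, the piece values are the conventional 1/3/3/5/9 scale, which the article does not specify. Putting equal-value trades (for example, bishop takes knight) into the second group is an assumption, as is the tie-break inside each group (bigger victim first, then cheaper attacker). The `Move` type is hypothetical.

```python
# Sort moves into the three groups quoted above. Piece values, the handling of
# equal-value captures, and the tie-breaking inside each group are assumptions.
from dataclasses import dataclass
from typing import Optional

PIECE_VALUE = {"P": 1, "N": 3, "B": 3, "R": 5, "Q": 9, "K": 100}  # conventional values

@dataclass(frozen=True)
class Move:
    piece: str                    # moving piece, e.g. "N"
    to: str                       # destination square, e.g. "f3"
    victim: Optional[str] = None  # captured piece, or None for a quiet move

def order_key(move: Move) -> tuple[int, int, int]:
    """Smaller keys are searched first."""
    if move.victim is None:
        return (2, 0, 0)                           # 3) non-captures last
    attacker, victim = PIECE_VALUE[move.piece], PIECE_VALUE[move.victim]
    group = 0 if attacker < victim else 1          # 1) low takes high, 2) the rest
    return (group, -victim, attacker)              # bigger victim, then cheaper attacker

def order_moves(moves: list[Move]) -> list[Move]:
    return sorted(moves, key=order_key)

if __name__ == "__main__":
    moves = [Move("Q", "d5", "P"), Move("N", "f3"), Move("P", "e5", "R"), Move("B", "c6", "N")]
    for m in order_moves(moves):
        print(m.piece, m.to, m.victim)
```

Searching promising captures first matters because alpha-beta prunes far more when the best move is tried early.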

Evaluation functions

The evaluation function was a weighted function of different “features” of a chess position. Features ranged from very simple, such as a particular piece on a particular square, to very complex, and each feature was given a weight. A sample of evaluation features: rooks on the seventh rank, knight trap, rook trap, bishop pair, and pawn structure. (Statistics such as the number of times a move has been played, the relative number of times it has been played, how recently it was played, its results, and the strength of the players who played it describe moves in a game database rather than a single position; they belong to the extended book discussed below.) The chess chip recognized about 8,000 different features, and each was assigned a value. Most of the features and weights were tuned by hand.
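A weighted evaluation is, at its simplest, a dot product of feature values and weights. The sketch below uses a handful of hypothetical feature names loosely based on the sample above and invented weights in hundredths of a pawn. Features are signed from White's point of view (for example, +1 if only White has the bishop pair). Deep Blue's roughly 8,000 hand-tuned features were far richer than this, and some of its terms were not simple linear sums.

```python
# Linear evaluation as a weighted sum of features.
# Feature names and weights are hypothetical, in hundredths of a pawn,
# and every feature is signed from White's point of view.
from typing import Mapping

WEIGHTS = {
    "material": 1,           # material balance, already in hundredths of a pawn
    "bishop_pair": 30,
    "rook_on_seventh": 25,
    "knight_trapped": -80,
    "doubled_pawns": -15,
}

def evaluate(features: Mapping[str, float], weights: Mapping[str, float] = WEIGHTS) -> float:
    """Sum of weight * value over features that have a weight; others are ignored."""
    return sum(weights[name] * value for name, value in features.items() if name in weights)

if __name__ == "__main__":
    position = {"material": 100, "bishop_pair": 1, "rook_on_seventh": 1, "doubled_pawns": 2}
    print(evaluate(position))  # 100 + 30 + 25 - 30 = 125
```

Tuning an evaluation like this means adjusting the numbers in `WEIGHTS`, which is the kind of work described above.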

A fully parallel implementation of the evaluation function would have been too large, so evaluation was divided into two parts, fast and slow. Fast evaluation was computed in a single cycle and contained all the easily computed major evaluation terms, i.e., the fastest and most valuable features. Slow evaluation scanned the board one column at a time, with a latency of three cycles per column and one cycle per column to compute, for a total of 11 cycles across the chessboard’s eight columns (eight cycles plus three cycles of latency).
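One common way to exploit a cheap/expensive split is "lazy evaluation": skip the expensive stage when the cheap score is already so far outside the alpha-beta window that the expensive terms could not change the outcome. The text does not say that Deep Blue's chip did exactly this, so treat the sketch as an assumption-laden illustration. It also assumes the slow terms never exceed `margin` in magnitude. The cycle counts use the 1-cycle fast evaluation and the 11-cycle slow evaluation (8 columns + 3 cycles of latency) described above.

```python
# Lazy two-stage evaluation with a cycle count, as a software sketch.
# Assumptions: slow terms never exceed `margin` in magnitude, and skipping the
# slow stage outside the search window is a common technique assumed here,
# not a documented detail of Deep Blue's chip.
from typing import Callable

FAST_CYCLES = 1                        # fast evaluation: one cycle
COLUMNS = 8                            # slow evaluation: one board column per cycle...
SLOW_LATENCY = 3                       # ...plus three cycles of pipeline latency
SLOW_CYCLES = COLUMNS + SLOW_LATENCY   # 11 cycles

def evaluate_position(fast_score: int, slow_terms: Callable[[], int],
                      alpha: int, beta: int, margin: int) -> tuple[int, int]:
    """Return (score, cycles used)."""
    # If even the largest possible slow correction cannot bring the score back
    # inside (alpha, beta), the slow stage cannot change the search result.
    if fast_score + margin <= alpha or fast_score - margin >= beta:
        return fast_score, FAST_CYCLES
    return fast_score + slow_terms(), FAST_CYCLES + SLOW_CYCLES

if __name__ == "__main__":
    print(evaluate_position(500, lambda: 20, alpha=-50, beta=50, margin=100))  # (500, 1)
    print(evaluate_position(10, lambda: 20, alpha=-50, beta=50, margin=100))   # (30, 12)
```

Passing the slow terms as a callable means they are only computed when needed, which mirrors the point of the split: most positions pay only for the fast stage.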

Using expert decisions

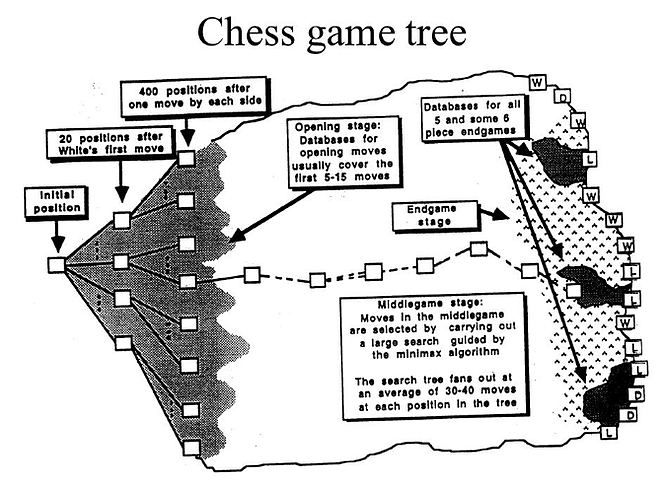

The game of chess consists of three phases: an opening phase, a middle-game phase, and an endgame phase. Depending on the phase, Deep Blue also drew on other resources: an opening book, an extended book, and endgame databases.

The opening phase lasts anywhere from 5 to 15 moves. Computers generally accessed large databases for selecting moves during this phase. Deep Blue had a hand-curated opening book (prepared primarily by Grandmaster Joel Benjamin) of 4,000 opening positions. Openings were chosen to emphasize positions that Deep Blue played well, including both tactically complex openings and more positional openings that it handled well in practice. Deep Blue, however, did not outplay Kasparov during this phase.

Computers also played endgames fairly well, but the best human players still played better in this phase. If the endgame had five or fewer pieces on the board, however, computers were better, because they usually had a database of all five-piece endgames. Deep Blue had an endgame database of all chess positions with five or fewer pieces on the board, as well as selected positions with six pieces. Each of the 30 processors in the system stored the 4-piece and some important 5-piece databases on its local disk. The rest of the database was kept on 20-GB RAID disk arrays for online lookup.
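The storage layout above amounts to a two-tier lookup: small, frequently needed tables on each node's local disk, everything else on the shared RAID arrays. The sketch below encodes that routing decision. The `important_five` and `selected_six` flags are hypothetical stand-ins for whatever classification the team actually used. The text does not say where the selected six-piece positions were stored, so placing them on RAID is an assumption. Piece counts include both kings.

```python
# Hypothetical routing of an endgame-database probe to a storage tier.
# The important_five / selected_six flags and the RAID placement of the
# six-piece positions are assumptions made for illustration.
from enum import Enum

class Tier(Enum):
    LOCAL_DISK = "local disk on each SP node"
    RAID = "shared 20-GB RAID arrays"
    NONE = "not in the database"

def endgame_tier(piece_count: int, important_five: bool = False,
                 selected_six: bool = False) -> Tier:
    """Decide where a position with `piece_count` pieces (kings included) is stored."""
    if piece_count <= 4:
        return Tier.LOCAL_DISK                      # all 4-piece tables are local
    if piece_count == 5:
        return Tier.LOCAL_DISK if important_five else Tier.RAID
    if piece_count == 6 and selected_six:
        return Tier.RAID                            # assumption, see lead-in
    return Tier.NONE                                # search must handle it

if __name__ == "__main__":
    for args in [(4,), (5,), (5, True), (6, False, True), (7,)]:
        print(args, endgame_tier(*args).value)
```

Keeping the most common small tables local avoids network and disk-array traffic for the probes that happen most often.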

The hard part, for computers, was to reach the endgame. In the middle game, the opening book no longer provides guidance and the endgame databases are not yet useful. It is also in the middle game that search explodes. A typical middle-game position has about 30 to 40 legal moves; each of those moves leads to a new position with another 30 or 40 moves, which in turn lead to more positions, and so on. This is what is meant by saying that the game tree grows at an exponential rate. Computers must somehow search these massive trees to make effective move decisions, and this is where search innovations really helped.
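With b moves per position, a full tree of depth d has b^d leaf positions. Alpha-beta pruning can cut this down dramatically. With perfect move ordering, Knuth and Moore showed that it needs to examine only b^⌈d/2⌉ + b^⌊d/2⌋ − 1 leaves, roughly the square root of the full tree. The sketch compares the two for a constant branching factor of 35 (an assumption: the midpoint of the 30 to 40 range above).

```python
# Size of a uniform game tree versus the best case for alpha-beta pruning.
# Assumption: a constant branching factor b (35 is the midpoint of 30-40).

def full_tree_leaves(b: int, d: int) -> int:
    """Leaf positions in a full minimax tree of depth d: b**d."""
    return b ** d

def minimal_alphabeta_leaves(b: int, d: int) -> int:
    """Knuth and Moore's minimal tree: leaves alpha-beta must examine with
    perfect move ordering, b**ceil(d/2) + b**floor(d/2) - 1."""
    return b ** ((d + 1) // 2) + b ** (d // 2) - 1

if __name__ == "__main__":
    for depth in (4, 8, 12):
        print(f"depth {depth:2d}: full {full_tree_leaves(35, depth):.2e}, "
              f"alpha-beta best case {minimal_alphabeta_leaves(35, depth):.2e}")
```

At depth 12 the full tree has about 3.4 × 10^18 leaves, while the alpha-beta best case is about 3.7 × 10^9. Real move ordering is never perfect, so actual searches land somewhere in between.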

To address this problem, Deep Blue used all the major search innovations, and it was equipped with an extended book built from a database of 700,000 grandmaster games, which it could use when the opening book was not sufficient. The idea was to summarize the information available at each position in this large game database and use it to push Deep Blue in an effective direction.
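Summarizing a game database at a position means turning raw move statistics into a preference for some moves over others. The statistics below are the kind named in this article: how often a move was played there, its results, the strength of the players who chose it, and how recently it was played. However, the article does not give Deep Blue's actual formula. The normalizations, the weights, and the `MoveStats`/`book_bonus` names are invented for illustration. In a real engine, such a bonus would typically nudge the search rather than dictate the move.

```python
# Hypothetical scoring of candidate moves from a grandmaster game database.
# The statistics follow the kinds named in this article; the normalizations
# and weights are invented for illustration.
from dataclasses import dataclass

@dataclass
class MoveStats:
    games: int         # games in the database where this move was played here
    score: float       # average result for the side that played it (1 win, 0.5 draw, 0 loss)
    avg_rating: float  # average rating of the players who chose it
    last_year: int     # most recent year the move was played

def book_bonus(stats: MoveStats, games_at_position: int, current_year: int = 1997) -> float:
    frequency = stats.games / games_at_position                     # popularity, 0..1
    result = stats.score - 0.5                                      # better than a draw?
    strength = (stats.avg_rating - 2400) / 400                      # stronger players score higher
    recency = max(0.0, 1 - (current_year - stats.last_year) / 20)   # fades over 20 years
    return 50 * frequency + 40 * result + 20 * strength + 10 * recency

def rank_book_moves(candidates: dict[str, MoveStats]) -> list[str]:
    """Candidate moves sorted from most to least favored."""
    total = sum(s.games for s in candidates.values())
    return sorted(candidates, key=lambda m: book_bonus(candidates[m], total), reverse=True)

if __name__ == "__main__":
    stats = {"e4": MoveStats(600, 0.56, 2600, 1996), "h4": MoveStats(40, 0.40, 2350, 1970)}
    print(rank_book_moves(stats))  # ['e4', 'h4']
```

A popular, well-scoring move played recently by strong players ranks first; a rare, poorly scoring, long-abandoned move ranks last.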

Kasparov versus Deep Blue: Computer Chess Comes of Age By Monty Newborn

We’re now (finally) done with the Deep Blue summary. This should have provided a glimpse of what went into building a machine that beat the best chess player. May 1997 was a special point in artificial intelligence, and people have opinions about it even now.

Reactions to Deep Blue’s win

At the press conference following game 6, Kasparov said, “I think it is time for Deep Blue to prove this was not a single event. I personally assure you that, if it starts to play competitive chess, put it in a fair contest and I personally guarantee you I will tear it to pieces.” He later appeared on Larry King Live and said he was willing to play Deep Blue “all or nothing, winner take all”. But that did not happen. Not long after the rematch, IBM decided to retire Deep Blue and ended all work on it.

James Coates of the Chicago Tribune wrote in October 1997, “IBM’s Deep Blue division needs dinging because its leaders announced on Sept. 23 that they will retire their massively hyped Deep Blue chess-playing computer with a one-win, one-loss record rather than give the flamboyant and unpredictable grandmaster Garry Kasparov a shot at a rematch. The geniuses who built a computer that whipped the world’s best chess player fair and square in May needs to learn here in October the first lesson that every back-room poker player and pool shooter learns from the git go. I’m talking about the rule of threes. Say we’re playing 9 ball or ping-pong and you wipe me out. I ask for a rematch and barely beat you. Then I say bye-bye, I’m the better player and now I’m going home?” He continued,

“Legs have been broken for less in pool halls and card rooms.”

Deep Blue had stunned the world. And everyone had an opinion about the match, Deep Blue, Kasparov, IBM, intelligence, creativity, brute force, or the mind…. Let’s start with the view of IBM CEO Louis Gerstner: “What we have is the world’s best chess player vs. Garry Kasparov.”

So, was Deep Blue really the best chess player? Or was Kasparov still the better player? The problem is that the rematch was only six games and Kasparov was only one point behind. Championship matches usually have many more games, and most of those games end in draws. So it was hard to say whether a six-game rematch said anything about who the better player was. Most would argue that Kasparov was still the better player. But maybe that wasn’t the real point. We saw some very special humans put tremendous effort into creating a machine that forced even the best of us to doubt. It beat us at one of our most treasured games and left us in awe (or some of us in fear).

Charles Krauthammer, in “Be Afraid” in the Weekly Standard, wrote, “To the amazement of all, not least Kasparov, in this game drained of tactics, Deep Blue won. Brilliantly. Creatively. Humanly. It played with — forgive me — nuance and subtlety.”

Even though Deep Blue played a game that appeared to have some elements of “humanness” and even though its victory seems mind-blowing, Rodney Brooks (and others) said that training a machine to play a difficult game of strategy isn’t intelligence, at least not as we use intelligence for other humans; this view was shared by many researchers in AI. On the other side was Drew McDermott, who said that the usual argument people used to say Deep Blue is not intelligent was faulty. He said, “Saying Deep Blue doesn’t really think about chess is like saying an airplane doesn’t really fly because it doesn’t flap its wings.”

So is Deep Blue intelligent?

Maybe, a little. Deep Blue was certainly not stupid, but it also wasn’t intelligent in the way we say another human being is intelligent. What Deep Blue showcased was a narrow kind of intelligence: the kind that shows brilliance in one domain, and does so because humans create better hardware, better software, better algorithms, and better representations. But if you ask these specialized machines to do anything else, they will fail. Deep Blue would have failed at all the other non-chess tasks we do; it did not exhibit general intelligence. No machine to date has exhibited general intelligence, and it appears that machines still have a long way to go before they can.

How did Deep Blue do what it did, then? When Murray Campbell was asked about a particular move the computer made, he replied, “The system searches through many billions of possibilities before it makes its move decision, and to actually figure out exactly why it made its move is impossible. It takes forever. You can look at various lines and get some ideas, but you can never know for sure exactly why it did what it did.”

Deep Blue could only play chess; it could do nothing else. This narrow intelligence, however, was already so complex that its makers could not trace its individual decisions. Deep Blue did not always make the same move in a given position, and its decisions were simply too complex to understand. We’re used to hearing about the lack of explainability in our systems now, but it was already a problem then.

Until Deep Blue, humans were winning at chess. Machines couldn’t beat the best humans, not even close. But then Deep Blue won. Soon so did other computers, and they have been beating us ever since. And sometimes we don’t know how they do what they do. This massive growth is their identity: no matter what our rate of improvement, once machines begin to improve, their learning and progress end up being measured exponentially. And ours isn’t.

But it’s not really us vs. them, even though it was Garry Kasparov vs. Deep Blue. That was a game, a way to test how machines could learn, improve, and play. But the biggest win was for the humans because their intelligence had created Deep Blue. And what if the best human minds work together with the best machines?

“Don’t fear intelligent machines, work with them”

It seems right to end with Garry Kasparov’s TED talk and his view on the experience.

“What I learned from my own experience is that we must face our fears if we want to get the most out of our technology, and we must conquer those fears if we want to get the best out of our humanity. While licking my wounds, I got a lot of inspiration from my battles against Deep Blue. As the old Russian saying goes, if you can’t beat them, join them. Then I thought, what if I could play with a computer — together with a computer at my side, combining our strengths, human intuition plus machine’s calculation, human strategy, machine tactics, human experience, machine’s memory. Could it be the perfect game ever played? But unlike in the past, when machines replaced farm animals, manual labor, now they are coming after people with college degrees and political influence. And as someone who fought machines and lost, I am here to tell you this is excellent, excellent news. Eventually, every profession will have to feel these pressures or else it will mean humanity has ceased to make progress. We don’t get to choose when and where technological progress stops.

We cannot slow down. In fact, we have to speed up. Our technology excels at removing difficulties and uncertainties from our lives, and so we must seek out ever more difficult, ever more uncertain challenges. Machines have calculations. We have understanding. Machines have instructions. We have purpose. Machines have objectivity. We have passion. We should not worry about what our machines can do today. Instead, we should worry about what they still cannot do today, because we will need the help of the new, intelligent machines to turn our grandest dreams into reality. And if we fail, if we fail, it’s not because our machines are too intelligent, or not intelligent enough. If we fail, it’s because we grew complacent and limited our ambitions. Our humanity is not defined by any skill, like swinging a hammer or even playing chess. There’s one thing only a human can do. That’s dream. So let us dream big.”

Here’s how the 1997 result stands: The machine won, humanity also won (even though we sometimes forget the latter).

Machines That Play (Deep Blue) was originally published in Hacker Noon on Medium.

Disclaimer

The views and opinions expressed in this article are solely those of the authors and do not reflect the views of Bitcoin Insider. Every investment and trading move involves risk - this is especially true for cryptocurrencies given their volatility. We strongly advise our readers to conduct their own research when making a decision.