
The Machines That Play series has been broken into 6 parts.

What’s an ideal game? Why are we interested in artificial intelligence (AI) and games? What were some major innovations in AI and games? This is Part 1 of the series and gives an overview of AI efforts related to games: chess, checkers, backgammon.

Part 1: Machines That Play (Overview) — this one

Part 2: Machines That Play (Building Chess Machines)

Part 3: Machines that Play (Chess)

Part 4: Machines That Play (Deep Blue)

Part 5: Machines That Play (Checkers)

Part 6: Machines That Play (Backgammon)

Before we talk about games and machines, let’s first talk about games and humans.

What’s an ideal game?

A game is something with rules and an objective. We “play” a game when we perform actions, constrained by these rules, in order to achieve the established objective.

We (humans) seem to need play almost as much as we need food, water, air, shelter, and tools to survive. What then, for us humans, would make a game ideal? This is a hard question to answer, but I imagine that an ideal game may have at least some of the following characteristics:

- A game in which all players are highly skilled (or at similar levels): A game in which a player is able to use his skill to overcome the challenge that his opponent(s) offer. Ideal pleasure lies in using his skill to outsmart or outmaneuver an opponent who is also very good at the game.

- A game that is neither too easy, nor too hard so a player would “just win”: A game in which a player beats his opponent(s), who also performs close to his skill level. It’s a game he could have lost, but doesn’t lose. He wins. And he wins because the game allows him to rise just slightly more than his opponent(s); he conquers the elements of chance and exercises control over his environment (and himself).

- A game that forces a player to develop and reach his highest potential in order to win: A game in which a player develops his highest skills to outsmart or outmaneuver his opponent(s). Ideal growth means he is able to realize his potential, to be all that he can be. He develops such great skill that the risk of failure is nearly eliminated.

- A game that changes a player’s psyche: A game that alters a player’s state of mind and in addition to realizing his highest potential, the game allows him to immerse himself fully in order to succeed. It is a game he would choose to continue playing as long as he can so he can accomplish (what he would later call) meaningful actions. And after the game, he would notice that he has changed from the game-playing experience and that his mind has been enriched with new skills, new experiences and new achievements.

- A game that forces a player to be a catalyst for global change (remember this is an ideal game): A game in which the player would need to transform (or even transcend) himself, others, or even the entire world to the fullest extent in order to win. An ideal game would involve the player realizing his (and humans’) deepest yearnings, passions, or values. If not this, then the game would at least consume him so deeply as to free him from the chains of his everyday life. And through his journey he would create a path for others to develop and realize their potential.

Is there an ideal game?

I don’t know if there is an ideal game, but, in my opinion, the following example comes pretty close to being one; it not only satisfies many of the characteristics listed earlier, but it challenges and blurs those same characteristics.

The Seventh Seal by Ingmar Bergman


Death: Well, I am a pretty skilled chess player.

Knight: But I bet you’re not as good as me.

Death: Why do you want to play chess with me?

Knight: That’s my business.

Death: Indeed.

Knight: The condition is that you let me live for as long as I can stand against you.

Knight: If I win, you let me go.

The player chooses to play as long as he lives (or can). The stakes are too high and he cannot help but still try.

We are never playing against Death (and almost winning). Our actions, in most games, are not nearly as “meaningful” as saving the lives of other humans. Our games give us an opportunity neither to come to terms with our inescapable despair nor to radically transform (or transcend) ourselves or the lives of others.

In reality there is probably no ideal game. Why do we still play then? A vague and simplistic answer is “because games are fun and/or useful”, but that doesn’t seem enough. We continue to both create and play games and we continue because games still demonstrate different combinations of characteristics stated above.

Some of our oldest games

We’ve always played games. Games are one of our oldest sources of play. It’s possible that earlier humans played games to practice skills that prepared them for hunting and combat. For example, the bow and arrow was invented by the end of the Upper Paleolithic. Archery is the skill of using bows to shoot arrows. In most prehistoric cultures, archery was an important military and hunting skill. Practicing archery (or playing to practice or improve) would have improved the archer’s probability of success. In this sense, such physical games may have been played to increase our chance of survival and success.

At a later time, humans began to settle in one place, which meant they weren’t moving around as much. This gave them some routine and physical games began to translate to board games. These games tapped into a variety of our desires. Some of the oldest games are:

- Senet (~3100 BC): The full name of this race board game means the “game of passing”. Ancient Egyptians believed in an afterlife and the journey to the afterlife required the person who had died to perform rituals and pass obstacles. By the time of the New Kingdom in Egypt, senet was viewed as a representation of the journey to the afterlife. It seems senet was not just a game, it represented our struggle to achieve immortality of some kind. [Games of this type tap into our innate desire to survive beyond this human state.]

- Royal Game of Ur (~3000 BC): This is a two-player strategy race board game. The Game of Ur received its name because it was discovered by the English archaeologist Sir Leonard Woolley during his excavations of the Royal Cemetery at Ur between 1922 and 1934. At some point people began to attach spiritual significance to the game and events in the game were believed to reflect the player’s future as well as convey messages from supernatural beings. [Games of this type tap into our yearning to know and control our future.]

- Mancala (~6th century AD): Mancala is not any one game, it is the classification or type of a game: any 2-player turn-based strategy board game. The goal is to capture all or some set of the opponent’s pieces (seeds, stones, beans, etc.). [Games of this type tap into our ancient desire to survive by building shelters, hunting, gathering food, and winning.]

We played (and continue to play) games for a wide variety of reasons:

- We play to experience delight (fun)

- We play to eliminate boredom / to escape reality

- We play to practice and improve skills

- We play to learn how to think critically and strategically

- We play to conquer uncertainty, chance, luck, and probability

- We play to create things in order to empower ourselves

- We play to destroy things in order to lessen our anger and frustration

- We play to settle differences

- We play to collaborate

- We play to sate all of our human desires (prehistoric and current): nurture, hunt, kill, conquer, combat, compete, collaborate, create, survive

- We play to win — to feel a sense of achievement

Throughout history we have created and played games to challenge our intelligence, strength, strategy, emotions, and more. In games, we come together and agree to a set of arbitrary rules. We compete and collaborate, we strategize to conquer chance and uncertainty, we set and achieve goals, we exercise imagination and experience the delight of success.

Why AI and games?

Games are hard. Games are interesting. Games are test-beds for AI.

As technology evolved, so did our games. Recent technology has provided us with new teammates as well as new opponents, in the form of machines. Even though the history of games is fascinating, we’ll focus on automation, artificial intelligence (AI), and games in this series. More specifically, we’ll focus on games where AI has learned to play either just as well as us or better. This journey will turn out to serve as a humble reminder:

No matter what the rate of improvement is for humans, once machines begin to learn, it will become hard for us to keep up with machines, whose learning and progress will end up being measured exponentially. And ours won’t.

Since the earliest days of computing, people wondered if machines could match or surpass human intelligence. Artificial intelligence is about building machines that are able to perform the tasks that (we think) require “intelligence”. But earlier AI approaches and algorithms were not sufficient to tackle real-world problems because of their complex and ambiguous nature. Programming machines to play games successfully served as one way for computers to learn tactics and strategies that could later be applied to other real-life domains.

Additionally, researchers hoped that teaching machines to play games well would provide a glimpse into how our own minds worked. We will see that even when machines surpassed humans in games, they did not necessarily give insight into the workings of our minds. They did, however, help push progress in computer science (and hence other related fields). And later, the research helped us tackle some complex real-world problems head-on.

“Games are fun and they’re easy to measure. It’s clear who won and who lost, and you always have the human benchmark…Can you do better than a human?” Murray Campbell

Emulate human thought process in games

Early AI researchers emphasized emulation of human thought process in games because they believed the best game-playing machines can be created by teaching them how to mimic human thought. They reasoned that if machines could tackle games successfully then they would most likely exhibit some type of intelligence.

Understand how the human mind works

Early AI researchers hoped that programming machines to play games successfully would help understand how the human mind worked, how it thought, how it solved problems, and ultimately what intelligence was. They assumed that building machines to perform tasks that required intelligence would provide a glimpse into how our own intelligence worked.

Games, board games specifically, are one of the oldest branches of AI, starting with Shannon and Turing in 1950. They provided a good way to measure the capacity of AI ideas because of 1) their simplicity of objective, 2) their well-defined rules, and 3) the huge range of possible strategies to reach the final objective. Every time AI conquered a game, it helped us tackle at least some complex real-world problems head-on.

The blog series will cover the following topics. Links to images are in original blogs.

The focus of the series is on some of the “firsts” in AI and games (and sometimes some of the predecessors of those programs), not on including *all* or *as many as possible* game programs.

- Game: Chess. Year: 1770

- In the spring of 1770, Wolfgang von Kempelen created a sensation; he presented the world’s first ever chess-playing automaton, which he called the Automaton Chess-player, known in modern times as The Turk. Read more…

A more honest attempt (El Ajedrecista (The Chessplayer))

- Game: Chess. Years: 1910s

- In the early 1910s, Torres y Quevedo built an automaton called El Ajedrecista (The Chessplayer), which made its debut at the University of Paris in 1914. It is considered to be one of the world’s first computer games. Read more…

Torres y Quevedo built El Ajedrecista (The Chess player)


Some theory of games

- Multiple games: chess, checkers, go, othello. Years: 1928–1944.

- John von Neumann founded the field of game theory. In 1928 he proved the minimax theorem. This theorem states that in zero-sum games (i.e. if one player wins, then the other player loses) with perfect information (i.e. in which players know at each time all moves that have taken place so far), there is a pair of strategies for both players that allows each to minimize his maximum losses, hence the name minimax.
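The minimax idea described above can be sketched in a few lines. This is a minimal illustration, not von Neumann's formulation: it assumes a tiny, fully enumerated game tree written as nested lists, where each leaf is the payoff to the first player and the two players alternate turns. The `Tree` type and the example tree are made up for illustration.

```python
from typing import List, Union

# Hypothetical representation: a leaf is an int payoff for the first player,
# an inner node is a list of child subtrees.
Tree = Union[int, List["Tree"]]


def minimax(node: Tree, maximizing: bool = True) -> int:
    """Return the payoff the first player can guarantee from this node."""
    if isinstance(node, int):
        # Leaf: the game is over and the payoff is known.
        return node
    # Evaluate every child with the other player to move.
    values = [minimax(child, not maximizing) for child in node]
    # The first player picks the largest value, the opponent the smallest.
    return max(values) if maximizing else min(values)


# Two moves for the first player, each answered by two replies.
example_tree = [[3, 5], [2, 9]]
guaranteed = minimax(example_tree)
```

In the example, the first move guarantees at least 3 (the opponent picks the smaller of 3 and 5), while the second guarantees only 2, so the value of the game for the first player is 3.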

Regarding 1940s-early 1950s: Early pioneers focused on building machines that would play chess much like humans did, so early chess progress relied heavily on chess heuristics (rules of thumb) to choose the best moves. Researchers emphasized emulation of human chess thought process because they believed teaching a machine how to mimic human thought would produce the best chess machines.

How future machines would play chess

- Game: chess. Year: 1950.

- Starting in the mid-1940s, scientists from different fields (mathematics, psychology, engineering, etc.) had started discussing the possibility of creating a machine that could think, a machine that could compete with or even surpass humans in pattern recognition, computation, problem solving and even language. Claude Shannon wrote the very first published article on programming a computer to play chess: a paper in Philosophical Magazine entitled Programming a Computer for Playing Chess. Read more…

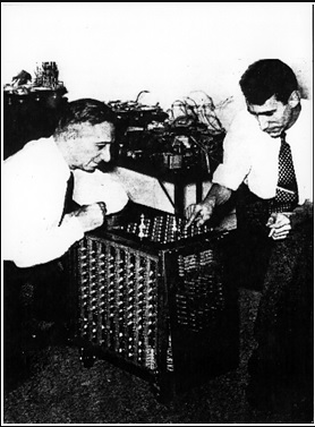

Claude Shannon demonstrates a chess-playing automaton he built for a limited version of chess, to chess champion Edward Lasker


Computing power was limited in the 1950s, so machines could only play at a very basic level. This is the period when researchers developed the fundamental techniques for evaluating chess positions and for searching possible moves (and opponent’s counter-moves). These ideas are still in use today.
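To make "evaluating chess positions" concrete, here is a minimal sketch of the simplest kind of evaluation: counting material. The piece values (pawn 1, knight 3, bishop 3, rook 5, queen 9) are the conventional rule-of-thumb weights, and the board encoding (uppercase letters for White, lowercase for Black, as in the piece-placement field of FEN) is an assumption made for this example. Real evaluation functions add many more terms, such as mobility and pawn structure.

```python
# Conventional rule-of-thumb piece values; the king is given 0 here because
# both sides always have one (an assumption made to keep the sketch simple).
PIECE_VALUES = {"p": 1, "n": 3, "b": 3, "r": 5, "q": 9, "k": 0}


def material_score(pieces: str) -> int:
    """Material balance from White's point of view.

    `pieces` is a string of piece letters: uppercase for White,
    lowercase for Black. Any other character (digits, slashes) is ignored.
    """
    score = 0
    for ch in pieces:
        value = PIECE_VALUES.get(ch.lower())
        if value is None:
            continue  # not a piece letter, e.g. "/" or "8"
        score += value if ch.isupper() else -value
    return score


# The standard starting position is balanced.
start_position = "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR"
start_score = material_score(start_position)
```

A search procedure would call a function like this at the leaves of the game tree, which is how evaluation and search fit together.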

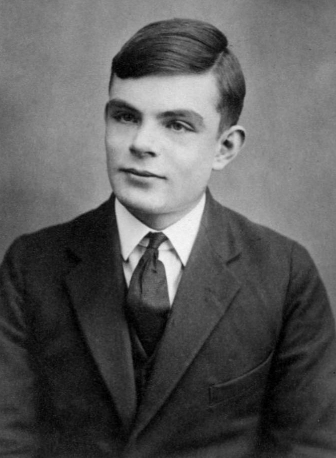

The first program that played chess (Turochamp)

- Game: chess. Years: 1948–1953

- In 1953, Alan Turing published an article on his chess program (Digital Computers Applied to Games) in the book Faster than Thought by B. Bowden. Shannon had not spoken about any particular program in his paper. It was Turing who wrote the first chess program. And he wrote it before computers even existed! He knew computers were coming and once they were powerful enough, they would be able to play chess. In 2012, Garry Kasparov played against Turochamp and defeated it in just 16 moves. Kasparov said (video), “I suppose you might call it primitive, but I would compare it to an early car — you might laugh at them but it is still an incredible achievement…

[Turing] wrote algorithms without having a computer — many young scientists would never believe that was possible. It was an outstanding accomplishment.”

AI before AI (Samuel’s checkers)

- Game: Checkers. Years: 1952–1962

- In 1952, Arthur Samuel completed his first checkers program on the IBM 701, the first major commercial computer. By 1955, Samuel had done something groundbreaking: he had created a program that could learn, something that no one had done before, and it was demonstrated on television in 1956. Samuel had been thinking about machine learning since he joined IBM and wanted to focus on building programs that could learn to play the game of checkers. In 1959 he coined the term “machine learning”. Read more…

Arthur Samuel playing checkers on IBM 701


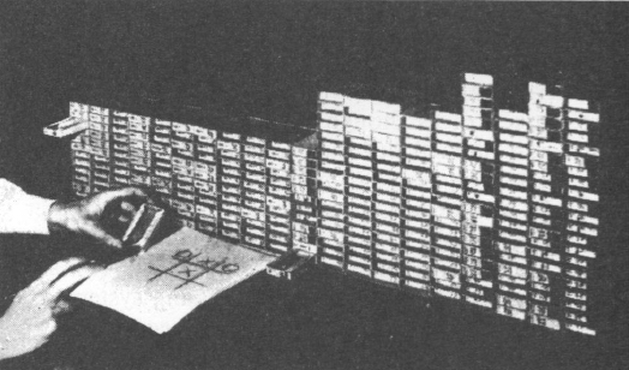

Learning to play a perfect game of Tic-Tac-Toe (MENACE)

- Game: Tic Tac Toe. Year: 1960

- During World War II, Donald Michie, a British computer scientist, worked for the Government Code and Cypher School at Bletchley Park and helped break the German “Tunny” code. In 1960 he developed the Machine Educable Noughts And Crosses Engine (MENACE), one of the first programs capable of learning to play a perfect game of Tic-Tac-Toe. Computers were not readily available, so he built it from 304 matchboxes filled with colored beads, which it used to learn to play. Coming soon…
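The matchbox-and-bead idea can be sketched in code. This is a loose illustration, not a reconstruction of Michie's machine: it assumes one "box" per board position, one bead count per legal move, a random draw weighted by bead counts, and a reward scheme (add beads after a win, remove them after a loss) whose exact numbers are assumptions. It also skips the symmetry reduction that keeps the real matchbox count small, and it keeps at least one bead per move so a box can never become empty. The class name `Matchboxes` and the board encoding ("." for an empty square) are hypothetical.

```python
import random


class Matchboxes:
    """One box per board position; beads in a box vote for moves."""

    def __init__(self, initial_beads: int = 3, seed: int = 0):
        self.initial_beads = initial_beads
        self.rng = random.Random(seed)
        self.boxes = {}  # board string -> {square index: bead count}

    def beads_for(self, board: str) -> dict:
        # Create the box lazily, with equal beads for every empty square.
        if board not in self.boxes:
            self.boxes[board] = {
                i: self.initial_beads for i, c in enumerate(board) if c == "."
            }
        return self.boxes[board]

    def choose(self, board: str) -> int:
        # Draw a bead at random: moves with more beads are more likely.
        beads = self.beads_for(board)
        moves = list(beads)
        weights = [beads[m] for m in moves]
        return self.rng.choices(moves, weights=weights)[0]

    def reinforce(self, history, delta: int) -> None:
        # After a game, adjust the beads for every (board, move) played.
        for board, move in history:
            beads = self.beads_for(board)
            beads[move] = max(1, beads[move] + delta)  # never empty a move


# Usage: pick a move, then reward it as if the game had been won.
menace = Matchboxes(seed=1)
board = "X.O......"
move = menace.choose(board)
menace.reinforce([(board, move)], delta=3)  # assumed reward for a win
```

Over many games, moves that tend to lead to wins accumulate beads and get chosen more often, which is the learning mechanism in miniature.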

By the end of the 1960s, computer chess programs were good enough to occasionally beat club-level or amateur players.

First chess program to compete in human tournaments (Mac Hack VI)

- Game: Chess. Year: 1967

- Mac Hack (also known as The Greenblatt Chess Program) is a knowledge-based chess program built by Richard Greenblatt in 1967. In the same year, Mac Hack VI became the first computer to play against humans under human tournament conditions. By the end of 1967, it had participated in four tournaments. Mac Hack was the “first widely distributed chess program”, running on many PDP machines. It became the first computer to reach the standard of average tournament players. It was demonstrably superior to all previous chess programs as well as most casual players. In 1965, Hubert Dreyfus said, “No chess program could play even amateur chess.” In 1967, Mac Hack VI beat Dreyfus. Read more…

MAC Hack 6 (Robert Q) was running on a DEC PDP-6


Regarding programs in the 1970s and 1980s: The emphasis was on hardware speed. In the 1950s and 1960s, early pioneers focused on chess heuristics (rules of thumb) to choose the best next moves. Even though programs in the 1970s and 1980s also used heuristics, there was a much stronger focus on software improvements as well as on faster and more specialized hardware. Customized hardware and software allowed programs to conduct much deeper searches of game trees (for example, involving millions of chess positions), something humans did not (because they could not) do.

The first program to beat a world champion (BKG)

- Game: Backgammon. Year: 1979.

- In 1979, BKG, a backgammon program created by Hans Berliner, became the first program to beat a world champion in any game. And it did it with the slow(er) computers of those days. Backgammon is one of the oldest known board games. Its history can be traced back nearly 5,000 years to archeological discoveries in the Middle East. [Side note: Berliner developed the B* search algorithm for game tree searching.] Read more…

Hans Berliner, a backgammon and a computer chess researcher


Miles ahead of the strongest human players (Chinook)

- Game: Checkers. Years: 1989–1996

- After Samuel’s work on checkers, there was a false impression that checkers was a “solved” game. As a result, researchers moved on to chess and mostly ignored checkers until Jonathan Schaeffer began working on Chinook in 1989. Schaeffer’s goal was to develop a program capable of defeating the best checkers player. The best player was Marion Tinsley. During a match, Chinook won a game against Tinsley, to which Schaeffer responded,

“We’re still members of the human race and Chinook defeating Tinsley in a single game means that it will only be a matter of time before computers will be supreme in checkers, and eventually in other games like chess.”

- Read more to see how Chinook vs. Tinsley plays out.

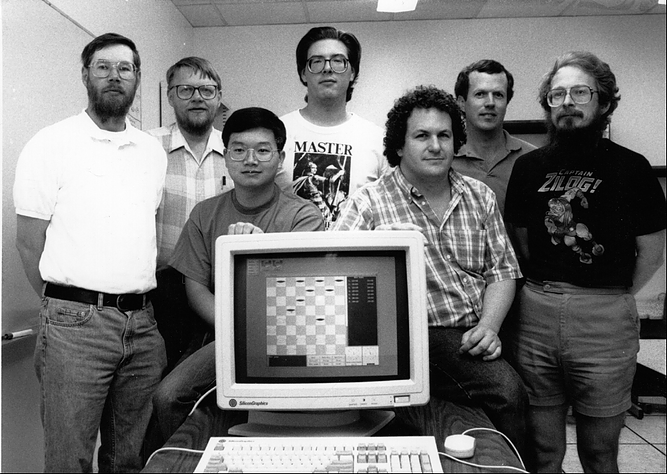

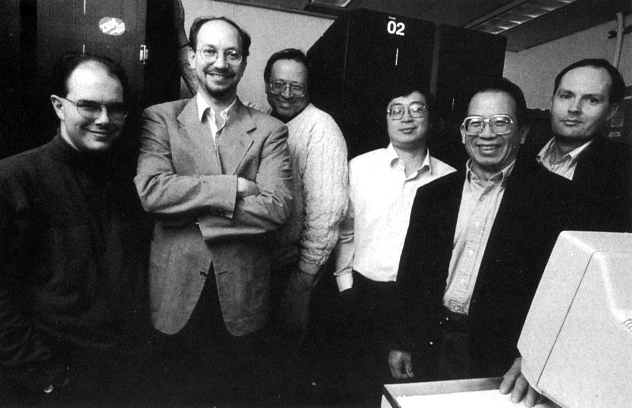

From left to right: Duane Szafron, Joe Culberson, Paul Lu, Brent Knight, Jonathan Schaeffer, Rob Lake, and Steve Sutphen. Our checkers expert, Norman Treloar, is missing


- Game: Chess. Years: 1988–1992

- Read more…

Regarding programs in the 1990s: At this time chess programs began challenging International Masters and later Grandmasters. A specialized chess machine, named Deep Blue, would end up beating Garry Kasparov, the best human chess player. We also see successful applications of reinforcement learning (something AlphaGo does many years later). So, do these machines think? And are they intelligent?

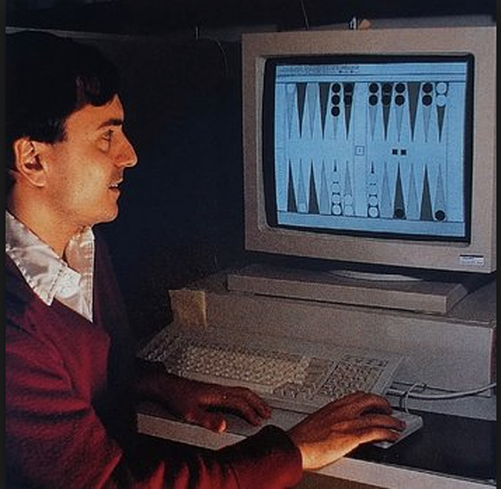

- Game: Backgammon. Years: 1992–1997

- TD-Gammon was a backgammon program developed in 1992 by Gerald Tesauro of IBM. TD-Gammon applied reinforcement learning, which goes back to Samuel’s checkers program (later we’ll see that AlphaGo does too). Tesauro said that TD-Gammon’s self-teaching methodology resulted in a surprisingly strong program: “…without lookahead, its positional judgement rivals that of human experts, and when combined with shallow lookahead, it reaches a level of play that surpasses even the best human players.” TD-Gammon 3.0 (1997) used a selective 3-ply search and was already at, or very near, the level of the best human players in the world. In the long run, TD-Gammon was expected to play even better than grandmasters because those grandmasters were only human. And humans get tired. Humans have biases, but TD-Gammon didn’t. TD-Gammon played differently than humans did, a theme we will notice more and more. Read more.
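The "TD" in TD-Gammon stands for temporal-difference learning. As a rough illustration of the core update, here is a tabular TD(0) sketch: after each move, the estimated value of the previous position is nudged toward the reward plus the estimated value of the new position. This is a simplification under stated assumptions. TD-Gammon itself used a neural network and the TD(λ) variant rather than a lookup table, and the state names, step size `alpha` and discount `gamma` below are made up for the example.

```python
def td0_update(values, state, next_state, reward, alpha=0.1, gamma=1.0):
    """Move the value estimate of `state` toward reward + gamma * V(next_state).

    `values` is a dict mapping states to estimated values; unseen states
    are treated as having value 0.0.
    """
    current = values.get(state, 0.0)
    target = reward + gamma * values.get(next_state, 0.0)
    values[state] = current + alpha * (target - current)
    return values[state]


# Usage: a position "s1" already believed to be promising pulls up the
# estimate of the position "s0" that led to it.
values = {"s1": 0.5}
new_estimate = td0_update(values, "s0", "s1", reward=0.0, alpha=0.1)
```

Because the target comes from the learner's own later estimate, a program can improve by playing against itself, with the final win or loss supplying the only external reward.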

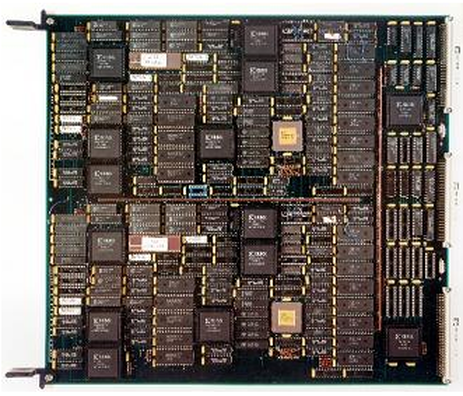

Being like a human, but (still) not being human: Deep Blue

- Game: Chess. Years: 1996–1997

- This one is long (and super interesting). Definitely read it.

- Deep Blue was only a two-week-old baby when it faced Garry Kasparov in 1996. Hsu, one of its creators, said, “Would it be the baby Hercules that strangled the two serpents sent by the Goddess Hera? Or were we sending a helpless baby up as a tribute to placate the sea monster Cetus, but without the aid of Perseus? We were afraid it would be the latter.” It won the first game it ever played against Kasparov, prompting Kasparov to question himself and ask, “…what if this thing is invincible?” It wouldn’t be invincible, and Kasparov would win the match 4–2. This match was much closer than most people think (Read more).

- After the match Kasparov said (about Deep Blue),

“I could feel — I could smell — a new kind of intelligence across the table.”

- There would be a rematch in 1997. This time the Deep Blue team had significantly improved their machine (read about the improvements, the system architecture, the search strategies, the chess chips, etc.). Deep Blue played a game no one expected and beat Garry Kasparov. Kasparov implied IBM cheated (IBM didn’t cheat) because Deep Blue’s play had a component of “humanness”. After this match, Kasparov said,

“I was not in the mood of playing at all... I’m a human being. When I see something that is well beyond my understanding, I’m afraid.”

- This is the match everyone has an opinion about: Is Deep Blue intelligent? Who is the better player? Did Deep Blue think? What does it say about us? Until Deep Blue, humans were winning at chess. Machines really couldn’t beat the best humans, not even close. But then Deep Blue won. Soon so did other computers, and they have been beating us ever since. This massive growth is their identity: no matter what our rate of improvement, once machines begin to improve, their learning and progress end up being measured exponentially. And ours doesn’t. Read more to get the details of how this machine was built, who was involved, the type of effort the team needed to put into the project, how Kasparov dealt with the defeat and how it became a significant marker in the history of AI.


IBM’s Deep Blue Team (from left to right): Joe Hoane, Joel Benjamin, Jerry Brody, F.H. Hsu, C.J. Tan and Murray Campbell. Source: Daniel King, Kasparov v Deeper Blue


Othello (Logistello) (Coming soon)

- Game: Othello. Year: 1997.

- In 1997, the computer Othello program Logistello defeated the world champion Takeshi Murakami with a score of 6–0. Coming soon…

- Game: Checkers. Year: 2007

- In 2007, the makers of Chinook published a paper in the journal Science announcing that Chinook had completely solved checkers: the program could no longer be defeated by any opponent, human or otherwise. Jonathan Schaeffer and his team had been working to solve the checkers problem since 1989. The article stated, “…checkers is now solved: Perfect play by both sides leads to a draw. This is the most challenging popular game to be solved to date, roughly one million times as complex as Connect Four.” Coming soon

- Game: Jeopardy. Year: 2011

- In 2011, IBM’s Watson, a question-answering system capable of answering questions posed in natural language, competed on Jeopardy! against legendary champions Brad Rutter and Ken Jennings and won. Coming soon

DeepMind’s AlphaGo and AlphaGo Zero

- Game: Go. Years: 2015–2017.

- In 2015 Google DeepMind’s AlphaGo defeated Fan Hui, a 2-dan professional and three-time European Go champion, 5–0. In 2016 it defeated Lee Sedol, a 9-dan professional, 4–1. Before the match with AlphaGo, Lee Sedol was confident it would be an easy win and predicted a 5–0 or 4–1 victory. AlphaGo stunned the world because Go had previously been regarded as a very hard problem in machine learning; everyone thought it was out of reach for the technology of that time. In 2017 DeepMind’s AlphaGo Zero beat AlphaGo by 100 games to 0. It had learned by playing games against itself, starting from completely random play. (We’ve seen this before in Samuel’s checkers and Tesauro’s TD-Gammon.) Coming soon

- Game: Poker. Year: 2018

- AI has had successes in perfect information games, but poker is not a perfect information game: a player has private information that others don’t. In a 120,000-hand competition, Libratus defeated 4 top professionals in heads-up no-limit Texas hold’em. (No-limit Texas hold’em is the most popular form of poker.)

Where are we?

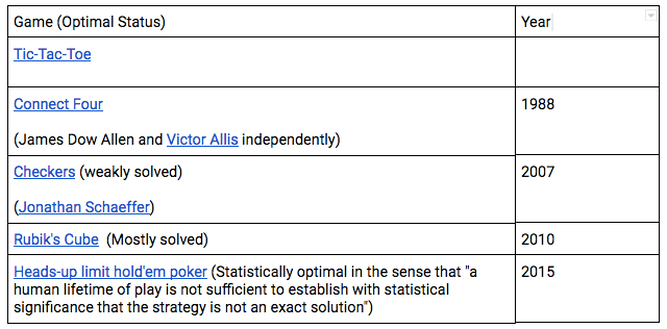

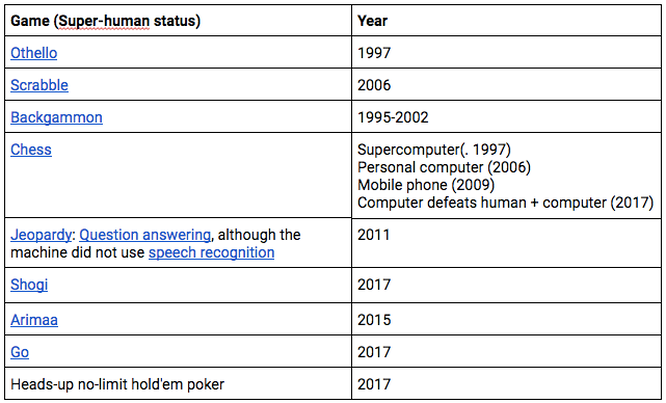

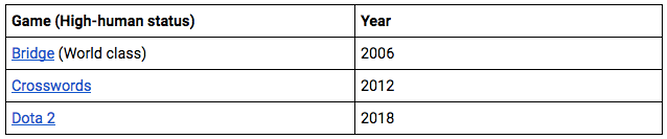

What does machine performance, compared to human performance, look like?

Broad classes of outcome for AI and games are:

Optimal: it is not possible to perform better (some of these entries were solved by humans)

Super-human: performs better than all humans

High-human: performs better than most humans

What next:

In 2016, Katja Grace and others of the Future of Humanity Institute conducted an expert poll, where they asked experts to give median estimates for certain AI tasks. Here are some results that are relevant to AI and games:

- 3 years for championship Angry Birds

- 4 years for the World Series of Poker

- 6 years for StarCraft

- More coming soon

Machines That Play (Overview) was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
