
Incentive markets that use blockchain technology can create trustless networks that efficiently solve problems before dissolving shortly thereafter. These so-called “trustless incentive markets” face a number of vulnerabilities worth investigating. One of the best known is the threat of a Sybil attack, in which an attacker creates fake identities to gain more rewards than they are fairly owed. In previous posts, we discussed a number of strategies that can mitigate the risk of Sybils.

A less commonly identified vulnerability is the threat of collusion, in which different members of the network work together to cheat the system. In this article, we’ll describe the risks of collusion and explain how networks can be designed to minimize them.

“The English language has 112 words for deception, according to one count, each with a different shade of meaning: collusion, fakery, malingering, self-deception, confabulation, prevarication, exaggeration, denial.” — Robin Henig

Incentive markets work well when they can correctly attribute the value that each member brings to the network. Take, for example, the Red Balloon Challenge, which challenged the general public to find ten red balloons scattered throughout the United States. A successful network would incentivize someone to find a red balloon, obviously, but it would also incentivize people to spread the word and recruit others in the search. In a previous article we described how the team that won the Red Balloon Challenge used cascading incentives:

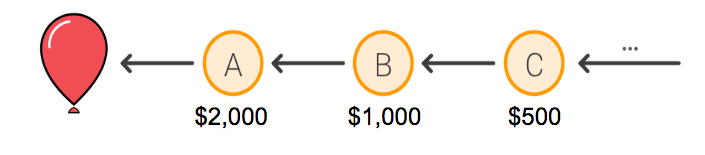

They promised $2,000 to the first person who submitted the correct coordinates for a single balloon, and $1,000 to whoever invited that person to the challenge. Another $500 would go to the person who invited the inviter, and so on.

The recursive incentive structure MIT used to solve the Red Balloon Challenge

Incentive markets that reward people in proportion to their contribution towards a common goal in this recursive way can motivate people to grow a network until its goals are met.
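The halving payout described above can be sketched in a few lines of Python. This is only an illustration: the function name `cascading_payouts`, the member names, and the assumption that each step up the chain receives exactly half of the reward below it are ours, extrapolated from the $2,000 / $1,000 / $500 figures.

```python
def cascading_payouts(chain, finder_reward=2000.0):
    """Return {member: reward} for one referral chain.

    `chain` lists members from the earliest referrer to the finder (last).
    The finder receives `finder_reward`; each person further up the chain
    receives half of what the person they invited receives (assumed rule).
    """
    payouts = {}
    reward = finder_reward
    for member in reversed(chain):
        payouts[member] = reward
        reward /= 2
    return payouts


# Hypothetical chain: Dana invited Erin, who invited Frank, who found a balloon.
print(cascading_payouts(["Dana", "Erin", "Frank"]))
# {'Frank': 2000.0, 'Erin': 1000.0, 'Dana': 500.0}
```

Because each reward is half of the previous one, the total paid out for a single balloon stays below twice the finder's reward, however long the chain grows.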

However, this mechanism can be manipulated through collusion between network members who want to cheat the system. Suppose Alice was referred to the Red Balloon Challenge and happens to know where a red balloon is located. Instead of submitting the location and redeeming her rightful reward, Alice (and her accomplices) can be dishonest: Alice can “refer” her husband Bob, who can “refer” their daughter Carol, who can then claim to have found a red balloon. As a result, Alice, Bob and Carol will receive the three largest rewards of the recursive payout structure and split the windfall among themselves. The person who initially referred Alice gets edged out of these high rewards, which are allocated unfairly to Bob and Carol.

Since the referral chain grew through superfluous referrals, there are more people to be rewarded, and so the cost of finding the red balloon is artificially increased. This collusion makes the network more costly, less efficient, and ultimately unfair to its honest users.
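To put rough numbers on the Alice, Bob and Carol scenario, here is a small worked example. It assumes the same halving rule as the $2,000 / $1,000 / $500 payout described earlier; the helper `cascading_payouts` and the placeholder name `Referrer` (the person who invited Alice) are ours.

```python
def cascading_payouts(chain, finder_reward=2000.0):
    """Finder (last in chain) gets finder_reward; each inviter gets half of the next."""
    payouts = {}
    reward = finder_reward
    for member in reversed(chain):
        payouts[member] = reward
        reward /= 2
    return payouts


# Honest case: Alice submits the balloon herself.
honest = cascading_payouts(["Referrer", "Alice"])
# Colluding case: Alice "refers" Bob, who "refers" Carol, who submits.
padded = cascading_payouts(["Referrer", "Alice", "Bob", "Carol"])

colluders = {"Alice", "Bob", "Carol"}
family_take = sum(v for k, v in padded.items() if k in colluders)

print(honest["Alice"], family_take)                # 2000.0 3500.0
print(honest["Referrer"], padded["Referrer"])      # 1000.0 250.0
print(sum(honest.values()), sum(padded.values()))  # 3000.0 3750.0
```

Under these assumptions the family's take rises from $2,000 to $3,500, the honest referrer's reward falls from $1,000 to $250, and the total cost of this balloon rises from $3,000 to $3,750.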

Algorithmic Solutions

Computer algorithms can help detect suspicious activity in a network, but they cannot definitively pinpoint collusion. One possibility would be to design an algorithm that detects the speed at which the last few transfers of a token occur before redemption. If the transfer speeds accelerate dramatically before a reward is redeemed, this could indicate collusion among a tight-knit group trying to fake referrals. Such an algorithm, moreover, could be sensitive to whether there are identical permutations of the same network members cycling tokens in this accelerated fashion at other times.
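A minimal sketch of the transfer-speed idea follows. It is not a description of any deployed detector: the function name, the `tail` and `ratio` parameters, and the choice to compare median gaps are all assumptions made for illustration. It takes the timestamps of a token's transfers, in order, and flags the token when the last few hops happened far faster than the earlier ones.

```python
from statistics import median


def accelerates_before_redemption(transfer_times, tail=3, ratio=10.0):
    """Return True if the last `tail` hops were at least `ratio` times
    faster (by median gap) than the hops before them.

    `transfer_times` are timestamps in any consistent unit, oldest first.
    The thresholds are illustrative defaults, not tuned values.
    """
    gaps = [b - a for a, b in zip(transfer_times, transfer_times[1:])]
    if len(gaps) <= tail:
        return False  # not enough earlier history to compare against
    earlier_median = median(gaps[:-tail])
    recent_median = median(gaps[-tail:])
    return earlier_median > 0 and earlier_median >= ratio * recent_median


# Hypothetical timestamps in hours since the token was issued.
suspicious = [0, 30, 55, 90, 120, 120.5, 121, 121.2]
organic = [0, 30, 55, 90, 120, 150, 170, 200]
print(accelerates_before_redemption(suspicious))  # True
print(accelerates_before_redemption(organic))     # False
```

A flag from a check like this is only a reason to look more closely; as noted above, it cannot prove that collusion took place.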

Algorithms can detect inorganic network behavior.


This kind of fraudulent behavior is not exhibited organically in a social network. Given the academic research into which patterns of network growth are organic, network designers should be able to detect many cases of collusion with the right software.
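One inorganic pattern, the same small group of members repeatedly appearing together at the end of redeemed chains, could be counted roughly as follows. The function name, the `tail` and `min_repeats` parameters, and the sample chains are hypothetical; order within the group is deliberately ignored so that any permutation of the same members counts as a repeat.

```python
from collections import Counter


def recurring_tail_groups(chains, tail=3, min_repeats=2):
    """Return {group: count} for member groups that close out at least
    `min_repeats` redeemed chains.

    Each chain lists members from first holder to redeemer. A group is the
    set of the last `tail` members, so their order does not matter.
    """
    counts = Counter(
        frozenset(chain[-tail:]) for chain in chains if len(chain) >= tail
    )
    return {group: n for group, n in counts.items() if n >= min_repeats}


# Hypothetical redeemed chains.
chains = [
    ["dana", "alice", "bob", "carol"],
    ["erin", "bob", "carol", "alice"],
    ["frank", "gina", "hal", "ivy"],
]
for group, n in recurring_tail_groups(chains).items():
    print(sorted(group), n)  # ['alice', 'bob', 'carol'] 2
```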

The problem with an algorithmic solution of this nature, though, is that circles of collusion can purposefully transfer tokens in ways that evade detection, at least if they are clever enough. In the next section, we’ll explain a quite different approach to mitigating the risk of collusion.

Psychological Solutions

Another approach to reducing the possibility of collusion in a decentralized network is to use psychological mechanisms. Although collusion can be financially profitable, it can also be seen as immoral when framed correctly by network designers. The more the immoral aspects of collusion are highlighted, the less likely it is to occur, because people have a distaste for exchanging their moral identity for money or material rewards.

What specifically is immoral about collusion in this context?

Collusion cheats other network members, who are real people and who have contributed more value to the network than they are compensated for. In the example above, the person who referred Alice, and in fact everyone else in the provenance chain of the token, receives a smaller reward than they deserve. Colluding might increase your own financial windfall, but it decreases every other honest network member’s windfall in the process.

Cheating others would likely lead to feelings of guilt.


Network designers can highlight these elements of dishonesty based on whether they are likely to resonate emotionally with network members. Simply put: collusion cheats other hard-working citizens of your provenance chain, not merely some abstract entity or corporation. If network designers can emphasize the personal harms that befall the average citizen when collusion occurs, they can motivate people to act more honestly on their networks. What’s more, the fact that collusion cheats other network members can be especially effective if there is a strong sense of kinship or tribalism within the network itself.

Market Solutions

Since every operation on a blockchain is recorded publicly on an immutable ledger, the market itself also provides incentives for people to avoid collusion. It becomes the responsibility of the token holder, for example, to ensure that the person they transfer it to will not partake in collusion. Otherwise, the token holder is the one who ultimately loses. As a result, people will be motivated to monitor the blockchain activity of people they are prospectively transferring tokens to.

The market itself acts to disincentivize collusion.


There are therefore strong incentives to avoid putting tokens in the wallet of anyone who has a new account, or an older account with a history of suspicious behavior. Since this is common knowledge, a culture of transparency and a “web of trust” can effectively exclude colluding actors from the network.
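As a rough illustration of the screening a token holder might do before a transfer, the sketch below flags recipients whose accounts are new or have a poor record. Everything here is hypothetical: the `AccountHistory` fields, the function name, and the thresholds are invented for the example and are not part of any nCent API.

```python
from dataclasses import dataclass


@dataclass
class AccountHistory:
    """Publicly visible facts about a prospective recipient (hypothetical)."""
    age_days: int
    total_transfers: int
    flagged_transfers: int  # past transfers judged suspicious


def is_risky_recipient(acct, min_age_days=30, max_flag_rate=0.1):
    """Return True for new accounts, accounts with no track record, or
    accounts whose share of flagged transfers exceeds `max_flag_rate`."""
    if acct.age_days < min_age_days:
        return True
    if acct.total_transfers == 0:
        return True
    return acct.flagged_transfers / acct.total_transfers > max_flag_rate


print(is_risky_recipient(AccountHistory(age_days=3, total_transfers=1, flagged_transfers=0)))     # True
print(is_risky_recipient(AccountHistory(age_days=400, total_transfers=50, flagged_transfers=1)))  # False
```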

There is no foolproof method of detecting and preventing collusion. At nCent, we think that a combination of algorithmic, psychological, and market solutions can significantly mitigate the risk, but network designers need to be aware of these possibilities before they can successfully deploy them.

Overall, the problem of collusion through side-deals is an important one to consider. It hardly negates the potential of incentive markets, but it does require network designers to think carefully about avoiding this unintended consequence of recursive incentives. If you’d like to share your ideas about preventing collusion on a blockchain, email me: kk@ncnt.io. To learn more about our mission at nCent, visit our homepage and Telegram channel.

Fighting Network Collusion at its Core was originally published in Hacker Noon on Medium.
