Chatbot Development Challenges — Part 2

In Part 1 of the series, I talked about common conceptual and day-to-day development challenges in chatbot building. In this part, I will discuss in detail some architectural challenges, which in my opinion are the most difficult ones.

Architectural Challenges

What is an architectural challenge in a general sense? Or better, how do you know that there’s an architectural challenge to be faced in your next project?

It can be really hard to know in advance. If you have little experience with the technology at the core of your next project, you will most likely start with an iterative approach and slowly increase complexity as you get more comfortable. Sooner or later you will run into a big problem that stems from the project's foundation being too simple and not well thought through. This usually happens when you reach something that wasn’t considered at the start, such as scalability problems or a widening scope. At that point you might have an Aha! moment, followed by thoughts like: “Why didn’t I think of this in the very beginning?”, “Now half of the project’s code has to be changed since my premise was wrong.”, “This is precisely why people hire domain experts instead of learning from tutorials and trying to implement everything themselves.” and so on.

Now that we have a general idea of what constitutes an architectural challenge, let’s consider the four that I identified during my last 9 months of chatbot development.

Application Scope

I like the word “chatbot”. It’s short, and most people associate some meaningful abstract concept with it, so it’s useful in everyday conversations. But it’s also pretty vague, which can be harmful when it comes to people’s expectations about the technology.

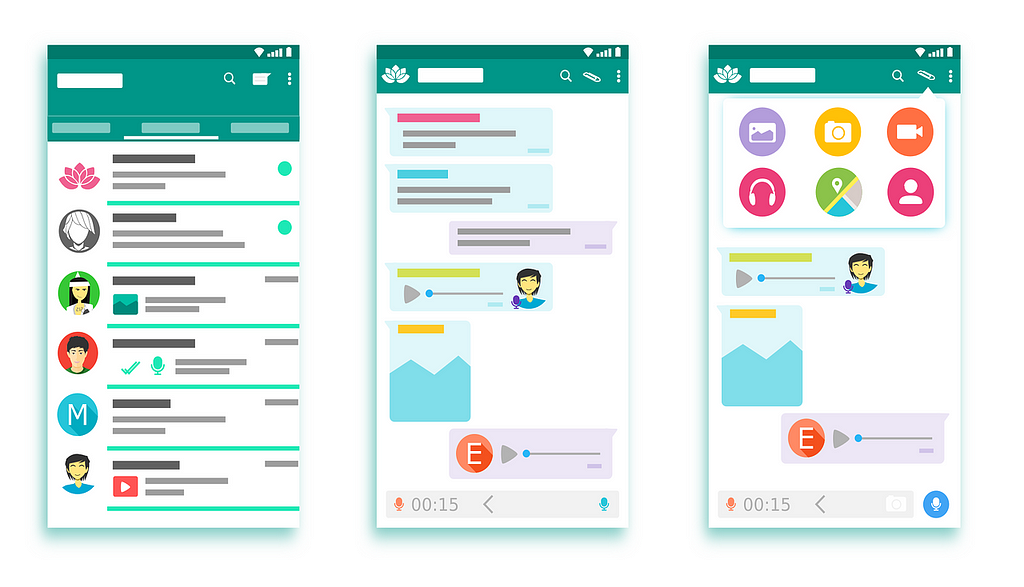

An image from my first article on chatbots from 9 months ago that got me started on this journey.

A more appropriate name for a chatbot technology (it’s more of a definition) is the following:

programmatically extensible interactive natural language FAQ system in a constrained domain

I know this definition is somewhat dense, but it gives a pretty accurate description of most chatbot projects out there.

Let’s break down the definition:

- Chatbots are programmatically extensible since you can invoke arbitrary code in response to a user utterance. This is done with the help of Custom Actions in Rasa Core or Fulfillments in Dialogflow, and is usually used to query a database or some external API and reply to the user using the returned information.

- Chatbots are interactive since, unsurprisingly, they require input from a user in order to work. They don’t simply list all the information they know as regular FAQ pages do.

- Chatbots are not simply interactive. They accept input in the form of natural language so that the possible input space is extremely large.

- In my experience, the most popular (and easiest to implement!) use case for chatbots is as a FAQ system. Sure, you can make it possible to book tickets or order food via a chatbot. But the initial motivation for most people considering chatbots is to handle the endless stream of questions, answered countless times in the past, that bombards their communication channels (email, Slack, Hangouts etc.).

In many organizations email is overused and becomes very hard to manage.

- Chatbots operate over a constrained domain. This is the most critical piece of the definition. Because a chatbot is an interactive system that accepts input in natural language, it’s tempting for a user to subconsciously conclude that they can type anything to the bot and get something useful back. But that’s just an illusion created by the use of natural language as the input format. By design, chatbots sacrifice explicitness about the application’s capabilities in return for a more novel and intuitive user experience (arguably everyone knows how to use a chat system). As a side note, many existing user interfaces are just fine without a chatbot in front of them, so a chatbot is not always the more intuitive alternative.
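To make “programmatically extensible” and “constrained domain” concrete, here is a minimal, framework-agnostic sketch. It is not the Rasa or Dialogflow API. The `Bot` class, the `action` decorator, the 0.6 confidence threshold, the intent names and the scope message are all hypothetical, and the intent and confidence values stand in for what an NLU model would return. Recognized intents are routed to arbitrary code. Anything outside the registered set, or below the threshold, gets a reply that restates the bot's scope instead of a guess.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

# Hypothetical handler signature: takes extracted entities, returns a reply.
Handler = Callable[[Dict[str, str]], str]


@dataclass
class Bot:
    scope_message: str
    threshold: float = 0.6  # assumed minimum NLU confidence to act on an intent
    handlers: Dict[str, Handler] = field(default_factory=dict)

    def action(self, intent: str) -> Callable[[Handler], Handler]:
        """Decorator that registers custom code for an intent (the 'extensible' part)."""
        def register(fn: Handler) -> Handler:
            self.handlers[intent] = fn
            return fn
        return register

    def respond(self, intent: str, confidence: float, entities: Dict[str, str]) -> str:
        # Constrained domain: unknown or low-confidence intents never reach custom code.
        if intent not in self.handlers or confidence < self.threshold:
            return self.scope_message
        return self.handlers[intent](entities)


# Hypothetical bot and scope message, for illustration only.
bot = Bot(scope_message="Sorry, I can only help with password resets and VPN access.")


@bot.action("qa.vpn_access")  # hypothetical intent name
def vpn_access(entities: Dict[str, str]) -> str:
    # A real action might query a database or an external API here.
    return "You can request VPN access on the IT portal."
```

With this setup, `bot.respond("qa.vpn_access", 0.92, {})` returns the VPN answer. Both an unregistered intent such as `chitchat.weather` and a 0.3-confidence match fall back to the scope message.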

The last part of the above definition brings us to the challenge of determining the scope of your chatbot application. The conventional ways of restricting an application’s scope won’t work here. A rule like “everything available via the UI is within the scope” works for most regular web and desktop apps, but not for a chatbot: its only control mechanism is natural language input, which is not restricted in any way.

Thus, the scope of a chatbot application has to be restricted in a less direct way. There are mainly two approaches which can be combined, if desired:

- It’s initially very tempting to make your bot seem like a real person (“I didn’t fill all those Small Talk templates for nothing!”*) and start the conversation with something like: “Hi, I am Super Help Bot. Ask me anything.” In my opinion, very few virtual assistants can make such a statement and really mean it (if Alexa or Siri were the ones starting a conversation, they could probably open with that phrase). For all the other bots out there, stating the bot’s scope in detail up front is a much better approach: “Hi, I am Humble Help Bot. You can ask me about A, B and C. I may be able to help you with X, Y and Z. For all other questions please contact support@bigcompany.com”. This should reduce the number of users who try to strike up a casual conversation with the bot, expect complex conversations, or ask about some obscure problem in 12 different ways.

- Once a user is in a conversation track that the bot understands, it is helpful to restrict the user’s input so that they stay within the scope of the current track and don’t jump to a completely unrelated topic, thus confusing the bot. This can be done using the bot’s internal logic. For example, if a user is asked for the quantity of the item they want to order, their reply has to contain a number. Otherwise, they will be asked the same question again (see Slot Filling with a FormAction if you want to learn how to do this in Rasa). An even better approach is to provide buttons with numbers that help the user give a valid reply. At the same time, it’s important to make it clear to the user how the conversation can be “restarted”, so that they don’t get stuck in a conversation branch they don’t want to finish. Keeping a “cancel” button, or making the bot capable of recognizing “stop” and “cancel” intents no matter what the conversation state is, helps with that (use the Intent Substitution rule in Rasa Addons for more fine-grained control over which intents a bot can recognize at any point in a conversation).
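The input-restriction ideas above can be sketched without any framework. This is a hypothetical stand-alone loop, not Rasa's FormAction API. `ask_quantity`, the button labels and the cancel keywords are illustrative assumptions, and a real bot would detect “stop” and “cancel” with its NLU model rather than exact string matching. The loop asks again until the reply contains a positive number, shows button suggestions with each prompt, and honors a cancel request at any point.

```python
import re
from typing import Iterable, Optional

CANCEL_WORDS = {"stop", "cancel"}     # assumed keywords; a real bot would use NLU intents
BUTTONS = ["1", "2", "3", "Cancel"]   # hypothetical suggested replies shown with the question


def ask_quantity(replies: Iterable[str]) -> Optional[int]:
    """Consume user replies until a valid quantity is given or the user cancels.

    Returns the quantity, or None if the user cancelled or ran out of replies.
    """
    for reply in replies:
        text = reply.strip().lower()
        if text in CANCEL_WORDS:
            return None  # let the user leave this branch of the conversation
        match = re.search(r"\d+", text)
        if match and int(match.group()) > 0:
            return int(match.group())
        # Invalid slot value: the same question is asked again, with buttons.
        print(f"How many would you like? Options: {', '.join(BUTTONS)}")
    return None
```

For example, `ask_quantity(["a few please", "2"])` repeats the question once and then returns 2. `ask_quantity(["cancel"])` returns `None` immediately.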

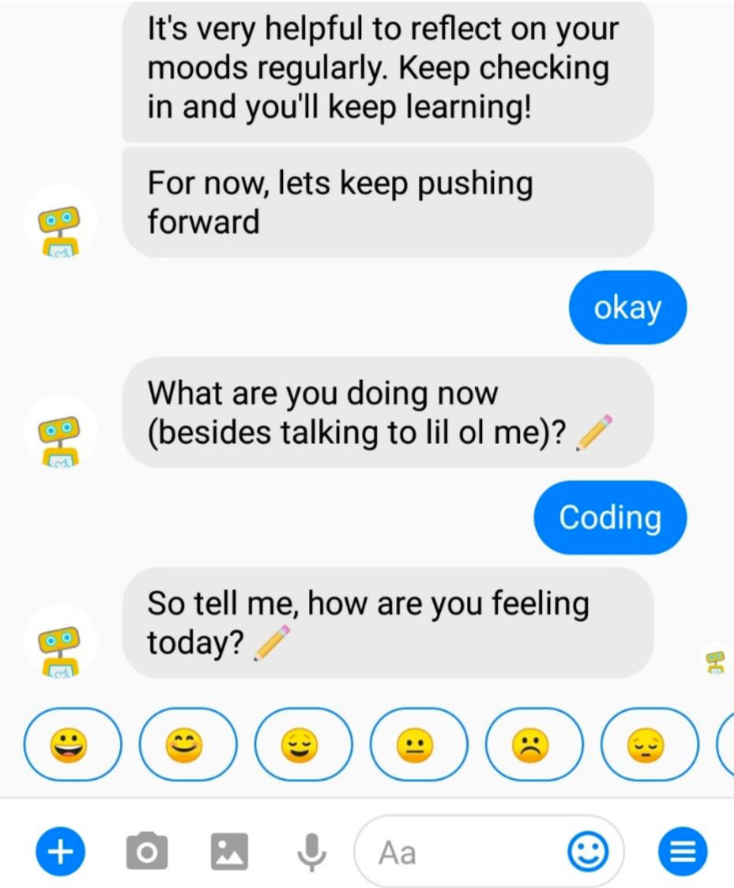

Woebot is one of the most engaging and well-designed chatbots that I’ve tried. One of the reasons it does so well is that it provides buttons for ~80% of its responses to restrict user input, and it leads the conversation most of the time.

It’s important to clearly state the scope of your chatbot not only to the users but also to other stakeholders in the project.

Assume that your team has a member who dedicates some time each day to viewing chat logs, labelling user utterances and adding them to the training dataset. As discussed above, it’s not easy to make users understand and accept the scope of the bot, especially at the beginning of the conversation. Therefore, each day that team member will encounter user messages that are outside of the bot’s scope (usually in the opening of a conversation). If it’s just one such question, you can skip it and do nothing. But what if there are ten messages within a day on the same out-of-scope topic? “It’s just a simple question, right? Why can’t we add it to the bot in the next release? We have all the training data available…” Thinking like this is a sure recipe for scope creep in your project. Adding new FAQ intents as new questions come in, without any proper planning, will most likely result in an atrocious intent naming structure that will confuse everyone in the future; I will talk more about this in the following subsection.

Intent naming

It’s best to come up with a rough “schema” for your chatbot intents before the project starts. Otherwise, over time you may end up with a large number of similar intents that make the training data labelling process very confusing. Here’s an example:

- help.smartphone.2fa for help requests related to general 2-FA issues on a smartphone

- request.disable.smartphone.2fa for requests to disable smartphone-enabled 2-FA

- qa.disabling.2fa for general questions about disabling 2-FA

All of these intent names are fine on their own and probably made sense to the person that was coming up with each new intent name.

But I see two problems with these. First, these intents all describe a very similar concept, and it’s really easy to mislabel a new smartphone-2-FA-related utterance when you are going through hundreds of new utterances in the logs. Second, the training data for these intents will be very similar syntactically and lexically. That is bad for the NLU model unless you have a very large number of examples per intent and are very accurate during labelling.

It’s much better to organize your intent names by the type of work the chatbot does when it acts on an intent. And don’t “hard code” the variables of those actions into intent names; use entities instead. Here’s how I would restructure the previous example:

- request.help would be used for creating all general help requests and placing them into the ticketing system. The fact that the user talks about 2-FA on a smartphone will be extracted as an entity and used to create a more specific help request for that case.

- request.disable would only be used if the chatbot can directly interact with the 2-FA system and unpair devices without any additional processes. In this case “2-FA on a smartphone” will be extracted as an entity so that the bot knows what to disable. And of course the bot should have access to some database to look up which devices the user has and clarify which one is used for 2-FA. If disabling 2-FA has to be handled by the help desk, I would drop this intent. Instead, users’ utterances asking to disable 2-FA would trigger request.help, creating an appropriate support ticket from the information extracted as entities. Even better, the bot would log the user’s entire utterance that triggered the request.help intent to some “extra info” field of the support ticket, so that the support person viewing the ticket has more context on the request.

- qa.disabling.2fa, or any intent starting with qa. in general, will be used to answer simple questions. The bot will simply look up an answer in some database that maps intents to answer templates and utter the template contents.

As you can see, these three intents each require the bot to perform a different type of action on the backend: creating a ticket, making changes to an external system, or fetching a question-answer template. This is a pretty efficient way to keep your intents organized, and it makes development a bit easier when you scale to a large number of intents. This approach also makes labelling easier, since you can think about the type of action the bot should take to satisfy a given user input and label it with the appropriate action-specific intent.
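The action-oriented naming scheme above maps naturally onto a dispatcher that branches on the intent type and receives the details as entities. Here is a minimal sketch, assuming hypothetical stand-ins for the ticketing system (`create_ticket`, `TICKETS`), the 2-FA system (`unpair_device`) and the answer database (`ANSWERS`). None of these are real APIs, and the entity names `device` and `feature` are illustrative.

```python
from typing import Dict, List

# Hypothetical intent-to-answer template store for qa.* intents.
ANSWERS: Dict[str, str] = {
    "qa.disabling.2fa": "2-FA can be disabled from your account security page.",
}

TICKETS: List[Dict[str, str]] = []  # hypothetical stand-in for a ticketing system


def create_ticket(entities: Dict[str, str], utterance: str) -> str:
    # Store the extracted entities plus the full utterance as "extra info" for support staff.
    TICKETS.append({**entities, "extra_info": utterance})
    return f"Created help request #{len(TICKETS)}."


def unpair_device(entities: Dict[str, str]) -> str:
    # Placeholder: a real version would look up the user's devices and call the 2-FA system.
    return f"Disabled 2-FA on your {entities.get('device', 'device')}."


def dispatch(intent: str, entities: Dict[str, str], utterance: str) -> str:
    """Route by intent type: each type corresponds to one kind of backend work."""
    if intent == "request.help":
        return create_ticket(entities, utterance)
    if intent == "request.disable":
        return unpair_device(entities)
    if intent.startswith("qa."):
        return ANSWERS.get(intent, "Sorry, I don't have an answer for that yet.")
    raise ValueError(f"Unknown intent: {intent}")
```

Calling `dispatch("request.help", {"device": "smartphone", "feature": "2fa"}, "my phone 2fa is broken")` files a ticket that carries both entities and the raw utterance. Adding a new FAQ answer means adding an `ANSWERS` entry, not a new code path.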

A few other things: be consistent about whether you use singular or plural nouns in your intent names. Otherwise, every time you need an intent name in some other part of the application, you will have to go back to the NLU training data to check exactly how it is spelled. For example, request.item.monitor (singular) alongside request.item.ethernet_cables (plural) is exactly the kind of mix to avoid.

Finally, be consistent with your verb forms: intents qa.disabling.2fa and qa.disable.account shouldn’t be used together in the same training dataset; stick to one form to avoid headaches.
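Consistency rules like these are easy to check automatically before training. Below is a rough heuristic linter, assuming the dot-separated `type.action.object` scheme used in this section. The allowed types and the suffix rules are illustrative assumptions, and plain string heuristics like these will miss irregular verbs and plurals and can misfire on words that merely end in “s”.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

ALLOWED_TYPES = {"request", "qa"}  # assumed top-level intent types


def verb_stem(word: str) -> str:
    """Crude heuristic stem so that 'disable' and 'disabling' compare equal."""
    if word.endswith("ing"):
        word = word[:-3]
    return word.rstrip("e")


def lint_intents(names: List[str]) -> List[str]:
    problems: List[str] = []
    # (intent type, verb stem) -> spellings seen for that verb
    forms: Dict[Tuple[str, str], Set[str]] = defaultdict(set)
    # parent prefix -> whether each leaf name looks plural (ends in "s")
    leaves: Dict[str, Set[bool]] = defaultdict(set)

    for name in names:
        parts = name.split(".")
        if parts[0] not in ALLOWED_TYPES:
            problems.append(f"{name}: unknown intent type '{parts[0]}'")
        if len(parts) > 1:
            forms[(parts[0], verb_stem(parts[1]))].add(parts[1])
        if len(parts) > 2:
            leaves[".".join(parts[:-1])].add(parts[-1].endswith("s"))

    for (intent_type, _stem), spellings in forms.items():
        if len(spellings) > 1:
            problems.append(f"{intent_type}.*: mixed verb forms {sorted(spellings)}")
    for parent, plural_flags in leaves.items():
        if len(plural_flags) > 1:
            problems.append(f"{parent}.*: mixed singular and plural names")
    return problems
```

`lint_intents(["qa.disabling.2fa", "qa.disable.account"])` flags the mixed verb forms. `lint_intents(["request.item.monitor", "request.item.ethernet_cables"])` flags the singular/plural mix. Verb forms are compared only within one intent type, so the restructured set request.help, request.disable and qa.disabling.2fa passes cleanly.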

Administration

Just like building any other software product, building a chatbot is a continuous process. An important part of building a good chatbot is frequently monitoring the latest chat logs, using that data to improve the NLU model, and taking note of which features are missing. This requires a well-designed administration tool.
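At its core, the log-review part of such a tool filters conversation logs down to the utterances a human should look at. Here is a minimal sketch, assuming a hypothetical `LogRecord` with `text`, `intent` and `confidence` fields, a fallback intent literally named `fallback`, and an illustrative 0.7 threshold. Real frameworks store logs in their own formats.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class LogRecord:
    text: str          # the user's utterance
    intent: str        # intent predicted by the NLU model ("fallback" assumed for no match)
    confidence: float  # model confidence for that intent


def review_queue(logs: Iterable[LogRecord], threshold: float = 0.7) -> List[LogRecord]:
    """Return low-confidence or fallback utterances, least confident first."""
    flagged = [r for r in logs if r.confidence < threshold or r.intent == "fallback"]
    return sorted(flagged, key=lambda r: r.confidence)
```

A reviewer would then label each queued utterance with the correct intent, or mark it as out of scope, before it goes into the training data.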

In my opinion, the main selling point of cloud chatbot solutions from companies like Google and Microsoft is their easy-to-use admin web UI for a very small price (at a small scale). With a UI like that, a team member with any level of technical knowledge can easily contribute to the project by reviewing the latest conversation logs, labelling user utterances that weren’t recognized by the bot, and creating new intents.

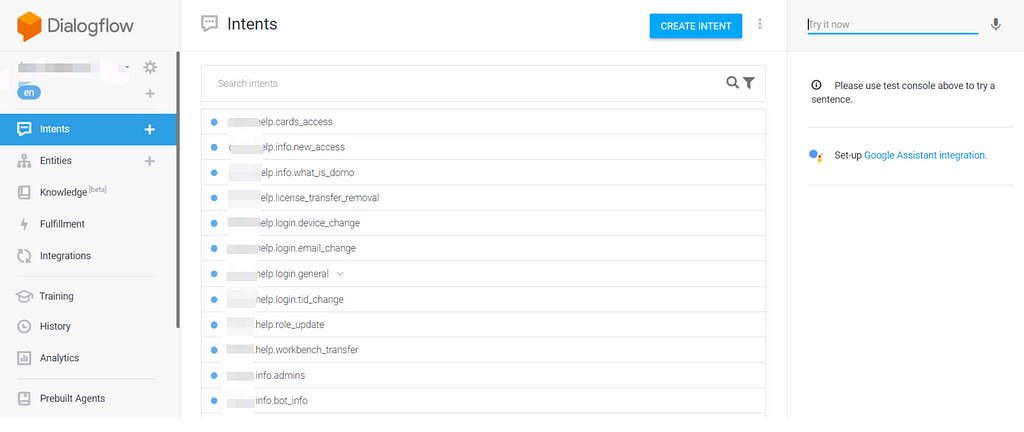

One of the biggest advantages of using Dialogflow is being able to work on your bot using an interface like this.

In the world of open-source chatbots, a good admin UI is arguably the most valuable asset a chatbot consultant or an open-source chatbot framework developer can have. In fact, Rasa Technologies GmbH, the company behind Rasa Stack, generates its revenue by providing technical support and selling licenses for Rasa Platform, a suite of tools that help with the development, support and administration of a Rasa Stack chatbot.

How the chatbot is going to be managed day-to-day is an important thing to consider when you are just starting out with the project and unsure which framework to choose.

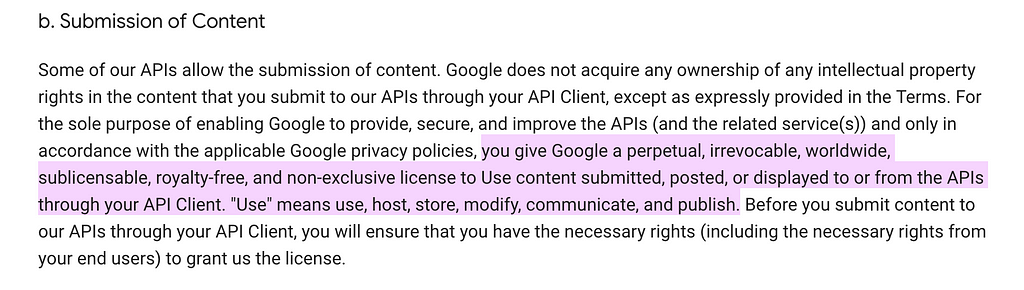

You can go with Dialogflow or Microsoft LUIS and be confident that managing your bot won’t become a huge hassle as it grows, thanks to a suite of admin tools with a good UI. You will also get them for free or at a very small cost if the number of users is small (perfect for PoCs!). The tradeoff is that all the infrastructure and user data will be in the cloud, which is not acceptable for some organizations. As with all other Google products, if you decide to use Dialogflow, you give Google an irrevocable right to use the data that goes through your Dialogflow agent, forever.

Relevant section from the Google APIs Terms of Service.

The other way is to use an open-source framework like Rasa Stack. Unless you are just playing around with the framework or your project is really small, you will soon see the need for a good admin interface. At that point you need to do a cost-benefit analysis. Does it make more sense to roll your own admin tools and a complementary UI? These have a lot more depth than you might initially think, which makes them nontrivial to develop. Or should you purchase them from Rasa or some other third-party provider?

Replacing existing process with a chatbot

If you are developing a chatbot with the plan to replace some existing process, it will be an uphill battle.

As in the other parts of this article, I will take an IT help desk chatbot as an example. Assume that the current process for getting IT help in the organization is to email the help desk; someone then reads the email and creates a support ticket manually. Since we are not considering automated email systems in this article, the chatbot project obviously requires us to select some communication channel for the chatbot. Let’s say the organization uses Google Hangouts for chats, so it makes sense to select it as the main channel for the chatbot. We then tell users to add the bot to their contact list and message it with their IT support questions instead of using email. It’s easy to underestimate how difficult this change in habits is for a lot of users. It is difficult for several reasons:

- Switching to a different channel to contact support is hard to get used to; after years of emailing the help desk about IT problems, it just feels unnatural to message a bot on Google Hangouts about them. The problem is even worse if the channel selected for the chatbot is not used for anything else within the organization. For example, the organization uses Microsoft Communicator for chats and email for IT support, but the chatbot is developed for Hangouts.

Managing multiple chat apps is inconvenient. Don’t make this worse by putting a chatbot in yet another app.

- Many people will completely miss the fact that IT support is now done via a chatbot. Not everyone reads announcements on the IT support webpage; most people contact support only when they need help, and they do it through their customary communication channel.

- Most chatbots perform rather poorly right after their initial release to the intended audience. The reason is that before the release, the only people who tested the chatbot were its development team and some beta testers. It’s really easy to get entrenched in a particular way of interacting with the bot when you’ve been using it for so long during development, so it’s extremely hard to make sure the bot works well for all possible inputs within its scope. Every chatbot needs to interact with real users to get the training data that will help improve it. At the same time, the first users may be reluctant to use the bot again, since it will not perform well initially due to the lack of real training data. This is the “chicken-and-egg” dilemma of chatbot development.

The bottom line is: a team developing a chatbot that will eventually replace an existing process should try its best to integrate the chatbot with the chat platform that is already used the most within the organization. Getting used to describing your problems to a programmatically extensible interactive natural language FAQ system in a constrained domain (a.k.a. a chatbot) instead of a human being already requires a large mental switch. Don’t make it worse by changing the platform that carries that communication, unless it’s absolutely necessary.

Also, do controlled releases to ever-larger groups of users. This lets the bot learn from real data for as long as possible while its early unreliability affects only a limited group of users. In the IT help desk chatbot context, this would mean making it available to one department within the organization at a time (after a substantial amount of beta testing, of course).
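A controlled release can be as simple as a gate in front of the bot that checks the user's department against the groups admitted so far. Here is a minimal sketch. The `User` record, the department names and the rollout order are hypothetical, and users outside the current stage keep using the existing email process.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical rollout order: one department per stage, after beta testing.
ROLLOUT_STAGES: List[str] = ["IT", "Finance", "Marketing", "Sales"]


@dataclass
class User:
    name: str
    department: str


def bot_enabled_for(user: User, current_stage: int) -> bool:
    """True if the user's department has been admitted by the given stage (0-based)."""
    admitted = ROLLOUT_STAGES[: current_stage + 1]
    return user.department in admitted


def route(user: User, current_stage: int) -> str:
    if bot_enabled_for(user, current_stage):
        return "chatbot"
    return "email the help desk"  # the existing process stays in place for everyone else
```

Each increment of `current_stage` admits one more department. Each stage therefore adds real training data, while the bot's early mistakes stay confined to a small group.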

Conclusion

Chatbots offer a novel way of creating an ever-expanding interactive FAQ system. They also enable users to use a familiar environment (a popular chat application) to interact with services like IT help desk, food delivery, appointment booking assistant or hotel concierge; all without a real person being actively engaged on the other side of the conversation. This results in significant time savings for a business, since workers that were busy handling basic customer interactions can now concentrate on less trivial conversations (which are definitely less numerous) or switch their attention to other work.

Many people are very accustomed to a chat UI.

Chatbots also let users save their own time. The opportunity cost of contacting a chatbot is minimal. In the worst case, the chatbot won’t know the answer to a user’s inquiry and will hand off the conversation to a human agent. The user then loses only a few minutes compared to contacting a human agent right away. In the best case, the bot will be able to help with the user’s inquiry and act on it immediately. The user saves time by not having to wait in a queue (or for an email reply) and receives a response in a matter of milliseconds.

Now, obviously I wouldn’t have written this article if it were easy to reap the benefits of a chatbot without doing much work. Developing a good chatbot is a lot of work. It requires everyone undertaking the project to challenge some of the misconceptions people commonly have about chatbot technology. It also poses some development challenges that are unique to ML-enabled applications (I imagine that for many, a chatbot would be their first experience developing an ML application). Finally, developing a chatbot requires a great deal of planning. This planning covers everything from the architecture of the chatbot and its auxiliary tools, to its integration with existing processes, to strategies for getting users accustomed to the chatbot faster. If you hope to figure this out as you go, you will end up rediscovering many problems that other chatbot developers have already encountered. You might then waste lots of time solving them when a solution was readily available. Chatbots are relatively new, but best practices and essential tools for working with this technology already exist.

I hope that this article helps raise awareness of common chatbot development challenges among those who are just starting out on this path. It is far from providing silver bullets for the challenges mentioned, and I look forward to other people involved with chatbots sharing their opinions on them.

You can find other articles by me either in my Medium profile or on my website (the website has slightly more content). I write about pretty much everything that is listed in my Medium bio: ML, chatbots, cryptoassets, InfoSec and Python.

Annotations

* I am referring to this optional feature in Dialogflow. It was recently ported to Rasa Stack.

Chatbot Development Challenges - Part 2 was originally published in Hacker Noon on Medium.