
Recently, an article by the Wall Street Journal has been floating around online that discussed how models will run the world. I believe there is a lot of truth to that. Machine learning algorithms and models are becoming both ubiquitous and more trusted across industries. This, in turn, will lead to us as humans spending less time questioning the output and simply allowing the system to tell us the answer. We already rely on companies like Google, Facebook and Amazon to give us ideas for dates, remind us of friends' birthdays and tell us what the best products are. Some of us don't even think twice about the answers we receive from these companies.

As a data engineer who works in healthcare, I find this both exciting and terrifying. In the past year and a half, I have spent my time developing several products to help healthcare professionals make better decisions. These products specifically target healthcare quality, fraud and drug misuse.

As I was working on the various metrics and algorithms, I was constantly asking myself a few questions:

How will this influence the patient's treatment?

How will it influence the doctor’s decision?

Will this improve the long-term health of a population?

In my mind, most hospitals are run like businesses, but there is some hope that their goal isn't always the bottom line. My hope is that they are trying to serve their patients and communities first. If that is true, then the algorithms and models we build can't just be focused on the bottom line (as many are in other industries). Instead, we need to take a moment to consider: How will this impact the patient? How will it impact their overall health? Will this metric change a doctor's behavior in a way that might be negative?

For instance, the Washington Health Alliance, which does a great job of reporting on ways to improve healthcare from both a cost perspective and a care perspective, wrote a report focused on reducing healthcare costs by cutting wasteful procedures. That is a great idea!

In fact, I have worked on a similar project. That is when I started to think. I am sure many doctors will appropriately recalibrate their processes. However, what about the ones who over-adjust?

What happens when some doctors correct their behavior too much and cause more harm than good because they don't want to be flagged as wasteful?

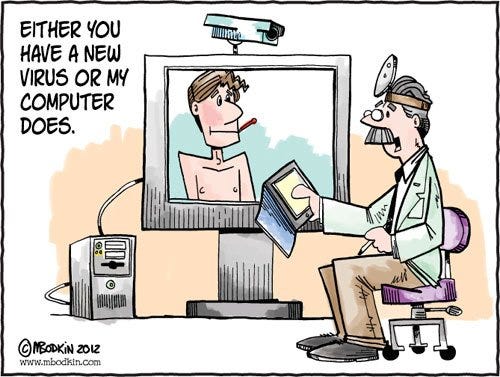

Could we cause doctors to miss obvious diagnoses because they are so concerned about costing the hospital and patients too much money? Or worse, could they come to rely too heavily on models to diagnose for them? I know I have over-adjusted my own behavior in the past after receiving criticism, so what is to stop a doctor from doing the same? There is a fine line between allowing a human to make a good decision and forcing them to rely on the thinking of a machine (like Google Maps: how many of us actually remember how to get anywhere?).

But are you thinking because you were told to think…or because you know what you are doing?


There is a risk of focusing more on the numbers and less on what patients are actually saying. Doctors focusing too much on the numbers and not on the patient is a personal concern of mine. If a model is wrong for a company selling dress shirts or toasters, that means missing out on a sale or missing a quarterly goal.

A model being wrong in healthcare could mean someone dies or isn't properly treated. So, as flashy as it can be to create systems that help us make better decisions, I often wonder whether we as humans have the discipline not to let them have the final say.

As healthcare professionals and data specialists, we have an obligation not just to help our company, but to consider the patient. We need to be not just data-driven but human-driven.

We might not be nurses and doctors, but the tools we create now and in the future will directly influence nurses' and doctors' decisions, and we need to take that into account. As data engineers, data scientists and machine learning engineers, we can build tools that amplify the abilities of the medical professionals we support, and we can make a huge impact.

I agree that models will increasingly run our world (they already do for trading, some medical diagnoses, purchasing at Amazon and more). But that means we need to think through all the operational scenarios and consider all the possible outcomes, both good and bad.


The Problem With Machine Learning In Healthcare was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
