
Linear regression looks like a simple solution to classification problems, but what happens when it fails? Consider the following problem:

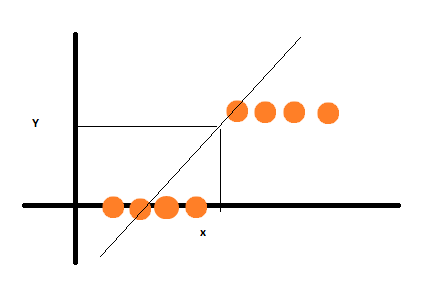

Suppose the labels are y ∈ {0, 1} and X holds the data samples. This is a binary classification problem. Let's try solving it with linear regression:

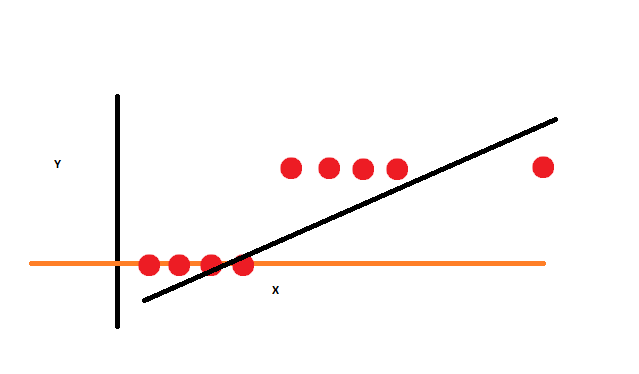

Linear regression has done the job: the line is a really good fit. But what happens if I add a new data point to the data set?
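The failure can be reproduced in a few lines of NumPy. This is a minimal sketch on a small made-up 1-D data set (not the article's data): fit a least-squares line, predict class 1 wherever the line is at least 0.5, then add a single positive example far to the right at x = 30 and refit. The helper names are illustrative.

```python
import numpy as np

def fit_line(x, y):
    # Least-squares fit y ≈ w * x + b; polyfit returns the highest degree first
    w, b = np.polyfit(x, y, 1)
    return w, b

def predict(w, b, x, threshold=0.5):
    # Treat the fitted line as a classifier: class 1 where it is >= threshold
    return (w * np.asarray(x) + b >= threshold).astype(int)

def decision_boundary(w, b, threshold=0.5):
    # The x value where the fitted line crosses the threshold
    return (threshold - b) / w

# Hypothetical training data: four negatives on the left, four positives on the right
x = np.array([1, 2, 3, 4, 6, 7, 8, 9], dtype=float)
y = np.array([0, 0, 0, 0, 1, 1, 1, 1], dtype=float)
w, b = fit_line(x, y)
boundary_before = decision_boundary(w, b)
preds_before = predict(w, b, x)

# Add one more positive example far to the right and refit
x_new = np.append(x, 30.0)
y_new = np.append(y, 1.0)
w2, b2 = fit_line(x_new, y_new)
boundary_after = decision_boundary(w2, b2)
preds_after = predict(w2, b2, x_new)

print(f"boundary before: {boundary_before:.2f}, after: {boundary_after:.2f}")
print("predictions after refit:", preds_after)
```

On the original eight points the 0.5 crossing is exactly at x = 5, so every point is classified correctly. After adding x = 30 the line flattens, the crossing moves to about x = 6.16, and the positive example at x = 6 is now predicted as class 0, even though the new point is far from the boundary and is itself on the correct side.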

With the new point added, the fitted line is a really bad solution: linear regression no longer works. What should we do next? Where there is a problem, there is a solution, and here the solution is logistic regression. Let's start with formal definitions of linear and logistic regression:

In linear regression, the outcome (dependent variable) is continuous. It can have any one of an infinite number of possible values. In logistic regression, the outcome (dependent variable) has only a limited number of possible values. Logistic regression is used when the response variable is categorical in nature.

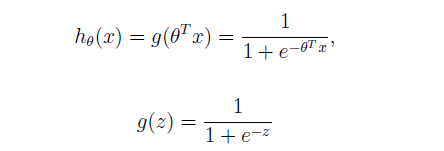

Intuitively, it also doesn't make sense for h(x) to take values larger than 1 or smaller than 0 when we know that y ∈ {0, 1}. To fix this, let's change the form of our hypothesis h(x). We will choose the hypothesis as follows:

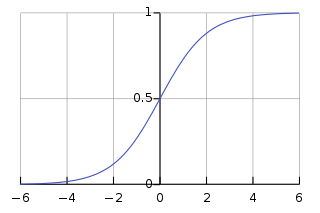

g(z) is called the sigmoid function or the logistic function. It looks like this:

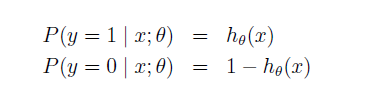
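A minimal NumPy sketch of g(z) and of the hypothesis h(x) = g(θᵀx). It assumes (a convention of this sketch, not stated in the article) that each input row already includes a leading 1 for the intercept term; the function names are illustrative.

```python
import numpy as np

def sigmoid(z):
    """Logistic (sigmoid) function g(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + np.exp(-z))

def hypothesis(theta, X):
    """h(x) = g(theta^T x), evaluated for every row x of X.

    X is assumed to already contain a leading column of ones for the intercept.
    """
    return sigmoid(X @ theta)

z = np.array([-10.0, -1.0, 0.0, 1.0, 10.0])
print(sigmoid(z))  # all values lie between 0 and 1, and g(0) = 0.5
```

Mathematically, g(z) always lies strictly between 0 and 1, which is exactly the range we want for h(x); in floating point it can round to exactly 0 or 1 when |z| is very large.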

Let’s assume that

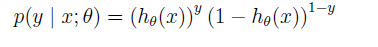

Combining the two equations above, we can write both cases compactly as

$$p(y \mid x; \theta) = \left(h_\theta(x)\right)^{y} \left(1 - h_\theta(x)\right)^{1 - y}$$

When y = 0, the factor h(x)^y becomes 1, so p(y | x; θ) = 1 - h(x); when y = 1, the factor (1 - h(x))^(1 - y) becomes 1, so p(y | x; θ) = h(x).

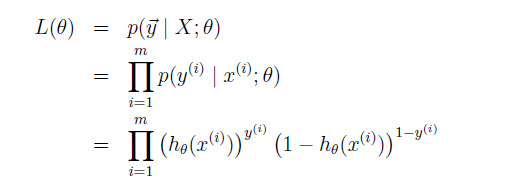
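To make the combined formula concrete, here is a tiny Python sketch that evaluates p(y | x; θ) = h(x)^y (1 - h(x))^(1 - y) for both labels. The function name `bernoulli_prob` and the value h = 0.8 are hypothetical.

```python
def bernoulli_prob(y, h):
    """p(y | x; theta) = h^y * (1 - h)^(1 - y), with y in {0, 1}.

    h is the model output h(x), i.e. the estimated P(y = 1 | x; theta).
    """
    return h ** y * (1.0 - h) ** (1 - y)

h = 0.8  # hypothetical value of h(x) for some input x
print(bernoulli_prob(1, h))  # the (1 - h) factor has exponent 0, so this equals h
print(bernoulli_prob(0, h))  # the h factor has exponent 0, so this equals 1 - h
```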

Assuming the m training examples were generated independently, the likelihood of the parameters is given by

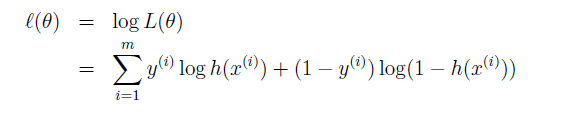

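In practice we maximize the log likelihood instead: because the logarithm is strictly increasing, it has the same maximizer, and it turns the product over the m training examples into a sum:

$$\ell(\theta) = \log L(\theta) = \sum_{i=1}^{m} \left[ y^{(i)} \log h_\theta\left(x^{(i)}\right) + \left(1 - y^{(i)}\right) \log\left(1 - h_\theta\left(x^{(i)}\right)\right) \right]$$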
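A direct NumPy translation of the log likelihood ℓ(θ), as a sketch: it assumes a design matrix X whose first column is all ones, and the tiny data set and the second θ value are made up for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def log_likelihood(theta, X, y):
    """l(theta) = sum_i [ y_i * log h(x_i) + (1 - y_i) * log(1 - h(x_i)) ].

    X: (m, n) design matrix whose first column is all ones (intercept).
    y: (m,) array of labels in {0, 1}.
    """
    h = sigmoid(X @ theta)
    return np.sum(y * np.log(h) + (1 - y) * np.log(1 - h))

# Tiny hypothetical data set: x = 0 has label 0, x = 1 and x = 2 have label 1
X_demo = np.array([[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]])
y_demo = np.array([0.0, 1.0, 1.0])

ll_zero = log_likelihood(np.zeros(2), X_demo, y_demo)             # h = 0.5 everywhere
ll_better = log_likelihood(np.array([-0.5, 2.0]), X_demo, y_demo)  # a better-fitting theta
print(ll_zero, ll_better)  # the better theta has the larger (less negative) value
```

When |θᵀx| is large, h can round to exactly 0 or 1 and `np.log` then returns `-inf`; clipping h slightly away from 0 and 1 is a common safeguard.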

To maximize the log likelihood, we can use gradient ascent (equivalently, gradient descent on the negative log likelihood). Recall that h(x) is given by the sigmoid function.

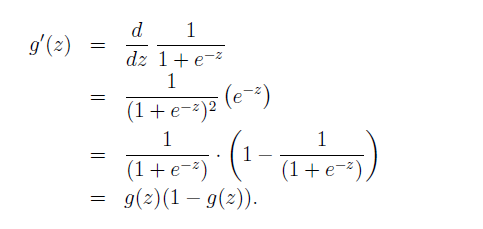

To simplify the derivation, note that the derivative of g(z) with respect to z can be written in terms of g itself:

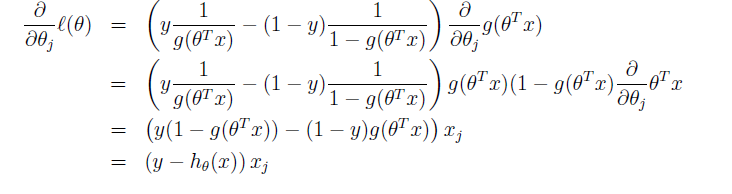
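The identity g'(z) = g(z)(1 - g(z)) can be checked numerically. This sketch (function names are illustrative) compares it with a central finite-difference approximation on a grid of z values:

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def sigmoid_grad(z):
    """Analytic derivative of the sigmoid: g'(z) = g(z) * (1 - g(z))."""
    g = sigmoid(z)
    return g * (1.0 - g)

z = np.linspace(-5.0, 5.0, 11)
eps = 1e-6
# Central finite difference: (g(z + eps) - g(z - eps)) / (2 * eps)
numeric = (sigmoid(z + eps) - sigmoid(z - eps)) / (2 * eps)
max_error = np.max(np.abs(numeric - sigmoid_grad(z)))
print(max_error)  # tiny: limited by floating-point round-off
```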

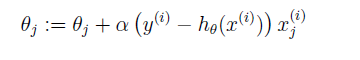
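Combining this identity with the chain rule gives the gradient of the log likelihood ℓ(θ). Written out for a single training example (x, y), and using ∂(θᵀx)/∂θ_j = x_j:

$$
\begin{aligned}
\frac{\partial}{\partial \theta_j}\ell(\theta)
&= \left( \frac{y}{g(\theta^T x)} - \frac{1-y}{1-g(\theta^T x)} \right) \frac{\partial}{\partial \theta_j} g(\theta^T x) \\
&= \left( \frac{y}{g(\theta^T x)} - \frac{1-y}{1-g(\theta^T x)} \right) g(\theta^T x)\left(1-g(\theta^T x)\right) x_j \\
&= \left( y\left(1-g(\theta^T x)\right) - (1-y)\, g(\theta^T x) \right) x_j \\
&= \left( y - h_\theta(x) \right) x_j
\end{aligned}
$$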

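Taking the partial derivative of the log likelihood with respect to θ_j gives (y - h(x)) x_j for a single training example (x, y). Plugging this into gradient ascent gives the stochastic update rule, where α is the learning rate:

$$\theta_j := \theta_j + \alpha \left( y^{(i)} - h_\theta\left(x^{(i)}\right) \right) x_j^{(i)}$$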

Compared with the least mean squares (LMS) update rule, the rule above looks identical, but it is not the same algorithm: h(x) is now a non-linear function of θᵀx^(i).
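Putting everything together, here is a minimal batch gradient ascent sketch in NumPy. It is an illustration under stated assumptions, not the article's code: the data set is made up (a 1-D problem with one far-away positive example at x = 30), the intercept is handled by prepending a column of ones, and instead of updating one example at a time it averages the update over all m examples, which only rescales the learning rate α. The values α = 0.03 and 50,000 iterations are hypothetical choices; the small α keeps the steps stable given feature values as large as 30.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_logistic_regression(X, y, alpha=0.03, iterations=50_000):
    """Batch gradient ascent on the (averaged) log likelihood.

    Each step applies theta_j := theta_j + alpha * mean_i[(y_i - h(x_i)) * x_ij]
    to every j at once. X must contain a leading column of ones (intercept).
    """
    m = X.shape[0]
    theta = np.zeros(X.shape[1])
    for _ in range(iterations):
        error = y - sigmoid(X @ theta)        # y_i - h(x_i) for every example
        theta += alpha * (X.T @ error) / m    # averaged gradient of l(theta)
    return theta

def predict(theta, X):
    """Class 1 when h(x) >= 0.5, i.e. when theta^T x >= 0."""
    return (sigmoid(X @ theta) >= 0.5).astype(int)

# Hypothetical 1-D data with one positive example far to the right at x = 30
x = np.array([1, 2, 3, 4, 6, 7, 8, 9, 30], dtype=float)
y = np.array([0, 0, 0, 0, 1, 1, 1, 1, 1], dtype=float)
X = np.column_stack([np.ones_like(x), x])   # prepend the intercept feature x_0 = 1

theta = train_logistic_regression(X, y)
print("theta:", theta)
print("predictions:", predict(theta, X))
```

Because these classes are linearly separable, θ keeps growing slowly instead of converging to a finite maximum, but after training every point is classified correctly, including x = 6: once the far-away point at x = 30 is confidently classified, its term (y - h(x)) is nearly zero, so it barely pulls on the boundary.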

If you find any inconsistency in my post, please feel free to point it out in the comments. Thanks for reading.

If you want to connect, feel free to reach out to me on LinkedIn.

Sameer Negi - Autonomous Vehicle Trainee - Infosys | LinkedIn

How to Classify when linear Regression Fails? was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
