
A step-by-step tutorial to analyse the sentiment of Amazon product reviews with the FastText API

Shameless plug: We are a data annotation platform that makes it super easy for you to build ML datasets. Just upload data, invite your team and build datasets super quick. Check us out!

This blog provides a detailed step-by-step tutorial on using FastText for text classification. As a worked example, we perform sentiment analysis of customer reviews on Amazon.com. We also show, hands-on, how to scrape the reviews of a particular product and run sentiment analysis on them; the results can help judge the quality of a product from its customer feedback before buying it.

What is FastText?

Text classification has become an essential component of the commercial world; spam filtering and sentiment analysis of tweets or of customer reviews on e-commerce websites are perhaps its most ubiquitous examples.

FastText is an open-source library developed by Facebook AI Research (FAIR) for efficient text classification and for learning word representations. According to its authors, FastText can train on more than a billion words in under ten minutes on a standard multi-core CPU, and a trained model can classify half a million sentences among more than 300,000 categories in under a minute.

Pre-Labelled Dataset for Training :

A labelled dataset of a few million Amazon reviews, obtained from Kaggle.com, was used to train the model after conversion to FastText format.

The data format for FastText is as follows :

__label__<X> __label__<Y> ... <Text>

where X and Y represent the class labels.

In the dataset we use, we have the review title prepended to the review, separated by a ‘:’ and a space.

A sample from the training data file is given below. The datasets for training and testing the models can be found on Kaggle.com.

__label__2 Great CD: My lovely Pat has one of the GREAT voices of her generation. I have listened to this CD for YEARS and I still LOVE IT. When I'm in a good mood it makes me feel better. A bad mood just evaporates like sugar in the rain. This CD just oozes LIFE. Vocals are jusat STUUNNING and lyrics just kill. One of life's hidden gems. This is a desert isle CD in my book. Why she never made it big is just beyond me. Everytime I play this, no matter black, white, young, old, male, female EVERYBODY says one thing "Who was that singing ?"

Here we have only two classes, 1 and 2: __label__1 signifies that the reviewer gave the product 1 or 2 stars, while __label__2 indicates a 4- or 5-star rating.
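The conversion of a single review into this format can be sketched in Python as follows. This is a minimal sketch, not the code used to build the Kaggle file: the function name `to_fasttext_line` is hypothetical, and skipping 3-star reviews (which fit neither class) is an assumption.

```python
from typing import Optional


def to_fasttext_line(stars: int, title: str, body: str) -> Optional[str]:
    """Build one FastText training line from a review (hypothetical helper).

    1-2 stars -> __label__1, 4-5 stars -> __label__2.
    Assumption: 3-star (or invalid) ratings fit neither class and are skipped.
    """
    if stars in (1, 2):
        label = "__label__1"
    elif stars in (4, 5):
        label = "__label__2"
    else:
        return None  # not used for training
    # Title and body are joined with ": ", as in the Kaggle training file.
    # Line breaks are flattened because FastText reads one example per line.
    text = f"{title}: {body}".replace("\n", " ")
    return f"{label} {text}"


if __name__ == "__main__":
    print(to_fasttext_line(5, "Great CD", "My lovely Pat has one of the GREAT voices"))
```

Returning `None` instead of raising lets a caller simply filter out unusable reviews while streaming through a large file.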

Training FastText for Text Classification :

Pre-process and Clean Data :

Execute the following command to generate a pre-processed, cleaned training file. It separates punctuation marks from the surrounding words with spaces and converts all text to lower case.

cat <path to training file> | sed -e "s/\([.\!?,'/()]\)/ \1 /g" | tr "[:upper:]" "[:lower:]" > <path to pre-processed output file>
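For readers who prefer Python, the sed/tr pipeline above can be approximated as below. This is a sketch with hypothetical function names; note that `str.lower()` also lower-cases non-ASCII letters, which `tr "[:upper:]" "[:lower:]"` may not do depending on your locale, so the two outputs can differ slightly on such text.

```python
import re

# Same punctuation set as the sed command: . ! ? , ' / ( )
_PUNCT = re.compile(r"([.!?,'/()])")


def preprocess_line(line: str) -> str:
    """Surround each punctuation mark with spaces, then lower-case the line."""
    return _PUNCT.sub(r" \1 ", line).lower()


def preprocess_file(src_path: str, dst_path: str) -> None:
    """Apply preprocess_line to every line of src_path and write the result to dst_path."""
    with open(src_path, encoding="utf-8") as src, open(dst_path, "w", encoding="utf-8") as dst:
        for line in src:
            dst.write(preprocess_line(line))  # the trailing newline is preserved


if __name__ == "__main__":
    print(preprocess_line("__label__2 Great CD: I LOVE IT!"))
```

Label prefixes such as `__label__2` pass through unchanged, because underscores and digits are not in the punctuation set.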

Setup FastText :

Let us start by downloading release v0.1.0, the version used in this tutorial (newer releases are available on GitHub):

$ wget https://github.com/facebookresearch/fastText/archive/v0.1.0.zip
$ unzip v0.1.0.zip

Move to the fastText directory and build it:

$ cd fastText-0.1.0
$ make

Running the binary without any arguments prints the high-level documentation, showing the different use cases supported by fastText:

$ ./fasttext
usage: fasttext <command> <args>

The commands supported by fasttext are:

  supervised              train a supervised classifier
  quantize                quantize a model to reduce the memory usage
  test                    evaluate a supervised classifier
  predict                 predict most likely labels
  predict-prob            predict most likely labels with probabilities
  skipgram                train a skipgram model
  cbow                    train a cbow model
  print-word-vectors      print word vectors given a trained model
  print-sentence-vectors  print sentence vectors given a trained model
  nn                      query for nearest neighbors
  analogies               query for analogies

In this tutorial, we mainly use the supervised, test and predict subcommands, which correspond to training (and using) a text classifier.

Training the model :

The following command is used to train a model for text classification :

./fasttext supervised -input <path to pre-processed training file> -output <path to save model> -label __label__

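The -input command-line option refers to the training file, while the -output option gives the path, without extension, where the model is to be saved. After training is complete, fastText adds the extension itself: the trained classifier is saved as <path to save model>.bin (for example model.bin when -output is model), together with a .vec file containing the learned word vectors.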

Optional parameters for improving models:

Increasing number of epochs for training :

By default, the model sees each training example 5 times (5 epochs). To train for more epochs, which can improve the model, we specify the -epoch argument.

Example :

./fasttext supervised -input <path to pre-processed training file> -output <path to save model> -label __label__ -epoch 50

Specify learning rate:

The learning rate controls the learning speed of the model: it corresponds to how much the model changes after processing each example. A learning rate of 0 means that the model does not change at all and thus does not learn anything. Good values of the learning rate are in the range 0.1 to 1.0.
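To make this concrete, here is a toy stochastic gradient descent update in Python. It is an illustration, not fastText's source code, and the function names are hypothetical; the linear decay in `scheduled_lr` mirrors the fact that fastText lowers the learning rate linearly from lr towards 0 over the course of training.

```python
from typing import List


def scheduled_lr(base_lr: float, progress: float) -> float:
    """Linearly decayed learning rate; progress runs from 0.0 (start) to 1.0 (end)."""
    return base_lr * (1.0 - progress)


def sgd_step(weights: List[float], grads: List[float], lr: float) -> List[float]:
    """Move each weight against its gradient by a step proportional to lr."""
    return [w - lr * g for w, g in zip(weights, grads)]


if __name__ == "__main__":
    w, g = [1.0, 2.0], [0.5, -1.0]
    print(sgd_step(w, g, 0.0))                      # lr = 0: weights unchanged, nothing learned
    print(sgd_step(w, g, scheduled_lr(0.5, 0.5)))   # halfway through training with -lr 0.5
```

A larger lr makes each update bigger, which speeds up learning but can overshoot; that trade-off is why values are usually kept within the range given above.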

The default value of lr is 0.1. Here’s how we specify this parameter.

./fasttext supervised -input <path to pre-processed training file> -output <path to save model> -label __label__ -lr 0.5

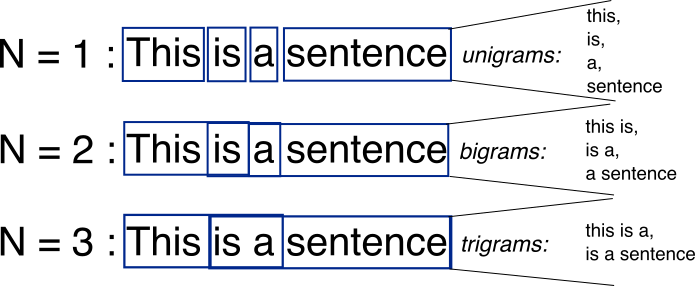

Using n-grams as features :

Word n-grams are useful for problems that depend on word order, such as sentiment analysis. With this option, fastText also uses concatenations of up to n consecutive tokens as features during training, so a phrase like "not good" is seen as a unit rather than as two unrelated words.
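The idea of word n-gram features can be sketched in Python as below. This is a simplified illustration with a hypothetical function name: fastText itself hashes the n-grams into a fixed number of buckets (the -bucket option) rather than storing them as strings.

```python
from typing import List


def word_ngrams(tokens: List[str], max_n: int) -> List[str]:
    """Return all n-grams of consecutive tokens for n = 1..max_n.

    With max_n = 1 this is just the bag of words; larger values add
    features such as "not good" that capture local word order.
    """
    features: List[str] = []
    for n in range(1, max_n + 1):
        for i in range(len(tokens) - n + 1):
            features.append(" ".join(tokens[i:i + n]))
    return features


if __name__ == "__main__":
    print(word_ngrams("not good at all".split(), 2))
```

With max_n = 2, the review "not good at all" yields the four single words plus "not good", "good at" and "at all".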

We specify the -wordNgrams parameter to enable word n-grams (typically with a value between 2 and 5):

./fasttext supervised -input <path to pre-processed training file> -output <path to save model> -label __label__ -wordNgrams 3
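The options above can also be combined in a single training run. The Python sketch below assembles the `./fasttext supervised` command line and can run it with `subprocess`; the function names, default values and file names are hypothetical, while the flags are the ones used in the commands above.

```python
import subprocess
from typing import List


def build_train_cmd(
    input_path: str,
    output_prefix: str,
    epoch: int = 5,
    lr: float = 0.1,
    word_ngrams: int = 1,
    binary: str = "./fasttext",
) -> List[str]:
    """Assemble the argument list for `fasttext supervised`."""
    return [
        binary, "supervised",
        "-input", input_path,
        "-output", output_prefix,  # fastText writes <prefix>.bin
        "-label", "__label__",
        "-epoch", str(epoch),
        "-lr", str(lr),
        "-wordNgrams", str(word_ngrams),
    ]


def train(cmd: List[str]) -> None:
    """Run the command; raises CalledProcessError if fastText exits with an error."""
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    cmd = build_train_cmd("train.preprocessed.txt", "model_amazon",
                          epoch=50, lr=0.5, word_ngrams=3)
    print(" ".join(cmd))  # inspect the command before calling train(cmd)
```

Passing a list (rather than one shell string) to `subprocess.run` avoids quoting problems with file paths that contain spaces.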

Test and Evaluate the Model :

The following command tests the model on a pre-annotated test dataset: it compares the original label of each review with the predicted label and reports evaluation scores in the form of precision and recall.

Precision is the fraction of the labels predicted by fastText that are correct. Recall is the fraction of the true labels that were successfully predicted.

./fasttext test <path to model> <path to test file> k

where k is the number of top labels the model predicts for each review.
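The two metrics can be computed as in the Python sketch below, given the true labels and the top-k predictions for each review. It illustrates the definitions above with a hypothetical function name; it is not fastText's own code. With exactly one true label per review and k = 1, every review contributes one predicted label and one true label, so precision and recall are equal, which is why P@1 and R@1 coincide in the results below.

```python
from typing import Sequence, Set, Tuple


def precision_recall_at_k(
    gold: Sequence[Set[str]], predicted: Sequence[Sequence[str]], k: int
) -> Tuple[float, float]:
    """Compute precision@k and recall@k over a dataset.

    gold[i] is the set of true labels of review i; predicted[i] lists the
    labels predicted for it, best first. Only the top k are considered.
    """
    correct = 0
    n_predicted = 0
    n_gold = 0
    for labels, preds in zip(gold, predicted):
        top_k = list(preds)[:k]
        correct += sum(1 for p in top_k if p in labels)
        n_predicted += len(top_k)
        n_gold += len(labels)
    precision = correct / n_predicted if n_predicted else 0.0
    recall = correct / n_gold if n_gold else 0.0
    return precision, recall
```

With k = 2 on this two-class data, each review gets both labels, so recall becomes 1.0 while precision drops to 0.5: larger k trades precision for recall.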

Evaluating our trained model on a test set of 400,000 reviews gives the following results. Both precision and recall are 91.3%, and the model trains very quickly.

N 400000
P@1 0.913
R@1 0.913
Number of examples: 400000

Analyse Sentiments of Real-Time Customer Reviews of Products on Amazon.com :

Scrape Amazon Customer Reviews :


We use an existing Python library, amazon-review-scraper, to scrape reviews from product pages.

To set up the module, type the following in your command prompt/terminal:

pip install amazon-review-scraper

Here’s sample code to scrape the reviews of a particular product, given the URL of its review page:

NOTE : While entering the URL of the customer review page of a particular product, ensure that you append &pageNumber=1 if it does not exist already, for the scraper to function properly.

The above code scrapes the reviews from the given URL and creates an output CSV file in the following format:

From this CSV file, we extract the Title and the Body of each review, join them with a ‘:’ and a space as in the training file, and store the results in a separate text file for sentiment prediction.
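This conversion step can be sketched with Python's standard `csv` module. The sketch assumes the scraper's CSV has a header row with columns named `Title` and `Body` (check the actual header of your file), and the function names are hypothetical. Since the model was trained on pre-processed text, it is sensible to run the resulting file through the same sed/tr pre-processing command before prediction.

```python
import csv
from typing import Iterable, List


def reviews_to_lines(rows: Iterable[str]) -> List[str]:
    """Turn scraped reviews into 'Title: Body' lines, one review per line.

    rows is any iterable of CSV text lines (e.g. a file opened with newline="").
    Assumes a header row containing 'Title' and 'Body' columns.
    """
    lines: List[str] = []
    for row in csv.DictReader(rows):
        title = (row.get("Title") or "").strip()
        body = (row.get("Body") or "").strip()
        # FastText reads one example per line, so flatten embedded line breaks.
        text = f"{title}: {body}".replace("\r", " ").replace("\n", " ")
        lines.append(text)
    return lines


def write_lines(lines: Iterable[str], path: str) -> None:
    """Write one review per line to a UTF-8 text file."""
    with open(path, "w", encoding="utf-8") as out:
        for line in lines:
            out.write(line + "\n")
```

For example, with a hypothetical `reviews.csv`, open it with `open("reviews.csv", newline="", encoding="utf-8")` and pass the file object to `reviews_to_lines`, then hand the result to `write_lines` together with the output path. Using `csv.DictReader` rather than splitting on commas keeps review text that itself contains commas intact.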

Prediction of Sentiments of Scraped Data :

./fasttext predict <path to model> <path to test file> k > <path to prediction file>

where k signifies that the model will predict the top k labels for each review.

The labels predicted for the above reviews are as follows :

__label__2
__label__1
__label__2
__label__2
__label__2
__label__2
__label__2
__label__2
__label__1
__label__2
__label__2

These predictions are quite accurate, as verified manually. The prediction file can then be used for further detailed analysis and visualization.
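As a simple example of such analysis, the Python sketch below counts the predicted labels and reports the share of positive reviews. It assumes the prediction file has one line per review with the most likely label first (which also holds when k is greater than 1 and several labels share a line); the function name and the "positive share" summary are hypothetical choices, not part of fastText.

```python
from collections import Counter
from typing import Iterable, Tuple


def summarize_predictions(lines: Iterable[str]) -> Tuple[Counter, float]:
    """Count top-1 labels and return the fraction predicted as __label__2 (positive)."""
    counts: Counter = Counter()
    for line in lines:
        tokens = line.split()
        if tokens:  # skip blank lines
            counts[tokens[0]] += 1  # first token = most likely label
    total = sum(counts.values())
    positive_share = counts["__label__2"] / total if total else 0.0
    return counts, positive_share
```

Applied to the eleven labels listed above, this gives 9 positive and 2 negative predictions, i.e. a positive share of about 82%. An open prediction file can be passed directly, since iterating over it yields one line at a time.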

Thus, in this blog, we learnt how to use the FastText API for text classification, scrape Amazon customer reviews for a given product, and predict their sentiments with the trained model for analysis.

If you have any queries or suggestions, I would love to hear about it. Please write to me at abhishek.narayanan@dataturks.com.


Text Classification Simplified with Facebook’s FastText was originally published in Hacker Noon on Medium.
