
AI looking for optimal landing page version

Why, what and how?

I’d like to share some of my experiments with neural networks fitted to do multiparametric optimization of landing pages. I’ve been thinking about this idea for half a year already and find it extremely interesting from an automation point of view. A/B tests take a huge chunk of time from marketing specialists, and they require huge amounts of traffic to perform well. This becomes quite problematic when a small team is managing lots of landing pages. For some projects, it’s also about the “aging” of landing pages: they can become obsolete when promotions or offers end.

There are many ways to approach this problem. Old-school MVT (multivariate testing), as done in Google Optimize, will evenly split traffic between all possible versions of your landing page. This is fine if you have 3 variations to test. But imagine you want to test 3 titles, 3 subtitles, 2 button colors and 2 header images, plus a few versions of the general layout. That is already over a hundred unique combinations, and with a few more features it easily goes to 10k+. Say you have an estimated 50k of traffic and the goal is to find the version that is optimal, or close to optimal, as fast as possible. You sacrifice pure statistical precision and try to reach as many conversions as you can, fast.
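To make the arithmetic concrete, here is a minimal sketch of how the number of page versions grows. The layout count of 3 and the larger seven-feature setup are assumptions chosen for illustration, not figures from a real test.

```python
from math import prod

def count_combinations(variations_per_feature):
    """Number of unique pages is the product of the variation counts."""
    return prod(variations_per_feature)

# 3 titles, 3 subtitles, 2 button colors, 2 header images (from the text);
# the trailing 3 is an assumed stand-in for "a few" layout versions.
small_test = [3, 3, 2, 2, 3]
# Hypothetical larger test: 7 features with 4 variations each.
large_test = [4] * 7

for features in (small_test, large_test):
    total = count_combinations(features)
    # Visits each version would get if 50k visits were split evenly.
    print(total, round(50_000 / total, 1))
```

With an even split, the larger setup leaves only a handful of visits per version, which is far too few to measure a conversion rate.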

I am aware of a few potential approaches. The first is to treat the different features as independent entities and imagine you are running a number of separate A/B tests. This way you will reach some results quite fast, but if there are any cross-feature correlations you will miss them and your solution will be non-optimal. The second is to use genetic algorithms. Some companies are doing that, Sentient Ascend for example, at least judging from their promo materials. The third is to use multi-armed bandit theory. One of the ways to solve the multi-armed bandit problem is to use reinforcement learning and neural networks.

Let’s start with a brief overview.

Genetic algorithms

Genetic algorithms mimic the natural selection process. Think of different webpage variations as traits of a living being: some will help it survive, some are neutral and some will have a negative impact. The basic workflow of a genetic algorithm looks like this:

- Generate a number (let’s say 100) of different offspring with random sets of traits (100 landing pages with various versions of the features)

- Let the traffic flow into them and measure conversion rate (survival in terms of evolution).

- Calculate the so-called fitness score: how well each page performed

- Take the top 20 best-performing pages, extract their features and mix them in a new way. Then add some of the features of the bottom 20 worst-performing pages to the mix. They may carry rare “mutations” hidden by other traits, which is good for diversity. Generate 100 new pages based on those features.

- Repeat n times -> profit.
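The workflow above can be sketched in a few lines. This is a minimal simulation, not how any commercial product works: the feature counts, the hidden conversion rates, the mutation rate and the number of bottom pages mixed in are all assumptions made up for the example.

```python
import random

SIZE = [3, 3, 2, 2]  # assumed variations per feature

def random_page(rng):
    return tuple(rng.randrange(n) for n in SIZE)

def crossover(a, b, rng):
    # The child takes each feature from one of its two parents.
    return tuple(rng.choice(pair) for pair in zip(a, b))

def mutate(page, rng, rate=0.05):
    # Occasionally replace a feature with a random variation.
    return tuple(rng.randrange(n) if rng.random() < rate else v
                 for v, n in zip(page, SIZE))

def next_generation(pages, fitness, rng, top=20, bottom=20, from_bottom=5):
    ranked = [p for _, p in sorted(zip(fitness, pages),
                                   key=lambda t: t[0], reverse=True)]
    # Parents: the best pages plus a few of the worst ones for diversity.
    parents = ranked[:top] + rng.sample(ranked[-bottom:], from_bottom)
    return [mutate(crossover(rng.choice(parents), rng.choice(parents), rng), rng)
            for _ in range(len(pages))]

def simulate(generations=5, population=100, visits_per_page=200, seed=0):
    rng = random.Random(seed)
    # Hypothetical hidden conversion rates, one per feature variation.
    hidden = [[rng.uniform(0.01, 0.05) for _ in range(n)] for n in SIZE]

    def true_rate(page):
        return sum(hidden[i][v] for i, v in enumerate(page)) / len(SIZE)

    pages = [random_page(rng) for _ in range(population)]
    for _ in range(generations):
        # Fitness score: measured conversion rate of each page.
        fitness = [sum(rng.random() < true_rate(p)
                       for _ in range(visits_per_page)) / visits_per_page
                   for p in pages]
        pages = next_generation(pages, fitness, rng)
    return pages
```

Note how much traffic even this toy run consumes: 100 pages times 200 visits per generation is 20k visits for a single round of selection.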

While this system looks good, there are certain things I don’t like about it.

- It still requires lots of traffic

- It’s slow to adapt when the user profile/characteristics change during the test (new acquisition channels appear)

- It doesn’t take the user profile into account (time of day, browser, device, etc.)

- It is not using neural networks ;-)

So, I decided to build something NN-powered, 2018 style. I made a deal with some shady guys who do CPA marketing: I’ll build a system and they will give me traffic to test it. Win-win. CPA is a perfect use case for this kind of system.

Research stage

What I needed to do was solve a so-called “multi-armed bandit” problem using neural networks. There are a few great intros to reinforcement learning that I studied; you can find links at the bottom. I’ve boiled this project down to a few stages, the same way the multi-armed bandit problem evolves.

- Stage 0. There is a casino bandit with a few arms: a multi-armed bandit, where each arm (a different feature of the website) has a slightly different probability of payout (CTR). You need to build a system that will find the best-performing arm in the lowest number of attempts, and then stick to always playing this arm (showing this version of the website). Keep in mind that, due to the probabilistic nature of this problem, there is always a chance that your solution is wrong. But in the real world, it is good enough as long as it makes more money.

- Stage 1. Here comes the user. There are many multi-armed bandits standing in the casino. Different users will play different bandits, and the system has to find which user should play which bandit. In the landing page analogy, this means that different users react differently to your landing page, so you have to show every user a different page, based on user characteristics.

- Stage 2. Here comes the timing. There are many rooms with many bandits with many arms, and you have to go through each room and play one of the bandits in every room. Your payout probability depends on your performance in each room. This is the full version of the problem, when you have a multi-step sales process: pre-landing, landing and email marketing, for example.

I’ll share my insights on implementing the Stage 0 problem.
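For readers new to the Stage 0 setting, here is a minimal sketch of it using epsilon-greedy, a classic non-neural strategy. It is a swapped-in baseline for illustration, not the neural network described in this post, and the payout probabilities and epsilon value are assumptions.

```python
import random

def epsilon_greedy(payout_probs, pulls=5000, epsilon=0.1, seed=0):
    """Play a simulated bandit; return pull counts and estimated payout per arm."""
    rng = random.Random(seed)
    n_arms = len(payout_probs)
    counts = [0] * n_arms    # how many times each arm was played
    values = [0.0] * n_arms  # running mean reward of each arm
    for _ in range(pulls):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)                        # explore
        else:
            arm = max(range(n_arms), key=lambda a: values[a])  # exploit
        reward = 1.0 if rng.random() < payout_probs[arm] else 0.0
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]    # incremental mean
    return counts, values

# Hypothetical hidden payout probabilities of three arms.
counts, values = epsilon_greedy([0.05, 0.1, 0.5])
print(counts, [round(v, 3) for v in values])
```

Most pulls should end up on the arm with the highest estimated payout, while the fixed share of random pulls keeps testing the others.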

Why only Stage 0?

My gut feeling was excessively optimistic about the amount of traffic required to solve each stage. My CPA guys agreed to 10–20k of traffic on a landing page to test my ideas, and I thought it would be enough to test Stage 0 + Stage 1 optimization, but math was against me. It would require an order of magnitude more traffic for Stage 1, IMHO. It could be less after the system is tuned and studied.

Results of the latest run

Here I’ll describe results from the latest run of the system in production and some insights gained. Below you will find some code and implementation details for tech-savvy people.

So, we launched a 50/50 split test: 50% of traffic went to a static landing page and 50% went to a dynamic landing page powered by the neural engine. After the first 3–4 days I noticed that my neural solution had settled on a variation (a local minimum) that it was sure about and wasn’t going to change (judging by the loss and weights). That happened after about 3–4k of traffic.

I became curious to see whether I would come up with the same variation based on pure statistics of the data. I calculated the mean CTR for each variation, chose the top performers and compared them to the variants suggested by ML. To my huge surprise, those were 80% different! The NN was suggesting a totally different version. Interesting…

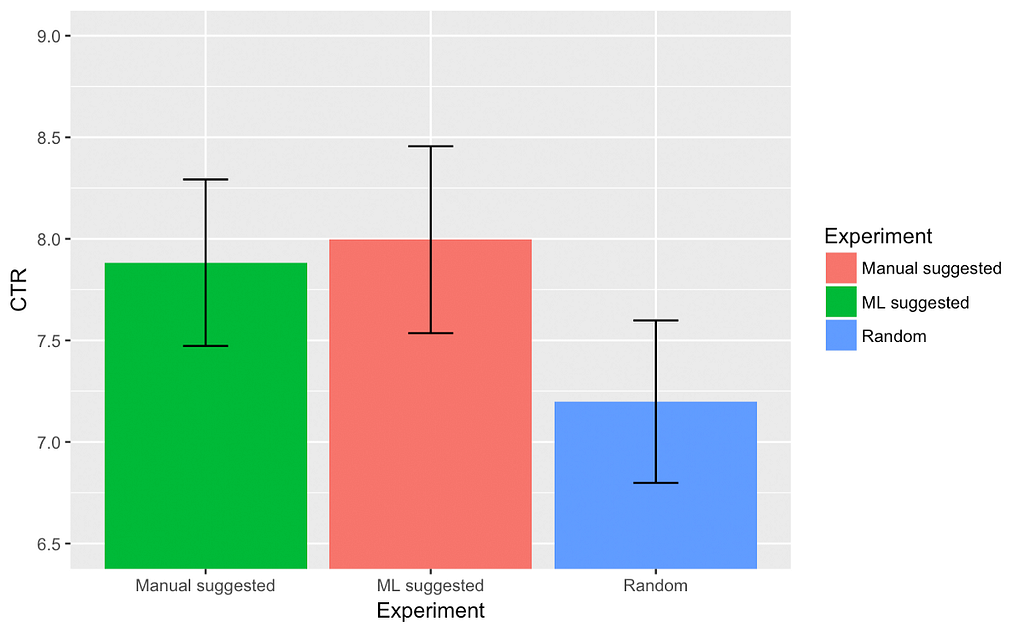

Ok then. The neural net should be smarter than simple linear algebra and me, I thought. To further verify my findings I stopped training the neural network and did a head-to-head comparison of 3 versions:

- 100% random variations

- A static version proposed using simple comparisons of feature performance (take the best performer for each feature and combine them into a landing page)

- A static version suggested by the neural network

As you can see, I waited until the difference between random and non-random choices became statistically significant. So, here are the main takeaways:

- The neural network based system performed the same as simple P-based statistics

- The variations suggested by statistics and by the NN system were 80% different. This can mean that I have not collected enough data to separate the performance of the two.

- There is a chance that top-performing landing pages are more than the sum of top-performing features, and that second-order correlations do exist.

- We need more data.
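The “simple statistics” baseline mentioned above (take the best performer for each feature and combine them) can be sketched as follows. The visit log format, a tuple of variation indices plus a conversion flag, is an assumption made for this example and not the schema of the production database.

```python
from collections import defaultdict

def best_per_feature(visits):
    """visits: iterable of (page, converted) pairs, where page is a tuple with
    one variation index per feature and converted is True or False."""
    shown = defaultdict(int)  # (feature, variation) -> number of visits
    wins = defaultdict(int)   # (feature, variation) -> number of conversions
    for page, converted in visits:
        for feature, variation in enumerate(page):
            shown[(feature, variation)] += 1
            wins[(feature, variation)] += int(converted)
    n_features = 1 + max(f for f, _ in shown)
    best = []
    for f in range(n_features):
        candidates = [v for (ff, v) in shown if ff == f]
        # Pick the variation with the highest mean conversion rate.
        best.append(max(candidates, key=lambda v: wins[(f, v)] / shown[(f, v)]))
    return tuple(best)

# Tiny hypothetical log: two features with two variations each.
log = [((0, 0), True), ((0, 1), True), ((1, 0), False),
       ((1, 1), False), ((0, 1), True), ((1, 0), False)]
print(best_per_feature(log))  # (0, 1)
```

This baseline scores every feature in isolation, so by construction it cannot see the second-order correlations mentioned in the takeaways.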

Building the system

After some digging, I decided that my problem is a typical “AI” reinforcement learning problem. Again, there is a great crash course on it, which I used heavily; I’ll link it at the bottom. I wanted my code to run in production, and TensorFlow is the framework to choose for that. At my day job I prefer MXNet, take a look at it as well. It’s almost production ready.

Neural network

I used a simple two-layer fully connected network that takes a static variable as input and outputs probabilities for each variation of each landing page feature. It’s quite easy to adapt to a Stage 1 system: the input stops being static and becomes a variable that depends on your user features (time, geolocation, language, etc.).

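    # TF 1.x fragment from inside the network class; assumes tensorflow is
    # imported as tf and slim comes from tf.contrib. a_size (the width of the
    # first layer) is defined elsewhere in the script.
    size = [3, 2, 3, 3]  # number of variations of each tested feature
    self.DummyState = tf.Variable([[0.1]])  # dummy state fed to the network
    output = slim.fully_connected(self.DummyState, a_size,
                                  biases_initializer=None,
                                  weights_initializer=tf.ones_initializer())
    # One sigmoid head per feature, one output per variation of that feature
    self.res = [tf.squeeze(slim.fully_connected(output, x,
                                                biases_initializer=None,
                                                activation_fn=tf.nn.sigmoid,
                                                weights_initializer=tf.ones_initializer(),
                                                scope='results' + str(i)))
                for i, x in enumerate(size)]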

This is the network I used. The size variable lists the features that were tested, and each entry is the number of variations of that feature.
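For readers without a TF 1.x setup, the forward pass of such a network can be imitated in NumPy. This is only a sketch of the computation, with no training step; the hidden width of 8 is an assumed value for a_size, and the ReLU in the first layer reflects the default activation of slim.fully_connected.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def forward(hidden_w, head_ws, state=0.1):
    """hidden_w has shape (1, a_size); head_ws holds one
    (a_size, n_variations) weight matrix per feature."""
    x = np.array([[state]])
    hidden = np.maximum(x @ hidden_w, 0.0)  # first layer with ReLU, no bias
    # One vector of scores per feature, one score per variation.
    return [sigmoid(hidden @ w).squeeze(axis=0) for w in head_ws]

def pick_page(scores):
    # Exploit: take the highest-scoring variation of every feature.
    return tuple(int(np.argmax(s)) for s in scores)

size = [3, 2, 3, 3]
a_size = 8  # assumed hidden width
hidden_w = np.ones((1, a_size))                 # mirrors tf.ones_initializer()
head_ws = [np.ones((a_size, n)) for n in size]
scores = forward(hidden_w, head_ws)
print([s.shape for s in scores], pick_page(scores))
```

With all weights initialized to ones, every variation starts with the same score, so the network has no preference until training moves the weights.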

Production running

To run the system in production I used a Sanic backend, Postgres as SQL storage and TensorFlow as the inference engine. Training of the system was performed live on the server.

For each website visit, we asked the backend for inference results, i.e. the variation of the page to show this user; this added about 30 ms of delay. After a conversion, it was matched with the version of the page the user had seen.

I used a 15-minute delay after the visit (with a typical conversion window of 5 minutes) to decide whether the visit was successful or not; then the visit was used to run one step of neural network training. During the testing period, I decayed the exploration vs. exploitation factor. Initially the landing page was 100% randomly generated, and each day the proportion of random choices vs. neural network advice was decayed until it reached 0 after 10 days.
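The exploration schedule described above could look roughly like this. The linear shape of the decay is an assumption (the text only fixes the start at 100% random and the end at 0 after 10 days), and the function names are hypothetical.

```python
import random

def exploration_rate(day, decay_days=10):
    """Share of visits that get a random page: 1.0 on day 0, 0.0 from day 10 on."""
    return max(0.0, 1.0 - day / decay_days)

def choose_page(day, network_page, size, rng=random):
    """Return a random page with probability exploration_rate(day),
    otherwise the page currently suggested by the network."""
    if rng.random() < exploration_rate(day):
        return tuple(rng.randrange(n) for n in size)  # explore
    return network_page                               # exploit

size = [3, 2, 3, 3]
print(exploration_rate(0), exploration_rate(5), exploration_rate(10))
print(choose_page(10, (0, 1, 2, 0), size))  # after day 10 the network always decides
```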

Some tricks

To build the system I needed some kind of virtual testing environment, so I built a simple script that emulated visits to the website and conversions. The cornerstone of this process was generating “hidden” conversion probabilities for each landing page variation. Initially, I postulated that each unique combination of features would have its own CTR and that all features are fully dependent on one another. That was a failed approach: the network often failed to find the optimal solution with a meaningful amount of traffic. As I came to understand, that’s not a realistic scenario anyway; there’s not that much correlation between the title text and the color of a button 3 scrolls below.

self.bandits = [np.random.random(size)]  # one hidden CTR per unique combination of features

Then I simplified the environment and assumed that the features are all linearly independent. That is an oversimplification, but it was enough to tune the hyperparameters and make sure that the system finds the right solution.

self.bandits = [np.random.random(x)/50+0.95 for x in size]
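To show the difference between the two hidden environments, here is a minimal self-contained sketch. How the per-feature values are combined into one conversion probability is not stated above, so multiplying them and scaling by a base rate of 0.05 is purely an assumption, as are the function names.

```python
import numpy as np

size = [3, 2, 3, 3]
rng = np.random.default_rng(0)

# Fully dependent: one hidden rate per unique combination (array of shape 3x2x3x3).
dependent = rng.random(tuple(size))

# Independent: one hidden factor per variation of each feature, in [0.95, 0.97).
independent = [rng.random(n) / 50 + 0.95 for n in size]

def rate_dependent(page):
    return float(dependent[tuple(page)])

def rate_independent(page, base_rate=0.05):
    # Assumption: per-feature factors multiply and scale a hypothetical base rate.
    factors = [independent[i][v] for i, v in enumerate(page)]
    return base_rate * float(np.prod(factors))

def simulate_visit(page, rate_fn):
    """Return True if the simulated visit converts."""
    return bool(rng.random() < rate_fn(page))

page = (0, 1, 2, 0)
print(rate_dependent(page), rate_independent(page), simulate_visit(page, rate_independent))
```

The dependent environment has 54 separate rates to learn, while the independent one has only 11 values, which is one way to see why the second is so much easier on traffic.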

Building the right “hidden” environment is key to fine-tuning the system and requires some extra steps.

Some code

I am sharing the playground script that I used to find hyperparameters and test the NN part in general.

Thanks for taking a look, feel free to ask questions, contact me at savsunenko.sasha@gmail.com

Reading list

- Simple Reinforcement Learning with Tensorflow Part 0: Q-Learning with Tables and Neural Networks

- Solving the Multi-Armed Bandit Problem

- Multi-Armed Bandits and Reinforcement Learning 1 - DataHubbs

Neural networks for landing page optimization was originally published in Hacker Noon on Medium, where people are continuing the conversation by highlighting and responding to this story.
